Behavior segmentation method based on a string representation of human motion capture data

A technology relating to human motion capture data and its character-string representation, applied in the field of human motion capture data processing. It addresses problems such as the inability to determine which parts of the sequence to be segmented belong to the same behavior, and insufficient segmentation accuracy. It achieves superior effectiveness and good accuracy, meeting practical needs.


Embodiment Construction

[0052] In order to illustrate the present invention more clearly, the present invention will be further described below in conjunction with preferred embodiments and accompanying drawings. Similar parts in the figures are denoted by the same reference numerals. Those skilled in the art should understand that the content specifically described below is illustrative rather than restrictive, and should not limit the protection scope of the present invention.

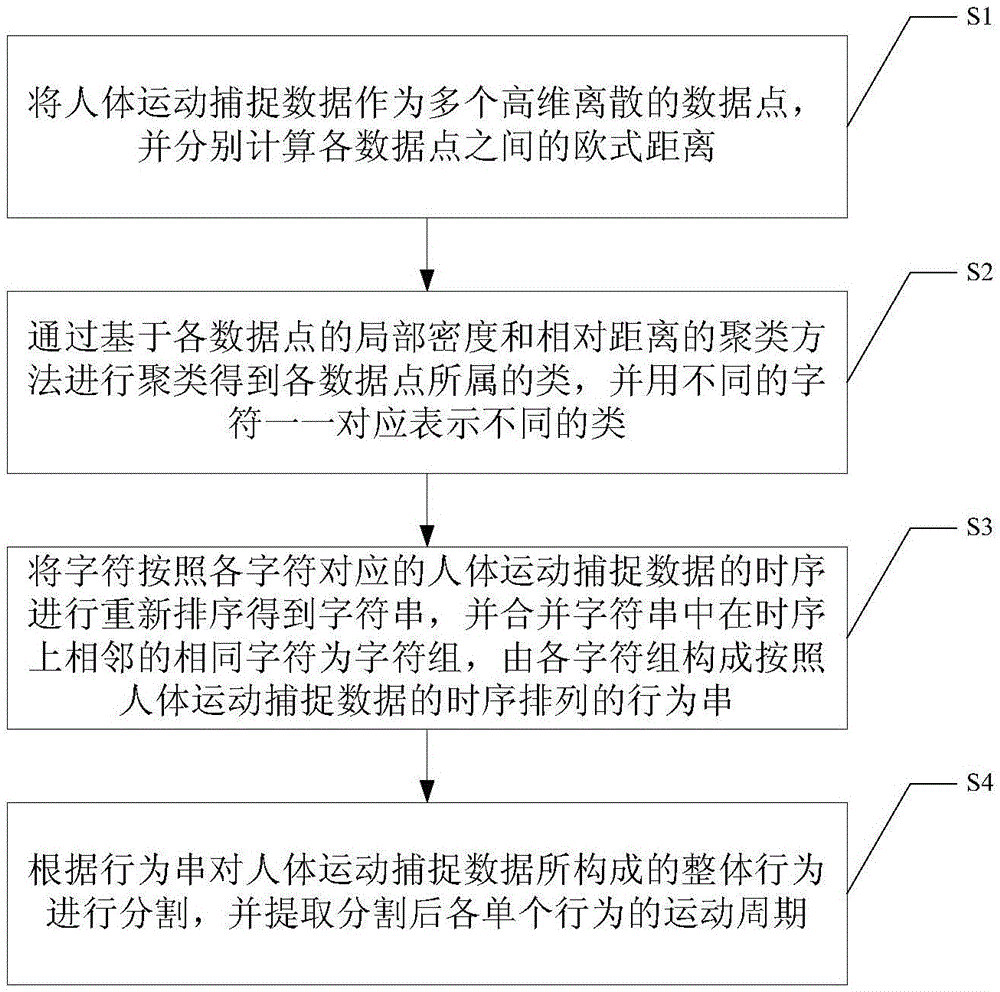

[0053] As shown in Figure 1, the behavior segmentation method based on the string representation of human motion capture data provided in this embodiment includes the following steps:

[0054] S1. Treat the human motion capture data as a set of high-dimensional discrete data points, and calculate the Euclidean distance between every pair of data points;
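Step S1 can be sketched as building a symmetric pairwise distance matrix. This is a minimal sketch, assuming each frame of motion capture data has already been flattened into a single vector (for example, concatenated joint coordinates or rotation angles); the excerpt does not specify the frame representation, and the function name `pairwise_euclidean` is hypothetical.

```python
import math
from typing import List, Sequence


def pairwise_euclidean(frames: Sequence[Sequence[float]]) -> List[List[float]]:
    """Return the symmetric n x n matrix of Euclidean distances between frames.

    Assumption: every frame is a flat vector of the same dimension.
    """
    n = len(frames)
    dist = [[0.0] * n for _ in range(n)]
    for i in range(n):
        # Only the upper triangle is computed; the matrix is mirrored.
        for j in range(i + 1, n):
            d = math.dist(frames[i], frames[j])  # Euclidean distance (Python 3.8+)
            dist[i][j] = dist[j][i] = d
    return dist
```

For example, `pairwise_euclidean([[0.0, 0.0], [3.0, 4.0]])` gives a distance of 5.0 between the two points. The loop is quadratic in the number of frames, which is inherent to computing all pairwise distances; for long sequences a vectorized library routine would be the practical choice.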

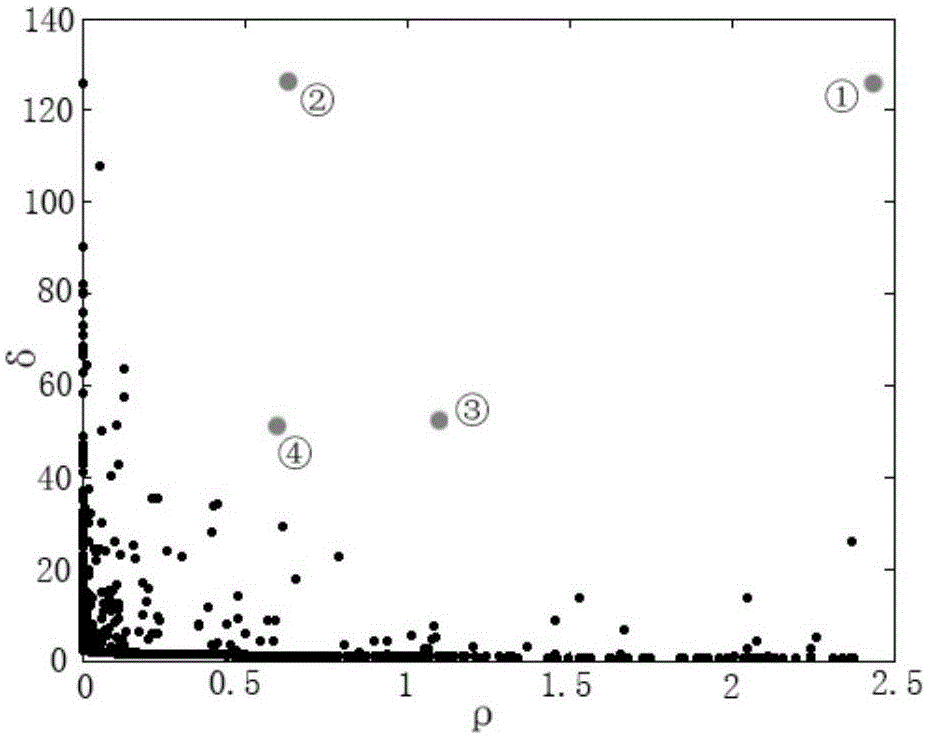

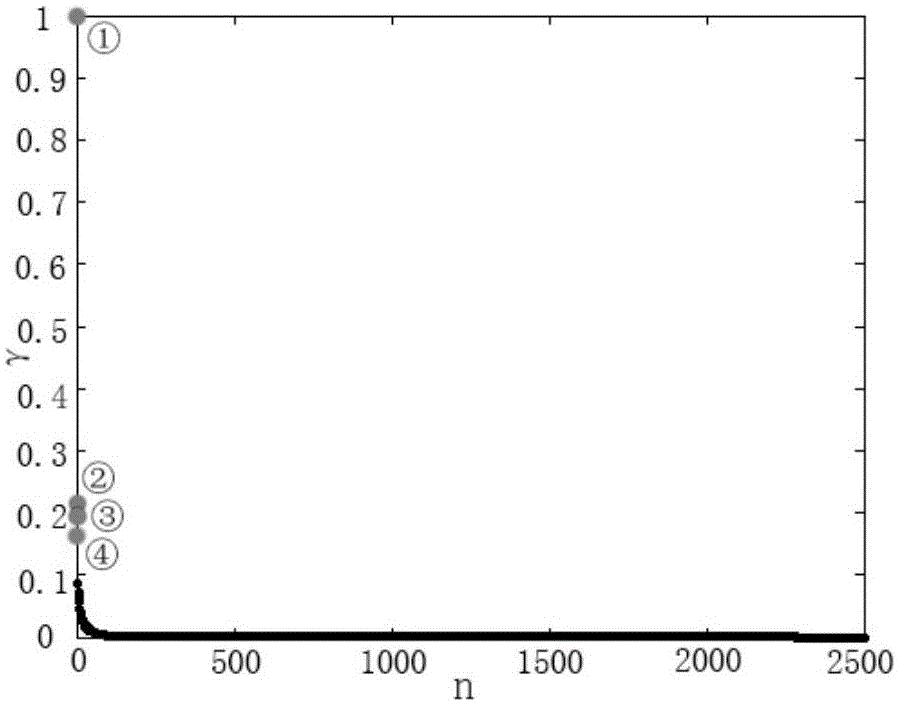

[0055] S2. Perform clustering based on the local density and relative distance of each data point to obtain the class to which each data point belongs, and use differen...
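Clustering by "local density" and "relative distance" matches the general density-peaks clustering scheme, so the sketch below assumes S2 follows that scheme. The excerpt does not give the density kernel, the cutoff distance `d_c`, or the rule for choosing cluster centres. Here, as assumptions, local density is the number of neighbours closer than `d_c`, relative distance is the distance to the nearest point of higher density, and the `n_clusters` points with the largest product of the two become centres. The function names are hypothetical. `labels_to_string` is only an illustration suggested by the title's "character string representation"; the actual encoding used in S2 is truncated in the source.

```python
from typing import List, Sequence


def density_peaks(dist: Sequence[Sequence[float]], d_c: float, n_clusters: int) -> List[int]:
    """Density-peaks style clustering on a precomputed distance matrix (sketch)."""
    n = len(dist)
    # Local density (cut-off kernel): neighbours strictly closer than d_c.
    rho = [sum(1 for j in range(n) if j != i and dist[i][j] < d_c) for i in range(n)]
    # Points in decreasing density; ties broken by index so the order is total.
    order = sorted(range(n), key=lambda i: (-rho[i], i))
    delta = [0.0] * n
    nearest_higher = [-1] * n
    for rank, i in enumerate(order):
        if rank == 0:
            # Densest point: by convention its relative distance is its max distance.
            delta[i] = max(dist[i])
            continue
        # Relative distance: distance to the closest point ranked above i.
        j_best = min(order[:rank], key=lambda j: dist[i][j])
        delta[i] = dist[i][j_best]
        nearest_higher[i] = j_best
    gamma = [rho[i] * delta[i] for i in range(n)]
    # The densest point has no higher-density neighbour, so it is always a centre;
    # the remaining centres are the points with the largest rho * delta.
    rest = sorted((i for i in range(n) if i != order[0]), key=lambda i: gamma[i], reverse=True)
    centres = [order[0]] + rest[: n_clusters - 1]
    labels = [-1] * n
    for k, c in enumerate(centres):
        labels[c] = k
    # Non-centres inherit the label of their nearest higher-density point;
    # visiting in density order guarantees that point is already labelled.
    for i in order:
        if labels[i] == -1:
            labels[i] = labels[nearest_higher[i]]
    return labels


def labels_to_string(labels: Sequence[int]) -> str:
    """Hypothetical encoding: class k -> k-th lowercase letter (up to 26 classes)."""
    return "".join(chr(ord("a") + k) for k in labels)
```

As a usage example, six one-dimensional frames at 0.0, 0.1, 0.2, 5.0, 5.1 and 5.2 with `d_c=0.5` and `n_clusters=2` fall into two classes, which the hypothetical encoding renders as `"aaabbb"`. Runs of identical characters in such a string are what a string-based segmentation step could then operate on.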
