A virtual CPU scheduling optimization method for NUMA architecture

An optimization method applied in the field of virtualization. It addresses problems of existing approaches, such as breaking the transparency of the virtualization layer, failing to meet requirements, and not optimizing both shared-resource contention and remote memory access overhead. The intended effects are low overall overhead and improved system performance.

Embodiment Construction

[0018] In order to make the objectives, technical solutions and advantages of the present invention clearer, the present invention will be further described in detail below with reference to the accompanying drawings and examples.

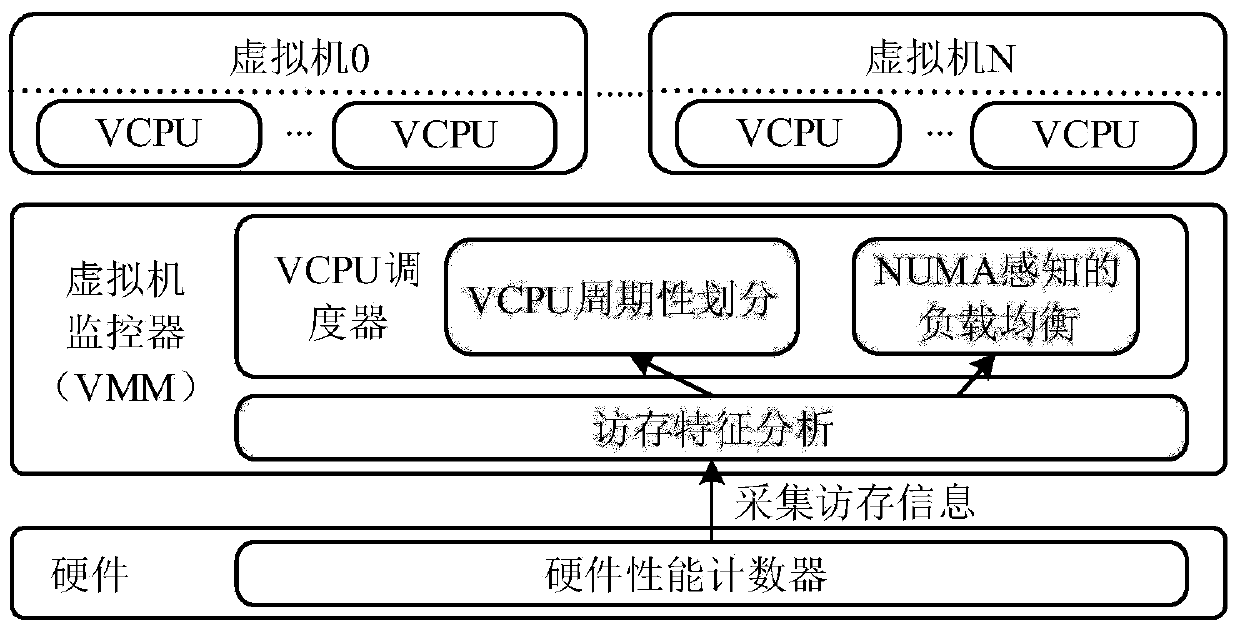

[0019] As shown in Figure 1, under the NUMA architecture each node has its own memory block, memory access controller, and shared cache, and data is transferred between nodes over an interconnect bus. In a virtualized environment, the virtual machine monitor (VMM), which sits between the underlying hardware and the guest operating systems above it, is the core of the virtualization technology. The VMM allocates and manages the underlying hardware resources and can support multiple independent virtual machines running on the same physical machine. Each virtual machine has its own VCPUs, which run the applications in the virtual machine. In particular, the VCPU scheduler in the VMM is responsible for...
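The topology described in paragraph [0019] can be illustrated with a minimal Python sketch. It is not the patented method: it models only nodes with local memory and a shared cache, VCPUs placed on physical CPUs, and the distinction between local and remote memory access. All names (`NumaNode`, `Vcpu`, `node_of_pcpu`, `is_remote_access`) and the sizes used are hypothetical and chosen for illustration.

```python
from dataclasses import dataclass, field
from typing import List


@dataclass
class NumaNode:
    """One NUMA node with its own memory block and shared cache (illustrative model)."""
    node_id: int
    memory_mb: int            # size of the node-local memory block (assumed value)
    shared_cache_kb: int      # size of the node's shared cache (assumed value)
    pcpus: List[int] = field(default_factory=list)  # physical CPU ids on this node


@dataclass
class Vcpu:
    """A virtual CPU belonging to a virtual machine, currently placed on one physical CPU."""
    vm_name: str
    vcpu_id: int
    pcpu: int


def node_of_pcpu(nodes: List[NumaNode], pcpu: int) -> int:
    """Return the id of the node that owns the given physical CPU."""
    for node in nodes:
        if pcpu in node.pcpus:
            return node.node_id
    raise ValueError(f"physical CPU {pcpu} is not part of any node")


def is_remote_access(nodes: List[NumaNode], vcpu: Vcpu, memory_node_id: int) -> bool:
    """True if the VCPU runs on a different node than the memory it touches,
    so the access has to cross the interconnect bus."""
    return node_of_pcpu(nodes, vcpu.pcpu) != memory_node_id


# Hypothetical two-node machine with four physical CPUs per node.
nodes = [
    NumaNode(node_id=0, memory_mb=16384, shared_cache_kb=8192, pcpus=[0, 1, 2, 3]),
    NumaNode(node_id=1, memory_mb=16384, shared_cache_kb=8192, pcpus=[4, 5, 6, 7]),
]
vcpu = Vcpu(vm_name="vm1", vcpu_id=0, pcpu=2)   # runs on node 0

local = is_remote_access(nodes, vcpu, memory_node_id=0)   # memory on the same node
remote = is_remote_access(nodes, vcpu, memory_node_id=1)  # memory on the other node
```

In this model, `local` is `False` and `remote` is `True`: the same VCPU pays the interconnect cost only when its data resides on a node other than the one it is running on.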

