GPU memory buffer pre-fetch and pre-back signaling to avoid page-fault

This buffer and memory technology applies to fields such as instruments, data conversion, and image memory management. It addresses problems such as inefficient GPU processing and the lack of techniques for stopping and resuming highly parallel operations.

- Summary

- Abstract

- Description

- Claims

- Application Information

AI Technical Summary

Problems solved by technology

Method used

Image

Examples

Embodiment Construction

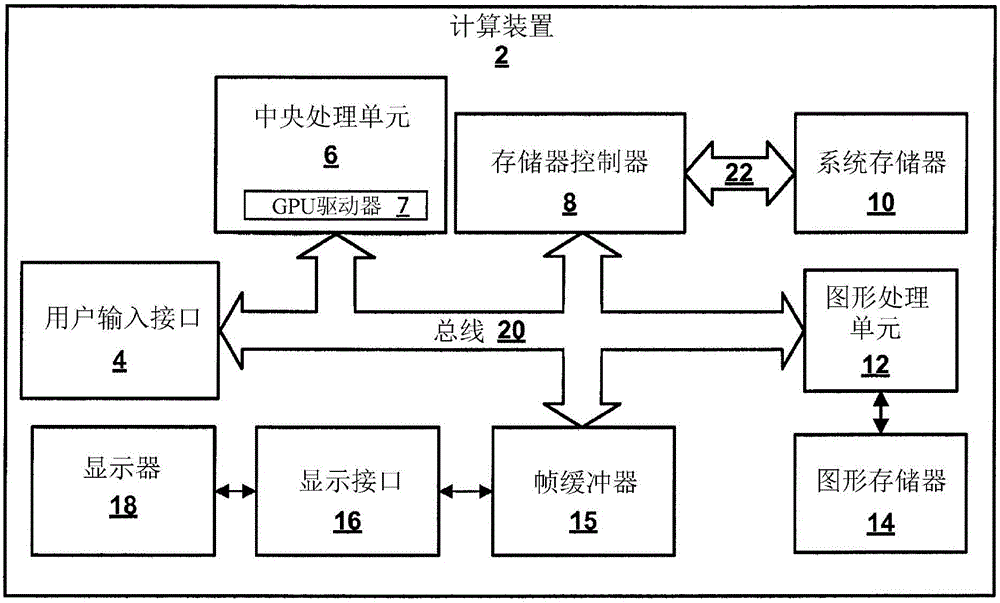

[0017] The present invention relates to techniques for graphics processing, and more particularly to techniques for prefetch and prebackup signaling from a graphics processing unit for avoiding page faults in a virtual memory system.

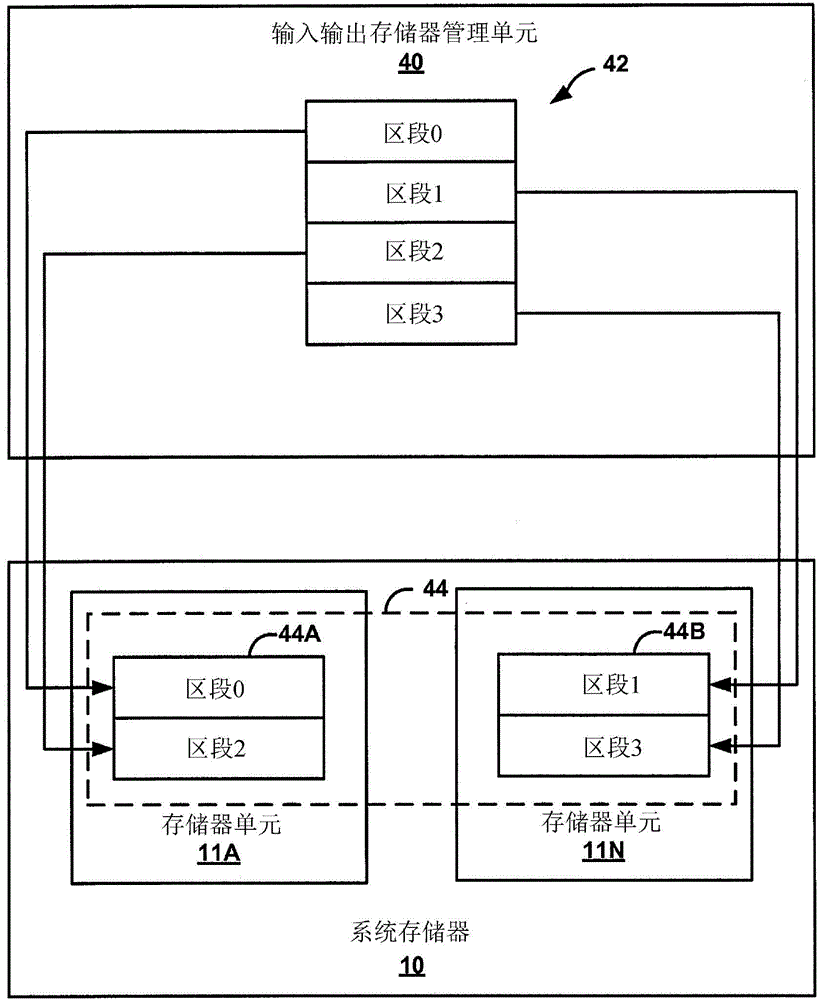

[0018] A modern operating system (OS) running on a central processing unit (CPU) typically uses a virtual memory scheme to allocate memory to the multiple programs running on the CPU. Virtual memory is a memory management technique that virtualizes a computer system's physical memory (e.g., RAM, disk storage) so that an application needs to refer to only one set of memory (i.e., the virtual memory). Virtual memory consists of contiguous address spaces that map to locations in physical memory. In this way, segments of physical memory are "hidden" from application programs, which can instead interact with contiguous blocks of virtual memory. Contiguous blocks in virtual memory are usually arranged into "pages." Each page is some fixed-length ...
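A toy model can make the paging scheme described above more concrete. The Python sketch below assumes a 4 KiB page size and a single-level page table stored in a dictionary. `PageTable`, `back_page`, `translate`, and `prefetch_range` are hypothetical names introduced only for illustration. `prefetch_range` shows the general idea of backing pages with physical memory before they are accessed, so that a later access does not fault. It is not the signaling mechanism claimed in this patent.

```python
from dataclasses import dataclass, field

PAGE_SIZE = 4096  # assumed fixed page length in bytes (illustrative, not from the source)


class PageFault(Exception):
    """Raised when a virtual page has no backing physical frame."""


@dataclass
class PageTable:
    # Hypothetical single-level table: virtual page number -> physical frame number.
    # Only pages that are currently backed by physical memory have an entry.
    entries: dict[int, int] = field(default_factory=dict)
    next_free_frame: int = 0

    def back_page(self, vpn: int) -> None:
        """Allocate a physical frame for a virtual page if it is not already backed."""
        if vpn not in self.entries:
            self.entries[vpn] = self.next_free_frame
            self.next_free_frame += 1

    def translate(self, vaddr: int) -> int:
        """Split a virtual address into (page number, offset) and map it to a physical address."""
        vpn, offset = divmod(vaddr, PAGE_SIZE)
        if vpn not in self.entries:
            # Accessing an unbacked page is a page fault.
            raise PageFault(f"page fault at virtual address {vaddr:#x}")
        return self.entries[vpn] * PAGE_SIZE + offset

    def prefetch_range(self, start: int, length: int) -> None:
        """Hypothetical pre-backing step: back every page touched by [start, start + length)."""
        if length <= 0:
            return
        first = start // PAGE_SIZE
        last = (start + length - 1) // PAGE_SIZE
        for vpn in range(first, last + 1):
            self.back_page(vpn)


if __name__ == "__main__":
    pt = PageTable()
    pt.prefetch_range(0x1000, 3 * PAGE_SIZE)  # backs virtual pages 1, 2, and 3 ahead of use
    print(pt.translate(0x1005))               # page 1 -> frame 0, offset 5
    try:
        pt.translate(0x4000)                  # page 4 was never backed
    except PageFault as err:
        print(err)
```

In this model, the work of finding and backing a page happens either ahead of time (in `prefetch_range`) or at the moment of access (a fault). Moving that work ahead of the access is the motivation behind pre-fetch and pre-back signaling.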
