Self-adaptive indoor vision positioning method based on global motion estimation

A visual positioning technique based on global motion estimation, applied in the field of image processing. It addresses difficult positioning, heavy computation, and slow parameter estimation, and aims to improve effectiveness and positioning accuracy while shortening execution time.

Embodiment Construction

[0035] The present invention will be further described below in conjunction with the accompanying drawings and embodiments.

[0036] The wired camera used in the experiment produces 640×480-pixel images at a resolution of 96 dpi and a video frame rate of 30 fps.

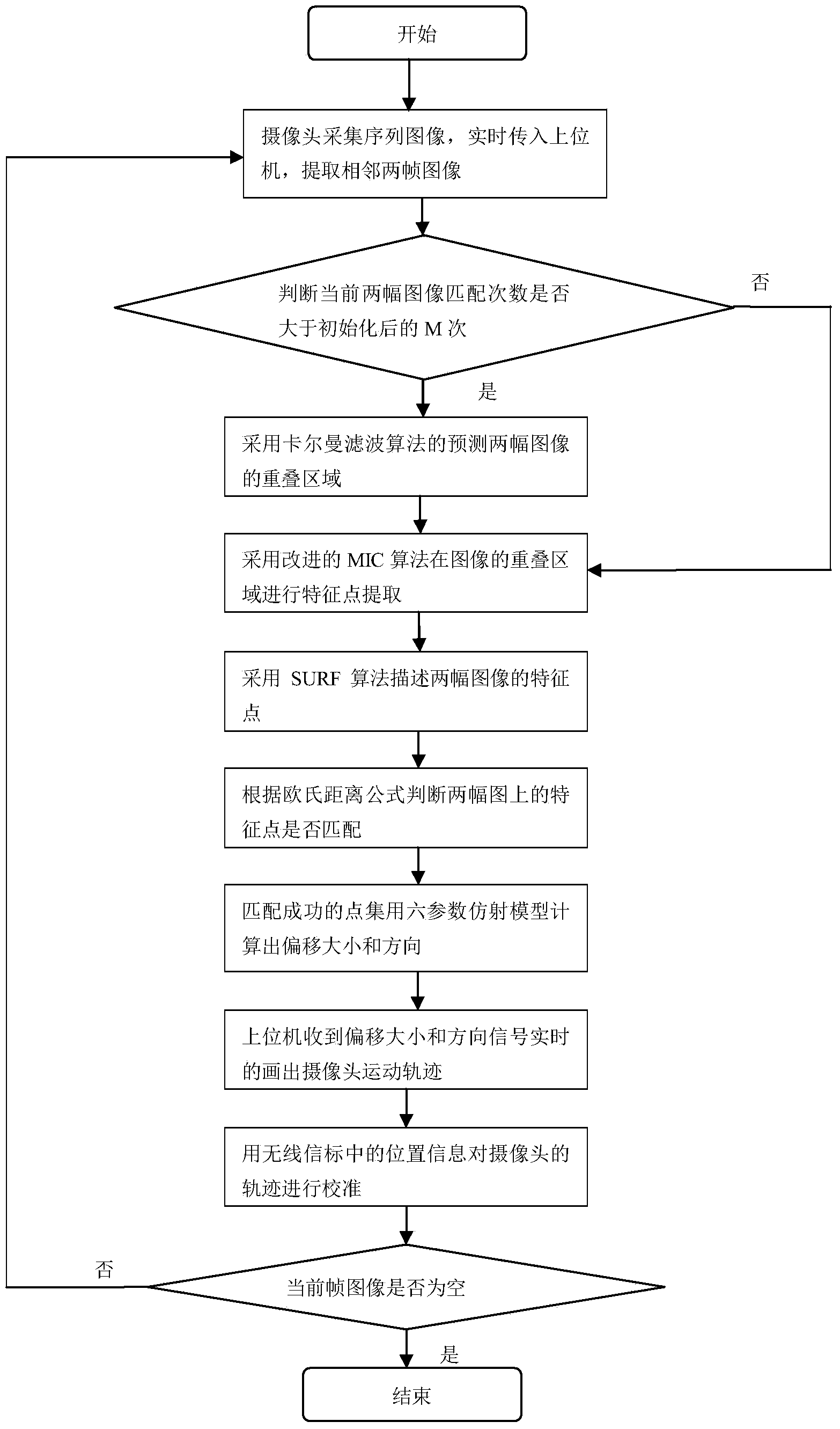

[0037] The flowchart of the method of the present invention is shown in Figure 1. The specific implementation process is as follows:

[0038] (1) The camera captures ground image information, and two images are extracted from the sequence starting at the zeroth frame: the zeroth frame is the reference frame, and the other image is the current frame. Each image is preprocessed with an adaptive smoothing filter, and the color image is then converted into a grayscale image.
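The preprocessing in step (1) can be sketched roughly as follows. This is a minimal illustration, not the patented implementation: the text does not describe the adaptive smoothing filter, so a plain 3×3 mean filter stands in for it, and the grayscale conversion assumes the common BT.601 luma weights (0.299, 0.587, 0.114). The function names `mean_filter_3x3` and `preprocess` are hypothetical.

```python
from typing import List, Tuple

Pixel = Tuple[int, int, int]  # (R, G, B), each channel in 0..255


def mean_filter_3x3(channel: List[List[float]]) -> List[List[float]]:
    """Smooth one channel with a 3x3 mean filter.

    Stand-in for the adaptive smoothing filter (assumption: the source
    does not specify it). Border pixels average over the neighbours
    that actually exist.
    """
    h, w = len(channel), len(channel[0])
    out = [[0.0] * w for _ in range(h)]
    for y in range(h):
        for x in range(w):
            total, count = 0.0, 0
            for dy in (-1, 0, 1):
                for dx in (-1, 0, 1):
                    yy, xx = y + dy, x + dx
                    if 0 <= yy < h and 0 <= xx < w:
                        total += channel[yy][xx]
                        count += 1
            out[y][x] = total / count
    return out


def preprocess(rgb: List[List[Pixel]]) -> List[List[float]]:
    """Smooth each colour channel, then convert to grayscale (source order)."""
    h, w = len(rgb), len(rgb[0])
    # Split the image into R, G, B planes and smooth each one.
    r, g, b = (
        mean_filter_3x3([[px[c] for px in row] for row in rgb])
        for c in range(3)
    )
    # Assumed BT.601 luma weights for the colour-to-gray conversion.
    return [
        [0.299 * r[y][x] + 0.587 * g[y][x] + 0.114 * b[y][x] for x in range(w)]
        for y in range(h)
    ]
```

Both the reference frame and the current frame would pass through `preprocess` before matching. In practice, an image library would replace these loops. They are written out here only to keep the sketch self-contained.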

[0039] (2) The Kalman filtering algorithm requires M successful matches before it can accurately predict the overlapping area. If the two images have been successfully matched fewer than M times, perform step (3) on the original image; if it is more than M time...
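The warm-up rule at the start of step (2) can be sketched as a simple counter check. The function and parameter names are hypothetical. The text is truncated before it states what happens once M matches have been reached, so the sketch only reports whether step (3) should still run on the original image. Treating exactly M matches as "warm-up complete" is an assumption, since the text covers only the "fewer than M" and "more than M" cases.

```python
def needs_full_image(successful_matches: int, m_required: int) -> bool:
    """Return True while step (3) should run on the original image.

    successful_matches: successful image matches so far.
    m_required: M, the number of matches the Kalman filter needs before
                its overlap prediction is considered accurate.
    """
    if successful_matches < 0 or m_required < 0:
        raise ValueError("counts must be non-negative")
    # Fewer than M matches: the prediction is not yet trusted.
    # The == M case is an assumption (not specified in the text).
    return successful_matches < m_required
```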
