Object illumination migration method based on gradient operations

A lighting-migration technique based on gradient operations, applied in virtual reality and computer vision. It addresses the geometric differences between the target face and the reference face and produces realistic results.


Detailed Description of the Embodiments

[0022] The present invention is described in detail below with reference to the accompanying drawings.

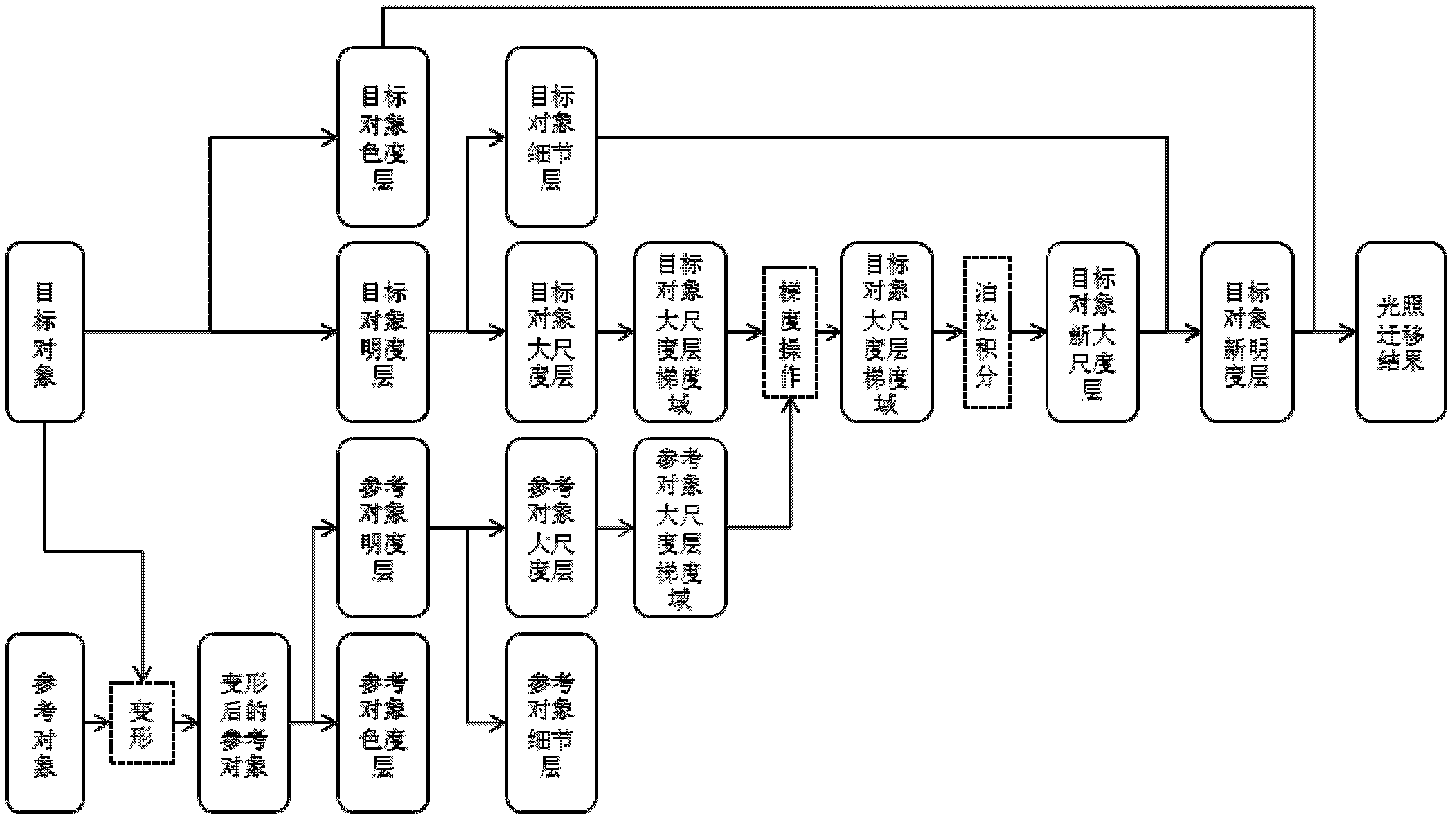

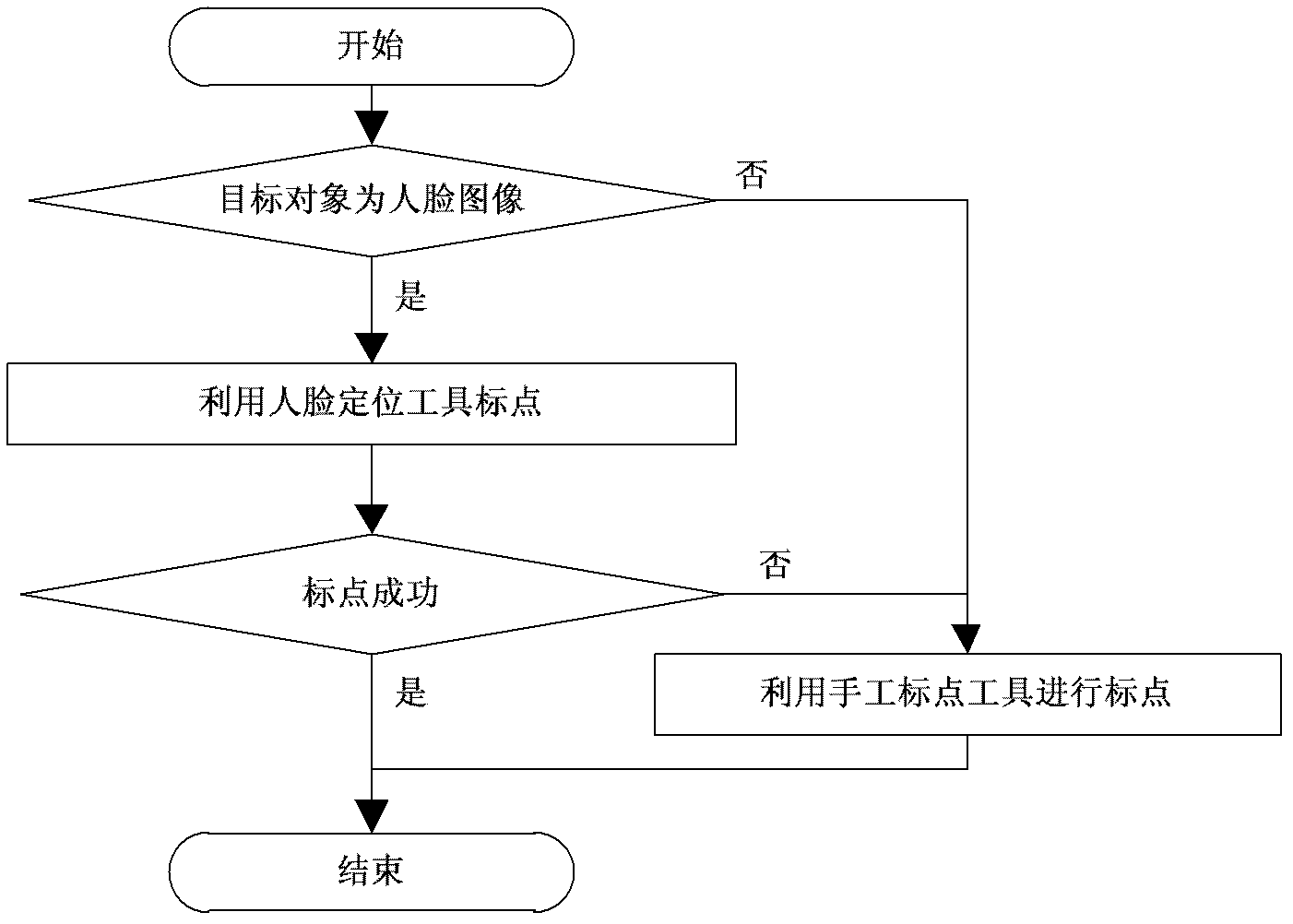

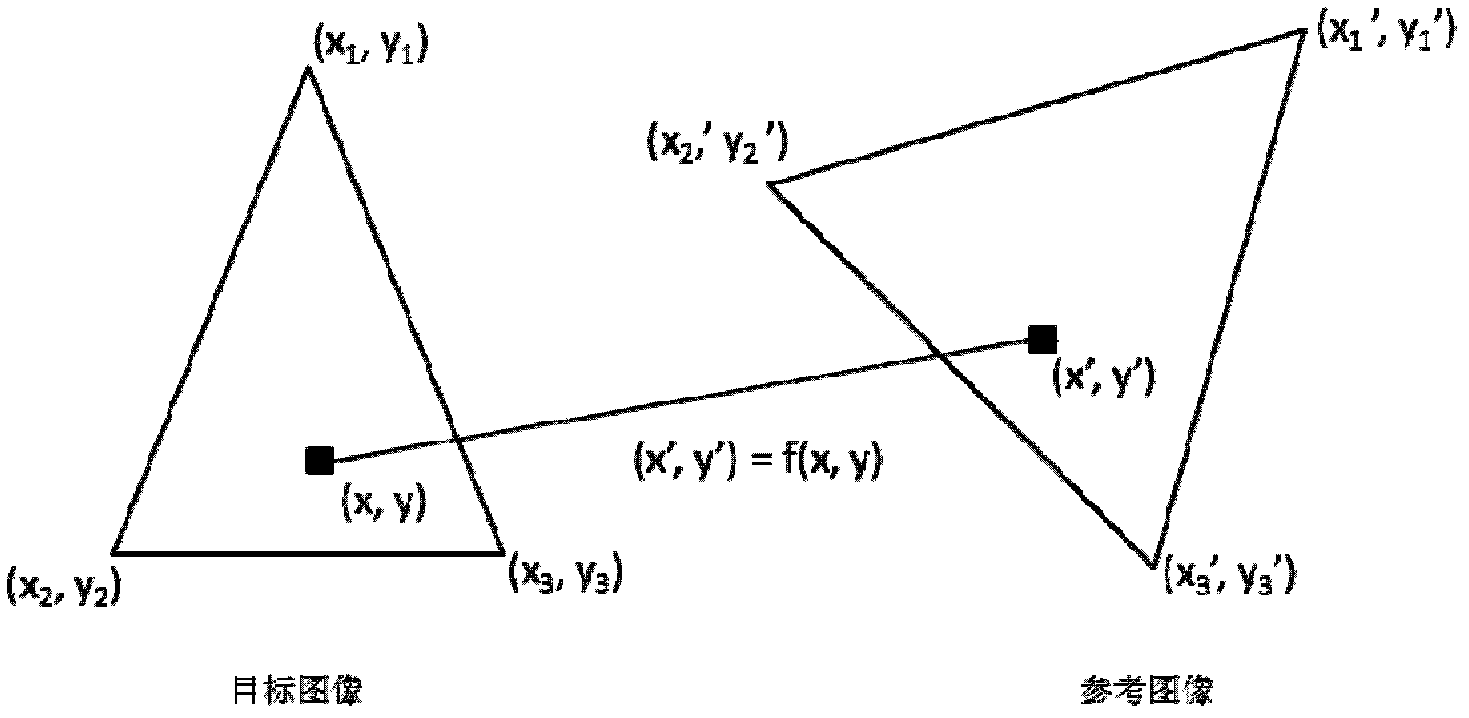

[0023] Figure 1 is the main flow chart of the present invention. The object illumination migration method based on gradient operations comprises the following basic processes. First, an active contour model face-localization tool and an image deformation method are used to align the reference object (that is, the reference-object region of the input image) to the target object (that is, the target-object region of the input image). Both the reference object and the target object are then decomposed into a luma layer and a chroma layer, and a least-squares filter is used to split the luma layer into a large-scale layer and a detail layer; all subsequent operations are carried out on the large-scale layer. The present invention first converts the large-scale layer of the reference object and the target object ...
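The layer decomposition described above can be sketched as follows. This is a minimal sketch under stated assumptions, not the patented implementation. The source does not name a colour space, so a BT.601-style luma/chroma split is assumed. The "least-squares filter" is taken to be a weighted-least-squares (WLS) edge-preserving smoother in the style of Farbman et al., solved here with a hand-written conjugate-gradient loop. The detail layer is assumed to be additive (luma minus large-scale layer). All function names and parameter defaults (`lam`, `alpha`, `eps`) are hypothetical. Face alignment and the gradient-domain processing of the large-scale layer are not sketched, because the text is truncated before describing them.

```python
import numpy as np


def rgb_to_luma_chroma(rgb):
    """Split a float RGB image in [0, 1] into luma Y and 2-channel chroma (Cb, Cr).
    Assumption: BT.601-style weights; the source does not name a colour space."""
    r, g, b = rgb[..., 0], rgb[..., 1], rgb[..., 2]
    y = 0.299 * r + 0.587 * g + 0.114 * b
    chroma = np.stack([0.564 * (b - y), 0.713 * (r - y)], axis=-1)
    return y, chroma


def luma_chroma_to_rgb(y, chroma):
    """Exact inverse of rgb_to_luma_chroma (same constants)."""
    r = y + chroma[..., 1] / 0.713
    b = y + chroma[..., 0] / 0.564
    g = (y - 0.299 * r - 0.114 * b) / 0.587
    return np.stack([r, g, b], axis=-1)


def wls_filter(lum, lam=1.0, alpha=1.2, eps=1e-4, max_iter=1000, tol=1e-8):
    """Hypothetical WLS smoother: minimise sum (u - lum)^2 + a_x (d_x u)^2 + a_y (d_y u)^2,
    i.e. solve (I + D^T A D) u = lum, matrix-free with conjugate gradient."""
    log_l = np.log(np.clip(lum, 0.0, None) + eps)
    # Smoothness weights shrink across strong log-luminance edges, so edges are preserved.
    ax = lam / (np.abs(np.diff(log_l, axis=1)) ** alpha + eps)  # shape (h, w-1)
    ay = lam / (np.abs(np.diff(log_l, axis=0)) ** alpha + eps)  # shape (h-1, w)

    def apply_system(u):
        # (I + D^T A D) u: weighted forward differences, then their transposes.
        out = u.copy()
        fx = ax * np.diff(u, axis=1)
        fy = ay * np.diff(u, axis=0)
        out[:, :-1] -= fx
        out[:, 1:] += fx
        out[:-1, :] -= fy
        out[1:, :] += fy
        return out

    # Conjugate gradient; the system matrix is symmetric positive definite.
    u = lum.copy()
    r = lum - apply_system(u)
    p = r.copy()
    rs = np.sum(r * r)
    b_norm = np.linalg.norm(lum) + 1e-30
    for _ in range(max_iter):
        if np.sqrt(rs) <= tol * b_norm:
            break
        ap = apply_system(p)
        step = rs / np.sum(p * ap)
        u += step * p
        r -= step * ap
        rs_new = np.sum(r * r)
        p = r + (rs_new / rs) * p
        rs = rs_new
    return u


def decompose(rgb, lam=1.0, alpha=1.2):
    """Luma/chroma split, then luma -> large-scale layer + detail layer."""
    y, chroma = rgb_to_luma_chroma(rgb)
    large = wls_filter(y, lam=lam, alpha=alpha)
    detail = y - large  # assumption: additive detail layer
    return large, detail, chroma


def recompose(large, detail, chroma):
    """Rebuild RGB after the large-scale layer has been edited."""
    return luma_chroma_to_rgb(large + detail, chroma)
```

In use, `large, detail, chroma = decompose(img)` isolates the large-scale layer. A relighting step would modify `large` only, and `recompose` restores the untouched detail and chroma. The conjugate-gradient loop avoids building a sparse matrix explicitly; a sparse direct solver would serve equally well on small images.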

