[0025]In general, in another aspect, the invention features a

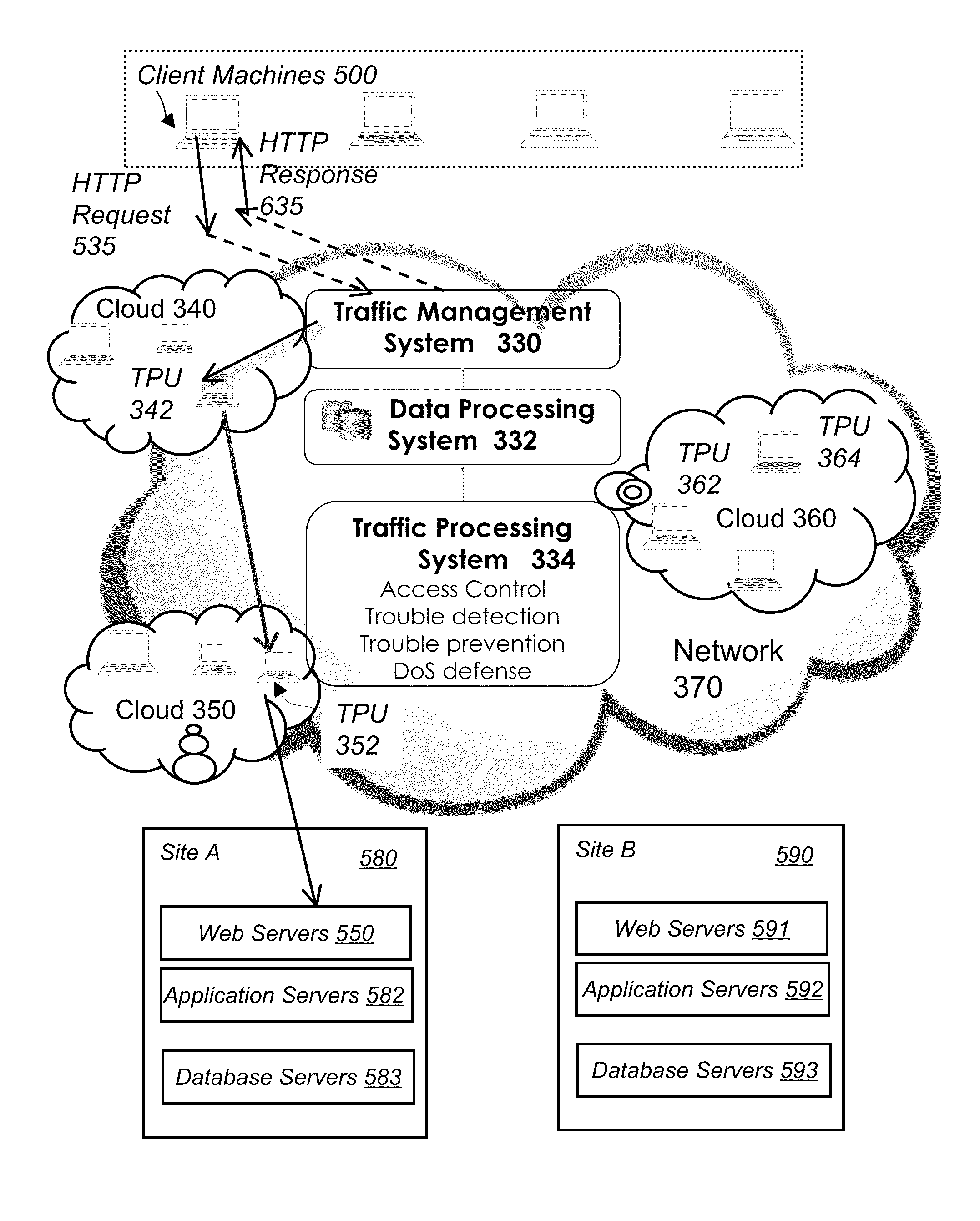

system for providing load balancing among a set of computing nodes running a network accessible computer service. The

system includes a first network providing network connections between a set of computing nodes and a plurality of clients, a computer service that is hosted at one or more servers comprised in the set of computing nodes and is accessible to clients via the first network and a second network comprising a plurality of traffic processing nodes and load balancing means. The load balancing means is configured to provide load balancing among the set of computing nodes running the computer service. The

system also includes means for redirecting network traffic comprising client requests to access the computer service from the first network to the second network, means for selecting a traffic processing node of the second network for receiving the redirected network traffic, means for determining for every client request for access to the computer service an optimal computing node among the set of computing nodes running the computer service by the traffic processing node via the load balancing means, and means for routing the client request to the optimal computing node by the traffic processing node via the second network. The system also includes real-time monitoring means that provide real-time status data for selecting optimal traffic processing nodes and optimal computing nodes during

traffic routing, thereby minimizing service disruption caused by the failure of individual nodes.
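The paragraph above leaves the criterion for an "optimal" computing node open. As an illustration only, the following Python sketch assumes that the real-time monitoring means report, for each computing node, a health flag, a count of active requests and a recent latency, and that the optimal node is the healthy node with the fewest active requests, with ties broken by lower latency. The names `NodeStatus` and `select_optimal_node`, and the node names in the example, are hypothetical and do not come from the specification.

```python
from dataclasses import dataclass


@dataclass
class NodeStatus:
    """Hypothetical real-time status record for one computing node."""
    name: str
    healthy: bool          # result of the latest health check
    active_requests: int   # current load reported by monitoring
    latency_ms: float      # recent response time observed by monitoring


def select_optimal_node(statuses: list[NodeStatus]) -> NodeStatus:
    """Return the healthy node with the fewest active requests.

    Ties are broken by lower observed latency. Failed nodes are never
    selected; RuntimeError is raised if no node is healthy.
    """
    candidates = [s for s in statuses if s.healthy]
    if not candidates:
        raise RuntimeError("no healthy computing node available")
    return min(candidates, key=lambda s: (s.active_requests, s.latency_ms))


statuses = [
    NodeStatus("dc-east-1", healthy=True, active_requests=12, latency_ms=40.0),
    NodeStatus("dc-west-1", healthy=False, active_requests=0, latency_ms=0.0),
    NodeStatus("cloud-vm-7", healthy=True, active_requests=12, latency_ms=25.0),
]
print(select_optimal_node(statuses).name)  # cloud-vm-7
```

Note that `dc-west-1` is skipped despite reporting no load, because monitoring marks it as failed; this is how real-time status data can keep a single node failure from disrupting the service.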

[0026]Among the advantages of the invention may be one or more of the following. The present invention deploys software onto commodity hardware (instead of special hardware devices) and provides a service that performs global traffic management. Because it is provided as a web-delivered service, it is much easier to adopt and to maintain. There is no special hardware or software to purchase, and there is nothing to install and maintain. Compared to load balancing approaches in the prior art, the system of the present invention is in general much more cost effective and flexible. Unlike load balancing techniques for content delivery networks, the present invention is designed to provide traffic management for dynamic web applications whose content cannot be cached. The server nodes may be within one data center, in multiple data centers, or distributed over distant geographic locations. Furthermore, some of these server nodes may be “Virtual Machines” running in a cloud computing environment.

[0027]The present invention is a scalable, fault-tolerant traffic management system that performs load balancing and failover. The failure of individual nodes within the traffic management system does not cause the failure of the system. The present invention is designed to run on commodity hardware and is provided as a service delivered over the Internet. The system is horizontally scalable: computing power can be increased simply by adding more traffic processing nodes to the system. The system is particularly suitable for traffic management and load balancing in computing environments where nodes are frequently stopped and started, such as a cloud computing environment.
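To illustrate the horizontal scaling and failover properties described above, the sketch below models a pool of traffic processing nodes under stated assumptions: requests are spread round-robin, a node that raises `ConnectionError` is treated as failed, and the request then fails over to the next node in the pool. `TrafficProcessingPool`, `add_node`, `handle`, and the node names are hypothetical; the specification does not prescribe this particular mechanism.

```python
from typing import Callable

# A handler stands in for a traffic processing node: it takes a request and
# returns a response, or raises ConnectionError if the node has failed.
Handler = Callable[[str], str]


class TrafficProcessingPool:
    """Hypothetical pool of interchangeable traffic processing nodes."""

    def __init__(self) -> None:
        self._nodes: list[tuple[str, Handler]] = []
        self._next = 0  # round-robin cursor

    def add_node(self, name: str, handler: Handler) -> None:
        # Horizontal scaling: capacity grows by appending another node.
        self._nodes.append((name, handler))

    def handle(self, request: str) -> str:
        if not self._nodes:
            raise RuntimeError("no traffic processing nodes configured")
        start = self._next % len(self._nodes)
        self._next += 1
        errors = []
        # Try nodes starting at the round-robin position; skip failed ones.
        for offset in range(len(self._nodes)):
            name, handler = self._nodes[(start + offset) % len(self._nodes)]
            try:
                return handler(request)
            except ConnectionError as exc:
                errors.append(f"{name}: {exc}")
        raise RuntimeError("all traffic processing nodes failed: " + "; ".join(errors))


def working(name: str) -> Handler:
    return lambda request: f"{name} handled {request}"


def crashed(request: str) -> str:
    raise ConnectionError("node down")


pool = TrafficProcessingPool()
pool.add_node("tpn-1", crashed)
pool.add_node("tpn-2", working("tpn-2"))
print(pool.handle("GET /a"))  # tpn-2 handled GET /a (failed over from tpn-1)
print(pool.handle("GET /b"))  # tpn-2 handled GET /b
pool.add_node("tpn-3", working("tpn-3"))
print(pool.handle("GET /c"))  # tpn-3 handled GET /c
```

Adding `tpn-3` requires no change to the existing nodes, and the crashed `tpn-1` never prevents a request from being served while at least one other node in the pool is healthy.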

[0028]Furthermore, the present invention also takes session stickiness into consideration, so that requests from the same client session can be routed persistently to the same computing node when session stickiness is required. Session stickiness, also known as “IP address persistence” or “server affinity” in the art, means that different requests from the same client session are always routed to the same server in a multi-server environment. Session stickiness is required for a variety of web applications to function correctly.
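The specification does not say how stickiness is tracked. One common approach, shown here as a hedged Python sketch, keeps a table from a session key to the computing node that first served it and reuses that node while monitoring reports it healthy. The choice of session key is an assumption: it could be a session cookie value or, for "IP address persistence", the client's IP address. `StickySessionRouter`, `route`, and `mark_down` are hypothetical names.

```python
class StickySessionRouter:
    """Hypothetical router that pins each client session to one computing node."""

    def __init__(self, nodes: list[str]) -> None:
        self._order = list(nodes)               # stable order for assignment
        self._healthy = set(nodes)              # updated by monitoring
        self._assignments: dict[str, str] = {}  # session key -> node
        self._rr = 0                            # round-robin cursor for new sessions

    def mark_down(self, node: str) -> None:
        # Called when monitoring reports a node failure.
        self._healthy.discard(node)

    def route(self, session_key: str) -> str:
        node = self._assignments.get(session_key)
        if node in self._healthy:
            return node  # sticky: reuse the node already serving this session
        # New session, or its node has failed: pick a healthy node round-robin.
        candidates = [n for n in self._order if n in self._healthy]
        if not candidates:
            raise RuntimeError("no healthy computing node available")
        node = candidates[self._rr % len(candidates)]
        self._rr += 1
        self._assignments[session_key] = node
        return node


router = StickySessionRouter(["node-a", "node-b"])
print(router.route("session-1"))  # node-a
print(router.route("session-2"))  # node-b
print(router.route("session-1"))  # node-a (sticky)
router.mark_down("node-a")
print(router.route("session-1"))  # node-b (re-pinned after failure)
```

Re-pinning a session after its node fails keeps the service available, but any session state held only on the failed node is lost; applications that cannot tolerate this would need shared or replicated session storage.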

[0030]The present invention may also be used to provide an on-demand service, delivered over the Internet, that helps web site operators improve the performance, scalability and availability of their web applications, as shown in FIG. 20.

Service provider H00 manages and operates a global infrastructure H40 providing web performance related services, including monitoring, load balancing, traffic management, scaling and failover, among others. The global infrastructure has a management and configuration user interface (UI) H30, as shown in FIG. 20, through which customers purchase, configure and manage services from the service provider. Customers include web operator H10, who owns and manages web application H50.

Web application H50 may be deployed in one data center or in a few data centers, in one location or in multiple locations, or may run as virtual machines in a distributed cloud computing environment. H40 provides services including monitoring, traffic management, load balancing and failover to web application H50, which results in better performance, scalability and availability for web users H20. In return for using the service, web operator H10 pays a fee to service provider H00.
