Cache memory and method for handling effects of external snoops colliding with in-flight operations internally to the cache

A cache memory and internal snoop technique, applied in the field of microprocessor cache memories. It addresses a cache coherence problem: handling a cancelled in-flight operation adds timing burden and complexity to the cache control logic. The technique improves processing cycle timing and reduces the complexity of the other caches.
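The summary contrasts cancelling an in-flight operation that collides with an external snoop against handling the collision inside the cache. The Python sketch below illustrates only the general idea under stated assumptions. It detects an address collision between an external snoop and an in-flight internal operation (for example, an L1 castout into L2). It then applies one hypothetical policy: defer the snoop's state change until the operation completes instead of cancelling it. The class names, the simplified MESI states, and the defer-until-complete policy are illustrative assumptions, not the patented method. The model also ignores data movement to the snooping agent.

```python
from dataclasses import dataclass, field
from enum import Enum
from typing import Optional


class State(Enum):
    """Simplified MESI line states (an assumed protocol for this sketch)."""
    MODIFIED = "M"
    EXCLUSIVE = "E"
    SHARED = "S"
    INVALID = "I"


@dataclass
class InFlightOp:
    """Hypothetical internal operation, e.g. an L1 castout into L2."""
    line: int
    final_state: State                     # state the line takes when the op completes
    pending_snoop: Optional[State] = None  # snoop effect deferred until completion


@dataclass
class L2Model:
    states: dict = field(default_factory=dict)     # line address -> State
    in_flight: dict = field(default_factory=dict)  # line address -> InFlightOp

    def start(self, op: InFlightOp) -> None:
        """Begin tracking an in-flight operation for its line."""
        self.in_flight[op.line] = op

    def external_snoop(self, line: int, new_state: State) -> bool:
        """Apply a snoop; return True if it collided with an in-flight op."""
        op = self.in_flight.get(line)
        if op is None:
            self.states[line] = new_state  # no collision: apply immediately
            return False
        op.pending_snoop = new_state       # collision: record the effect, do not cancel
        return True

    def complete(self, line: int) -> State:
        """Finish the in-flight op, then apply any deferred snoop effect."""
        op = self.in_flight.pop(line)
        if op.pending_snoop is not None:
            self.states[line] = op.pending_snoop
        else:
            self.states[line] = op.final_state
        return self.states[line]
```

In this toy model the requesting cache never sees a cancellation. The collision is absorbed by the `pending_snoop` field and resolved when `complete` runs.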


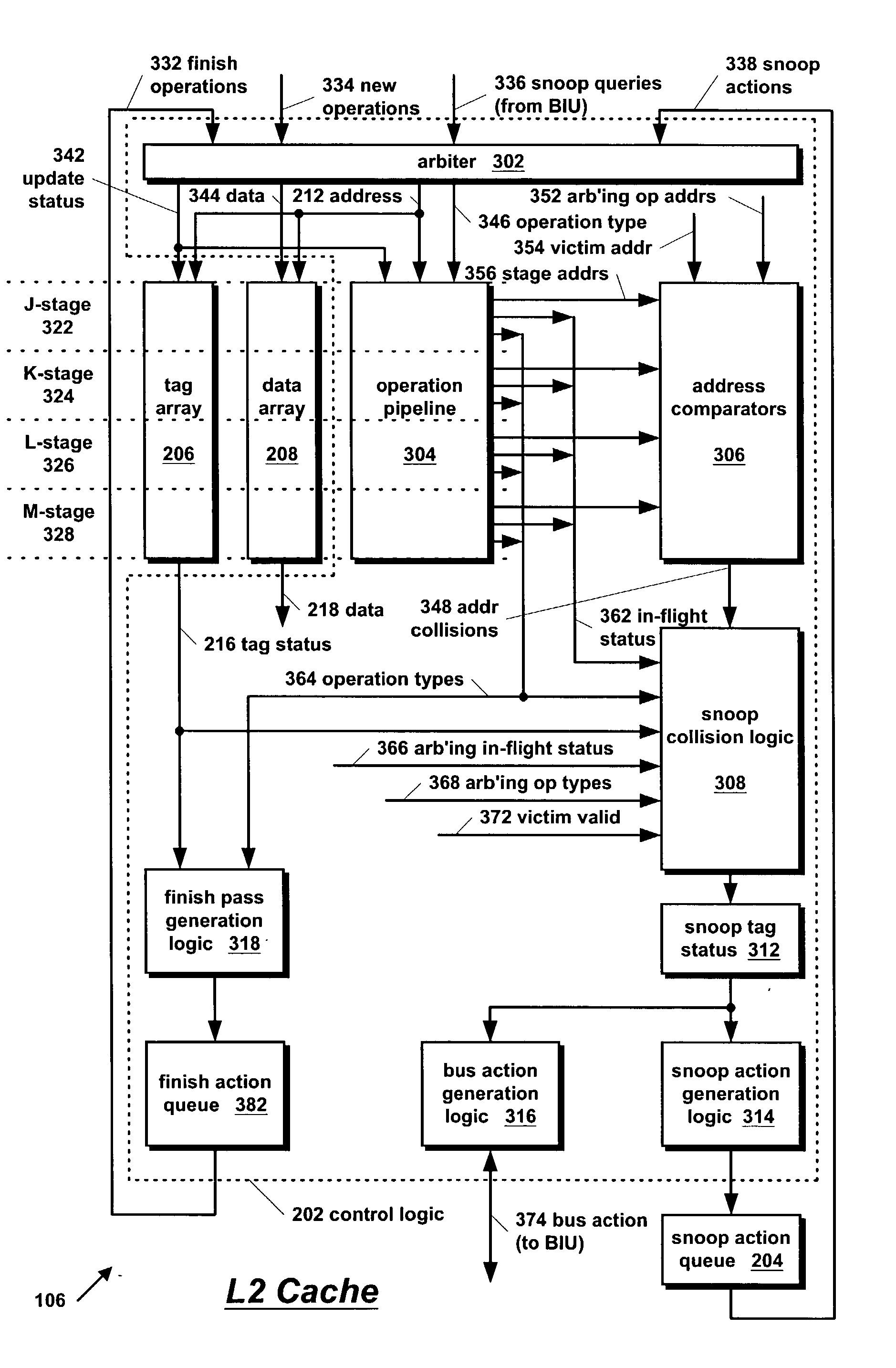

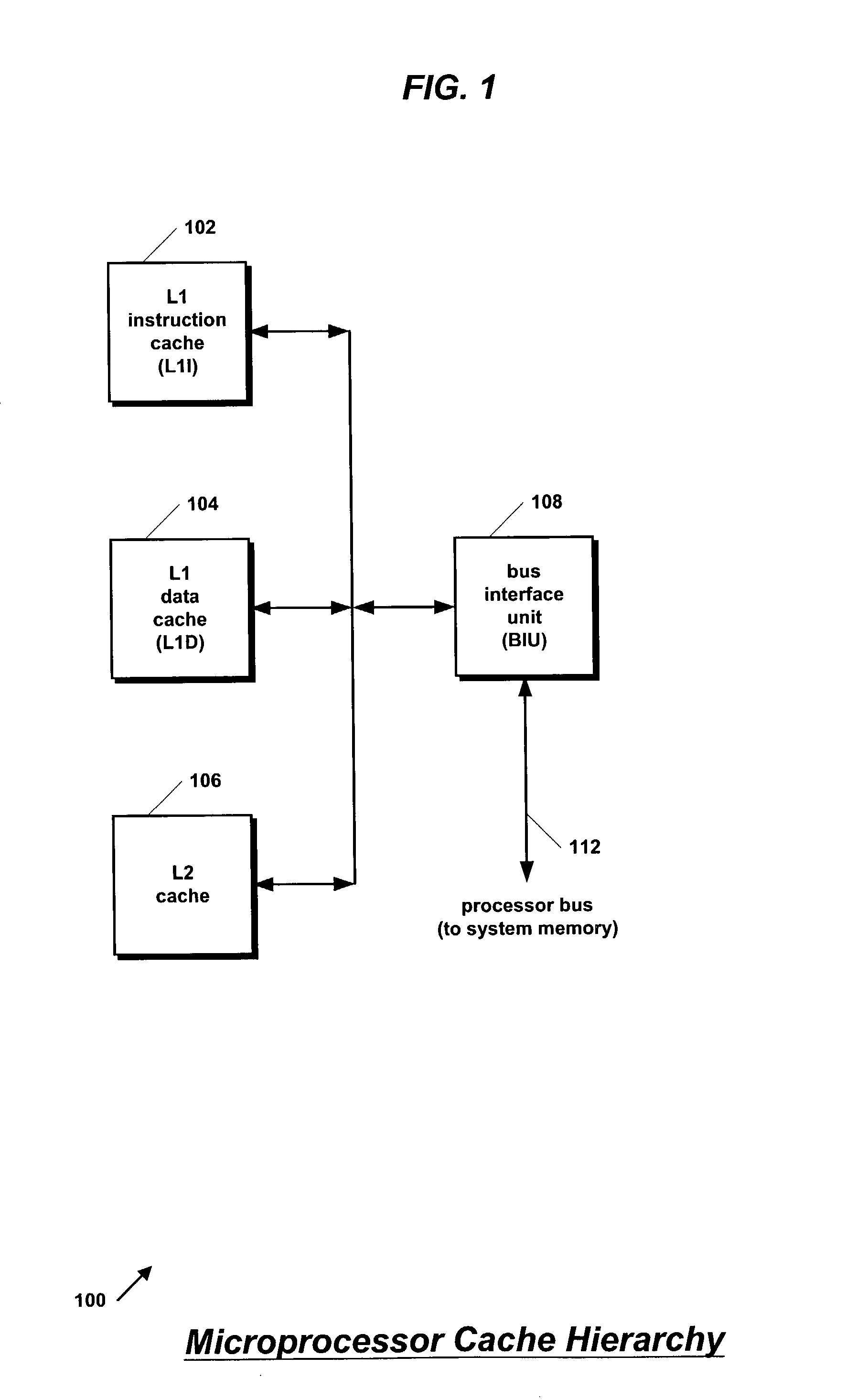

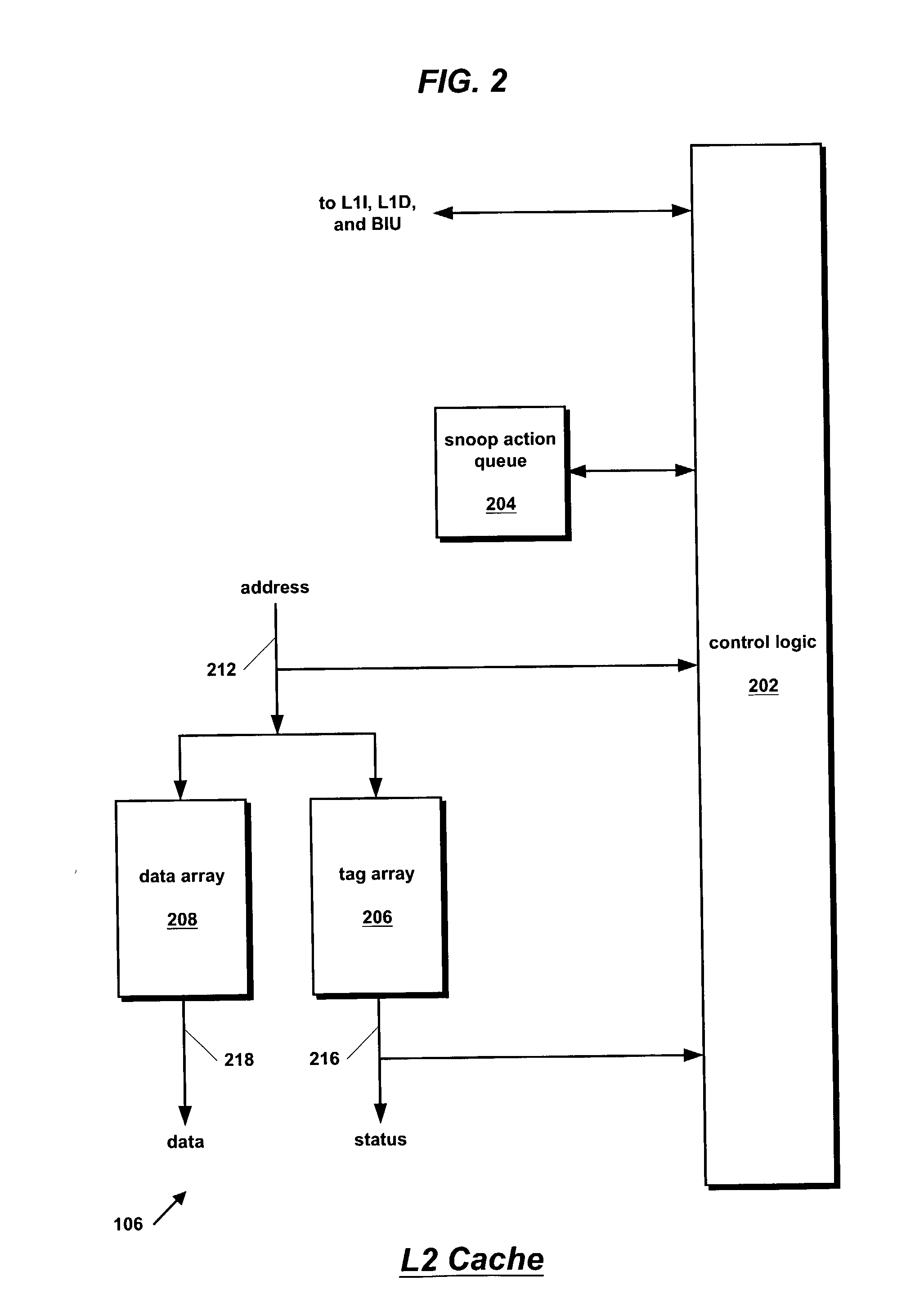

[0030] Referring now to FIG. 1, a block diagram illustrating a cache hierarchy in a microprocessor 100 according to the present invention is shown.

[0031] Microprocessor 100 comprises a cache hierarchy that includes a level-one instruction (L1I) cache 102, a level-one data (L1D) cache 104, and a level-two (L2) cache 106. The L1I 102 caches instructions, the L1D 104 caches data, and the L2 cache 106 caches both, which reduces the time microprocessor 100 needs to fetch instructions and data. In the memory hierarchy of the system, L2 cache 106 sits between the system memory and the L1I 102 and L1D 104. The L1I 102, L1D 104, and L2 cache 106 are coupled together, and the L1I 102 and the L1D 104 each transfer cache lines to and from L2 cache 106. For example, the L1I 102 and L1D 104 may cast out cache lines to, or load cache lines from, L2 cache 106.
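The line transfers described in [0031] can be pictured with a small model, sketched here in Python. L1I and L1D each exchange whole cache lines with a unified L2, and L2 fills from system memory on a miss. The 64-byte line size, the dictionary-based storage, and the `load`/`castout` method names are hypothetical choices for illustration. The model omits replacement policy, coherence state, and timing.

```python
from dataclasses import dataclass, field

LINE_SIZE = 64  # hypothetical cache-line size in bytes


def line_addr(addr: int) -> int:
    """Align a byte address down to its cache-line address."""
    return addr - (addr % LINE_SIZE)


@dataclass
class Cache:
    name: str
    lines: dict = field(default_factory=dict)  # line address -> line data

    def holds(self, addr: int) -> bool:
        return line_addr(addr) in self.lines


@dataclass
class Hierarchy:
    """Toy model: L1I and L1D each exchange whole lines with a unified L2."""
    l1i: Cache = field(default_factory=lambda: Cache("L1I"))
    l1d: Cache = field(default_factory=lambda: Cache("L1D"))
    l2: Cache = field(default_factory=lambda: Cache("L2"))
    memory: dict = field(default_factory=dict)  # stand-in for system memory

    def load(self, l1: Cache, addr: int) -> bytes:
        """Fill an L1 from L2, filling L2 from memory on an L2 miss."""
        la = line_addr(addr)
        if la not in self.l2.lines:
            self.l2.lines[la] = self.memory.get(la, bytes(LINE_SIZE))
        l1.lines[la] = self.l2.lines[la]
        return l1.lines[la]

    def castout(self, l1: Cache, addr: int) -> None:
        """Evict a line from an L1 and write it into L2."""
        la = line_addr(addr)
        self.l2.lines[la] = l1.lines.pop(la)
```

For example, `h.load(h.l1d, 0x1008)` brings the line at `0x1000` into both L2 and L1D. A later `h.castout(h.l1d, 0x1000)` moves that line, including any modified bytes, from L1D back into L2.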

[0032] Microprocessor 100 also includes a bu...
