Underwater scene depth evaluation method and system based on monocular vision

A monocular-vision scene-depth technique in the field of computer vision. It addresses three problems of existing approaches: limited practical application scenarios, a working range that is sensitive to lighting, and over-reliance on hardware devices. In doing so it saves computation and reduces restrictive conditions.

Examples

Embodiment 1

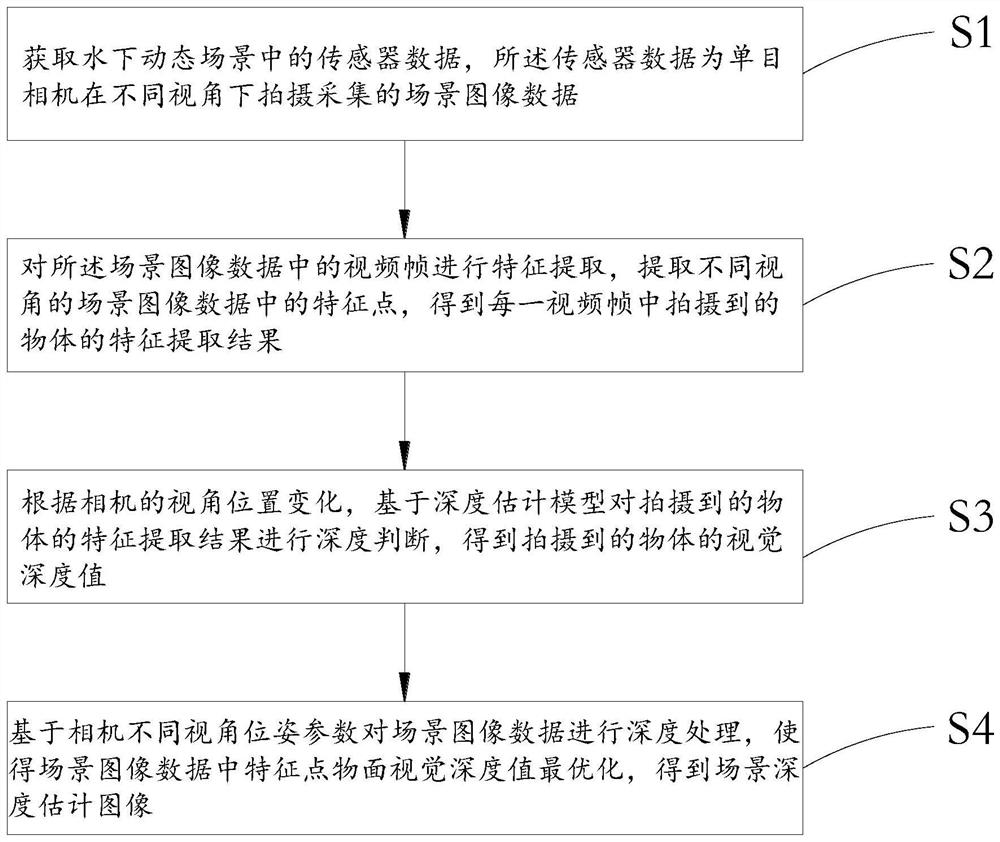

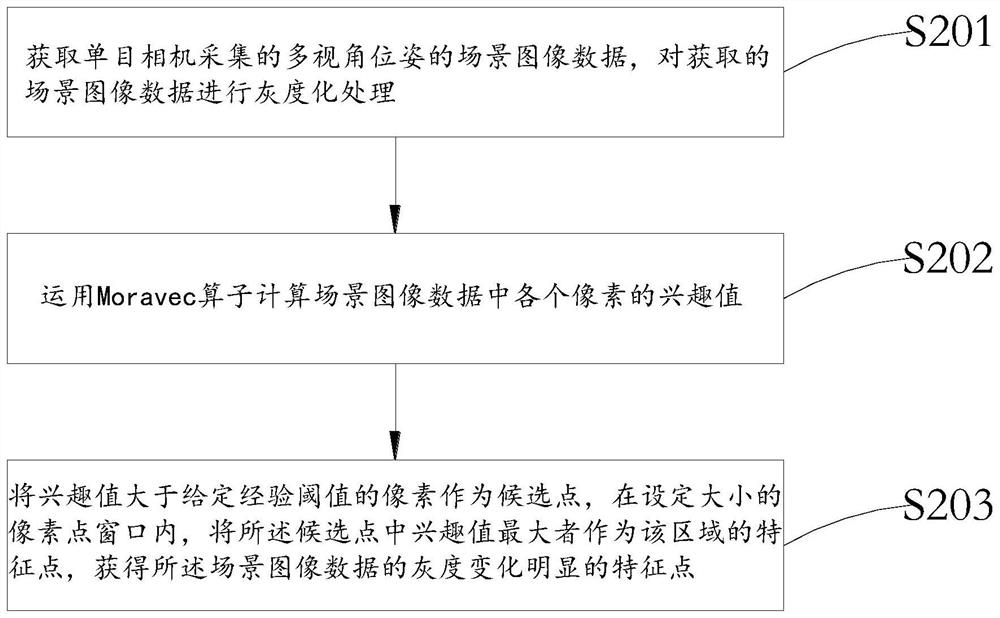

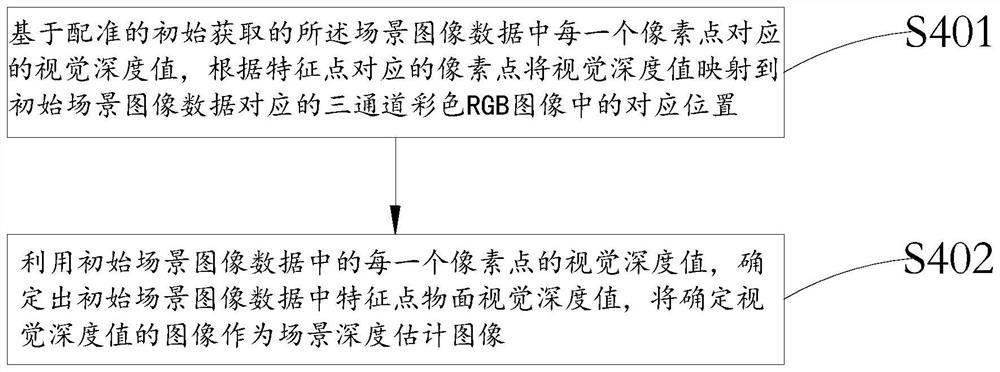

[0045] Figure 1 is a flowchart of the monocular-vision-based underwater scene depth evaluation method provided by the present invention. As shown in Figure 1, an embodiment of the present invention provides a monocular-vision-based underwater scene depth assessment method comprising the following steps:

[0046] S1: Acquire sensor data in an underwater dynamic scene. The sensor data is scene image data captured by a monocular camera from different viewing angles.

[0047] In this embodiment, the scene image data is obtained with a monocular camera mounted on an underwater device or underwater robot. The device enters the underwater dynamic scene and captures images of the scene from different rotational viewing angles.
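Step S1 depends on frames taken from *different* rotational viewing angles. One way to represent this is to tag each frame with its camera angle and discard near-duplicate views. The sketch below does that. It assumes a single yaw angle per frame, and `ViewFrame`, `collect_views`, `angular_gap` and the `min_separation_deg` threshold are all hypothetical illustrations. None of them comes from the patent.

```python
from dataclasses import dataclass, field
from typing import List


@dataclass
class ViewFrame:
    """One captured frame tagged with the camera's rotation angle (hypothetical schema)."""
    frame_id: int
    yaw_deg: float  # assumed: rotation about the vertical axis, in degrees
    pixels: List[List[float]] = field(default_factory=list)  # grayscale rows


def angular_gap(a: float, b: float) -> float:
    """Smallest absolute difference between two angles in degrees, handling wrap-around."""
    d = abs(a - b) % 360.0
    return min(d, 360.0 - d)


def collect_views(frames: List[ViewFrame], min_separation_deg: float = 5.0) -> List[ViewFrame]:
    """Keep frames (in order of increasing yaw) whose angle differs from every
    already-kept frame by at least min_separation_deg (hypothetical threshold)."""
    kept: List[ViewFrame] = []
    for f in sorted(frames, key=lambda fr: fr.yaw_deg):
        if all(angular_gap(f.yaw_deg, k.yaw_deg) >= min_separation_deg for k in kept):
            kept.append(f)
    return kept
```

For example, frames at 0°, 2°, 10° and 358° reduce to the 0° and 10° views. The 2° and 358° frames are each within 5° of the 0° view, once wrap-around is taken into account.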

[0048] Before the monocular camera captures images, the method further includes establishing a global coordinate system for the underwater motion scene by gridding, and establishing the gridded intersection between the pixels imag...
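Paragraph [0048] is truncated in this excerpt, so the gridding scheme is not fully specified. Purely as an illustration, the sketch below makes two assumptions: a square grid of `cell_px` pixels is overlaid on the image, and the global frame is an axis-aligned planar grid with spacing `spacing_m`. A pixel is snapped to its nearest grid intersection, and that intersection is mapped to global coordinates. Both functions and all parameter names are hypothetical.

```python
import math
from typing import Tuple


def pixel_to_grid_node(u: float, v: float, cell_px: float) -> Tuple[int, int]:
    """Snap pixel (u, v) to the nearest grid intersection, returned as (column, row).
    Uses floor(x + 0.5) so halfway cases always round up."""
    if cell_px <= 0:
        raise ValueError("cell_px must be positive")
    return (math.floor(u / cell_px + 0.5), math.floor(v / cell_px + 0.5))


def grid_node_to_global(col: int, row: int,
                        origin: Tuple[float, float],
                        spacing_m: float) -> Tuple[float, float]:
    """Map a grid intersection to planar global coordinates (metres), assuming the
    grid axes are aligned with the global X/Y axes and start at `origin`."""
    ox, oy = origin
    return (ox + col * spacing_m, oy + row * spacing_m)
```

With 20-pixel cells, pixel (47, 12) snaps to node (2, 1). With a 0.5 m spacing and origin (0, 0), that node lies at (1.0, 0.5) m in the assumed global frame.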

Embodiment 2

[0082] As shown in Figure 4, in a preferred embodiment provided by the present invention, a monocular-vision-based underwater scene depth assessment system includes an acquisition unit 100, a feature extraction unit 200, a depth judgment unit 300 and a depth assessment unit 400, wherein:
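The four units form a sequential pipeline, with each unit consuming the previous unit's output. The sketch below wires them together as pluggable callables. The class name, field names and data types are hypothetical, and the internal algorithms of the depth judgment and depth assessment units are not given in this excerpt, so they are left as parameters rather than guessed.

```python
from dataclasses import dataclass
from typing import Any, Callable, Dict, List


@dataclass
class DepthAssessmentSystem:
    """Hypothetical wiring of the four units in Figure 4; each stage is a callable."""
    acquire: Callable[[], List[Any]]                   # acquisition unit 100
    extract: Callable[[List[Any]], List[Any]]          # feature extraction unit 200
    judge: Callable[[List[Any]], List[Any]]            # depth judgment unit 300
    assess: Callable[[List[Any]], Dict[str, Any]]      # depth assessment unit 400

    def run(self) -> Dict[str, Any]:
        frames = self.acquire()              # multi-view scene images
        features = self.extract(frames)      # per-frame feature results
        judgments = self.judge(features)     # per-feature depth judgments
        return self.assess(judgments)        # final scene-depth assessment
```

Keeping each unit as an injected callable lets the acquisition, feature-extraction and depth stages be swapped or tested independently, which mirrors the separation into units 100 to 400.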

[0083] The acquisition unit 100 is configured to acquire scene image data captured by a monocular camera from different viewing angles in an underwater dynamic scene.

[0084] In this embodiment, each video frame in the scene image data collected by the acquisition unit 100 corresponds to one viewing angle of the monocular camera, so images of the scene are captured at different rotational viewing angles.

[0085] The feature extraction unit 200 is configured to extract feature points from the scene image data captured at different viewing angles and to obtain feature extraction results for the objects captured in each video frame.
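The excerpt does not name the feature detector used by unit 200. As a stand-in, the sketch below uses the standard Harris corner detector, a swapped-in technique and not necessarily the patent's own. It works on a grayscale image given as a list of rows, computes central-difference gradients, sums the structure tensor over a 3×3 window, and keeps pixels whose response exceeds `threshold` and is a 3×3 local maximum. The function name and the default `k` and `threshold` values are illustrative choices.

```python
from typing import List, Tuple

Image = List[List[float]]


def harris_corners(img: Image, k: float = 0.04,
                   threshold: float = 1e-3) -> List[Tuple[int, int]]:
    """Return (row, col) feature points via the Harris response R = det(M) - k*trace(M)^2."""
    h, w = len(img), len(img[0])
    # Central-difference gradients; left at zero on the 1-pixel border.
    ix = [[0.0] * w for _ in range(h)]
    iy = [[0.0] * w for _ in range(h)]
    for r in range(1, h - 1):
        for c in range(1, w - 1):
            ix[r][c] = (img[r][c + 1] - img[r][c - 1]) / 2.0
            iy[r][c] = (img[r + 1][c] - img[r - 1][c]) / 2.0
    # Structure tensor summed over a 3x3 window, then the Harris response.
    resp = [[0.0] * w for _ in range(h)]
    for r in range(2, h - 2):
        for c in range(2, w - 2):
            sxx = syy = sxy = 0.0
            for dr in (-1, 0, 1):
                for dc in (-1, 0, 1):
                    gx, gy = ix[r + dr][c + dc], iy[r + dr][c + dc]
                    sxx += gx * gx
                    syy += gy * gy
                    sxy += gx * gy
            trace = sxx + syy
            resp[r][c] = (sxx * syy - sxy * sxy) - k * trace * trace
    # Keep responses above threshold that are maximal in their 3x3 neighbourhood.
    corners: List[Tuple[int, int]] = []
    for r in range(2, h - 2):
        for c in range(2, w - 2):
            v = resp[r][c]
            if v > threshold and all(v >= resp[r + dr][c + dc]
                                     for dr in (-1, 0, 1) for dc in (-1, 0, 1)):
                corners.append((r, c))
    return corners
```

On a 12×12 image containing a bright 4×4 square (rows and columns 4 to 7), this returns exactly the square's four corner pixels. Pixels along straight edges get a negative response and are rejected. Applying it to each frame separately would give the per-frame feature results described in [0085].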
