Video tracking system and method based on target feature space-time alignment

A video tracking technology based on target features, applied in the field of video tracking. It addresses the problem that existing methods do not account for mismatch between preceding and following frames, and it achieves good discrimination between targets.

Detailed Description of Embodiments

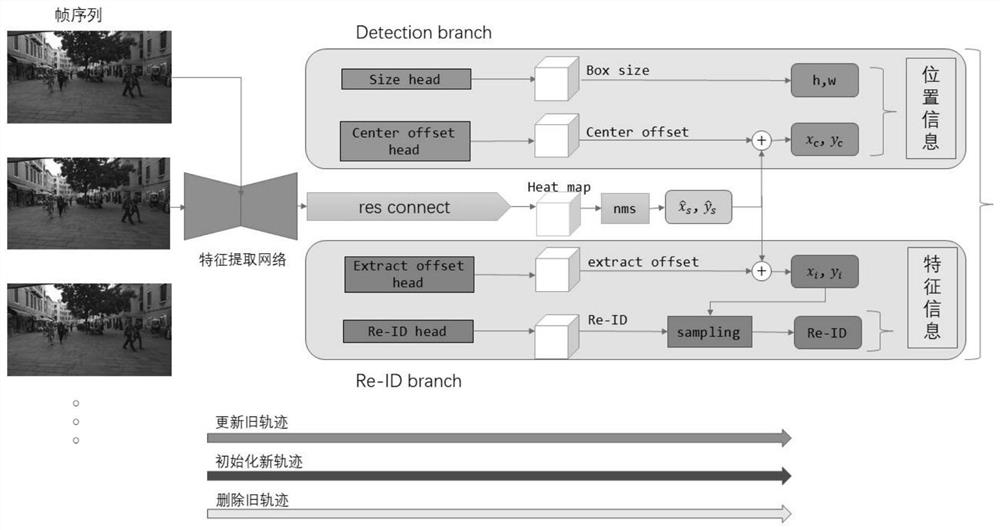

[0021] As shown in Figure 1 and Figure 2, the video tracking system based on spatio-temporal alignment of target features in this embodiment includes a global feature extraction module, a target position prediction module, a target feature extraction module, and a target tracking module.
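The four-module structure can be pictured as a simple per-frame pipeline. This is a minimal sketch under stated assumptions: the class `VideoTracker`, its field names, the call signatures, and the data passed between modules (in particular, that the position predictor consumes the adjacent frame similarity) are hypothetical and are not taken from the patent text.

```python
from dataclasses import dataclass
from typing import Any, Callable, List

# Hypothetical alias: each module is modelled as a plain callable.
Module = Callable[..., Any]


@dataclass
class VideoTracker:
    """Chains the four modules named in the embodiment, once per frame.

    Module boundaries follow the text; all signatures are assumptions.
    """
    global_feature_extraction: Module   # (frame, ref_frame) -> (features, similarity)
    target_position_prediction: Module  # (similarity, prev_positions) -> positions
    target_feature_extraction: Module   # (features, positions) -> per-target features
    target_tracking: Module             # (target_features, positions) -> tracks

    def step(self, frame: Any, ref_frame: Any, prev_positions: List[Any]) -> Any:
        # 1. Global features of the current frame plus adjacent-frame similarity.
        features, similarity = self.global_feature_extraction(frame, ref_frame)
        # 2. Predict where each previously tracked target is now (assumed input).
        positions = self.target_position_prediction(similarity, prev_positions)
        # 3. Pool per-target features at the predicted positions.
        target_feats = self.target_feature_extraction(features, positions)
        # 4. Associate targets across frames.
        return self.target_tracking(target_feats, positions)
```

Passing the modules in as callables keeps the sketch independent of any particular network, so each stage can be replaced by a real model or by a stub when testing the data flow.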

[0022] As shown in Figure 3, the global feature extraction module includes a feature extraction network and an adjacent frame similarity calculation unit. The feature extraction network takes the original frame and the reference frame of the video under test and generates a feature map for each; after downsampling, each feature map has size (C, H, W). The adjacent frame similarity calculation unit then computes the similarity between the two feature maps.
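To make the (C, H, W) bookkeeping concrete, here is a minimal pure-Python sketch in which stride-s average pooling stands in for the feature extraction network. This substitution is an assumption for illustration only: a real backbone would also change the channel count C, and the function names and the default stride of 4 are hypothetical.

```python
from typing import List, Tuple

# Feature maps are nested lists indexed [channel][row][col].
FeatureMap = List[List[List[float]]]


def shape(x: FeatureMap) -> Tuple[int, int, int]:
    """Return (C, H, W) of a nested-list feature map."""
    return (len(x), len(x[0]), len(x[0][0]))


def downsample(x: FeatureMap, stride: int) -> FeatureMap:
    """Average-pool each channel with a stride x stride window, no padding.

    Stand-in for a CNN backbone: only the spatial shape change is meant to be
    representative. Rows/cols that do not fill a full window are dropped.
    """
    c, h, w = shape(x)
    oh, ow = h // stride, w // stride
    out = []
    for ch in range(c):
        plane = []
        for i in range(oh):
            row = []
            for j in range(ow):
                window = [x[ch][i * stride + a][j * stride + b]
                          for a in range(stride) for b in range(stride)]
                row.append(sum(window) / len(window))
            plane.append(row)
        out.append(plane)
    return out


def extract_pair(frame: FeatureMap, ref_frame: FeatureMap, stride: int = 4):
    """Feature maps for the original and reference frames at the same stride."""
    f, r = downsample(frame, stride), downsample(ref_frame, stride)
    # Both maps must share (C, H, W) so they can be compared pixel by pixel.
    assert shape(f) == shape(r)
    return f, r
```

Using the same stride for both frames is what guarantees the two maps align spatially, which the similarity step relies on.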

[0023] The similarity between adjacent-frame feature maps is computed by spatial correlation, that is, each pixel on the c...
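Paragraph [0023] is truncated, so the exact correlation rule is not recoverable from this excerpt. One common form of spatial correlation, used here purely as an assumed stand-in (FlowNet-style local correlation), compares each pixel of the current feature map with pixels of the reference feature map inside a small displacement window. The window radius `max_disp`, the 1/C normalization, zero padding, and all function names are hypothetical choices.

```python
from typing import List, Tuple

# Feature maps are nested lists indexed [channel][row][col], shape (C, H, W).
FeatureMap = List[List[List[float]]]


def displacements(max_disp: int) -> List[Tuple[int, int]]:
    """All (di, dj) offsets with |di|, |dj| <= max_disp, in row-major order."""
    return [(di, dj)
            for di in range(-max_disp, max_disp + 1)
            for dj in range(-max_disp, max_disp + 1)]


def spatial_correlation(cur: FeatureMap, ref: FeatureMap,
                        max_disp: int = 1) -> FeatureMap:
    """Local correlation between two (C, H, W) maps (assumed FlowNet-style form).

    Output channel k holds, for every pixel (i, j) of `cur`, the channel-wise
    dot product with ref[:, i + di, j + dj] for the k-th offset, divided by C.
    Offsets that fall outside `ref` give 0 (zero padding).
    Output shape: ((2 * max_disp + 1) ** 2, H, W).
    """
    c, h, w = len(cur), len(cur[0]), len(cur[0][0])
    offsets = displacements(max_disp)
    out = [[[0.0] * w for _ in range(h)] for _ in offsets]
    for k, (di, dj) in enumerate(offsets):
        for i in range(h):
            for j in range(w):
                ri, rj = i + di, j + dj
                if 0 <= ri < h and 0 <= rj < w:
                    dot = sum(cur[ch][i][j] * ref[ch][ri][rj] for ch in range(c))
                    out[k][i][j] = dot / c
    return out


def best_offset(corr: FeatureMap, max_disp: int, i: int, j: int) -> Tuple[int, int]:
    """Offset with the highest correlation at pixel (i, j) of the current map."""
    offsets = displacements(max_disp)
    k = max(range(len(offsets)), key=lambda idx: corr[idx][i][j])
    return offsets[k]
```

For a feature that shifts one cell to the right between frames, the strongest response at its location appears in the (0, 1) offset channel; a tracker could use such peaks as a cue for aligning target features across frames. Restricting the search to a local window keeps the cost at O((2d+1)^2 · C · H · W) instead of comparing every pixel pair.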
