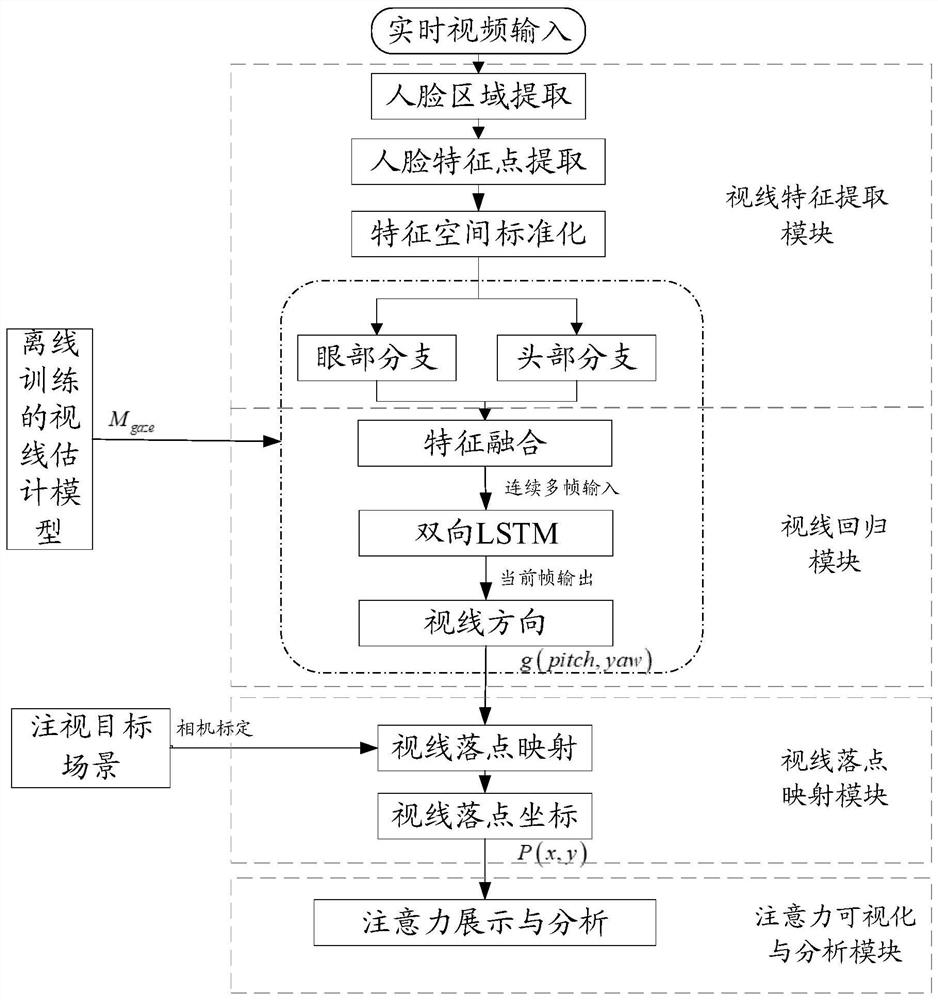

System and method for realizing sight line estimation and attention analysis based on recursive convolutional neural network

A neural-network-based line-of-sight estimation technology, applied in the field of computer vision. It addresses the inability to form an end-to-end system, low practicability, and difficulty in model training and deployment, and achieves the effect of enriching the data output interface.


Examples

Embodiment 1

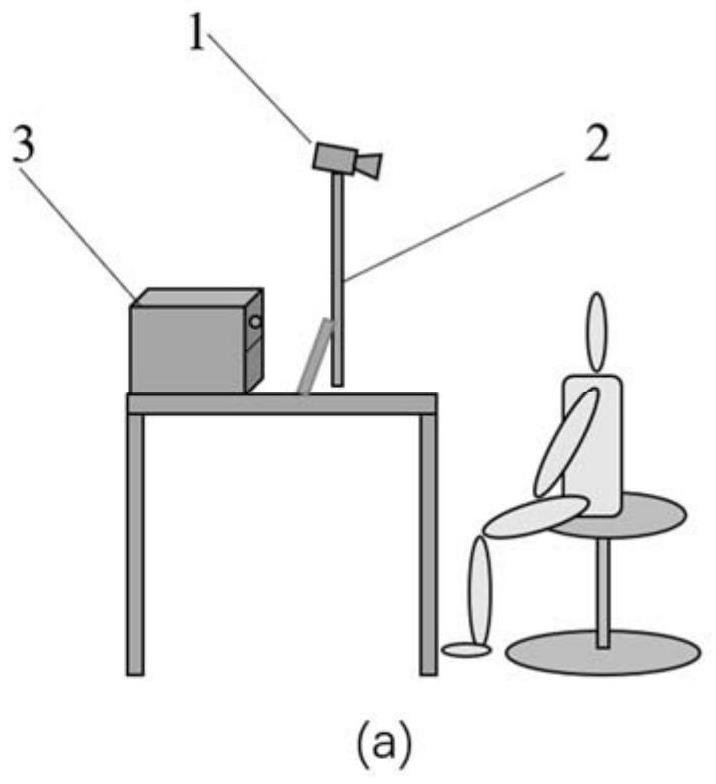

[0087] Embodiment 1: Sight Estimation System Based on Screen Stimuli

[0088] In the sight estimation system based on screen stimuli, the terminal display screen is the user's only source of visual stimuli, so the system is concerned only with information such as the fixation point position and fixation time within the screen range.
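As an illustration of "fixation time within the screen range", the following minimal sketch sums the time during which estimated gaze points fall inside the screen. It is not taken from the patent: the sample format `(timestamp, x, y)`, the pixel coordinate convention, and the function names `on_screen` and `screen_dwell_time` are all assumptions made for this example.

```python
from typing import Iterable, Tuple

# Hypothetical sample format: (timestamp in seconds, x in pixels, y in pixels).
Sample = Tuple[float, float, float]


def on_screen(x: float, y: float, width: int, height: int) -> bool:
    """Return True if the gaze point lies inside the screen rectangle."""
    return 0 <= x < width and 0 <= y < height


def screen_dwell_time(samples: Iterable[Sample], width: int, height: int) -> float:
    """Sum the durations of intervals whose starting sample is on screen.

    Each interval between two consecutive samples is attributed to the
    earlier sample; the last sample contributes no duration.
    """
    ordered = list(samples)
    total = 0.0
    for (t0, x0, y0), (t1, _, _) in zip(ordered, ordered[1:]):
        if on_screen(x0, y0, width, height):
            total += t1 - t0
    return total


if __name__ == "__main__":
    gaze = [(0.0, 100, 100), (0.5, 2000, 100), (1.0, 200, 200), (1.5, 300, 300)]
    print(screen_dwell_time(gaze, width=1920, height=1080))
```

Attributing each interval to its starting sample is one simple convention; a real system might instead interpolate between samples or group them into fixations first.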

[0089] The application scenario of the system is shown schematically in Fig. 2. Following the labels in the figure, the hardware mainly comprises: 1. a visual acquisition device (a monocular camera, either a USB camera or a network camera); 2. a terminal display; 3. a controller. The camera can be installed in two ways: stick-on installation, in which it is mounted in the middle of the upper or lower edge of the terminal display screen, or independent installation, in which it is mounted beside the screen on a camera bracket, the above ...

Embodiment 2

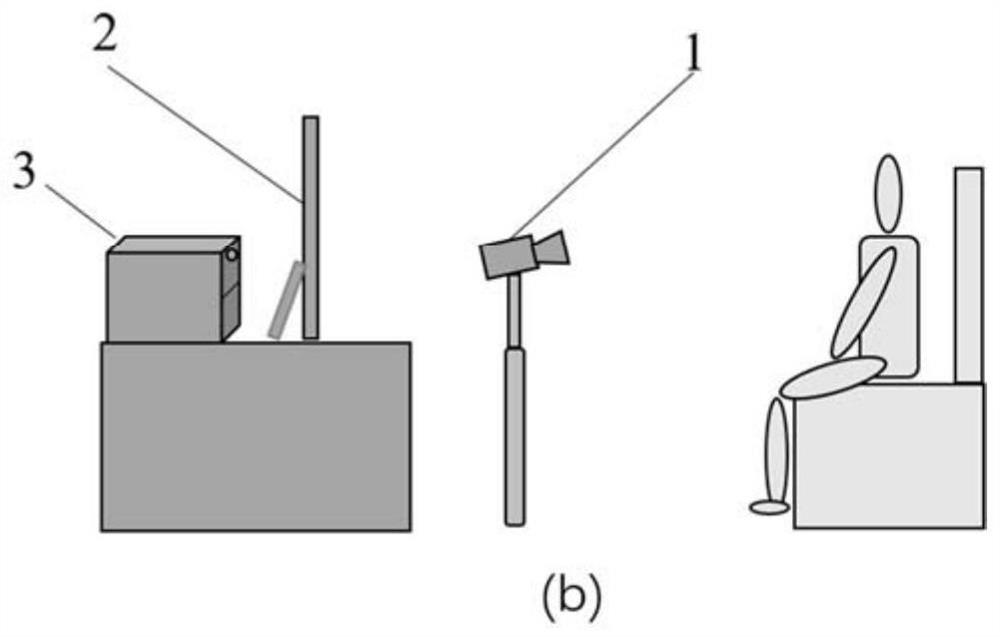

[0123] Embodiment 2: Sight Estimation System Based on Physical Stimulation

[0124] In the eye-tracking system based on physical stimulation, the user's gaze scene is a three-dimensional space: the gaze target can be a plane in that space, or people or objects at different depths of field. Because the scenes that provide visual stimulation are not restricted, its application range is wider. The application scenario of the system is shown schematically in Figure 8(a) and Figure 8(b): in Figure 8(a) the gaze scene is a plane in three-dimensional space, and in Figure 8(b) the gaze scene consists of people and objects in the space.
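For the case where the gaze scene is a plane in three-dimensional space, one common way to obtain a gaze point is to intersect the estimated gaze ray with that plane. The sketch below shows standard ray-plane intersection only; it is not the patent's method, and the function name `gaze_point_on_plane`, its parameters, and the shared coordinate frame are assumptions for illustration.

```python
from typing import Optional, Sequence, Tuple

Vec3 = Sequence[float]


def dot(a: Vec3, b: Vec3) -> float:
    """Dot product of two 3D vectors."""
    return sum(x * y for x, y in zip(a, b))


def gaze_point_on_plane(
    origin: Vec3,
    direction: Vec3,
    plane_point: Vec3,
    plane_normal: Vec3,
    eps: float = 1e-9,
) -> Optional[Tuple[float, ...]]:
    """Intersect a gaze ray with a plane.

    The ray is origin + t * direction for t >= 0. Returns the intersection
    point, or None if the ray is parallel to the plane or the plane lies
    behind the ray origin. All vectors are assumed to share one frame.
    """
    denom = dot(direction, plane_normal)
    if abs(denom) < eps:
        return None  # gaze direction is parallel to the plane
    offset = [p - o for p, o in zip(plane_point, origin)]
    t = dot(offset, plane_normal) / denom
    if t < 0:
        return None  # plane is behind the viewer
    return tuple(o + t * d for o, d in zip(origin, direction))


if __name__ == "__main__":
    # Eye at the origin looking along +z at a plane two units away.
    print(gaze_point_on_plane((0, 0, 0), (0, 0, 1), (0, 0, 2), (0, 0, -1)))
```

For people or objects at different depths, as in Figure 8(b), a plane intersection is not sufficient on its own; some additional depth or scene information would be needed.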

[0125] Following the labels in the scene schematic, the hardware components of the sight tracking system based on physical stimuli mainly include: 1. User-oriented visual acquisition equipment; the camera can be an ordinary USB monocular camera or a network camera, both of wh...
