Method for improving continuous control stability of intelligent agent

An intelligent body and stability technology, applied in the direction of instruments, computing models, artificial life, etc., can solve problems such as difficult modeling, inability to enhance robustness, inability to know the difference between new environment and training environment, etc., to achieve enhanced robustness , the effect of improving stability

- Summary

- Abstract

- Description

- Claims

- Application Information

AI Technical Summary

Problems solved by technology

Method used

Image

Examples

Embodiment 1

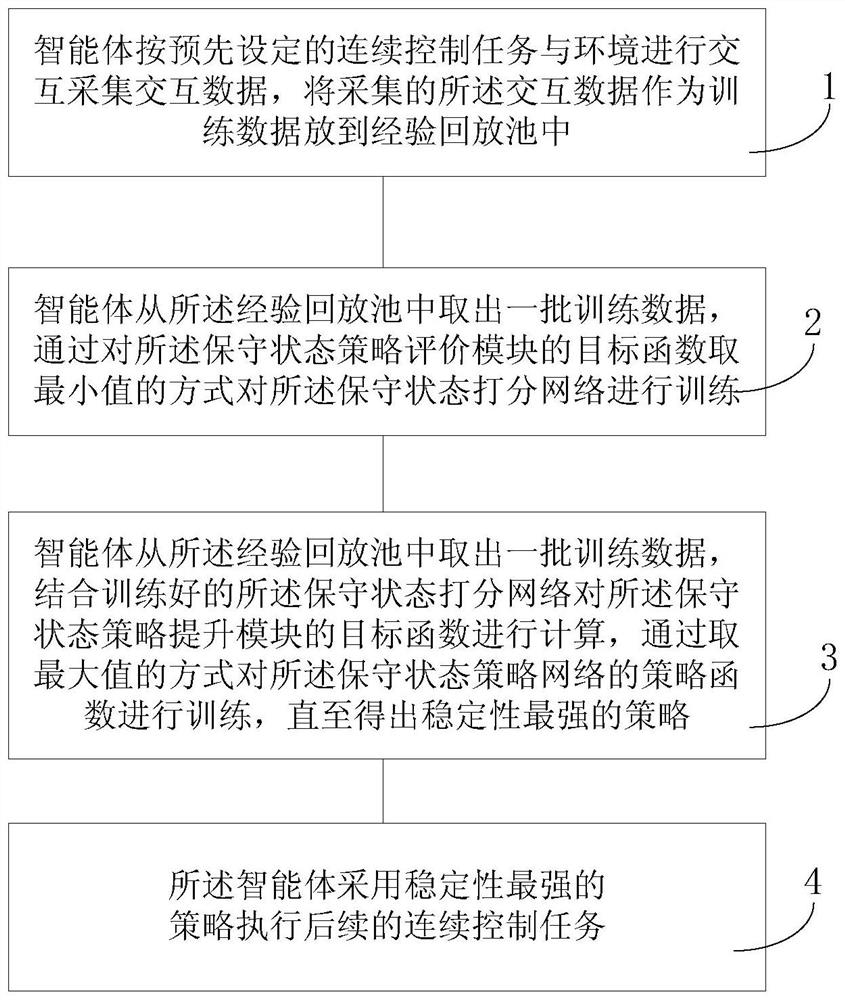

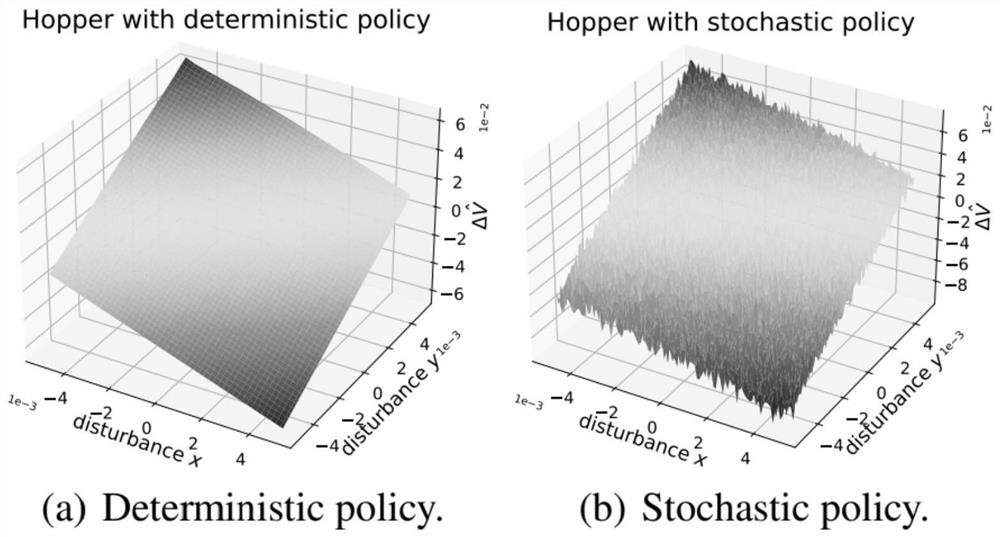

[0088] The embodiment of the present invention provides a method for improving the stability of the continuous control of the agent, which can be called the conservative state strategy optimization method (SC-SAC), which is based on the existing conservative state Markov decision process and the corresponding conservative state strategy iteration and Based on the value iteration algorithm, a new robust reinforcement learning method is formed, which includes two parts: the conservative state policy evaluation network and the conservative state policy promotion network, and uses the gradient regularization term to efficiently solve its optimization goal.

[0089] Among them, the conservative state policy evaluation network is used as the scoring network of the agent, and the following objective function (1) is minimized to train the scoring function Q(critic):

[0090]

[0091] in is defined as:

[0092]

[0093]

[0094] The conservative state policy boosting network...

Embodiment 2

[0124] This embodiment provides a method for improving the stability of the continuous control of the agent, including:

[0125] Preparation Phase:

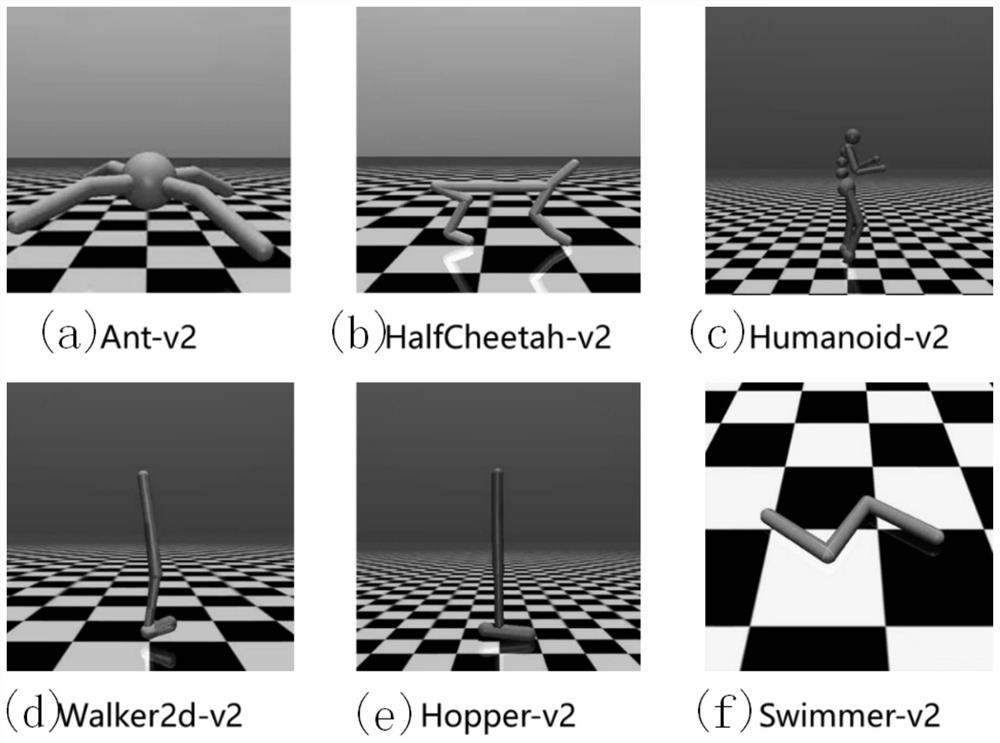

[0126] Select the current task to be tested and split it into two parts: the agent and the environment. The agent accepts the state selection action of the environment feedback; the environment accepts the action to determine the new state, and then abstracts the actions that the agent can perform. And set the rewards accepted by the agent. Maximizing the cumulative value of rewards is the goal of the present invention.

[0127] Training phase:

[0128] Use the deep learning method to implement the above pseudo-code, deploy it in the agent, the agent follows the corresponding process in the pseudo-code to interact with the training environment, and use the data collected interactively for the training of the strategy, repeating a period of time train;

[0129] Verification phase:

[0130] Deploy the learning strategy obtaine...

PUM

Login to View More

Login to View More Abstract

Description

Claims

Application Information

Login to View More

Login to View More - R&D

- Intellectual Property

- Life Sciences

- Materials

- Tech Scout

- Unparalleled Data Quality

- Higher Quality Content

- 60% Fewer Hallucinations

Browse by: Latest US Patents, China's latest patents, Technical Efficacy Thesaurus, Application Domain, Technology Topic, Popular Technical Reports.

© 2025 PatSnap. All rights reserved.Legal|Privacy policy|Modern Slavery Act Transparency Statement|Sitemap|About US| Contact US: help@patsnap.com