Model training method and device, task processing method, storage medium and processor

A model training and task processing technology in the field of deep learning. It addresses problems such as weak active risk perception and resilience, and the inability to carry out model training, with the effects of improving active perception and resilience, coping with model performance degradation, improving robustness, and achieving self-enhancement.

Examples

Embodiment 1

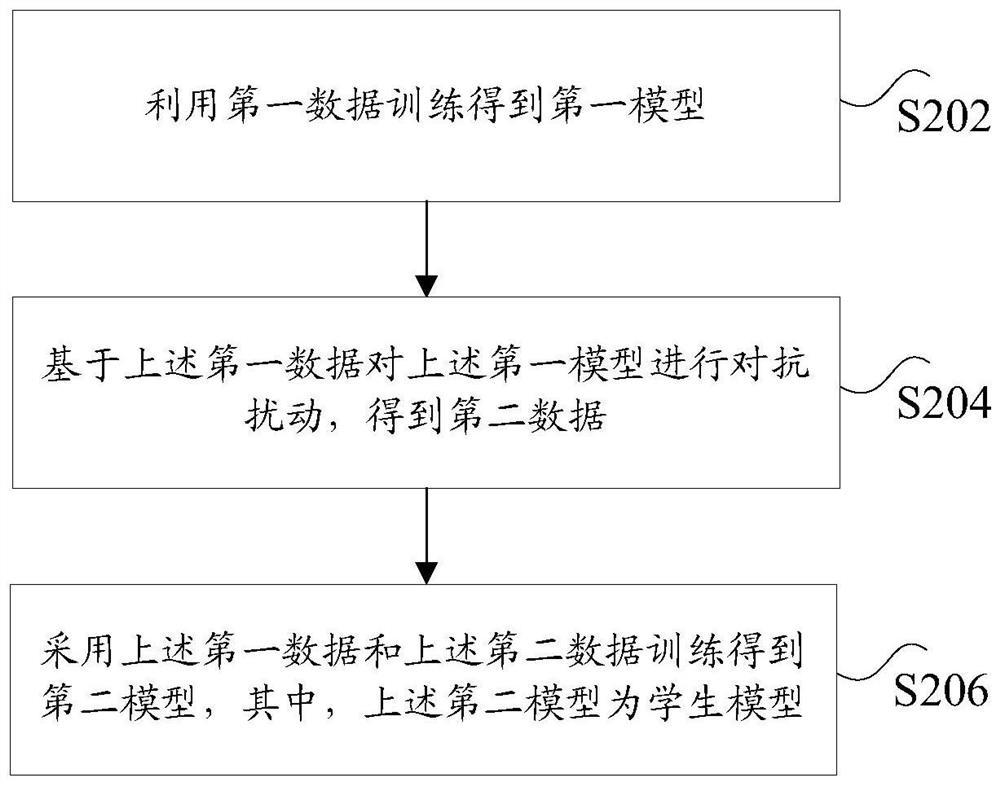

[0029] According to an embodiment of the present invention, an embodiment of a model training method is provided. It should be noted that the steps shown in the flowcharts of the accompanying drawings may be executed in a computer system, for example as a set of computer-executable instructions, and that, although the flowcharts show a logical order, in some cases the steps shown or described may be performed in an order different from the one given here.

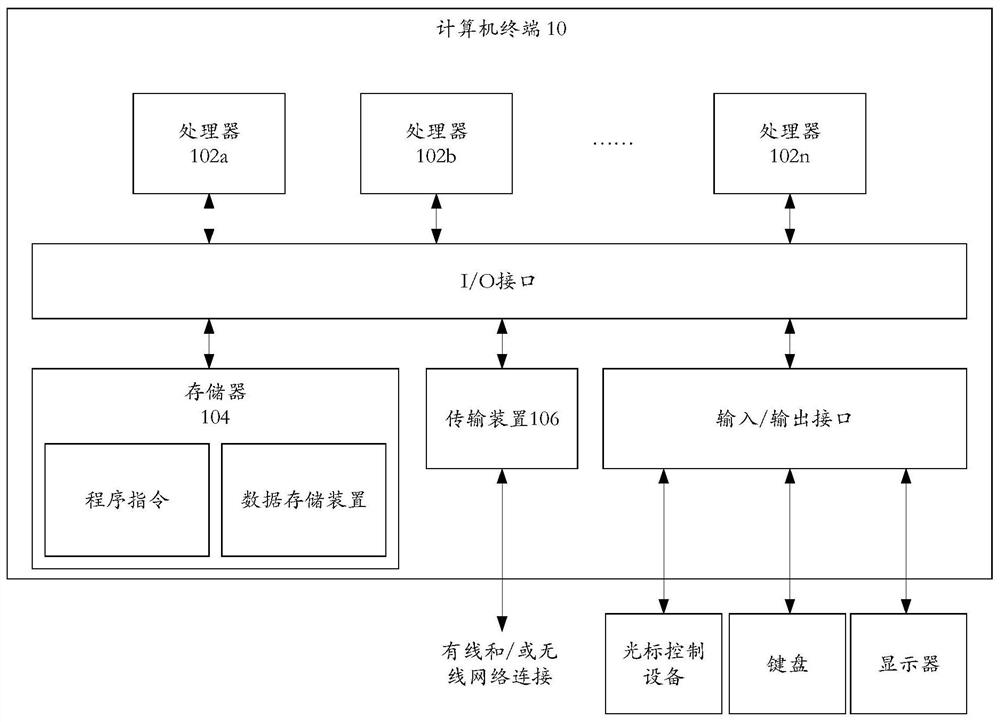

[0030] The method embodiment provided in Embodiment 1 of the present invention may be executed in a mobile terminal, a computer terminal, or a similar computing device. Figure 1 shows a hardware block diagram of a computer terminal (or mobile device) for implementing the model training method. As shown in Figure 1, the computer terminal 10 (or mobile device 10) may include one or more processors 102 (shown as 102a, 102b, ..., 102n in the figure) (the processor 102 may include but is not limited to a mi...

Embodiment 2

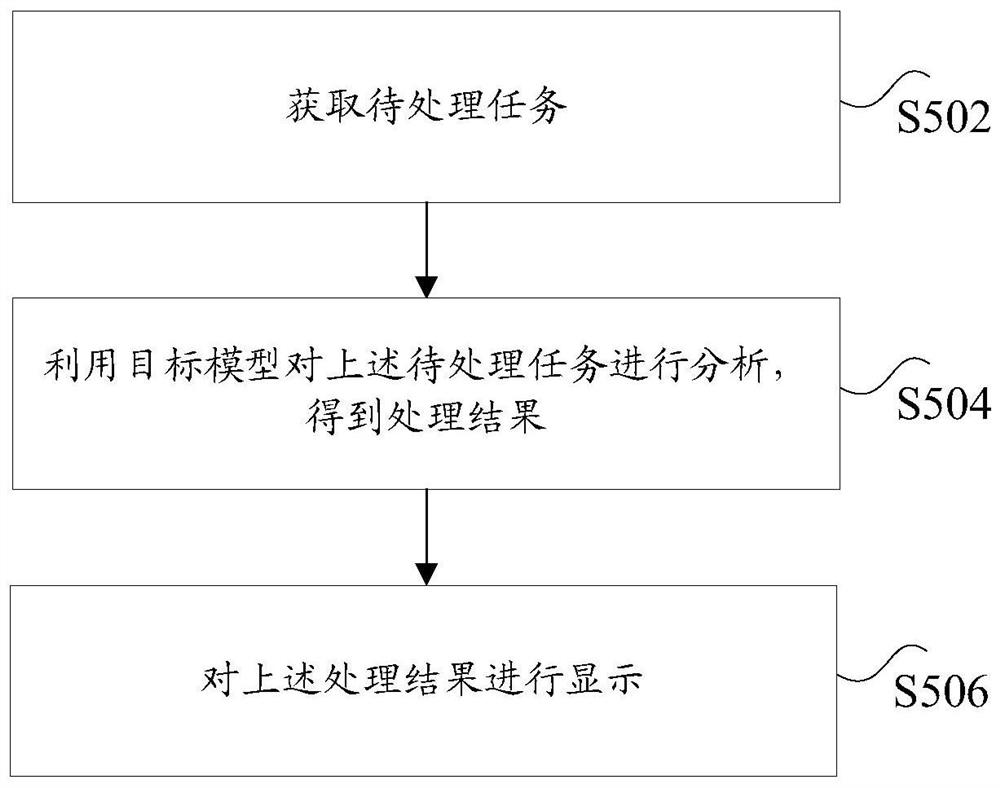

[0087] According to an embodiment of the present invention, a device for implementing the above model training method is also provided. Figure 5 is a schematic structural diagram of a model training device according to an embodiment of the present invention. As shown in Figure 5, the device includes a first training module 500, an anti-disturbance module 502 and a second training module 504, wherein:

[0088] The first training module 500 is configured to train a first model using first data, where the first data is labeled data and the first model is a teacher model; the anti-disturbance module 502 is configured to apply anti-disturbance to the first model to obtain second data, where the second data is pseudo-labeled data; the second training module 504 is configured to train on the first data and the second data to obtain a second model, where the second model is the ...
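The division of labor among modules 500, 502 and 504 can be illustrated as a simple composition of three pluggable callables. This is a minimal sketch under stated assumptions, not the device described in the patent: the class `ModelTrainingDevice`, the toy threshold trainer `fit_threshold`, the perturbation rule in `perturb_and_label` and the 1-D data are all hypothetical stand-ins, since the excerpt does not specify the model type or how the anti-disturbance is computed.

```python
from dataclasses import dataclass
from typing import Callable, List, Tuple

Sample = Tuple[float, int]      # hypothetical 1-D sample: (feature, label)
Model = Callable[[float], int]  # a "model" maps a feature to a class label


@dataclass
class ModelTrainingDevice:
    """Hypothetical composition of modules 500, 502 and 504."""
    first_training_module: Callable[[List[Sample]], Model]
    anti_disturbance_module: Callable[[Model, List[Sample]], List[Sample]]
    second_training_module: Callable[[List[Sample]], Model]

    def run(self, first_data: List[Sample]):
        teacher = self.first_training_module(first_data)                 # module 500: labeled data -> teacher
        second_data = self.anti_disturbance_module(teacher, first_data)  # module 502: -> pseudo-labeled data
        student = self.second_training_module(first_data + second_data)  # module 504: train on both sets
        return teacher, second_data, student


def fit_threshold(data: List[Sample]) -> Model:
    """Toy trainer (assumption): decision threshold halfway between the two class means."""
    xs0 = [x for x, y in data if y == 0]
    xs1 = [x for x, y in data if y == 1]
    t = (sum(xs0) / len(xs0) + sum(xs1) / len(xs1)) / 2
    return lambda x: int(x > t)


def perturb_and_label(teacher: Model, data: List[Sample], eps: float = 0.5) -> List[Sample]:
    """Toy perturbation (assumption): move each input eps toward the other class, then pseudo-label it."""
    second = []
    for x, y in data:
        x_adv = x + eps if y == 0 else x - eps  # assumes class 0 lies at smaller x than class 1
        second.append((x_adv, teacher(x_adv)))  # label comes from the teacher, not the ground truth
    return second


device = ModelTrainingDevice(fit_threshold, perturb_and_label, fit_threshold)
teacher, second_data, student = device.run([(0.0, 0), (1.0, 0), (3.0, 1), (4.0, 1)])
print(second_data)  # [(0.5, 0), (1.5, 0), (2.5, 1), (3.5, 1)]
```

Keeping each module as an injected callable mirrors the modular structure of Figure 5: any of the three stages can be swapped (for example, a neural network in place of the threshold rule) without changing the orchestration in `run`.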

Embodiment 3

[0092] According to an embodiment of the present invention, an electronic device is further provided; the electronic device may be any computing device in a computing device group. The electronic device includes a processor and a memory, wherein:

[0093] the processor; and the memory, connected to the processor and configured to provide the processor with instructions for the following processing steps: Step 1, train a first model using first data, where the first data is labeled data and the first model is a teacher model; Step 2, based on the first data, apply anti-disturbance to the first model to obtain second data, where the second data is pseudo-labeled data; Step 3, train a second model using the first data and the second data, where the second model is a s...
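Steps 1 to 3 can be made concrete with a small teacher-student example. This is a hedged sketch, not the patented method: it assumes the "anti-disturbance" is an adversarial perturbation and swaps in the fast gradient sign method (FGSM) on a logistic-regression teacher, which the excerpt does not confirm. The helper names (`train_logreg`, `fgsm_pseudo_label`, `teacher_student`), the hyperparameters and the toy 2-D data are all hypothetical.

```python
import math


def sigmoid(z):
    # Numerically stable logistic function.
    if z >= 0:
        return 1.0 / (1.0 + math.exp(-z))
    e = math.exp(z)
    return e / (1.0 + e)


def predict_proba(model, x):
    w, b = model
    return sigmoid(sum(wi * xi for wi, xi in zip(w, x)) + b)


def predict(model, x):
    return int(predict_proba(model, x) >= 0.5)


def train_logreg(data, epochs=2000, lr=0.2):
    """Full-batch gradient descent on mean binary cross-entropy."""
    dim, n = len(data[0][0]), len(data)
    w, b = [0.0] * dim, 0.0
    for _ in range(epochs):
        gw, gb = [0.0] * dim, 0.0
        for x, y in data:
            err = predict_proba((w, b), x) - y  # dLoss/dlogit
            for i in range(dim):
                gw[i] += err * x[i]
            gb += err
        w = [wi - lr * gi / n for wi, gi in zip(w, gw)]
        b -= lr * gb / n
    return w, b


def sign(v):
    return (v > 0) - (v < 0)


def fgsm_pseudo_label(teacher, data, eps=0.3):
    """Assumed Step 2: x_adv = x + eps * sign(dLoss/dx), then labeled by the teacher."""
    w, _ = teacher
    second = []
    for x, y in data:
        err = predict_proba(teacher, x) - y
        # For logistic regression, dLoss/dx_i = err * w_i.
        x_adv = [xi + eps * sign(err * wi) for xi, wi in zip(x, w)]
        second.append((x_adv, predict(teacher, x_adv)))  # pseudo label from the teacher
    return second


def teacher_student(first_data, eps=0.3):
    teacher = train_logreg(first_data)                          # Step 1: labeled data -> teacher
    second_data = fgsm_pseudo_label(teacher, first_data, eps)   # Step 2: pseudo-labeled data
    student = train_logreg(first_data + second_data)            # Step 3: labeled + pseudo-labeled
    return teacher, second_data, student


first_data = [([0.0, 0.0], 0), ([0.5, 1.0], 0), ([1.0, 0.5], 0),
              ([3.0, 3.0], 1), ([3.5, 2.5], 1), ([2.5, 3.5], 1)]
teacher, second_data, student = teacher_student(first_data)
print(all(predict(teacher, x) == y for x, y in first_data))  # True
```

Because the pseudo labels come from the teacher rather than the original labels, inputs pushed across the teacher's decision boundary are relabeled accordingly, so the student is trained on the teacher's view of the perturbed neighborhood around each labeled point.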
