Video multi-target tracking method using convolutional neural network and bidirectional matching algorithm

The method applies a convolutional neural network to multi-target tracking and is classified under neural learning methods, biological neural network models, neural architectures, etc. It addresses time-consuming appearance feature extraction, difficult target tracking, and tracking interruption, and it aims to track many targets, improve training speed, and reduce tracking loss.

Embodiment Construction

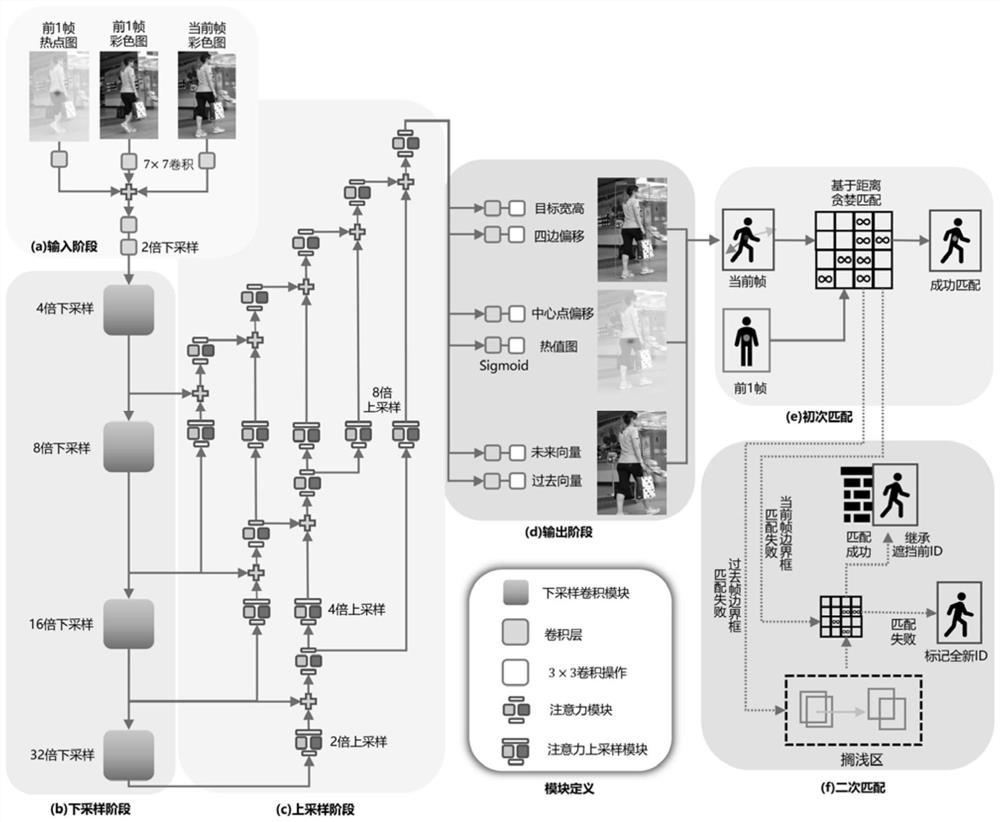

[0028] The present invention is further described below with reference to the accompanying drawings and specific embodiments. The video multi-target tracking method using a convolutional neural network and a bidirectional matching algorithm is implemented in the following steps:

[0029] (S1): Split the input video into video frames for model training and actual tracking. Model training requires the bounding box of each target and the ID of each target in the video; the same ID across video frames denotes the same target. During training, the image information of the current frame and the past frame is fed into the convolutional neural network. The center point of each target is also computed from its bounding box, and a two-dimensional Gaussian distribution is generated around that center point. Generate a target cen...
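The center-point and Gaussian step of S1 can be illustrated with a minimal sketch. The source text is truncated before it gives details, so everything specific here is an assumption made for illustration: the `(x_min, y_min, x_max, y_max)` box layout, the fixed `sigma`, the function names `box_center` and `center_heatmap`, and the choice to keep the per-pixel maximum where targets overlap. It is not the patent's stated implementation.

```python
import math
from typing import List, Tuple

# Assumed box layout (not specified in the source): (x_min, y_min, x_max, y_max)
Box = Tuple[float, float, float, float]


def box_center(box: Box) -> Tuple[float, float]:
    """Return the (cx, cy) center point of a bounding box."""
    x_min, y_min, x_max, y_max = box
    return (x_min + x_max) / 2.0, (y_min + y_max) / 2.0


def center_heatmap(
    boxes: List[Box], height: int, width: int, sigma: float = 2.0
) -> List[List[float]]:
    """Render one 2D Gaussian per target center onto a height x width map.

    sigma is a hypothetical fixed spread; where Gaussians overlap,
    the larger value is kept (an assumption, not from the source).
    """
    heatmap = [[0.0] * width for _ in range(height)]
    for box in boxes:
        cx, cy = box_center(box)
        for y in range(height):
            for x in range(width):
                dist_sq = (x - cx) ** 2 + (y - cy) ** 2
                value = math.exp(-dist_sq / (2.0 * sigma ** 2))
                if value > heatmap[y][x]:
                    heatmap[y][x] = value
    return heatmap


if __name__ == "__main__":
    # One target whose box is centered at pixel (4, 4) on a 9 x 9 map.
    hm = center_heatmap([(2, 2, 6, 6)], height=9, width=9)
    print(round(hm[4][4], 3), round(hm[4][5], 3))
```

The map peaks at each target's center and decays with distance, which gives the network a dense regression target rather than a single labeled pixel. In practice the spread would likely depend on the target size, but the source does not say how.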
