Three-dimensional image segmentation method based on double-path attention coding and decoding network

3D image and attention technology, applied in image analysis, image enhancement, image data processing, etc. It can solve problems such as limited semantic segmentation accuracy and insufficient consideration of pixel-to-pixel connections, so as to reduce false positives and false negatives, optimize image edge details, and improve the network's expressive ability.

Examples

Embodiment 1

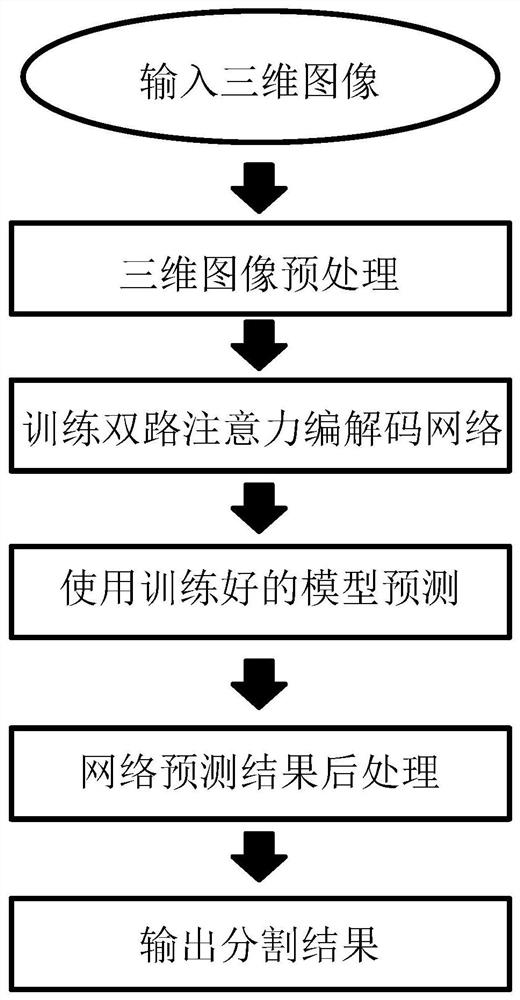

[0068] Referring to Figure 1, this embodiment provides a three-dimensional image segmentation method based on a two-way attention encoder-decoder network. The method constructs an efficient two-way attention encoder-decoder network structure, optimizes the network parameters with a boundary loss, and improves the network's segmentation accuracy on 3D image data.
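The text does not give the boundary loss formula. One common formulation weights the predicted foreground probabilities by a signed distance map of the ground-truth mask (negative inside the object, positive outside), so probability placed far outside the true boundary is penalized most. The sketch below assumes that formulation; `signed_distance_map` and `boundary_loss` are hypothetical names, and the distance map is computed by brute force, which is only practical for small volumes.

```python
import numpy as np

def signed_distance_map(mask):
    """Signed Euclidean distance to the object boundary: negative inside, positive outside."""
    mask = np.asarray(mask).astype(bool)
    sdf = np.zeros(mask.shape, dtype=np.float64)
    if mask.all() or not mask.any():
        return sdf  # no boundary exists, so the map stays at zero
    fg = np.argwhere(mask)   # (N_fg, ndim) foreground voxel coordinates
    bg = np.argwhere(~mask)  # (N_bg, ndim) background voxel coordinates
    # Brute-force pairwise distances between every background and every foreground voxel.
    d = np.sqrt(((bg[:, None, :] - fg[None, :, :]) ** 2).sum(axis=-1))
    sdf[tuple(bg.T)] = d.min(axis=1)   # outside: distance to the nearest foreground voxel
    sdf[tuple(fg.T)] = -d.min(axis=0)  # inside: minus distance to the nearest background voxel
    return sdf

def boundary_loss(probs, mask):
    """Mean of the predicted foreground probabilities weighted by the signed distance map."""
    return float(np.mean(np.asarray(probs, dtype=np.float64) * signed_distance_map(mask)))

if __name__ == "__main__":
    mask = np.zeros((6, 6, 6))
    mask[2:4, 2:4, 2:4] = 1  # a small cubic "organ"
    print(boundary_loss(mask, mask), boundary_loss(1 - mask, mask))
```

A perfect prediction yields a negative loss, while a prediction that puts its mass outside the object is penalized in proportion to the distance. In practice the distance map is usually precomputed once per label with a Euclidean distance transform rather than by brute force.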

[0069] The method of the present invention trains the model on a given type of three-dimensional medical image, obtains the model parameters for that type of data, and then produces high-precision segmentation predictions for similar data outside the training samples. The method includes the following steps:

[0070] (1) Randomly crop the original training images into smaller image blocks, preprocess the blocks to obtain clearer images, and save the preprocessed data locally;

[0071] (2) Build a two-way attention-based encoding and decoding network, input the training set data into the netw...
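Step (1) can be sketched as a random 3-D crop that takes the same block from an image volume and its label volume, so the two stay aligned. The function name `random_crop_3d`, the (depth, height, width) axis order, and the pairing with a label volume are assumptions for illustration; the block size in the usage lines is the one stated in Embodiment 2.

```python
import numpy as np

def random_crop_3d(volume, label, size, rng):
    """Crop the same randomly placed (d, h, w) block from an image volume and its label volume."""
    if volume.shape != label.shape:
        raise ValueError("volume and label must have the same shape")
    starts = []
    for dim, s in zip(volume.shape, size):
        if s > dim:
            raise ValueError(f"crop size {s} exceeds volume dimension {dim}")
        # integers(low, high) excludes high, so start ranges over 0 .. dim - s inclusive
        starts.append(int(rng.integers(0, dim - s + 1)))
    window = tuple(slice(st, st + s) for st, s in zip(starts, size))
    return volume[window], label[window]

if __name__ == "__main__":
    rng = np.random.default_rng(0)
    vol = rng.random((40, 256, 256))    # stand-in for a real 3-D scan
    lab = (vol > 0.5).astype(np.uint8)  # stand-in label volume
    img, seg = random_crop_3d(vol, lab, (12, 224, 244), rng)
    print(img.shape, seg.shape)
```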

Embodiment 2

[0076] This embodiment is basically the same as Embodiment 1, with the following distinguishing features:

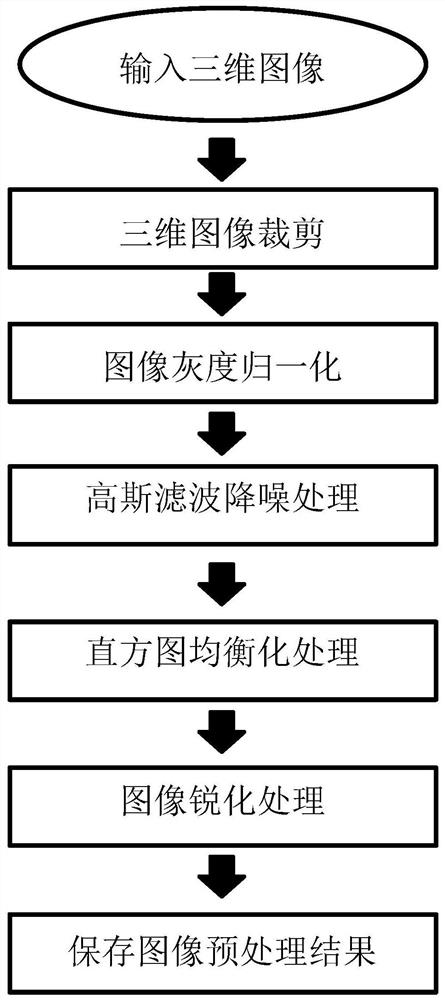

[0077] In this embodiment, as shown in Figure 2, image preprocessing includes the following steps:

[0078] (1-1) Cut the three-dimensional image data into image blocks of 12×224×244 pixels;

[0079] (1-2) Determine whether the image block is a grayscale image; if it is not, convert it to a grayscale image using a normalization algorithm;

[0080] (1-3) Use Gaussian filtering to remove noise from the image;

[0081] (1-4) Use histogram equalization to stretch the gray-level distribution of the image and enhance its contrast;

[0082] (1-5) Use the Laplacian operator to sharpen image edges, emphasizing abrupt gray-level changes in the image and de-emphasizing regions where the gray level changes slowly;

[0083] (1-6) Divide and save the preprocessed image data.
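Steps (1-2) to (1-5) can be sketched as a slice-by-slice NumPy pipeline. The patent does not specify the parameters, so the following are assumptions: "normalization" is taken to be min-max scaling to [0, 1] (with BT.601 luma weights if an RGB channel axis is present), the Gaussian filter uses sigma = 1, equalization uses 256 bins, and sharpening subtracts a 4-neighbour Laplacian. All function names are hypothetical.

```python
import numpy as np

def to_grayscale(block):
    """Step (1-2), assumed form: collapse RGB to luminance if present, then min-max normalise."""
    block = block.astype(np.float64)
    if block.ndim == 4 and block.shape[-1] == 3:  # assumed (D, H, W, 3) colour layout
        block = block @ np.array([0.299, 0.587, 0.114])
    lo, hi = block.min(), block.max()
    return (block - lo) / (hi - lo) if hi > lo else np.zeros_like(block)

def gaussian_blur(img, sigma=1.0):
    """Step (1-3): separable 2-D Gaussian filter with reflected borders."""
    radius = max(1, int(3 * sigma))
    x = np.arange(-radius, radius + 1, dtype=np.float64)
    k = np.exp(-(x ** 2) / (2 * sigma ** 2))
    k /= k.sum()
    p = np.pad(img, radius, mode="reflect")
    rows = np.apply_along_axis(lambda r: np.convolve(r, k, mode="valid"), 1, p)
    return np.apply_along_axis(lambda c: np.convolve(c, k, mode="valid"), 0, rows)

def equalize_histogram(img, bins=256):
    """Step (1-4): map each intensity in [0, 1] through the normalised cumulative histogram."""
    hist, edges = np.histogram(img, bins=bins, range=(0.0, 1.0))
    cdf = hist.cumsum().astype(np.float64)
    cdf /= cdf[-1]
    centers = (edges[:-1] + edges[1:]) / 2
    return np.interp(img, centers, cdf)

def laplacian_sharpen(img, weight=1.0):
    """Step (1-5): subtract the 4-neighbour Laplacian to boost abrupt gray-level changes."""
    p = np.pad(img, 1, mode="edge")
    lap = p[:-2, 1:-1] + p[2:, 1:-1] + p[1:-1, :-2] + p[1:-1, 2:] - 4.0 * img
    return np.clip(img - weight * lap, 0.0, 1.0)

def preprocess_block(block, sigma=1.0):
    """Apply steps (1-2) to (1-5), processing each depth slice as a 2-D image."""
    vol = to_grayscale(block)
    out = np.empty_like(vol)
    for z in range(vol.shape[0]):
        s = np.clip(gaussian_blur(vol[z], sigma), 0.0, 1.0)
        out[z] = laplacian_sharpen(equalize_histogram(s))
    return out
```

Processing each slice in 2-D keeps the sketch simple; a 3-D Gaussian or Laplacian kernel would be an equally plausible reading of the steps.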

[0084] In this embodiment, image preprocessing is performed, and the data is stored lo...

Embodiment 3

[0086] This embodiment is basically the same as Embodiment 2, with the following distinguishing features:

[0087] In this embodiment, the two-way attention encoder-decoder network includes three sub-network modules: (a) an encoder network, (b) a two-way attention network, and (c) a decoder network. The encoder is built from residual blocks, max pooling, average pooling, and dual-path blocks, and its construction includes the following steps:

[0088] (2-1-1) Use a residual block as the first layer of the encoder so that it can accept inputs of different data dimensions, and use max pooling to reduce the dimensionality of the first layer's output;

[0089] (2-1-2) Use two dual-path blocks in the second layer of the encoder to extract low-level texture features of the image, and use max pooling to reduce the dimensionality of the second layer's output;

[0090] (2-1-3) Use 3 dual-path blocks in the third layer of the encoder to explore the advanced abstra...
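The first two encoder layers can be sketched in NumPy to show how the tensor shapes evolve. The text does not define the dual-path block, so the sketch assumes it follows the Dual Path Networks (DPN) idea: a residual path updated by element-wise addition plus a densely connected path that grows by channel concatenation. To stay short, every convolution is a 1×1×1 (pointwise) convolution with random weights; a real block would use learned 3×3×3 convolutions. Channel counts (16, 8) and all names are hypothetical.

```python
import numpy as np

def relu(x):
    return np.maximum(x, 0.0)

def pointwise_conv(x, w):
    """1x1x1 convolution: x has shape (C_in, D, H, W), w has shape (C_out, C_in)."""
    return np.einsum("oc,cdhw->odhw", w, x)

def max_pool3d(x, k=(2, 2, 2)):
    """Non-overlapping 3-D max pooling; trailing voxels that do not fill a window are dropped."""
    kd, kh, kw = k
    c, d, h, w = x.shape
    x = x[:, : d - d % kd, : h - h % kh, : w - w % kw]
    return x.reshape(c, d // kd, kd, h // kh, kh, w // kw, kw).max(axis=(2, 4, 6))

class ResidualBlock:
    """Two pointwise convolutions plus a projection shortcut, so any input channel count works."""
    def __init__(self, c_in, c_out, rng):
        self.w1 = rng.standard_normal((c_out, c_in)) * 0.1
        self.w2 = rng.standard_normal((c_out, c_out)) * 0.1
        self.ws = rng.standard_normal((c_out, c_in)) * 0.1  # shortcut projection

    def __call__(self, x):
        h = relu(pointwise_conv(x, self.w1))
        return relu(pointwise_conv(h, self.w2) + pointwise_conv(x, self.ws))

class DualPathBlock:
    """Assumed DPN-style block: the first res_ch channels are a residual path (addition);
    the remaining channels are a dense path that grows by dense_ch (concatenation)."""
    def __init__(self, c_in, res_ch, dense_ch, rng):
        assert c_in >= res_ch
        self.res_ch = res_ch
        self.w = rng.standard_normal((res_ch + dense_ch, c_in)) * 0.1

    def __call__(self, x):
        y = relu(pointwise_conv(x, self.w))
        res = x[: self.res_ch] + y[: self.res_ch]                    # residual path
        dense = np.concatenate([x[self.res_ch :], y[self.res_ch :]])  # dense path
        return np.concatenate([res, dense])

def encoder_layers_1_2(x, rng):
    """Steps (2-1-1) and (2-1-2): residual layer + pooling, then two dual-path blocks + pooling."""
    layer1 = max_pool3d(ResidualBlock(x.shape[0], 16, rng)(x))
    dp1 = DualPathBlock(16, res_ch=16, dense_ch=8, rng=rng)  # 16 -> 24 channels
    dp2 = DualPathBlock(24, res_ch=16, dense_ch=8, rng=rng)  # 24 -> 32 channels
    layer2 = max_pool3d(dp2(dp1(layer1)))
    return layer1, layer2

if __name__ == "__main__":
    rng = np.random.default_rng(0)
    x = rng.standard_normal((1, 8, 32, 32))  # (channels, depth, height, width)
    l1, l2 = encoder_layers_1_2(x, rng)
    print(l1.shape, l2.shape)
```

With a single-channel 8×32×32 input, the first layer yields 16 channels at 4×16×16 and the second yields 32 channels at 2×8×8: each dual-path block keeps the residual width fixed while the dense path adds 8 channels.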

© 2024 PatSnap. All rights reserved.