Voiceprint recognition adversarial sample generation method based on boundary attack

Adversarial sample and voiceprint recognition technology, applied to countermeasures against attacks on encryption mechanisms, speech analysis, instruments, etc. It addresses problems such as classification errors, stolen models, and the unavailability of model information, achieving improved accuracy, wide applicability, and a high attack success rate.

Pending Publication Date: 2021-10-29

ZHEJIANG UNIV OF TECH



AI Technical Summary

Problems solved by technology

1. Model stealing: hackers use various advanced means to steal the model files deployed on servers.

2. Data poisoning: data poisoning in deep learning mainly refers to adding abnormal data to the training samples, causing the model to make classification errors under certain conditions. For example, a backdoor attack adds a backdoor flag to the training data, which poisons the model.

[0003] To generate adversarial examples, most methods rely either on detailed model information (gradient-based attacks) or on confidence scores such as class probabilities (score-based attacks). Neither kind of information is available in most real-world scenarios.
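To make the label-only (decision-based) setting concrete, here is a minimal sketch of what the attacker can access: the model is queried, but only its top-1 label comes back, never gradients or scores. `LabelOnlyOracle` and `score_fn` are hypothetical names used for illustration, not part of the patent.

```python
import numpy as np


class LabelOnlyOracle:
    """Hypothetical wrapper that exposes only a model's top-1 label.

    `score_fn` stands in for a deployed voiceprint model; its scores are
    used internally but never returned to the caller (the attacker).
    """

    def __init__(self, score_fn):
        self._score_fn = score_fn
        self.queries = 0  # number of model queries made so far

    def label(self, x):
        self.queries += 1
        return int(np.argmax(self._score_fn(np.asarray(x, dtype=float))))

    def is_adversarial(self, x, true_label):
        # Untargeted criterion: any predicted speaker other than the true one.
        return self.label(x) != true_label
```

For example, with a toy 3-class linear model `W = np.array([[1.0, 0.0], [0.0, 1.0], [-1.0, -1.0]])` and `oracle = LabelOnlyOracle(lambda x: W @ x)`, `oracle.label([2.0, 0.5])` returns `0`; the scores `[2.0, 0.5, -2.5]` that produced it stay hidden from the attacker.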


Examples


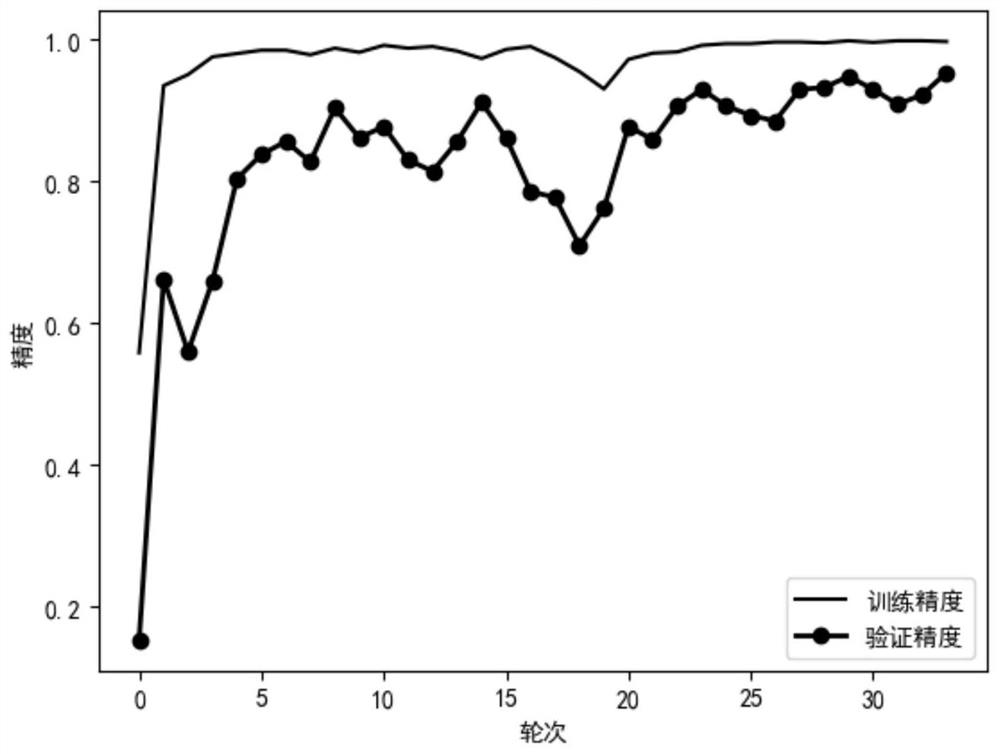

[0085] Example: Data from an actual experiment

[0086] Step 1: Select experimental data.


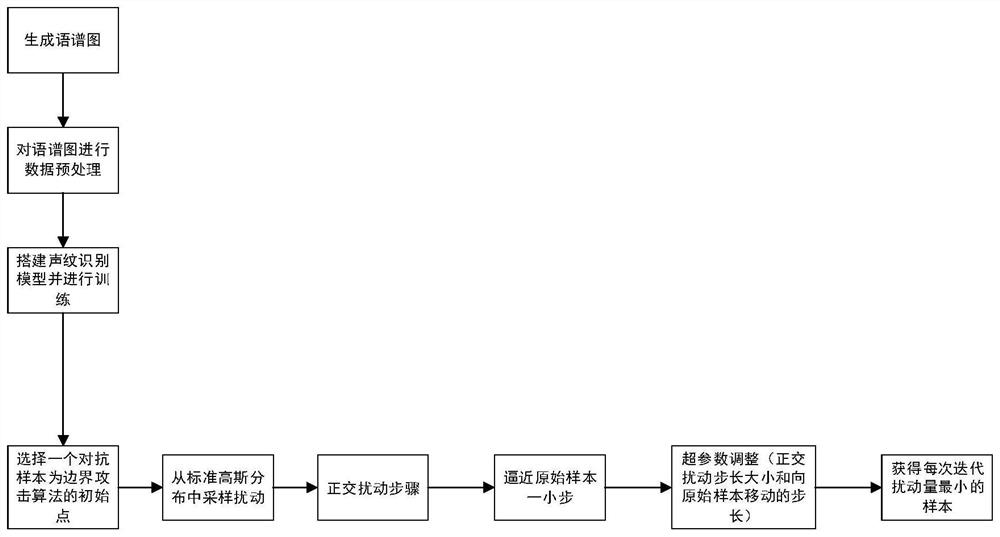

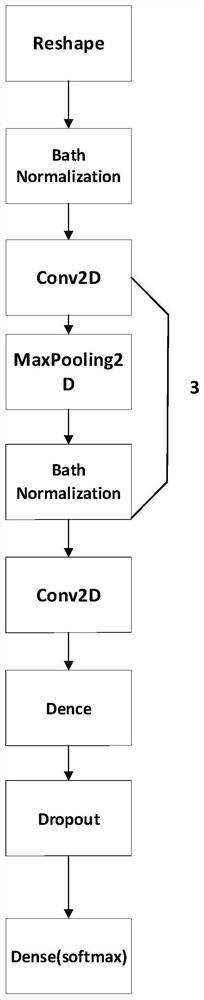

The invention discloses a voiceprint recognition adversarial sample generation method based on boundary attack. The method comprises the following steps: 1) preprocessing the voice dataset; 2) building a voiceprint recognition model; and 3) generating adversarial samples with a boundary attack algorithm, which involves selecting the initial point of the boundary attack, selecting the walking direction, and adjusting the hyperparameters. For voiceprint identity classification, the method does not use traditional acoustic features; instead, it converts speech into spectrograms for training, which fully exploits the strength of convolutional neural networks at extracting features from images and greatly improves accuracy. The method is a black-box attack: it needs neither the structure nor the parameters of the original model, only the model's classification labels, so it has a wider range of application and greater practical significance. The attack success rate is high, and the generated adversarial samples cannot be perceived by the naked eye.
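Step 3 (initial point, walking direction, hyperparameters) matches the general shape of the boundary attack of Brendel et al. (2018). The sketch below is a minimal NumPy illustration under several assumptions, not the patent's implementation: an untargeted L2 attack, a uniform-noise initial point, the step-size constants (0.9/1.1 and the caps), and per-step step-size adaptation in place of the success-rate statistics used in the original paper. `boundary_attack` and `random_initial_point` are hypothetical names, and `x` could be a spectrogram or a waveform; the source does not say which the attack perturbs.

```python
import numpy as np


def random_initial_point(is_adv, shape, rng, low=-1.0, high=1.0, max_tries=100):
    """Assumed initialization: uniform noise that the model already
    misclassifies (an utterance from another speaker is another option)."""
    for _ in range(max_tries):
        x = rng.uniform(low, high, size=shape)
        if is_adv(x):
            return x
    raise RuntimeError("no adversarial initial point found")


def boundary_attack(is_adv, x_orig, x_init, steps=1000, delta=0.1, eps=0.1, seed=0):
    """Untargeted boundary attack sketch (after Brendel et al., 2018).

    is_adv(x) -> bool is the only access to the model, so only the
    predicted label is used. x_init must already be adversarial.
    """
    rng = np.random.default_rng(seed)
    x_orig = np.asarray(x_orig, dtype=float)
    x_adv = np.asarray(x_init, dtype=float).copy()
    if not is_adv(x_adv):
        raise ValueError("x_init must be adversarial")

    for _ in range(steps):
        dist = np.linalg.norm(x_adv - x_orig)
        # 1) Walking direction: a random perturbation of size delta * dist,
        #    rescaled back onto the sphere of radius dist around x_orig.
        eta = rng.standard_normal(x_orig.shape)
        eta *= delta * dist / np.linalg.norm(eta)
        moved = x_adv + eta - x_orig
        sphere = x_orig + moved * (dist / np.linalg.norm(moved))
        if not is_adv(sphere):
            delta *= 0.9  # left the adversarial region: smaller orthogonal steps
            continue
        delta = min(delta * 1.1, 1.0)
        # 2) Step toward the original: shrinks the distance by a factor (1 - eps).
        candidate = sphere + eps * (x_orig - sphere)
        if is_adv(candidate):
            x_adv = candidate
            eps = min(eps * 1.1, 0.5)
        else:
            eps *= 0.9
    return x_adv
```

On a toy label-only model with `is_adv = lambda x: x[0] < 0.0`, starting from `x_init = [-3.0, 4.0]` against `x_orig = [1.0, 0.0]`, every accepted step shrinks the distance to `x_orig` by a factor of `(1 - eps)` while staying adversarial, so the result moves toward the decision boundary, which lies at distance 1.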

Description

Technical field

[0001] The invention belongs to the field of deep learning security and relates to a method for generating adversarial samples for voiceprint recognition based on boundary attacks.

Background technology

[0002] With the rapid development of deep learning, it has become one of the most common artificial intelligence technologies, affecting and changing people's lives in all aspects. Typical applications include smart homes, intelligent driving, speech recognition, and voiceprint recognition. However, as a very complex software system, deep learning also faces various hacker attacks. Through deep learning systems, hackers can threaten property safety, personal privacy, traffic safety, and announcement safety. Attacks against deep learning systems usually include the following. 1. Model stealing: hackers steal the model files deployed on servers through various advanced means. 2. Data poisoning. Data poisoning for deep learni...


Patent Type & Authority: Applications (China)

IPC(8): G10L17/02, G10L17/04, G10L17/18, G10L21/14, H04L9/00

CPC: G10L17/02, G10L17/04, G10L17/18, G10L21/14, H04L9/002

Inventors: 徐东伟 (Xu Dongwei), 蒋斌 (Jiang Bin), 房若尘 (Fang Ruochen), 顾淳涛 (Gu Chuntao), 杨浩 (Yang Hao), 宣琦 (Xuan Qi)

Owner: ZHEJIANG UNIV OF TECH
