Cross-view gait recognition method based on block horizontal pyramid spatial-temporal feature fusion model and gait reordering

A technology involving spatio-temporal features and fusion models, applied in the fields of deep learning and pattern recognition. It addresses the problem that gait recognition has not been introduced, and achieves an improved recognition rate and robustness, improved matching accuracy, and low computational complexity.


Embodiment 1

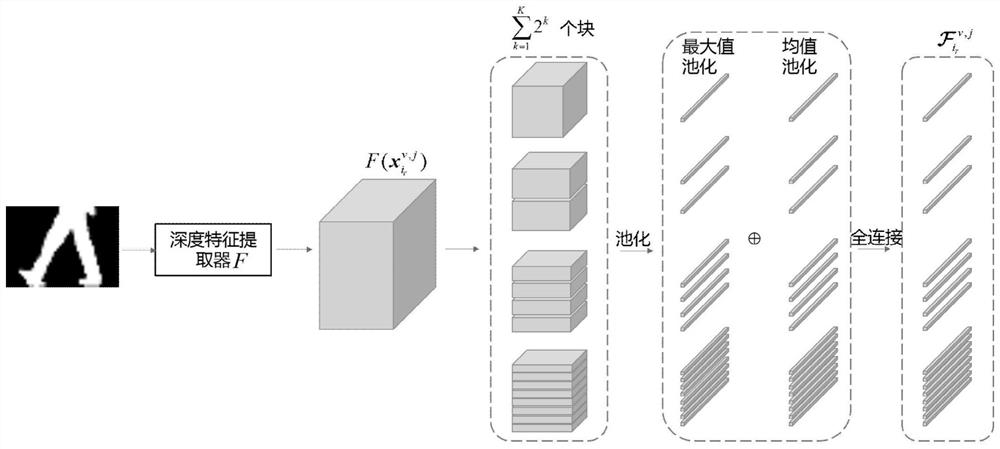

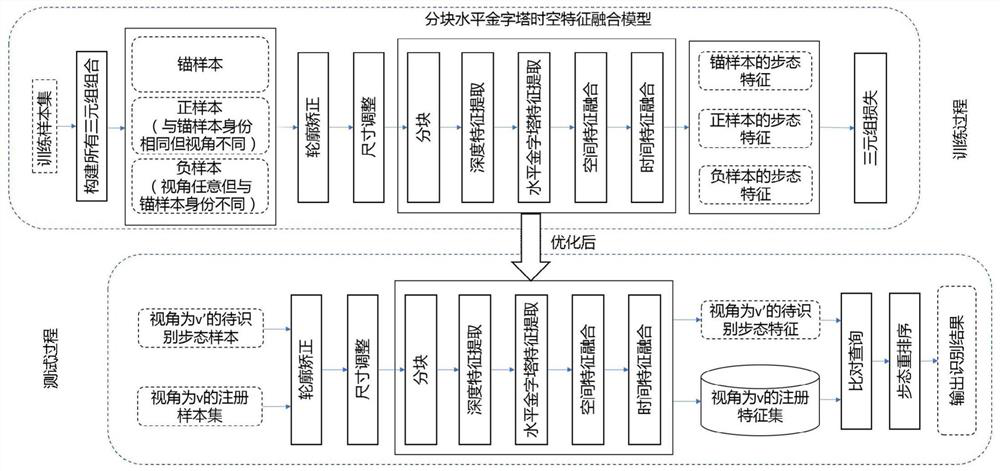

[0061] A cross-view gait recognition method based on a block horizontal pyramid spatio-temporal feature fusion model and gait reordering, comprising the following steps:

[0062] (1) Obtain the training sample set and construct triplet combinations. Each triplet contains an anchor sample, a positive sample, and a negative sample. The anchor sample is taken from a certain view. The positive sample has the same identity as the anchor sample but a different view. The negative sample may come from any view, but its identity differs from that of the anchor sample, as shown in Figure 1:
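
A minimal Python sketch of the triplet construction rules in step (1), assuming each training sample is a record carrying an identity label and a view label. The `GaitSample` type and `build_triplets` function are hypothetical names, and uniform random sampling of anchors, positives, and negatives is an assumption; the text does not say how triplets are drawn.

```python
import random
from typing import Any, List, NamedTuple, Tuple


class GaitSample(NamedTuple):
    """Hypothetical record for one training gait sequence."""
    identity: int  # pedestrian ID
    view: int      # camera view label (e.g. angle in degrees)
    data: Any      # silhouette sequence; a placeholder in this sketch


Triplet = Tuple[GaitSample, GaitSample, GaitSample]


def build_triplets(samples: List[GaitSample], num_triplets: int,
                   seed: int = 0) -> List[Triplet]:
    """Draw (anchor, positive, negative) triplets under the step (1) rules.

    positive: same identity as the anchor, different view
    negative: different identity, any view
    Uniform random sampling is an assumption of this sketch.
    """
    rng = random.Random(seed)
    candidates = []
    for anchor in samples:
        positives = [s for s in samples
                     if s.identity == anchor.identity and s.view != anchor.view]
        negatives = [s for s in samples if s.identity != anchor.identity]
        if positives and negatives:  # an anchor is usable only if both exist
            candidates.append((anchor, positives, negatives))
    if not candidates:
        raise ValueError("no sample has both a cross-view positive and a negative")

    triplets = []
    for _ in range(num_triplets):
        anchor, positives, negatives = rng.choice(candidates)
        triplets.append((anchor, rng.choice(positives), rng.choice(negatives)))
    return triplets


if __name__ == "__main__":
    # Toy data: 3 pedestrians, each seen from 3 views.
    toy = [GaitSample(pid, view, None) for pid in range(3) for view in (0, 90, 180)]
    for a, p, n in build_triplets(toy, num_triplets=3):
        print(a.identity, a.view, "|", p.identity, p.view, "|", n.identity, n.view)
```

Requiring the positive to come from a different view is what pushes the model toward view-invariant features. The quadratic scan over samples keeps the sketch short; a real data loader would index samples by identity instead.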

[0063] A. Preprocess the gait silhouettes: correct each gait silhouette to eliminate the interference caused by differing distances between the pedestrian and the camera, then resize the corrected silhouettes.
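
The exact correction procedure is not given at this point in the text. As a hedged illustration, the sketch below uses a silhouette normalization that is common in gait recognition pipelines: crop the silhouette to its bounding box, scale it to a fixed height while keeping its aspect ratio, and center it horizontally on the foreground centroid. A pedestrian far from the camera (small silhouette) and one close to it (large silhouette) then map to the same output. The 64×44 output size, nearest-neighbour resampling, and the function name `normalize_silhouette` are assumptions, not values from the source.

```python
import numpy as np


def normalize_silhouette(sil: np.ndarray, out_h: int = 64, out_w: int = 44) -> np.ndarray:
    """Height-normalize and horizontally center one binary silhouette frame.

    Assumed procedure (not specified in the source): crop to the bounding box,
    resize to out_h rows keeping the aspect ratio, center on the column centroid.
    Returns a uint8 image with foreground 255 and background 0.
    """
    empty = np.zeros((out_h, out_w), dtype=np.uint8)
    mask = sil > 0
    rows = np.flatnonzero(mask.any(axis=1))
    cols = np.flatnonzero(mask.any(axis=0))
    if rows.size == 0:
        return empty  # no pedestrian in this frame
    body = mask[rows[0]:rows[-1] + 1, cols[0]:cols[-1] + 1]  # tight bounding box
    h, w = body.shape

    # Scale so the body spans exactly out_h rows; width follows the aspect ratio.
    new_w = max(1, int(round(w * out_h / h)))
    row_idx = np.arange(out_h) * h // out_h  # nearest-neighbour sampling
    col_idx = np.arange(new_w) * w // new_w
    scaled = body[row_idx][:, col_idx]
    if not scaled.any():
        return empty  # very thin shapes can vanish when downsampled

    # Shift so the horizontal centroid of the foreground lands in the middle column.
    cx = int(round(np.nonzero(scaled)[1].mean()))
    src = np.arange(out_w) + (cx - out_w // 2)  # source column for each output column
    valid = (src >= 0) & (src < new_w)          # columns outside the body stay 0
    out = empty.copy()
    out[:, valid] = np.where(scaled[:, src[valid]], 255, 0)
    return out
```

A function like this would be applied to every frame of a sequence before feature extraction. Silhouettes whose scaled width exceeds `out_w` are clipped at the edges, which is a limitation of this sketch rather than of the described method.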

[0064] Given a gait dataset containing N pedestrians a...
