Object semantics and deep appearance feature fusion-based scene identification method

A scene recognition technique based on feature fusion, applied in the field of image processing. It addresses the decline in recognition performance caused by large differences within indoor scenes and small differences between classes, improving recognition accuracy and robustness.

Embodiment 1

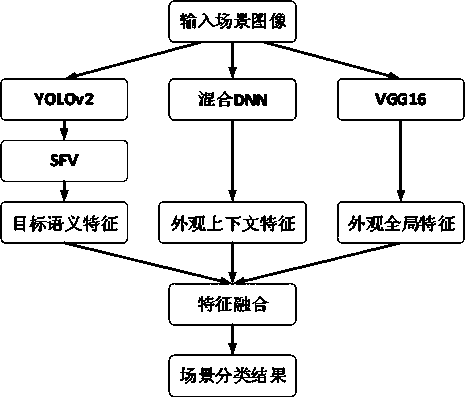

[0031] Referring to Figure 1, this embodiment provides a scene recognition method based on the fusion of target semantics and deep appearance features. The specific steps are:

[0032] Obtain the scene image to be recognized;

[0033] Extract the target semantic information of the scene image, and generate target semantic features that preserve spatial layout information;

[0034] Extract the appearance context information of the scene image, and generate appearance context features;

[0035] Extract the global appearance information of the scene image, and generate global appearance features;
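
The steps above can be illustrated with a minimal sketch. The excerpt does not state how each feature is computed or fused, so everything below is an assumption made for illustration: the target semantic feature is approximated by class histograms pooled over a coarse-to-fine grid (one common way to keep spatial layout), the input `label_map` is a hypothetical per-pixel class map from some detector or segmenter, the appearance context and global appearance features are stand-in vectors, and fusion is plain concatenation of L2-normalized features. All function names are hypothetical.

```python
from collections import Counter
from math import sqrt


def region_histogram(label_map, rows, cols, num_classes):
    """Normalized class-frequency histogram over one rectangular region."""
    counts = Counter(label_map[r][c] for r in rows for c in cols)
    total = len(rows) * len(cols)
    return [counts.get(k, 0) / total for k in range(num_classes)]


def semantic_layout_feature(label_map, num_classes, grids=(1, 2)):
    """Concatenate per-cell class histograms over coarse-to-fine grids.

    Splitting the image into g x g cells records roughly where each
    object class appears, not only how often it appears.
    Assumes the map has at least max(grids) rows and columns.
    """
    height, width = len(label_map), len(label_map[0])
    feature = []
    for g in grids:
        for i in range(g):
            rows = range(i * height // g, (i + 1) * height // g)
            for j in range(g):
                cols = range(j * width // g, (j + 1) * width // g)
                feature.extend(
                    region_histogram(label_map, rows, cols, num_classes)
                )
    return feature


def l2_normalize(vec):
    """Scale a vector to unit length; an all-zero vector is returned as-is."""
    norm = sqrt(sum(v * v for v in vec))
    return [v / norm for v in vec] if norm > 0 else list(vec)


def fuse(*features):
    """Fuse features by L2-normalizing each and concatenating (assumed)."""
    fused = []
    for f in features:
        fused.extend(l2_normalize(f))
    return fused


# Hypothetical 4x4 label map with 3 object classes.
label_map = [
    [0, 0, 1, 1],
    [0, 0, 1, 1],
    [2, 2, 2, 2],
    [2, 2, 2, 2],
]
semantic = semantic_layout_feature(label_map, num_classes=3)

# Stand-ins for the appearance context and global appearance features,
# which in practice would come from a deep network.
context = [3.0, 4.0]
global_appearance = [1.0, 0.0, 0.0]

fused = fuse(semantic, context, global_appearance)
```

Normalizing each feature before concatenation keeps one feature type from dominating the fused vector purely because of its scale; a classifier would then be trained on `fused`.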
