Face motion synthesis method based on voice driving, electronic equipment and storage medium

A face motion and voice signal technology, applied in the field of computer information, can solve the problems of difficult target data migration, stiff face motion effect, and failure to achieve one-time training, etc., to achieve vivid and lifelike effects

- Summary

- Abstract

- Description

- Claims

- Application Information

AI Technical Summary

Problems solved by technology

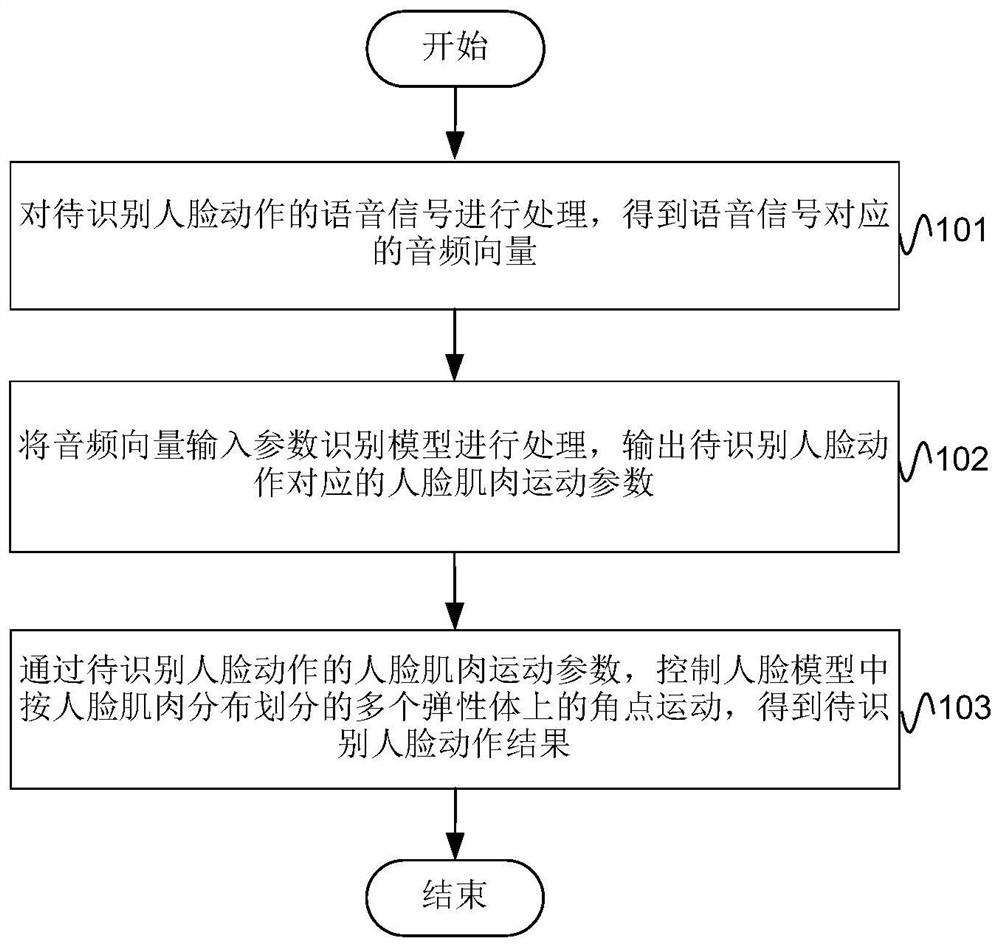

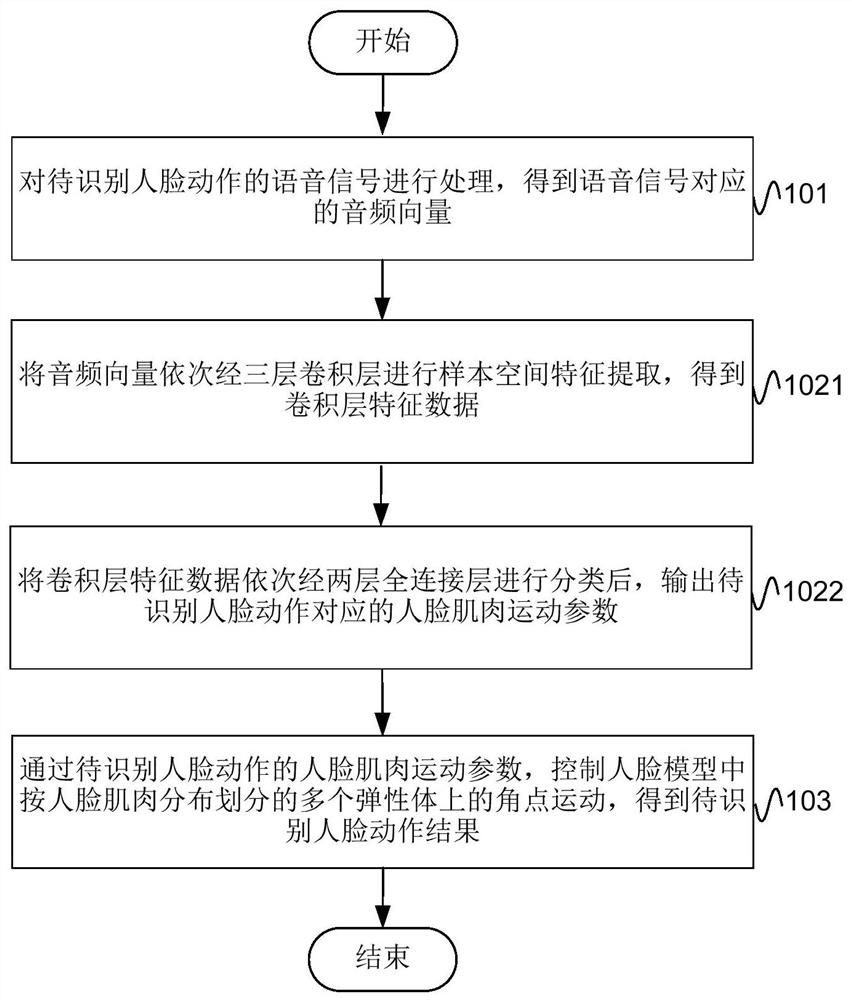

Method used

Image

Examples

Embodiment Construction

[0022] In order to make the purpose, technical solutions and advantages of the embodiments of the present invention more clear, various implementation modes of the present invention will be described in detail below in conjunction with the accompanying drawings. However, those of ordinary skill in the art can understand that, in each implementation manner of the present invention, many technical details are provided for readers to better understand the present application. However, even without these technical details and various changes and modifications based on the following implementation modes, the technical solution claimed in this application can also be realized.

[0023] In the existing speech-driven facial motion synthesis schemes, the mouth movement is mainly driven by speech. For example, let the virtual character say "the weather is really nice today", then while the voice is playing, the mouth movement of the virtual character should be basically the same as that...

PUM

Login to View More

Login to View More Abstract

Description

Claims

Application Information

Login to View More

Login to View More - R&D

- Intellectual Property

- Life Sciences

- Materials

- Tech Scout

- Unparalleled Data Quality

- Higher Quality Content

- 60% Fewer Hallucinations

Browse by: Latest US Patents, China's latest patents, Technical Efficacy Thesaurus, Application Domain, Technology Topic, Popular Technical Reports.

© 2025 PatSnap. All rights reserved.Legal|Privacy policy|Modern Slavery Act Transparency Statement|Sitemap|About US| Contact US: help@patsnap.com