Method for generating Gibbs-restricted text summaries using a pre-trained model

A technique combining a pre-trained model with a Gibbs restriction, applied in neural learning methods, biological neural network models, unstructured text data retrieval, etc. Its stated effects are unified prediction, improved generation quality, and reduced training bias.

Examples

Embodiment 1

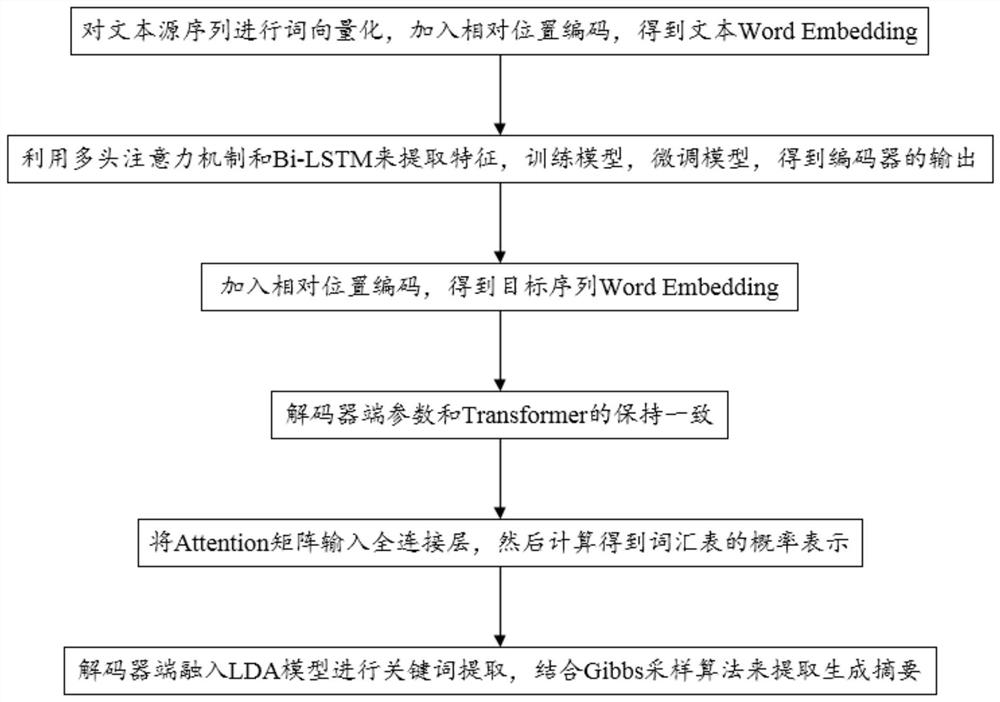

[0047] As shown in Figure 1, this embodiment provides a method for generating a Gibbs-restricted text summary using a pre-trained model. The method trains a Trans-BLSTM model to generate text summaries. In the Trans-BLSTM model, the FFN layer is replaced by a Bi-LSTM connected to a Linear layer, while the decoder part remains unchanged. The training process of the Trans-BLSTM model is as follows:

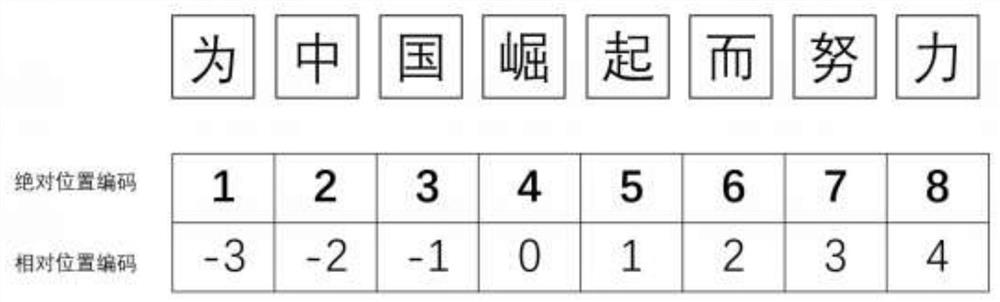

[0048] (1) First, use the pre-trained language model BERT to convert the source sequence of the text, $x = \{x_1, x_2, \ldots, x_n\}$, into word vectors, adding relative position encoding at the same time, to obtain the word embedding of the text;

[0049] (2) In the encoder stage, use the multi-head attention mechanism and the Bi-LSTM to extract features, train and fine-tune the model, and obtain the output of the encoder;

[0050] (3) Using the same word-embedding method as for the source sequence on the encoder side, add relative position encoding to obtain the target seque...
