Method, device and equipment for identifying baby cry category through multi-feature fusion

A multi-feature fusion technology for identifying infant cry categories, applied in neural network learning methods, character and pattern recognition, speech analysis, etc. It addresses the low accuracy of existing cry-category identification, improving accuracy and reducing misjudgment in cry detection.



Examples

Embodiment 1

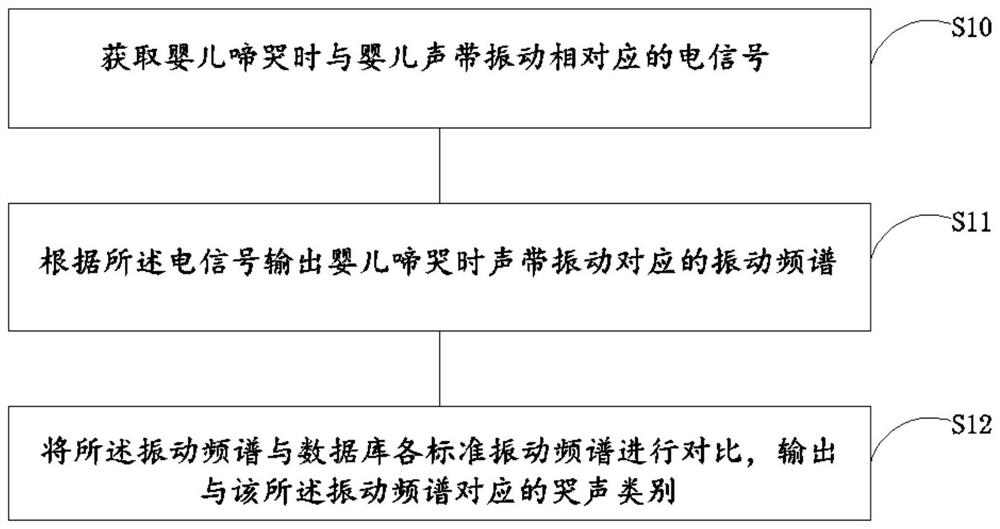

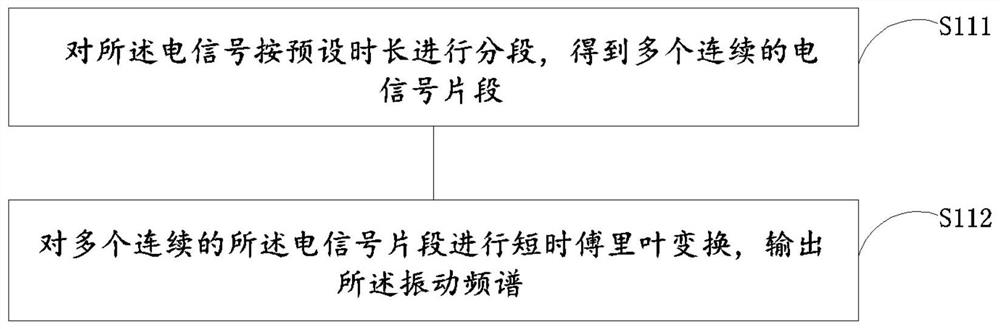

[0075] Refer to Figure 1, which is a schematic flow chart of the method for identifying the baby cry category in Embodiment 1 of the present invention. The method includes:

[0076] S10: Obtain the electrical signal corresponding to the vibration of the baby's vocal cords when the baby is crying;

[0077] Specifically, when it is determined that the baby is crying, the electrical signal generated by the vocal cord vibration is obtained. This electrical signal may be converted from the vibration parameters of the vocal cords or from an optical image signal; the vibration signal is continuous and non-stationary. It should be noted that the vocal cord vibration can be collected by a piezoelectric sensor, and the vibration parameters can also be obtained by other components, such as infrared, radar waves, or video captured by a camera.
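The text does not say how the vibration spectrum is computed from this signal. Because the signal is described as continuous and non-stationary, one common approach is short-time spectral analysis: split the signal into overlapping windowed frames and take the magnitude FFT of each. The sketch below assumes a uniformly sampled 1-D signal; the helper name `vibration_spectrum` and the `frame_len`/`hop` values are hypothetical illustrations, not parameters from the patent.

```python
import numpy as np

def vibration_spectrum(signal, sample_rate, frame_len=256, hop=128):
    """Short-time magnitude spectrum of a vibration signal (hypothetical helper).

    frame_len and hop are illustrative assumptions, not values from the patent.
    Returns (freqs, spectrum) with spectrum shaped (n_frames, frame_len // 2 + 1).
    """
    signal = np.asarray(signal, dtype=float)
    if signal.size < frame_len:
        # Zero-pad very short recordings so at least one full frame exists.
        signal = np.pad(signal, (0, frame_len - signal.size))
    n_frames = 1 + (signal.size - frame_len) // hop
    window = np.hanning(frame_len)  # taper frame edges to reduce spectral leakage
    frames = np.stack([
        signal[i * hop : i * hop + frame_len] * window for i in range(n_frames)
    ])
    spectrum = np.abs(np.fft.rfft(frames, axis=1))  # one-sided magnitude per frame
    freqs = np.fft.rfftfreq(frame_len, d=1.0 / sample_rate)
    return freqs, spectrum


# Usage: a synthetic 400 Hz vibration sampled at 8 kHz for one second.
sr = 8000
t = np.arange(sr) / sr
freqs, spec = vibration_spectrum(np.sin(2 * np.pi * 400 * t), sr)
peak_hz = freqs[spec.mean(axis=0).argmax()]
print(spec.shape, peak_hz)
```

For this synthetic input the spectrum has 61 frames by 129 frequency bins, and its time-averaged peak lies within one bin of 400 Hz. Short frames trade frequency resolution (here 8000 / 256 = 31.25 Hz per bin) for the time resolution a non-stationary signal needs.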

[0078] S11: out...

Embodiment 2

[0117] In Embodiment 1, the cry category is determined from the vibration parameters corresponding to the vocal cord vibration. Because the baby's vocal cords are at an early stage of development, differences in vocal cord vibration are small and the collected vibration parameters have low accuracy, which ultimately reduces the accuracy of cry-category detection. Therefore, Embodiment 2 of the present invention builds on Embodiment 1 by additionally analyzing the audio signal produced by the baby's cry. Referring to Figure 7, the method includes:

[0118] S20: Obtain the audio features of the baby's cry and the vibration spectrum corresponding to the vibration of the baby's vocal cords;

[0119] Specifically, when the baby is crying, the audio signal containing the crying sound and the vibration parameters of the corresponding vocal cords are collected; the audio features are obtained by processing the audio signal, and the v...
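The excerpt is cut off before it states which audio features are extracted or how they are combined with the vibration spectrum. Below is a minimal sketch of one common option, feature-level (early) fusion: per-frame audio features are averaged over time, each modality is scaled to unit L2 norm so neither dominates by magnitude, and the two vectors are concatenated. The specific features (short-time log energy and zero-crossing rate), the function names, and the frame sizes are assumptions for illustration, not the patent's method.

```python
import numpy as np

def frame_audio_features(audio, frame_len=400, hop=160):
    """Per-frame log energy and zero-crossing rate (illustrative features only)."""
    audio = np.asarray(audio, dtype=float)
    if audio.size < frame_len:
        raise ValueError("audio is shorter than one frame")
    n_frames = 1 + (audio.size - frame_len) // hop
    feats = []
    for i in range(n_frames):
        frame = audio[i * hop : i * hop + frame_len]
        log_energy = np.log(np.sum(frame ** 2) + 1e-10)
        # Fraction of adjacent sample pairs whose sign changes.
        zcr = np.mean(np.diff(np.sign(frame)) != 0)
        feats.append([log_energy, zcr])
    return np.array(feats)  # shape (n_frames, 2)

def unit_norm(x, eps=1e-12):
    """Scale a vector to unit L2 norm so modalities contribute on a comparable scale."""
    x = np.asarray(x, dtype=float)
    return x / (np.linalg.norm(x) + eps)

def fuse_audio_and_vibration(audio_feats, vib_spectrum):
    """Early fusion: time-average each modality, normalize, then concatenate."""
    audio_vec = unit_norm(np.mean(audio_feats, axis=0))
    vib_vec = unit_norm(np.mean(vib_spectrum, axis=0))
    return np.concatenate([audio_vec, vib_vec])


# Usage with synthetic inputs: 1 s of a 440 Hz tone at 16 kHz and a dummy
# vibration spectrum of 10 frames x 129 frequency bins.
sr = 16000
audio = np.sin(2 * np.pi * 440 * np.arange(sr) / sr)
fused = fuse_audio_and_vibration(frame_audio_features(audio), np.ones((10, 129)))
print(fused.shape)
```

The fused vector (2 audio values followed by 129 vibration values here) would then be passed to a classifier. Normalizing each modality before concatenation keeps the much longer vibration vector from swamping the audio features purely by scale.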

Embodiment 3

[0170] In Embodiments 1 and 2, the cry category is determined from the vibration parameters corresponding to the vocal cord vibration and from the audio signal of the cry. Because the baby's vocal cords are at an early stage of development and not yet fully formed, the vocal cord vibration and the cry sound can express only a narrow range of needs, so the samples available for matching are limited, which ultimately leads to misjudgments. Therefore, on the basis of Embodiment 1, gesture information corresponding to the baby's crying state is introduced for further improvement. Referring to Figure 14, the method includes:

[0171] S30: Obtain the audio features of the sound of the baby crying, the action feature values of the baby's gestures and actions in the image, and the vibration spectrum of the vocal cord vibration;

[0172] Specifically, when a baby is detected to be crying, the video stream incl...
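The text is truncated before describing how the audio, gesture, and vibration information are combined. One simple way to fuse three modalities, shown purely as a hedged sketch, is decision-level (late) fusion: each modality's classifier outputs scores, these are converted to probabilities with a softmax, and a weighted average selects the final category. The category labels, the modality weights, and the function names below are hypothetical; the patent's own fusion may differ (for instance, it may fuse at the feature level instead).

```python
import numpy as np

# Hypothetical cry categories for illustration only; the excerpt does not list them.
CATEGORIES = ["hungry", "sleepy", "discomfort"]

def softmax(logits):
    """Convert raw classifier scores to probabilities."""
    z = np.asarray(logits, dtype=float)
    z = z - z.max()  # subtract the max for numerical stability
    e = np.exp(z)
    return e / e.sum()

def late_fusion(audio_logits, gesture_logits, vibration_logits,
                weights=(0.4, 0.3, 0.3)):
    """Weighted average of per-modality class probabilities (assumed weights)."""
    probs = np.stack([
        softmax(audio_logits),
        softmax(gesture_logits),
        softmax(vibration_logits),
    ])  # shape (3 modalities, n_categories)
    w = np.asarray(weights, dtype=float)
    w = w / w.sum()  # ensure weights sum to 1 so the result is a distribution
    fused = w @ probs  # shape (n_categories,)
    return CATEGORIES[int(np.argmax(fused))], fused


# Usage: audio and vibration favor the first category, gesture favors the second.
label, fused = late_fusion([2.0, 0.5, 0.1], [0.2, 1.5, 0.3], [1.8, 0.4, 0.2])
print(label, fused.round(3))
```

A design advantage of late fusion here is that a weak modality, such as vocal cord vibration in a young infant as the text notes, can be down-weighted without retraining the classifiers for the other modalities.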

