Method and device for protecting safety of neural network model

A method and device in neural network model technology, applied to biological neural network models, neural learning methods, neural architectures, and related areas. It addresses attacks by attackers or gray-market operators who use an exposed model to steal the sensitive information it contains, while reducing resource consumption and preserving the model's normal operating performance and efficiency.


Embodiment Construction

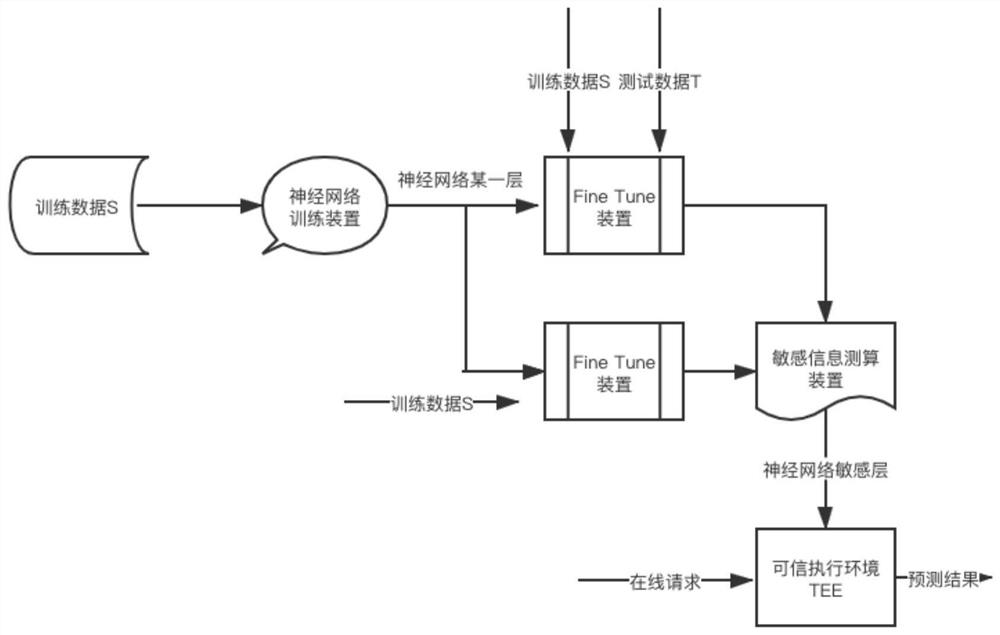

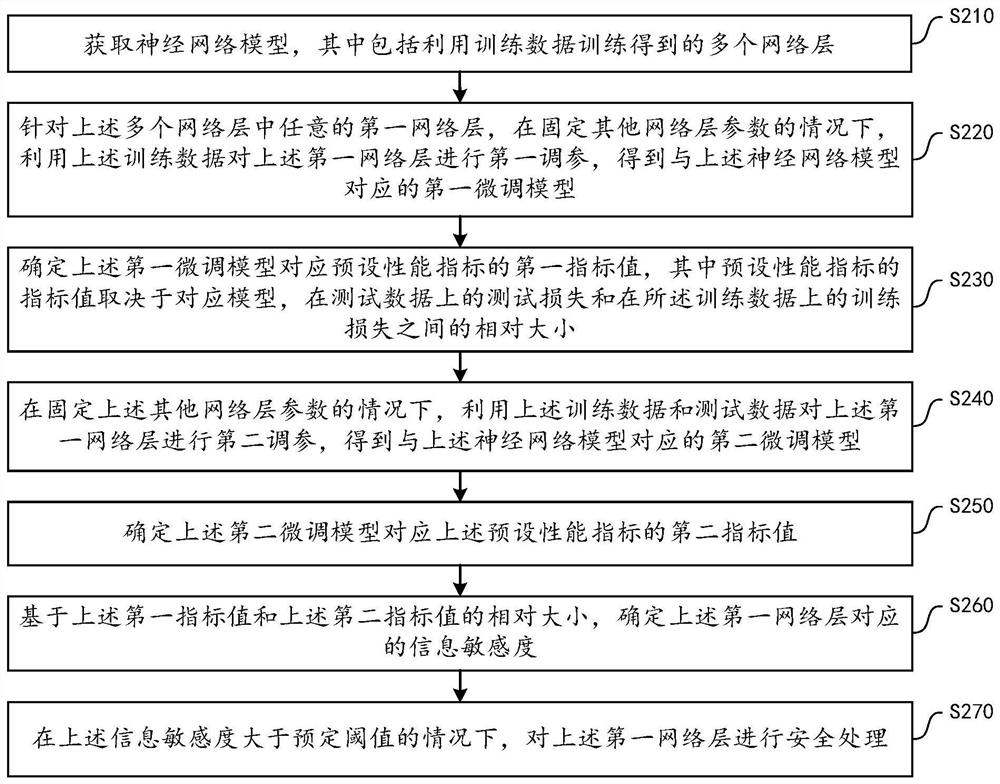

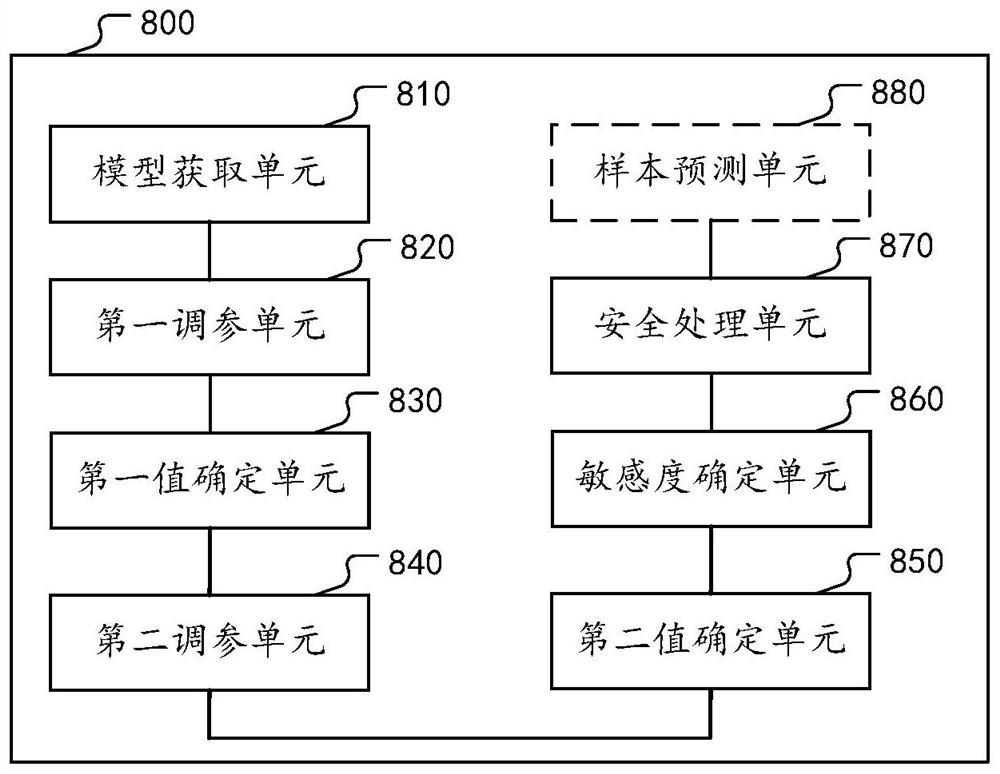

[0021] Multiple embodiments disclosed in this specification will be described below in conjunction with the accompanying drawings.

[0022] As mentioned above, if a trained neural network model is fully exposed, attackers or gray-market operators can easily attack it through the model and steal the sensitive information it contains. For example, after obtaining the neural network model, an attacker can visualize it to infer the statistical characteristics memorized by its network layers. Suppose the neural network model is used to decide whether to provide a certain service to a user, and the feature memorized by one network layer is: if the user is older than 52, no loan service is provided. The attacker can then modify the user's age (for example, changing 54 to 48) so that unauthorized users can obtain the loan service. As another example, after obtaining the neural network model, the attacker can observe the data dist...
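The age example in the preceding paragraph can be made concrete with a toy sketch. It assumes, purely for illustration, that the memorized rule lives in a single hypothetical unit computing `weight * age + bias` and denying the loan when the result is positive; `ThresholdNeuron` and `evasive_age` are invented names, not part of the described method. The point is that once the parameters are exposed, the decision boundary can be read off directly and an input crafted to fall just on the other side of it.

```python
from dataclasses import dataclass


@dataclass
class ThresholdNeuron:
    """Hypothetical single unit: denies the loan when weight * age + bias > 0.

    Assumes weight > 0, so larger ages push the unit toward "deny".
    """
    weight: float
    bias: float

    def denies_loan(self, age: float) -> bool:
        return self.weight * age + self.bias > 0

    def learned_threshold(self) -> float:
        # With exposed parameters, the boundary weight * age + bias = 0
        # gives the memorized cut-off directly: age = -bias / weight.
        return -self.bias / self.weight


def evasive_age(neuron: ThresholdNeuron, true_age: float, margin: float = 4.0) -> float:
    """Return a forged age just below the exposed threshold if the true age is denied."""
    if neuron.weight <= 0:
        raise ValueError("sketch assumes a positive weight")
    if not neuron.denies_loan(true_age):
        return true_age  # no need to tamper
    return neuron.learned_threshold() - margin


# Hypothetical parameters encoding the rule "deny if age > 52".
layer = ThresholdNeuron(weight=1.0, bias=-52.0)
print(layer.learned_threshold())           # 52.0
print(layer.denies_loan(54))               # True
forged = evasive_age(layer, 54)
print(forged, layer.denies_loan(forged))   # 48.0 False
```

Real models spread such rules across many units and nonlinearities, so extraction is rarely this direct; the sketch only shows why exposing learned parameters leaks the decision logic that the protection scheme aims to hide.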
