System-level cache

A system-level caching technology that can be used in memory systems, climate sustainability, instrumentation, and similar fields. It addresses problems such as client devices being unable to allocate cache lines, with the aim of reducing latency, improving power efficiency, and lowering power consumption.

Examples

Embodiment 1

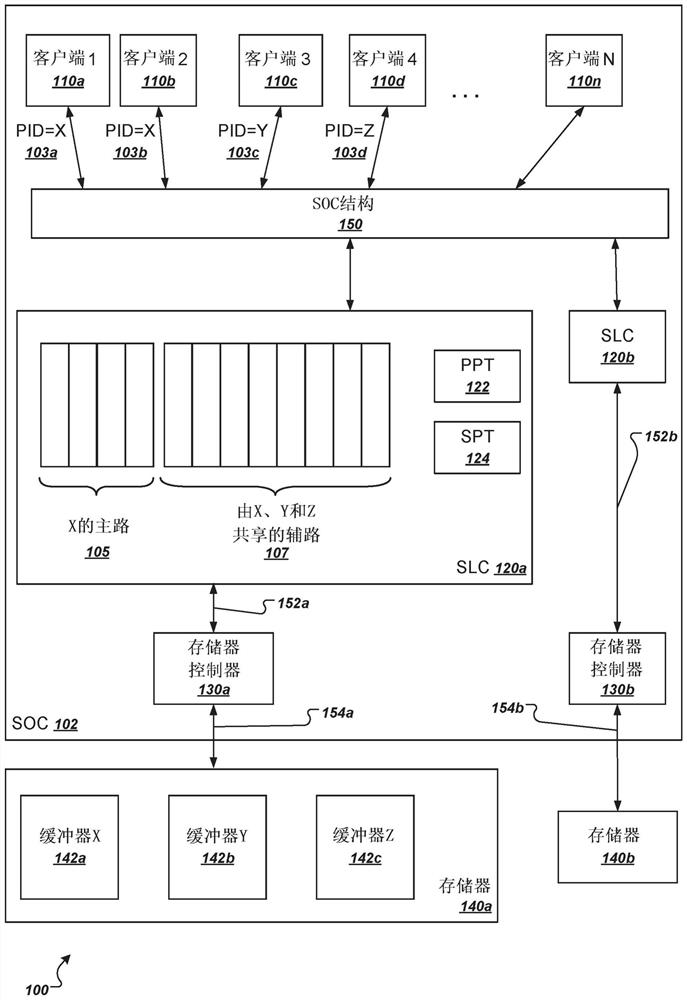

[0123] Embodiment 1 is a system comprising:

[0124] multiple integrated client devices;

[0125] a memory controller configured to read data from a memory device; and

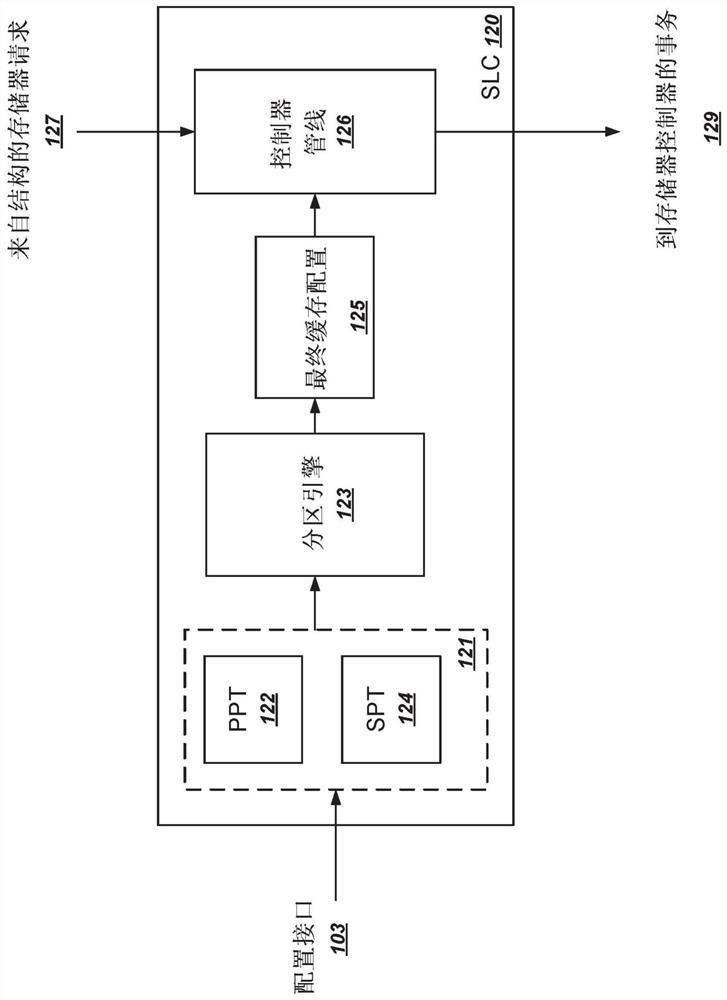

[0126] a system-level cache configured to cache data requests through the memory controller for each integrated client device of the plurality of integrated client devices,

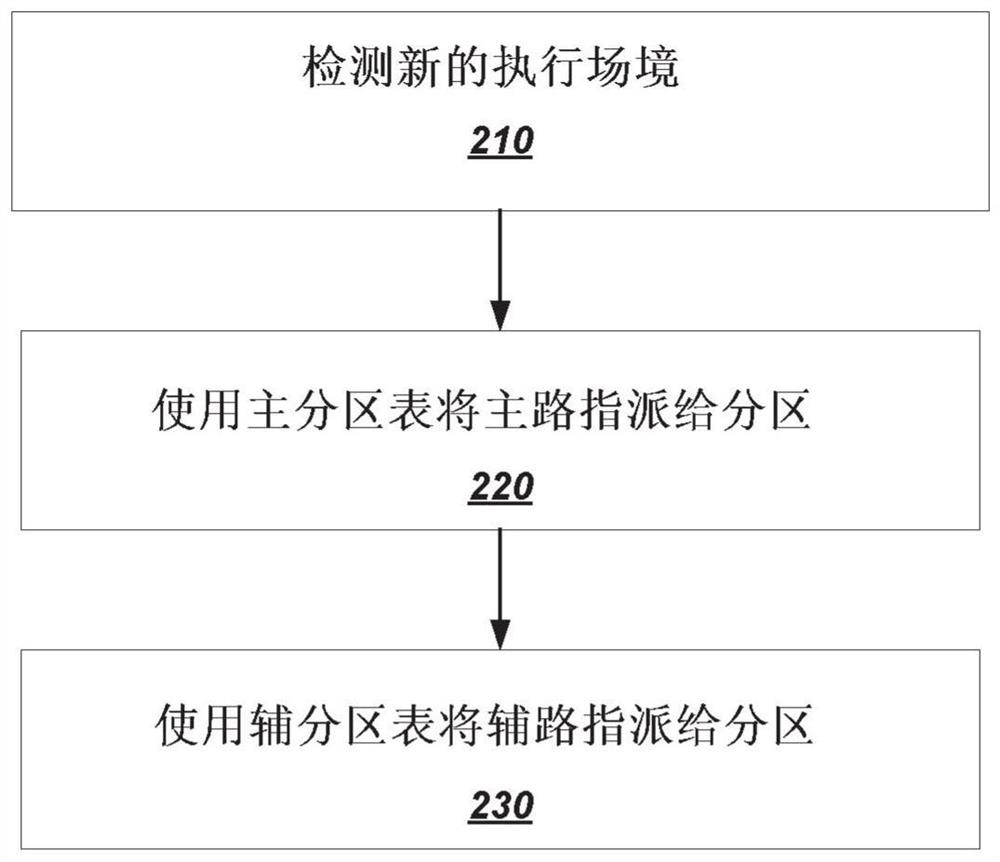

[0127] wherein the system-level cache includes a cache memory having a plurality of ways, each of the ways being a primary way or a secondary way,

[0128] wherein each primary way is dedicated to a single corresponding partition corresponding to a memory buffer accessed by one or more client devices, and

[0129] wherein each secondary way is shared by a corresponding group of multiple partitions corresponding to a set of memory buffers accessed by a group of client devices, and

[0130] wherein the system-level cache is configured to maintain a mapping between partitions and priority levels, and is configured to assign primary ways to the corresponding enabled p...
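The allocation described in Embodiment 1 can be illustrated with a minimal sketch. The source text is truncated at this point, so the sketch assumes, by analogy with the wording of Embodiment 3, that primary ways are handed out to enabled partitions in priority order. The names `Partition` and `allocate_primary_ways`, the requested way counts, and the convention that a lower number means a higher priority are all hypothetical and are not taken from the patent.

```python
from dataclasses import dataclass


@dataclass
class Partition:
    name: str               # hypothetical label for a memory buffer's partition
    priority: int           # assumption: lower value = higher priority
    primary_requested: int  # assumption: number of primary ways requested
    enabled: bool = True


def allocate_primary_ways(partitions, total_ways):
    """Dedicate ways to enabled partitions, highest priority first.

    Returns (assignment, leftover_ways); assignment maps a partition name
    to the list of way indices dedicated to it.
    """
    free_ways = list(range(total_ways))
    assignment = {}
    for part in sorted(partitions, key=lambda p: p.priority):
        if not part.enabled:
            continue  # disabled partitions receive no ways
        take = min(part.primary_requested, len(free_ways))
        assignment[part.name] = free_ways[:take]
        free_ways = free_ways[take:]
    return assignment, free_ways


partitions = [
    Partition("display", priority=1, primary_requested=2),
    Partition("camera", priority=0, primary_requested=3),
    Partition("audio", priority=2, primary_requested=2, enabled=False),
]
assignment, leftover = allocate_primary_ways(partitions, total_ways=8)
print(assignment, leftover)
```

In this sketch each way index appears in at most one partition's list, which mirrors the requirement that a primary way is dedicated to a single partition.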

Embodiment 2

[0131] Embodiment 2 is the system of embodiment 1, wherein the system-level cache is configured to assign a primary way exclusively to a first partition accessed by one or more first client devices, and is configured to assign a secondary way to the first partition and to one or more other partitions that are accessed by a group of client devices that also includes the first client devices.
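The relationship in Embodiment 2 between an exclusive primary way and a shared secondary way can be sketched as a lookup of the ways in which a partition may allocate cache lines. The function `ways_for_partition`, the dictionary layouts, and the partition names are hypothetical and only illustrate the idea; the patent does not specify these structures.

```python
def ways_for_partition(name, primary_owner, secondary_groups):
    """Return the sorted way indices a partition may allocate cache lines in.

    primary_owner: way index -> the single partition that owns the way.
    secondary_groups: way index -> set of partitions sharing the way.
    """
    primary = {way for way, owner in primary_owner.items() if owner == name}
    secondary = {way for way, group in secondary_groups.items() if name in group}
    return sorted(primary | secondary)


# Hypothetical layout: ways 0-1 belong only to the first partition,
# way 2 only to another partition, and ways 3-4 are shared by both.
primary_owner = {0: "p_first", 1: "p_first", 2: "p_other"}
secondary_groups = {3: {"p_first", "p_other"}, 4: {"p_first", "p_other"}}

first_ways = ways_for_partition("p_first", primary_owner, secondary_groups)
other_ways = ways_for_partition("p_other", primary_owner, secondary_groups)
print(first_ways, other_ways)
```

Under these assumptions the two partitions never share ways 0 through 2, while both can use ways 3 and 4.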

Embodiment 3

[0132] Embodiment 3 is the system of embodiment 2, wherein the system-level cache is configured to maintain a mapping between groups of client devices and secondary priority levels, and is configured to assign the secondary ways to the corresponding enabled partitions in an order corresponding to the respective secondary priority levels.
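A minimal sketch of the secondary allocation in Embodiment 3 follows. It assumes that each group of client devices carries a secondary priority level (lower value first), a requested number of secondary ways, and a set of partitions that may be enabled or disabled. The field names `secondary_priority`, `ways_requested`, and `partitions`, along with the function `allocate_secondary_ways`, are hypothetical and not drawn from the patent.

```python
def allocate_secondary_ways(groups, free_ways):
    """Assign shared (secondary) ways to groups in secondary-priority order.

    Returns (shared, remaining): shared maps a way index to the frozenset of
    enabled partition names sharing it; remaining lists unassigned ways.
    """
    remaining = list(free_ways)
    shared = {}
    for group in sorted(groups, key=lambda g: g["secondary_priority"]):
        members = frozenset(
            name for name, enabled in group["partitions"].items() if enabled
        )
        if not members:
            continue  # no enabled partition in this group
        take = min(group["ways_requested"], len(remaining))
        for way in remaining[:take]:
            shared[way] = members
        remaining = remaining[take:]
    return shared, remaining


groups = [
    {"secondary_priority": 1, "ways_requested": 2,
     "partitions": {"gpu_a": True, "gpu_b": True}},
    {"secondary_priority": 0, "ways_requested": 2,
     "partitions": {"cam_a": True, "cam_b": False}},
]
shared, remaining = allocate_secondary_ways(groups, free_ways=[5, 6, 7])
print(shared, remaining)
```

Here the higher-priority group is served first, so the lower-priority group receives only the single way that is left.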
