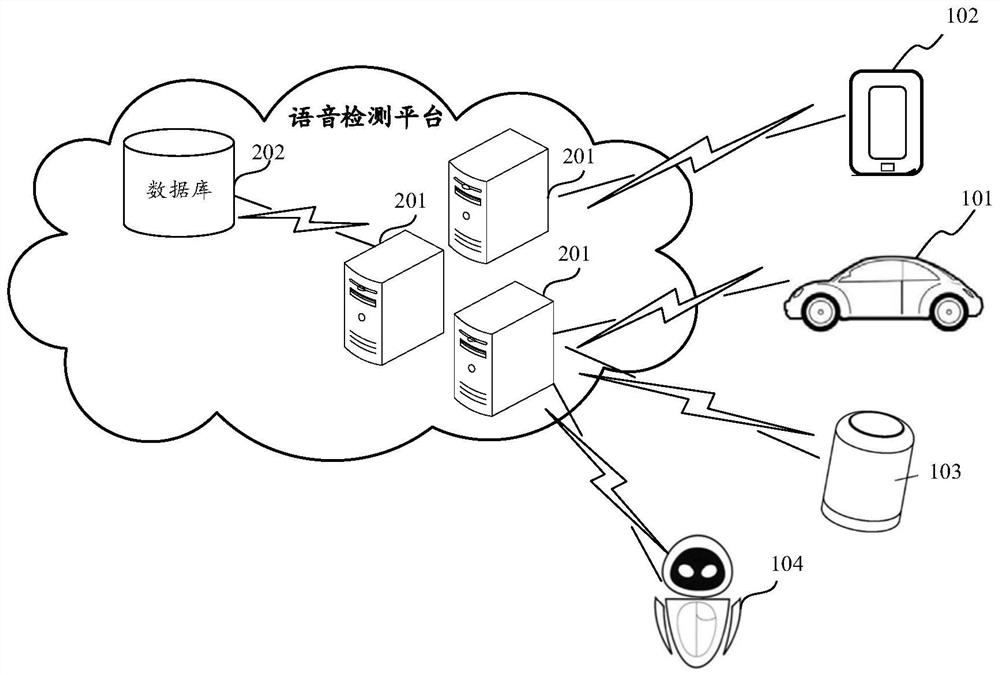

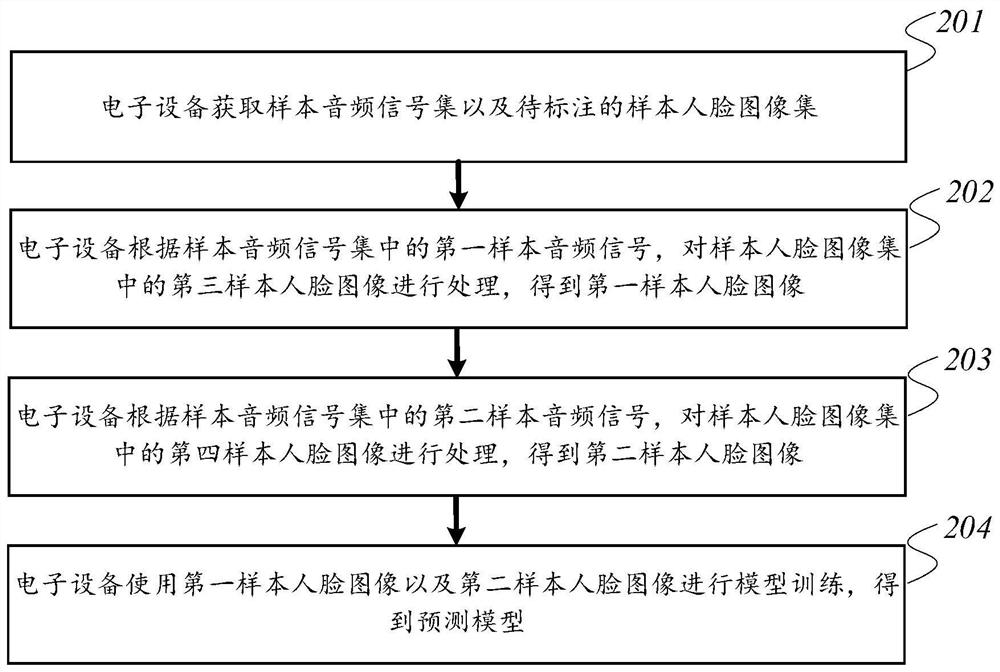

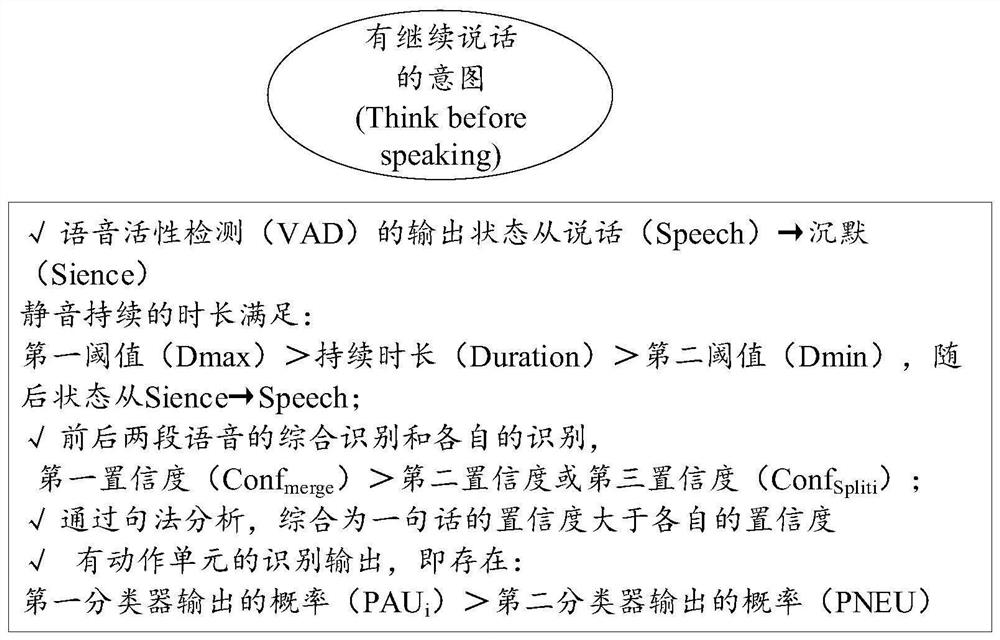

Voice detection method and device, prediction model training method and device, equipment and medium

A prediction model and speech detection technology, applied in speech analysis, speech recognition, character and pattern recognition, etc., can solve the problems of speech interaction in the end state, missing detection of speech end points, poor accuracy of speech end points, etc. Premature truncation, avoiding interference, avoiding the effect of misunderstanding the graph

- Summary

- Abstract

- Description

- Claims

- Application Information

AI Technical Summary

Problems solved by technology

Method used

Image

Examples

Embodiment Construction

[0088] In order to make the purpose, technical solution and advantages of the present application clearer, the implementation manners of the present application will be further described in detail below in conjunction with the accompanying drawings.

[0089] The meaning of the term "at least one" in this application refers to one or more, and the meaning of the term "multiple" in this application refers to two or more, for example, multiple second messages refer to two or two More than two second messages. The terms "system" and "network" are often used interchangeably herein.

[0090] In this application, the terms "first" and "second" are used to distinguish the same or similar items with basically the same function and function. It should be understood that "first", "second" and "nth" There are no logical or timing dependencies, nor are there restrictions on quantity or order of execution.

[0091] It should be understood that in each embodiment of the present application...

PUM

Login to View More

Login to View More Abstract

Description

Claims

Application Information

Login to View More

Login to View More - Generate Ideas

- Intellectual Property

- Life Sciences

- Materials

- Tech Scout

- Unparalleled Data Quality

- Higher Quality Content

- 60% Fewer Hallucinations

Browse by: Latest US Patents, China's latest patents, Technical Efficacy Thesaurus, Application Domain, Technology Topic, Popular Technical Reports.

© 2025 PatSnap. All rights reserved.Legal|Privacy policy|Modern Slavery Act Transparency Statement|Sitemap|About US| Contact US: help@patsnap.com