A Pose and Trajectory Estimation Method Based on Image Interpolation

A pose and trajectory estimation technology based on image interpolation, applied in the field of computer vision. It addresses the problem of low recognition accuracy, improving recognition accuracy and reducing tracking loss.


Embodiment Construction

[0026] Specific implementations of the present invention are described in detail below, examples of which are illustrated in the accompanying drawings, wherein the same or similar reference numerals represent the same or similar elements or elements with the same or similar functions throughout. The embodiments described below with reference to the accompanying drawings are exemplary, and are intended to explain the present invention and should not be construed as limiting the present invention.

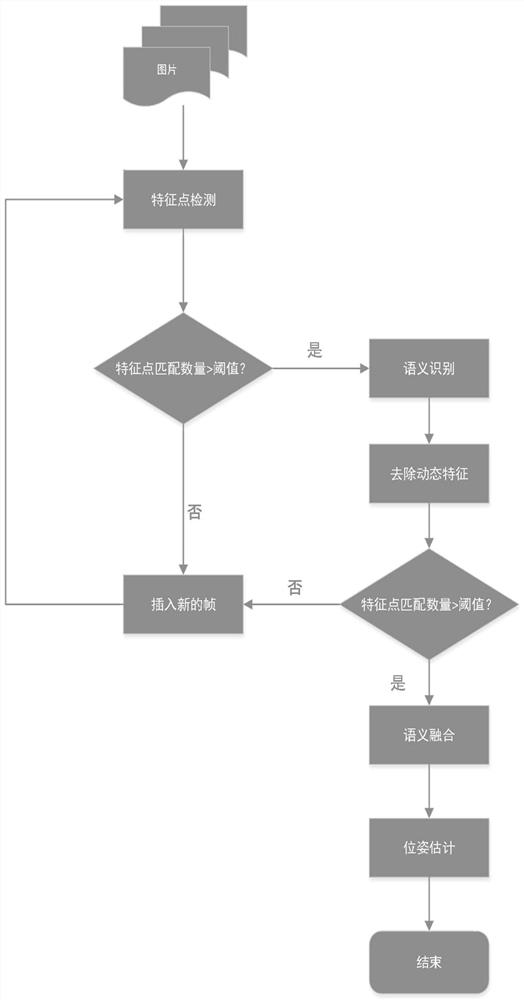

[0027] 1. Image interpolation

[0028] When images are captured, the camera will sometimes move too fast, or its frame rate will be too low, so that two adjacent frames share too little common view. Video frame interpolation can effectively mitigate this problem by synthesizing intermediate frames between the captured ones.
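As a rough illustration of where interpolated frames enter the sequence, the following minimal sketch inserts one synthesized frame between each pair of adjacent frames. The names `blend_midframe` and `insert_midframes` are hypothetical, frames are assumed to be grayscale images stored as nested lists of integers, and the per-pixel average is only a naive placeholder for a real interpolator; it is not the interpolation method of the present invention.

```python
from typing import Callable, List

# Assumed representation: a grayscale frame as rows of integer pixel intensities.
Frame = List[List[int]]


def blend_midframe(frame_a: Frame, frame_b: Frame) -> Frame:
    """Naive placeholder interpolator: per-pixel integer average of two frames."""
    return [
        [(pa + pb) // 2 for pa, pb in zip(row_a, row_b)]
        for row_a, row_b in zip(frame_a, frame_b)
    ]


def insert_midframes(
    frames: List[Frame],
    interpolate: Callable[[Frame, Frame], Frame] = blend_midframe,
) -> List[Frame]:
    """Return a new sequence with one synthesized frame between each adjacent pair."""
    if not frames:
        return []
    out = [frames[0]]
    for prev, nxt in zip(frames, frames[1:]):
        out.append(interpolate(prev, nxt))  # synthesized intermediate frame
        out.append(nxt)                     # original captured frame
    return out


if __name__ == "__main__":
    captured = [[[0, 0]], [[100, 100]], [[200, 200]]]
    denser = insert_midframes(captured)
    print(len(captured), "captured frames ->", len(denser), "frames after insertion")
```

Because the interpolator is passed in as a function, a learned model could be substituted for the averaging placeholder without changing how the denser sequence is assembled.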

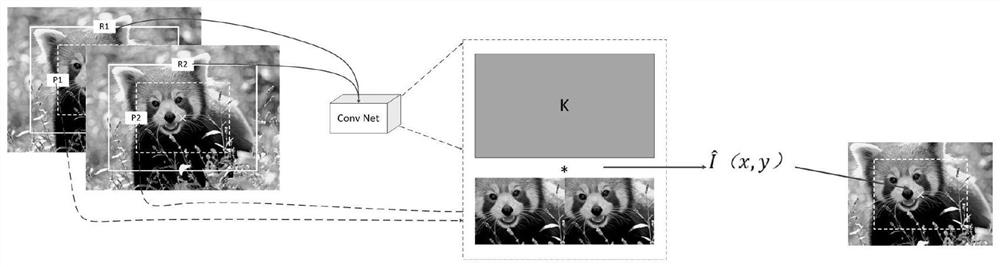

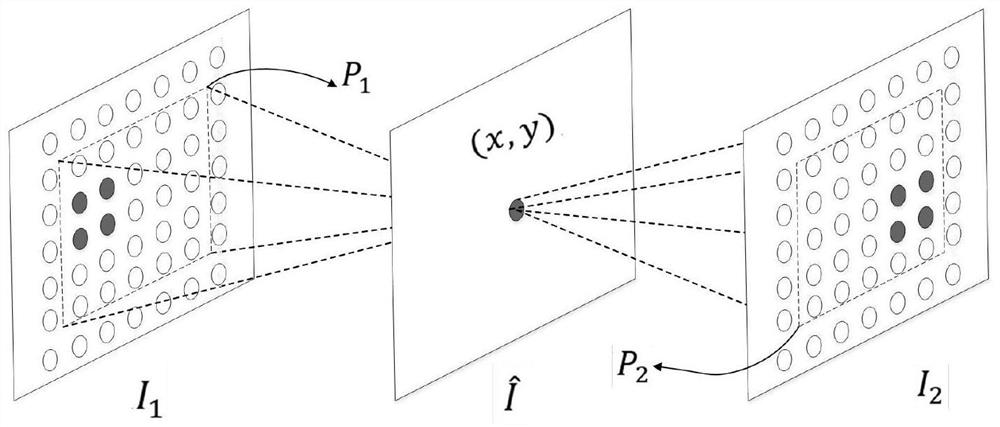

[0029] As a preferred solution, a robust video frame interpolation method is used that utilizes deep convolutional neural networks to achieve frame interpolation without explicitly splitti...
