Model training method, human body posture detection method and device, equipment and medium

A model training and posture detection technology, applied to model training methods, human body posture detection methods, devices, equipment and media. It addresses problems such as the lack of high-quality labeled data for unconstrained scenes, difficulty in model convergence, and the inability to accurately detect three-dimensional human body postures.


Embodiment 2

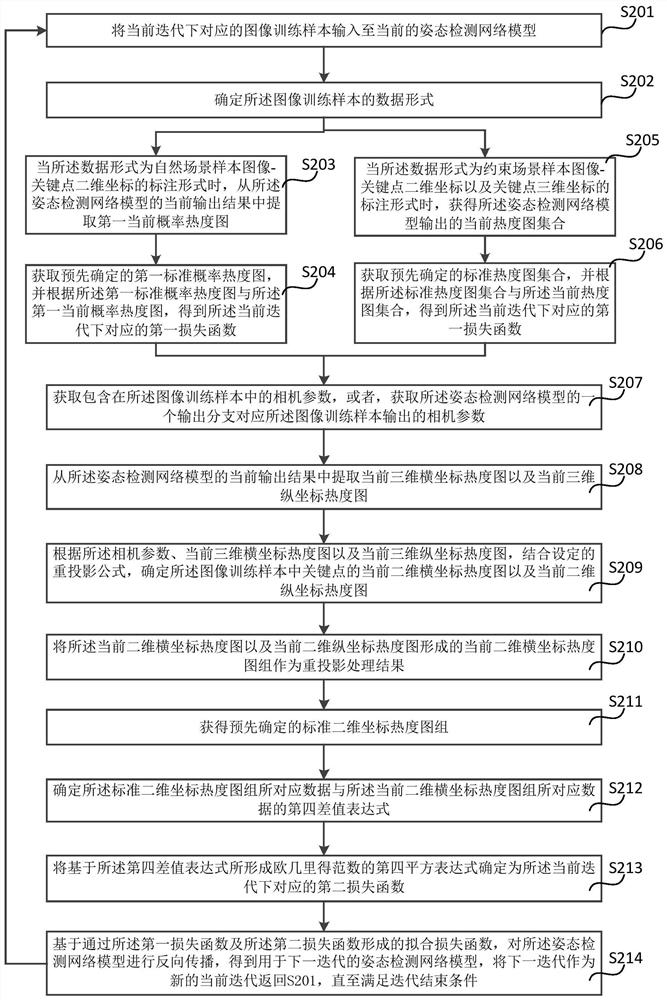

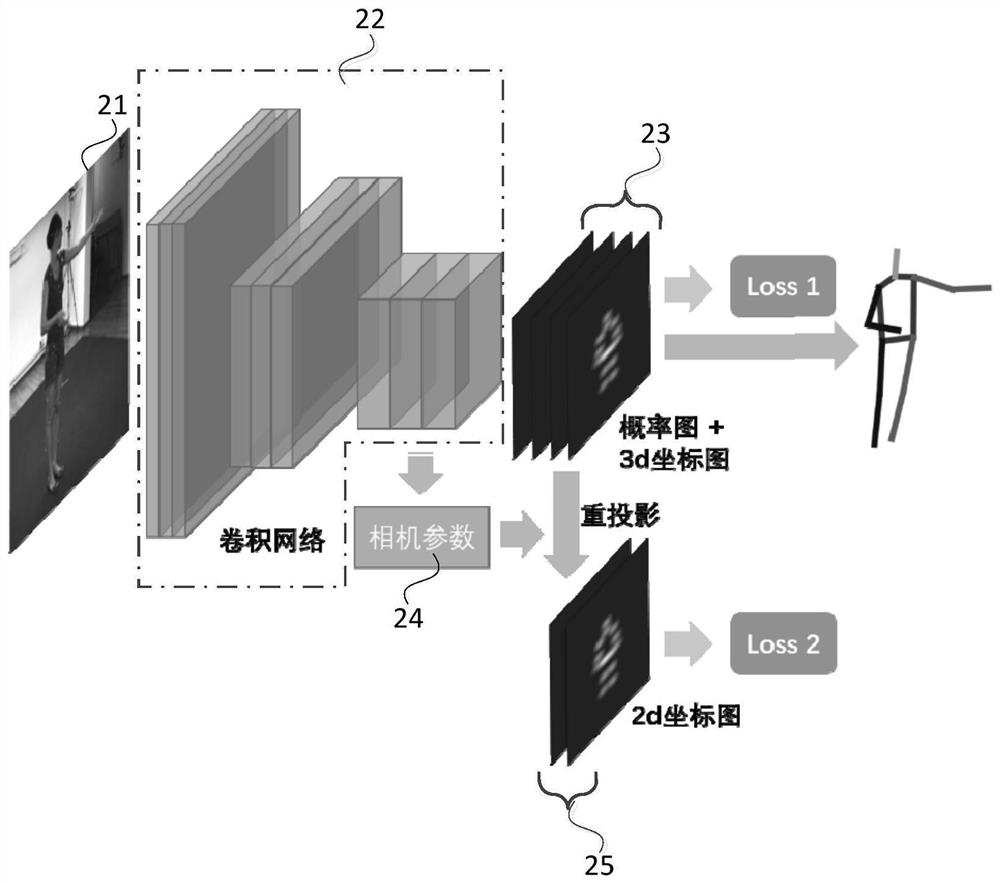

[0055] Figure 2 is a schematic flow chart of a model training method provided in Embodiment 2 of the present invention. Embodiment 2 is optimized on the basis of the above embodiment. In this embodiment, obtaining the first loss function corresponding to the current iteration according to the data form of the image training sample is further refined as follows: when the data form of the image training sample is the labeled form "natural scene sample image - key point two-dimensional coordinates", extract the first current probability heat map from the current output result of the pose detection network model; obtain a predetermined first standard probability heat map, and obtain the first loss function corresponding to the current iteration according to the first standard probability heat map and the first current probability heat map; wherein the first standard probability heat map is determined by converting the two-dimensional coordi...
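The heat-map comparison described in [0055] can be illustrated with a minimal sketch. The excerpt is truncated before it states how the two-dimensional key point coordinates are converted or which distance is used, so everything specific here is an assumption: the Gaussian rendering, the `sigma` value, the mean squared error, and the function names `keypoints_to_heatmaps` and `heatmap_loss` are all hypothetical and are not taken from the patent.

```python
import math


def keypoints_to_heatmaps(keypoints_xy, height, width, sigma=2.0):
    """Render one heat map per key point (x, y), given in pixel units.

    Assumption: each map is an unnormalized 2D Gaussian that peaks at 1.0
    on the key point. The patent excerpt does not specify the conversion.
    """
    heatmaps = []
    for x, y in keypoints_xy:
        heatmap = [
            [
                math.exp(-((col - x) ** 2 + (row - y) ** 2) / (2.0 * sigma ** 2))
                for col in range(width)
            ]
            for row in range(height)
        ]
        heatmaps.append(heatmap)
    return heatmaps


def heatmap_loss(current, standard):
    """Mean squared error over all key points and pixels (assumed loss form)."""
    total, count = 0.0, 0
    for cur_map, std_map in zip(current, standard):
        for cur_row, std_row in zip(cur_map, std_map):
            for cur_val, std_val in zip(cur_row, std_row):
                total += (cur_val - std_val) ** 2
                count += 1
    return total / count


# "Standard" heat maps built from two labeled key points on a 64x48 grid.
standard = keypoints_to_heatmaps([(10, 20), (30, 5)], height=48, width=64)

# Stand-in for the network's current heat maps (all zeros, for illustration only).
current = [[[0.0] * 64 for _ in range(48)] for _ in range(2)]

loss = heatmap_loss(current, standard)
```

In a real training loop the `current` maps would come from the pose detection network's output for the same image, and the loss would be computed with a tensor library so that gradients can flow back to the model.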

Embodiment 3

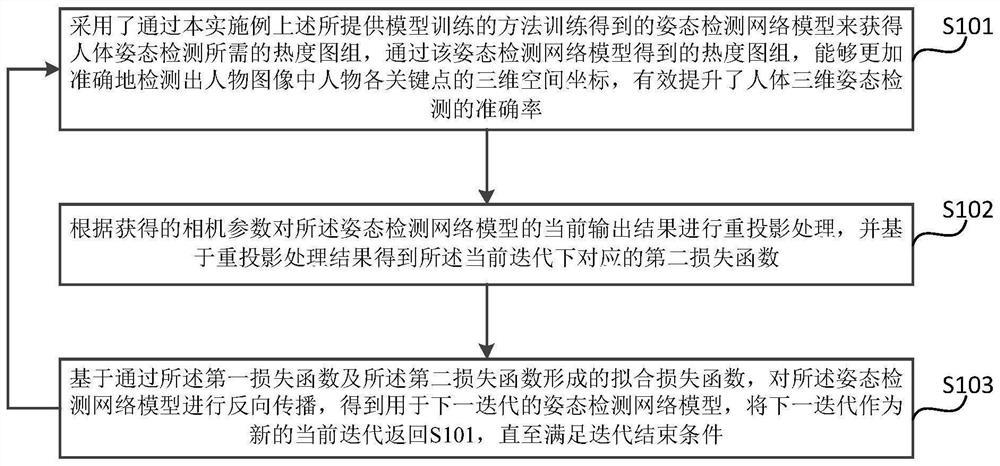

[0108] Figure 3 is a schematic flowchart of a human body posture detection method provided by Embodiment 3 of the present invention. The method can be executed by a human body posture detection device, which can be implemented in software and/or hardware and can generally be integrated into a computer device. As shown in Figure 3, the method includes:

[0109] S301. Acquire a real-scene image of a person to be detected.

[0110] Exemplarily, the real-scene image of the person can be obtained by a conventional image capture device, and the image capture device can be a mobile phone, a notebook or a tablet with a camera, and the like. When there is a requirement for human body posture detection, this step can be used to obtain the real-scene image of the person to be detected first.

[0111] S302. Input the real-scene image of the person into a preset pose detection network model.

[0112] Wherein, the preset posture detection network model is trained by a...

Embodiment 4

[0123] Figure 4 is a structural block diagram of a model training device provided by Embodiment 4 of the present invention. The device can be implemented in software and/or hardware, can generally be integrated into a computer device, and can perform model training by executing a model training method. As shown in Figure 4, the device includes: a first information determination module 41, a second information determination module 42 and a model training module 43.

[0124] Wherein, the first information determination module 41 is configured to input the image training samples corresponding to the current iteration into the current posture detection network model, and to obtain the first loss function corresponding to the current iteration according to the data form of the image training samples;

[0125] The second information determination module 42 is configured to perform reprojection processing on the current output result of the...
