Man-machine identification method, identification system, MR intelligent glasses and application

A human-machine recognition technology for smart glasses, applied in the field of human-machine recognition, that can solve the problems of high user operation complexity, poor user experience, and low accuracy.


Examples

Embodiment 1

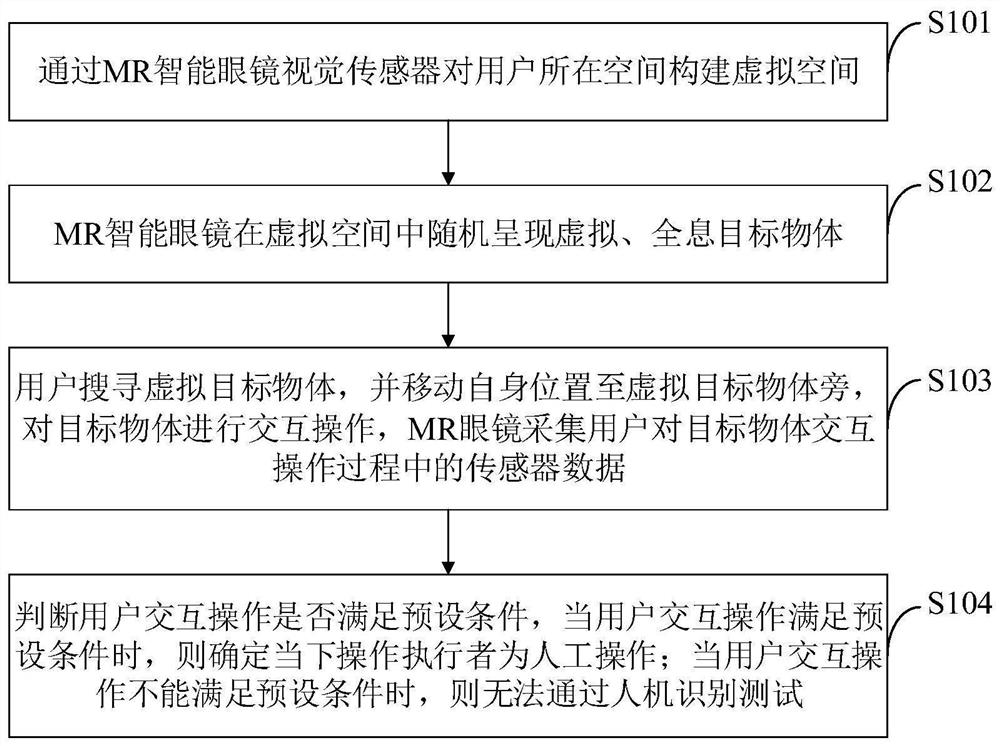

[0172] Figure 2 shows the generation and operation method of assessment task Embodiment 1 for Method 1 of the identification method in Figure 1.

[0173] The assessment task of Embodiment 1 is generated and operated as follows. The task is designed around human visual search behavior. As shown in Figure 2, after the user requests the human-machine recognition operation on a webpage, the human-machine recognition assessment task is loaded in the webpage interface. The task first shows the user at least one "search target display 1" (triangles and stars in Figure 2), while the "search area 2" below it contains at least one "interference item 3". The features of the "search target display 1" and the "interference item 3" can be any graphic, text, symbol, font, color, or object image, combined arbitrarily into a shape, that is, any pattern with a degree of recognizability, and can also have dy...
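The layout described above can be illustrated with a minimal sketch. It is not the patent's implementation: the shape pool `SHAPES`, the function `make_search_task`, its parameters, and the assumption that the search area also contains one copy of each displayed target mixed in with the interference items are all hypothetical choices made for illustration.

```python
import random

# Hypothetical pool of recognizable features; the source allows any graphic,
# text, symbol, font, color, or object image.
SHAPES = ["triangle", "star", "circle", "square", "pentagon"]


def make_search_task(n_targets=2, n_distractors=6, width=400, height=300, seed=None):
    """Build one assessment task: displayed targets plus a search area.

    Assumption (not stated in the source excerpt): the search area holds one
    copy of each displayed target, hidden among the interference items.
    """
    rng = random.Random(seed)

    # Targets shown to the user first ("search target display 1").
    target_shapes = rng.sample(SHAPES, n_targets)

    # Interference items are drawn only from shapes that are not targets.
    distractor_pool = [s for s in SHAPES if s not in target_shapes]

    items = [{"shape": s, "is_target": True} for s in target_shapes]
    items += [
        {"shape": rng.choice(distractor_pool), "is_target": False}
        for _ in range(n_distractors)
    ]

    # Shuffle and scatter the items over the search area ("search area 2").
    rng.shuffle(items)
    for item in items:
        item["x"] = rng.uniform(0, width)
        item["y"] = rng.uniform(0, height)

    return {"targets": target_shapes, "items": items}


task = make_search_task(seed=1)
print(task["targets"], len(task["items"]))
```

Passing a `seed` makes a task reproducible for testing; a deployed task would presumably omit it so that each request yields a different layout.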

Embodiment 2

[0184] Figure 3 shows the generation and operation method of assessment task Embodiment 2 for Method 1 of the identification method in Figure 1.

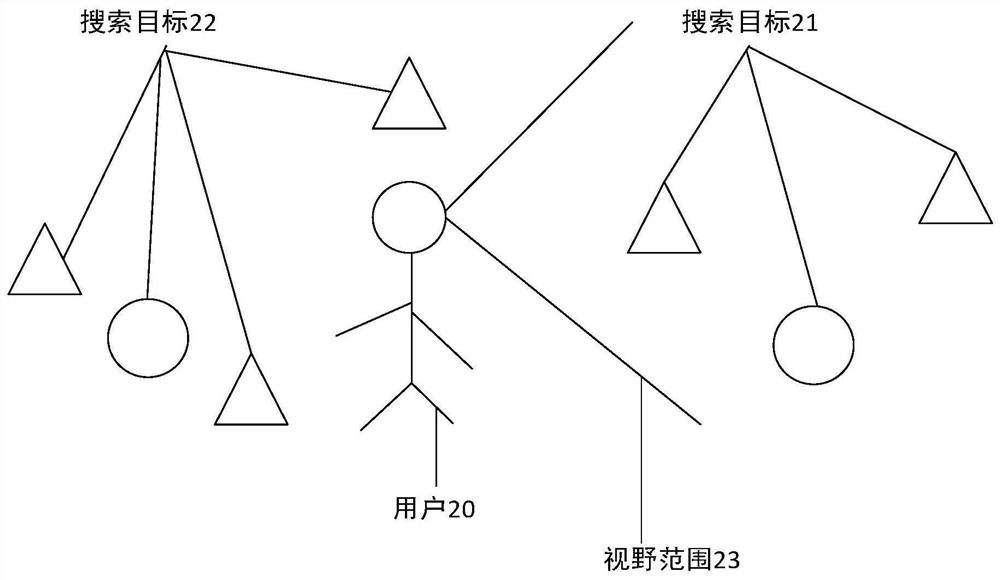

[0185] The difference from task Embodiment 1 is that the assessment task of Embodiment 1 presents search targets imaged on a single plane, whereas in task Embodiment 2 the MR glasses scan and identify the physical space (see Figure 4) and randomly present holographic search targets at arbitrary spatial positions around the user, so the "search targets 21, 22" cannot all be presented within the user's field of view. As shown in Figure 3, the user 20 can see only the "search target 21" within the visual range 23 and cannot see the search target 22 outside it. To see the search target 22, the user 20 therefore needs not only to move the eyes but also to turn the head and body, for example by looking down or up, to change the visual range 23.
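The visibility condition in this paragraph can be sketched as a simple angular test. This is only an illustration under stated assumptions: the visual range 23 is modeled as a symmetric cone around the head direction, and the function name `in_field_of_view`, the 90-degree default, and the coordinate convention are hypothetical rather than taken from the patent.

```python
import math


def in_field_of_view(head_dir, target_pos, user_pos=(0.0, 0.0, 0.0), fov_deg=90.0):
    """Return True if the target lies inside a cone-shaped field of view.

    Assumption: the field of view is a cone of full angle `fov_deg` centered
    on `head_dir`. Real headsets have rectangular view frustums.
    """
    to_target = [t - u for t, u in zip(target_pos, user_pos)]
    dist = math.sqrt(sum(c * c for c in to_target))
    head_len = math.sqrt(sum(c * c for c in head_dir))
    if dist == 0 or head_len == 0:
        return False

    # Angle between the head direction and the direction to the target.
    cos_angle = sum(a * b for a, b in zip(head_dir, to_target)) / (dist * head_len)
    cos_angle = max(-1.0, min(1.0, cos_angle))  # guard against rounding error
    angle_deg = math.degrees(math.acos(cos_angle))
    return angle_deg <= fov_deg / 2


# A target in front of the user is visible; one behind the user is not
# until the head direction changes.
front_target = (0.5, 0.0, 2.0)
rear_target = (0.0, 0.0, -2.0)
print(in_field_of_view((0, 0, 1), front_target))
print(in_field_of_view((0, 0, 1), rear_target))
print(in_field_of_view((0, 0, -1), rear_target))
```

In this model, a target outside the cone becomes visible only after `head_dir` changes, which corresponds to the head and body turning described for user 20.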

[0186] At the same time, the distance be...

Embodiment 3

[0195] Figure 4 shows the generation and operation method of assessment task Embodiment 3 for Method 1 of the identification method in Figure 1, which specifically includes:

[0196] In the first step, the depth vision camera on the MR glasses reconstructs the real world in 3D;

[0197] The augmented reality display device 405 may include one or more outward-facing image sensors configured to acquire image data of the real-world scene 406. Examples of such image sensors include, but are not limited to, depth sensor systems (e.g., time-of-flight or structured light cameras), visible light image sensors, and infrared image sensors. Alternatively or additionally, a previously acquired 3D mesh representation may be retrieved locally or remotely from storage,

[0198] In the second step, the MR glasses recognize the spatial position and surface of the object (obstacle 402) in the real environment, and set the holographic target according to the spatial position and surface condition;...
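One way to picture setting a holographic target according to a recognized surface is to offset the target slightly from a surface point along the surface normal. The sketch below is an assumption for illustration only: the excerpt does not specify how the placement is computed, and `place_on_surface`, its parameters, and the 5 cm default offset are hypothetical.

```python
import math


def place_on_surface(surface_point, surface_normal, offset=0.05):
    """Return a target position offset from a surface along its normal.

    Assumption: the reconstruction step yields a point on the recognized
    surface and a normal vector; `offset` (in meters) keeps the hologram
    from intersecting the surface.
    """
    length = math.sqrt(sum(c * c for c in surface_normal))
    if length == 0:
        raise ValueError("surface normal must be non-zero")
    unit = [c / length for c in surface_normal]
    return tuple(p + offset * n for p, n in zip(surface_point, unit))


# Example: a point on a table top whose normal points straight up (+y).
print(place_on_surface((1.0, 0.75, 2.0), (0.0, 2.0, 0.0), offset=0.25))
```

The normal is normalized first so that `offset` is a true distance regardless of how the reconstruction scales its normals.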

