Intelligent robot grabbing method based on action demonstration teaching

An intelligent robot grasping method in the fields of robot technology and robot learning. It addresses problems such as degraded algorithm performance, difficulty of transfer, and reliance on human experience, and achieves strong adaptability and robustness.



Examples

Embodiment 1

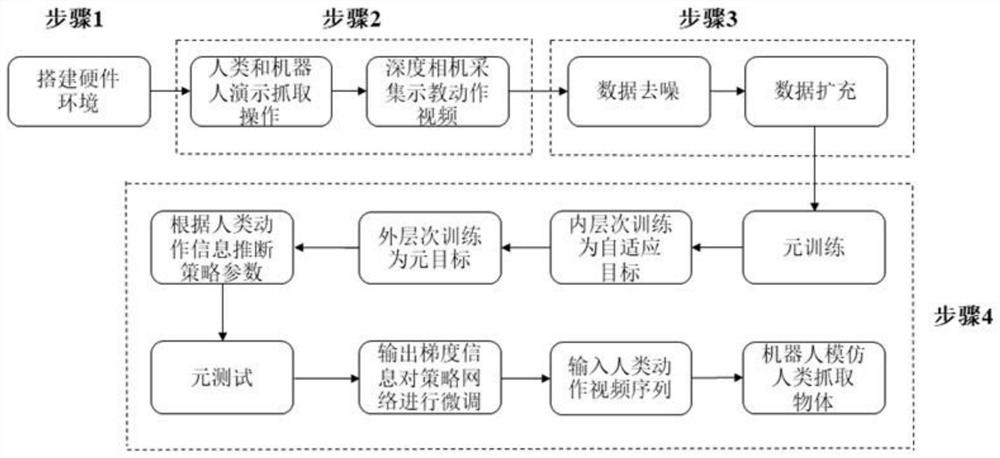

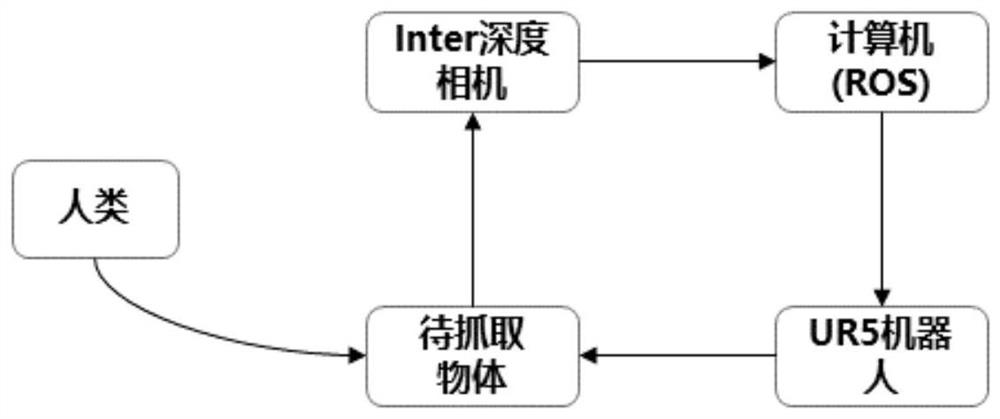

[0068] Figure 1 is a schematic flow chart of the intelligent robot grasping method based on action demonstration teaching according to the present invention. As shown in Figure 1, the present invention provides an intelligent robot grasping method based on action demonstration teaching, comprising the following steps:

[0069] Step S1: Build the hardware environment of the action demonstration teaching programming system;

[0070] Step S2: A human demonstrates the grasping operation, which is recorded as a human teaching action video; the human then uses the teach pendant to control the robot to perform the demonstrated grasping action, which is recorded as a robot teaching action video;

[0071] Step S3: Denoise both the human teaching action video and the robot teaching action video, and expand (augment) the resulting data sets;
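The patent text here does not say which denoising or data-expansion techniques Step S3 uses. As a minimal sketch under that gap, the code below assumes grayscale frames stored as nested lists, uses a temporal median filter as a stand-in denoiser, and expands each cleaned video with a horizontal flip and brightness scaling. All function names (`temporal_median_denoise`, `hflip`, `scale_brightness`, `expand_dataset`) are hypothetical, and the same pipeline is simply applied to human and robot videos alike.

```python
from statistics import median
from typing import List, Sequence

# Assumed representation: a frame is rows of grayscale intensities in [0, 255].
Frame = List[List[float]]
Video = List[Frame]


def temporal_median_denoise(video: Video, radius: int = 1) -> Video:
    """Replace each pixel by the median of that pixel over neighbouring frames.

    Edge frames reuse the nearest valid frame (index clamping), so every
    window has an odd size of 2 * radius + 1.
    """
    n = len(video)
    out: Video = []
    for t in range(n):
        window = [video[min(max(t + k, 0), n - 1)] for k in range(-radius, radius + 1)]
        h, w = len(video[t]), len(video[t][0])
        out.append([[median([f[r][c] for f in window]) for c in range(w)] for r in range(h)])
    return out


def hflip(video: Video) -> Video:
    """Mirror every frame left-to-right (hypothetical augmentation choice)."""
    return [[row[::-1] for row in frame] for frame in video]


def scale_brightness(video: Video, factor: float) -> Video:
    """Multiply intensities by `factor`, clamping to the valid [0, 255] range."""
    return [[[min(255.0, max(0.0, p * factor)) for p in row] for row in frame] for frame in video]


def expand_dataset(videos: List[Video], factors: Sequence[float] = (0.8, 1.2)) -> List[Video]:
    """Denoise each video, then add flipped and brightness-scaled copies."""
    expanded: List[Video] = []
    for v in videos:
        clean = temporal_median_denoise(v)
        expanded.append(clean)
        expanded.append(hflip(clean))
        for f in factors:
            expanded.append(scale_brightness(clean, f))
    return expanded
```

For example, a three-frame clip whose top-left pixel spikes to 255 in the middle frame comes back from `temporal_median_denoise` with that spike removed. A horizontal flip mirrors the scene, so in a real grasping data set any left/right action labels would have to be mirrored as well.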

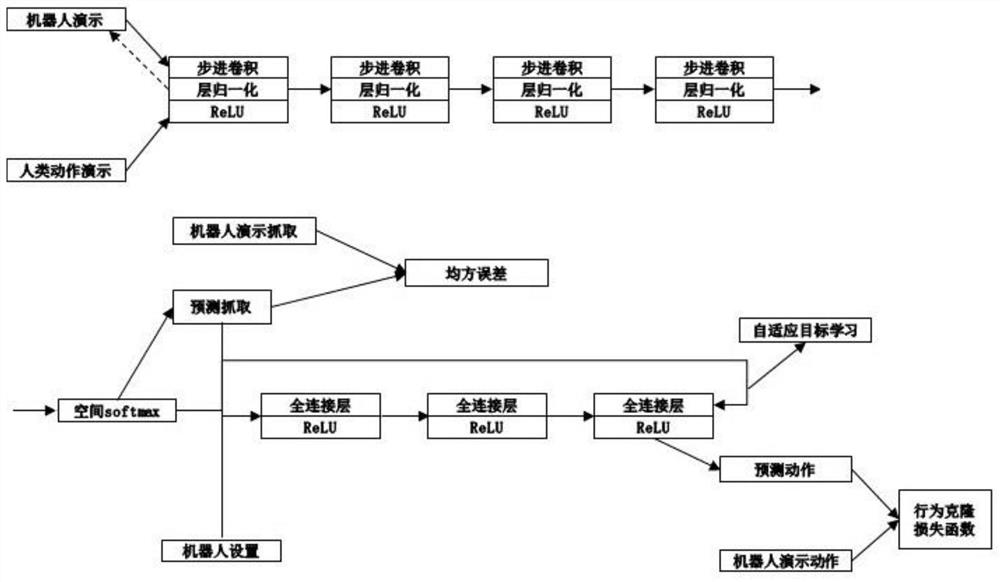

[0072] Step S4: Use a meta-learning algorithm to automatically learn prior knowledge directly from the human and robot teaching actions to realiz...
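The excerpt does not name the meta-learning algorithm used in Step S4. To illustrate what "learning prior knowledge" across tasks can mean, the sketch below swaps in Reptile, a simple first-order meta-learning method, on toy one-dimensional linear-regression tasks that stand in for individual grasp demonstrations. The task distribution, the linear model, and every function name (`sample_linear_task`, `sgd_adapt`, `reptile`, `mse`) are assumptions for illustration only, not the patent's method.

```python
import random
from typing import Callable, List, Tuple

Params = Tuple[float, float]          # (w, b) of a toy model y = w * x + b
Task = List[Tuple[float, float]]      # (x, y) samples from one "demonstration"


def sample_linear_task(rng: random.Random, n: int = 10) -> Task:
    """Hypothetical task family: slopes near 2, intercepts near 0."""
    a = rng.uniform(1.5, 2.5)
    c = rng.uniform(-0.5, 0.5)
    xs = [rng.uniform(-1.0, 1.0) for _ in range(n)]
    return [(x, a * x + c) for x in xs]


def mse(params: Params, data: Task) -> float:
    w, b = params
    return sum((w * x + b - y) ** 2 for x, y in data) / len(data)


def sgd_adapt(params: Params, data: Task, lr: float = 0.05, steps: int = 20) -> Params:
    """Inner loop: full-batch gradient descent on one task's squared error."""
    w, b = params
    for _ in range(steps):
        gw = gb = 0.0
        for x, y in data:
            err = (w * x + b) - y
            gw += 2 * err * x / len(data)
            gb += 2 * err / len(data)
        w, b = w - lr * gw, b - lr * gb
    return w, b


def reptile(sample_task: Callable[[random.Random], Task],
            meta_iters: int = 500, meta_lr: float = 0.1, seed: int = 0) -> Params:
    """Outer loop: nudge the shared initialisation toward each task's adapted weights."""
    rng = random.Random(seed)
    w, b = 0.0, 0.0
    for _ in range(meta_iters):
        aw, ab = sgd_adapt((w, b), sample_task(rng))
        w += meta_lr * (aw - w)
        b += meta_lr * (ab - b)
    return w, b
```

After `reptile(sample_linear_task)`, the learned initialisation sits near the centre of the task family, so a new task reaches a lower error after a few adaptation steps than it would starting from zero. In this toy setting, that faster adaptation is the "prior knowledge" the sketch captures.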
