Infrared and visible light image fusion method based on gray level co-occurrence matrix

A technology combining a gray-level co-occurrence matrix with image fusion, applied in image enhancement, image analysis, image data processing, etc. It can solve the problems of losing texture details and neglecting...
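Since the method is built on a gray-level co-occurrence matrix (GLCM), a short illustration of how a GLCM is computed may help. This is a generic sketch under stated assumptions, not the patent's procedure. This excerpt does not specify the pixel offset, the number of gray levels, or which GLCM statistics the method uses. The offset `(dx, dy)`, the `levels` quantization, and the helper names `glcm`, `contrast`, and `asm` are all hypothetical choices made for illustration. The input is assumed to be already quantized to integers in `[0, levels)`.

```python
def glcm(image, dx=1, dy=0, levels=8, symmetric=True, normed=True):
    """Gray-level co-occurrence matrix for one pixel offset (dx, dy).

    image: 2-D list of integer gray levels, assumed to lie in [0, levels).
    Entry [i][j] counts how often a pixel of level i has a neighbour at
    offset (dx, dy) with level j. Offsets falling outside the image are skipped.
    """
    rows, cols = len(image), len(image[0])
    m = [[0.0] * levels for _ in range(levels)]
    for r in range(rows):
        for c in range(cols):
            r2, c2 = r + dy, c + dx
            if 0 <= r2 < rows and 0 <= c2 < cols:
                i, j = image[r][c], image[r2][c2]
                m[i][j] += 1
                if symmetric:  # also count the reverse pair (j, i)
                    m[j][i] += 1
    if normed:  # turn counts into joint probabilities
        total = sum(map(sum, m))
        if total:
            m = [[v / total for v in row] for row in m]
    return m


def contrast(p):
    """GLCM contrast: sum of p(i, j) * (i - j)^2; large for strong local variation."""
    n = len(p)
    return sum(p[i][j] * (i - j) ** 2 for i in range(n) for j in range(n))


def asm(p):
    """Angular second moment: sum of p(i, j)^2; equals 1 for a perfectly uniform region."""
    return sum(v * v for row in p for v in row)


if __name__ == "__main__":
    img = [[0, 0, 1, 1],
           [0, 0, 1, 1],
           [0, 2, 2, 2],
           [2, 2, 3, 3]]
    p = glcm(img, levels=4)
    print(contrast(p), asm(p))
```

Smooth regions yield a GLCM concentrated on the diagonal (low contrast, high ASM), while textured regions spread probability off the diagonal. This is why GLCM statistics are a natural way to quantify texture detail in a fusion rule.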



Problems solved by technology

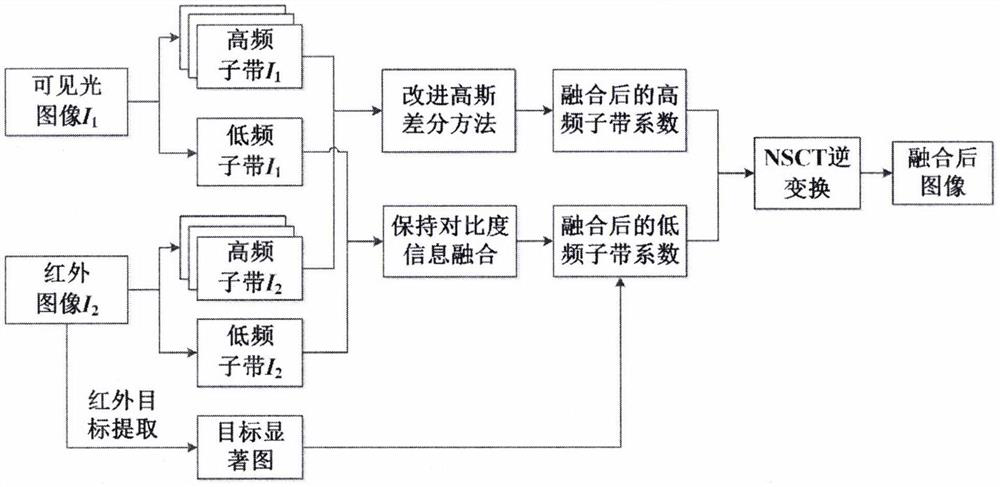

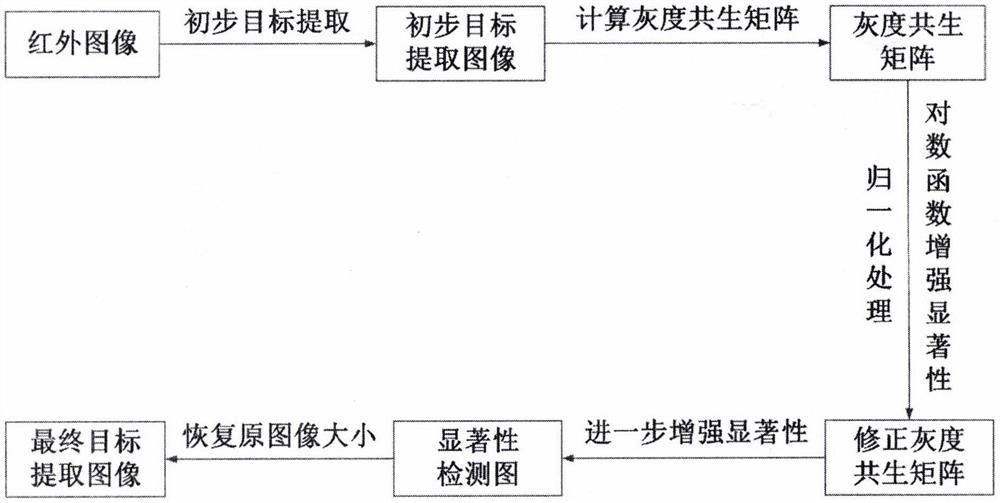

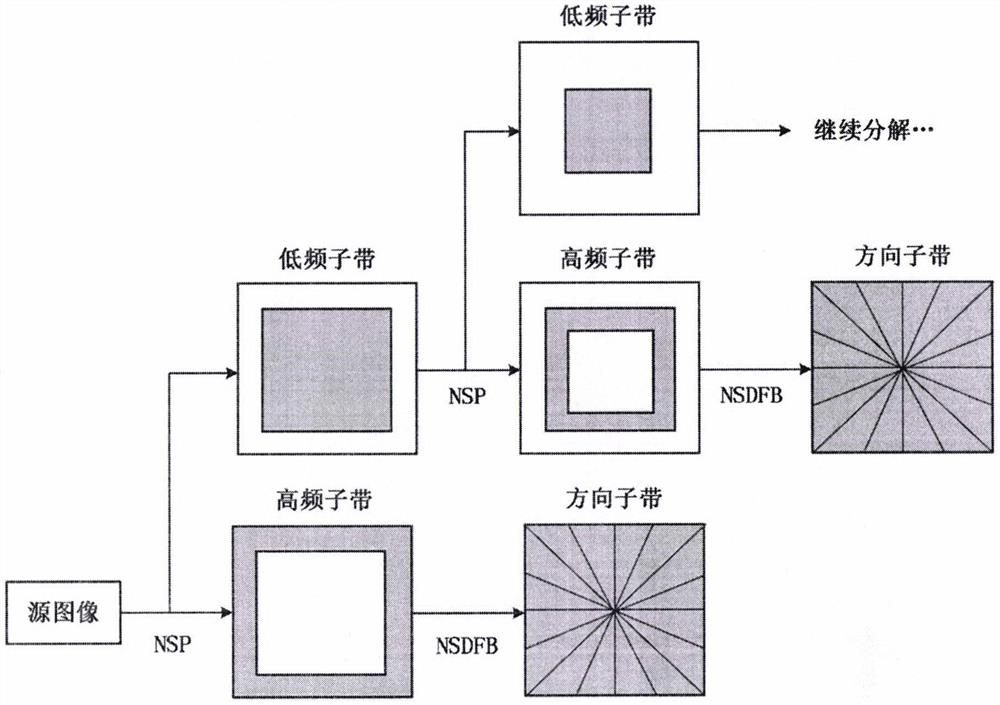


Examples

Embodiment 1

[0140] Example 1: The "Camp" images used in this experiment are shown in Figure 6, where Figure 6(a) is the visible light image and Figure 6(b) is the infrared image. The experimental results are shown in Table 1:

[0141] Table 1: Fusion evaluation indices for the "Camp" images

[0142]

Embodiment 2

[0143] Example 2: The "Trees" images used in this experiment are shown in Figure 7, where Figure 7(a) is the visible light image and Figure 7(b) is the infrared image. The experimental results are shown in Table 2:

[0144] Table 2: Fusion evaluation indices for the "Trees" images

[0145]

[0146] The results in Table 1 and Table 2 show that, compared with seven other classical fusion algorithms, the fusion method of the present invention performs best on the objective evaluation indicators. Its advantage is most pronounced on three of them, the gray-level standard deviation (SD), spatial frequency (SF), and visual information fidelity (VIFF), where it significantly outperforms the other methods. In other words, the method of the present invention not only highlights the infrared target but also preserves texture details, so the fused image is better suited to human observation and of higher quality. The six objective evaluatio...
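Two of the indicators singled out above, SD and SF, have simple closed forms. The sketch below shows one common way to compute them on a grayscale image. The exact formulas used in the patent are not given in this excerpt, so the normalization here is an assumption. SF divides both row and column terms by M·N, while some papers use M·(N−1) and (M−1)·N instead. VIFF is omitted because it depends on a multi-scale visual model that does not fit in a short example. The function names are hypothetical.

```python
import math


def std_dev(img):
    """Gray-level standard deviation (SD), population form.

    Higher SD means gray levels are spread more widely, i.e. higher contrast.
    """
    vals = [v for row in img for v in row]
    mu = sum(vals) / len(vals)
    return math.sqrt(sum((v - mu) ** 2 for v in vals) / len(vals))


def spatial_frequency(img):
    """Spatial frequency SF = sqrt(RF^2 + CF^2).

    RF and CF are RMS horizontal and vertical first differences. Both are
    normalised by M*N here (assumed convention; others exist).
    Higher SF indicates more edge and texture activity.
    """
    m, n = len(img), len(img[0])
    rf2 = sum((img[i][j] - img[i][j - 1]) ** 2
              for i in range(m) for j in range(1, n)) / (m * n)
    cf2 = sum((img[i][j] - img[i - 1][j]) ** 2
              for i in range(1, m) for j in range(n)) / (m * n)
    return math.sqrt(rf2 + cf2)


if __name__ == "__main__":
    fused = [[0, 1], [1, 0]]  # tiny checkerboard stand-in for a fused image
    print(std_dev(fused), spatial_frequency(fused))
```

Both metrics are no-reference measures computed on the fused image alone. A constant image scores zero on both, and images with more contrast and fine detail score higher. This is consistent with the reading above that high SD and SF reflect preserved texture detail.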
