Automatic text summarization method based on a pre-trained language model

A method combining a pre-trained language model with automatic summarization technology, applied in neural learning methods, biological neural network models, natural language data processing, and related fields. It addresses two problems: the decoder producing repetitive or semantically irrelevant summaries, and the encoder failing to learn the source information well. Its stated effects are overcoming the lack of long-distance access to information and improving training speed.


Detailed Description of the Embodiments

[0034] The invention will be described in further detail below in conjunction with the accompanying drawings.

[0035] Figure 1 shows the flow chart of the present invention, a method for automatic text summarization based on a pre-trained language model. The method is divided into two main parts:

[0036] (1) Encode the source text using an encoder built on a pre-trained language model.

[0037] (2) Decode the encoded vectors with an LSTM-based decoder combined with an attention mechanism to generate the text summary.
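The attention step in part (2) can be sketched as follows. This is a minimal illustration under stated assumptions, not the patented implementation: the excerpt does not specify the scoring function, so dot-product scoring between the decoder hidden state s_t and each encoder vector E_i is assumed, and the names `softmax` and `attention_context` are hypothetical.

```python
import math
from typing import List, Tuple

Vector = List[float]


def softmax(scores: List[float]) -> List[float]:
    """Numerically stable softmax over a list of scores."""
    m = max(scores)  # subtract the max so exp() cannot overflow
    exps = [math.exp(s - m) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def attention_context(decoder_state: Vector,
                      encoder_states: List[Vector]) -> Tuple[List[float], Vector]:
    """One attention step for an LSTM decoder.

    ASSUMPTION: dot-product scoring e_i = s_t . E_i; the patent excerpt does
    not state which scoring function is used.
    decoder_state:  decoder hidden state s_t at step t (same size as each E_i)
    encoder_states: encoder outputs [E_1, ..., E_N], one vector per character
    Returns (attention weights a_i, context vector c_t = sum_i a_i * E_i).
    """
    scores = [sum(s * e for s, e in zip(decoder_state, enc)) for enc in encoder_states]
    weights = softmax(scores)
    dim = len(encoder_states[0])
    context = [sum(w * enc[k] for w, enc in zip(weights, encoder_states))
               for k in range(dim)]
    return weights, context


# Usage with toy 2-dimensional vectors (values are illustrative only).
H = [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0]]
weights, context = attention_context([2.0, 0.0], H)
print(weights, context)
```

In this toy input, characters 1 and 3 score equally against s_t and therefore receive equal weight. In a typical attention-based LSTM decoder, the context vector c_t is then combined with s_t to predict the next summary character.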

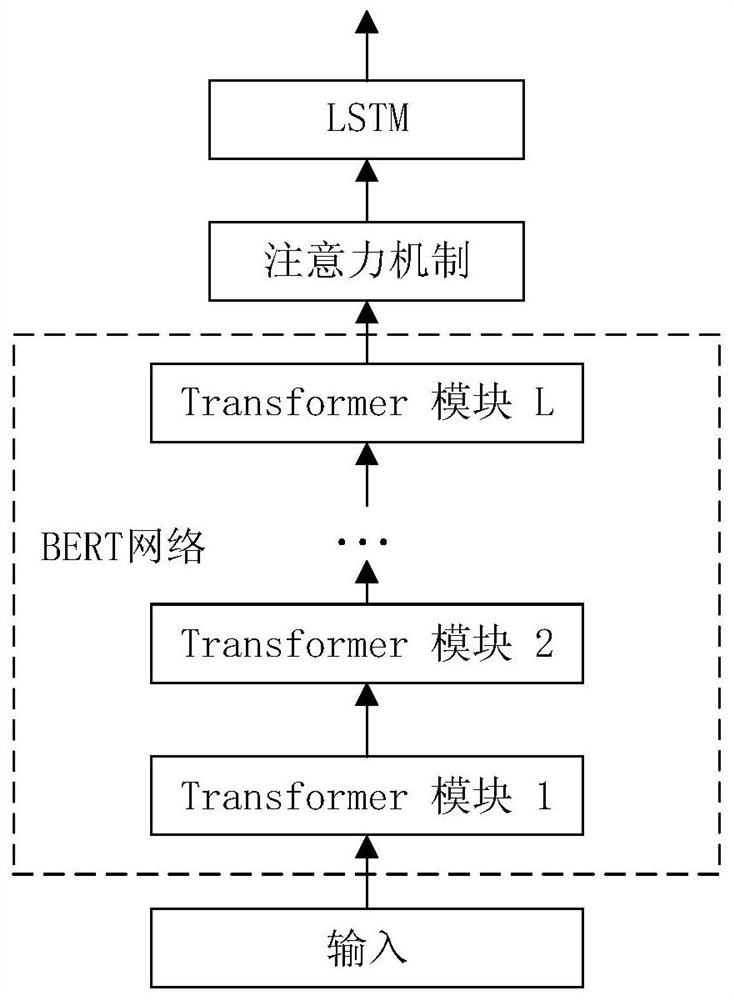

[0038] To automatically generate a general summary for a piece of text, the method shown in Figure 2 can be used. The specific process is as follows:

[0039] (1) Use the BERT pre-trained network to encode the source text.

[0040] The processed source text information can be expressed as $H_0 = [E_1, \ldots, E_N]$, where $E_1$ is the vector representation of the first word (character) in the source text; $E_N$ ...
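How a source text becomes $H_0 = [E_1, \ldots, E_N]$ can be illustrated with a toy version of BERT's input layer. This sketch assumes that $E_i$ denotes BERT's input embedding for character $i$ (token embedding plus segment embedding plus position embedding, as in standard BERT) and that the input is split character by character. The class `ToyBertInputEmbedder` and its random weights are hypothetical stand-ins, not a trained model or any library API.

```python
import random
from typing import Dict, List


def char_tokenize(text: str) -> List[str]:
    """Split text into characters, dropping whitespace (assumed character-level input)."""
    return [ch for ch in text if not ch.isspace()]


class ToyBertInputEmbedder:
    """Hypothetical stand-in for BERT's input embedding layer.

    E_i = token_emb[char_i] + segment_emb[A] + position_emb[i].
    Real BERT also adds [CLS]/[SEP] tokens, uses a fixed vocabulary,
    and applies LayerNorm and dropout; all of that is omitted here.
    """

    def __init__(self, dim: int = 4, max_len: int = 512, seed: int = 0):
        self.dim = dim
        self.max_len = max_len
        self.rng = random.Random(seed)  # random toy weights, not trained ones
        self.position_table = [self._rand_vec() for _ in range(max_len)]
        self.segment_a = self._rand_vec()  # single-segment input uses segment A only
        self.token_table: Dict[str, List[float]] = {}

    def _rand_vec(self) -> List[float]:
        return [self.rng.uniform(-0.1, 0.1) for _ in range(self.dim)]

    def _token_vec(self, ch: str) -> List[float]:
        # Lazily assign a vector to each new character (a real model has a fixed vocab).
        if ch not in self.token_table:
            self.token_table[ch] = self._rand_vec()
        return self.token_table[ch]

    def encode(self, text: str) -> List[List[float]]:
        """Return H_0 = [E_1, ..., E_N], one vector per character."""
        chars = char_tokenize(text)
        if len(chars) > self.max_len:
            raise ValueError("text longer than max_len")
        return [
            [t + s + p for t, s, p in zip(self._token_vec(ch), self.segment_a,
                                          self.position_table[i])]
            for i, ch in enumerate(chars)
        ]


embedder = ToyBertInputEmbedder(dim=4)
H0 = embedder.encode("自动 摘要")  # 4 characters -> 4 vectors E_1..E_4
print(len(H0), len(H0[0]))
```

Because position embeddings are added, the same character at two different positions receives two different vectors. In standard BERT, $H_0$ is then passed through the stacked Transformer layers, and the resulting contextual vectors are what a decoder's attention step consumes.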
