A Decentralized Device Collaborative Deep Learning Inference Method

A deep learning and device technology, applied in the fields of artificial intelligence and edge computing, that addresses two problems: the input data of edge devices are often similar or even repeated, and edge devices cannot be fully utilized. Its effect is to reduce model complexity and the size of intermediate results.


Embodiment Construction

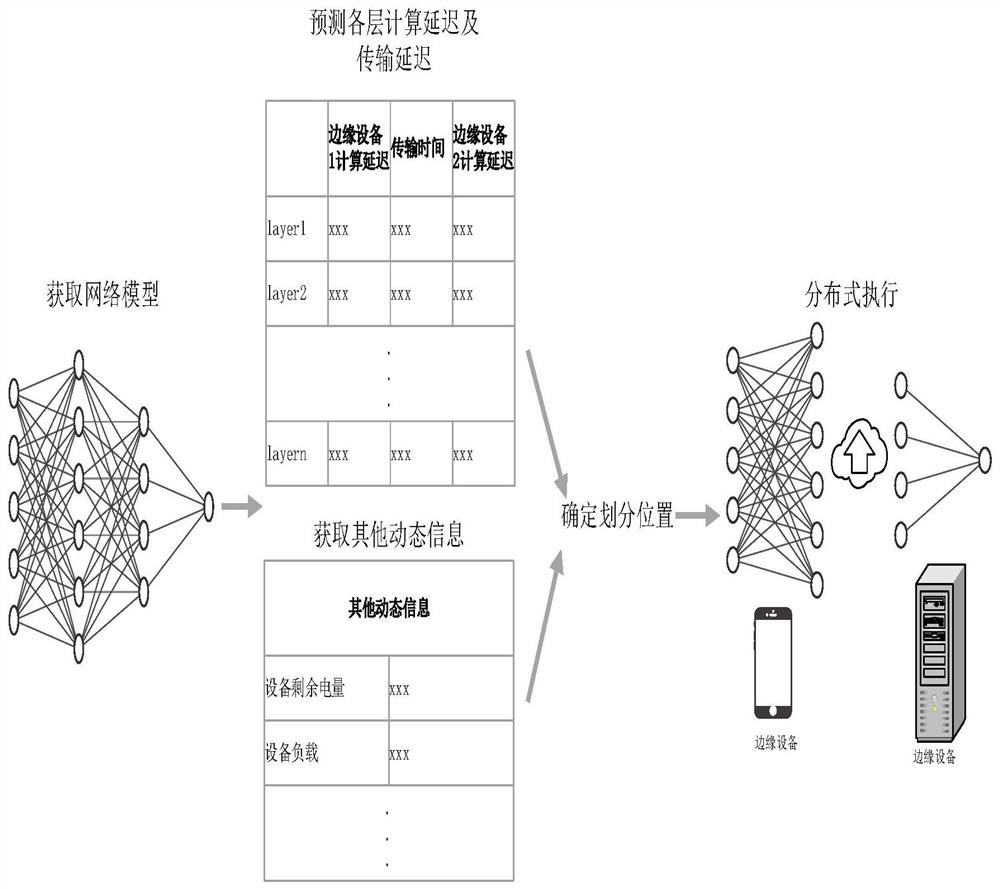

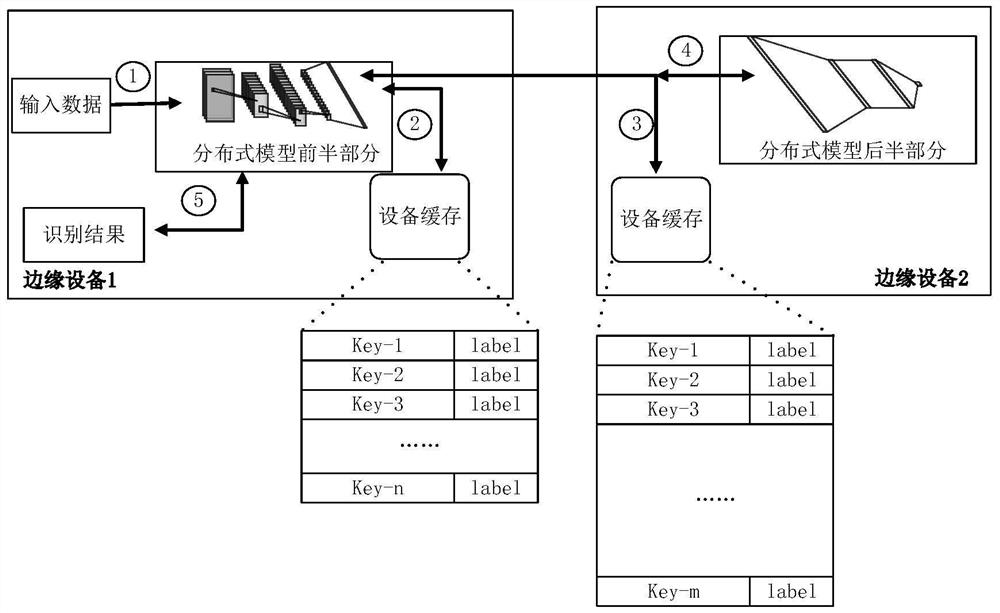

[0016] The present invention consists of two main steps: decentralized device selection, and cache-based distributed neural network computation.
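This excerpt names the cache-based computation step but does not describe its mechanism. Because the summary states that edge devices often receive similar or even repeated inputs, one plausible reading is that results for earlier inputs are reused instead of recomputed. The sketch below illustrates that idea with a hypothetical similarity cache: `SimilarityCache`, `cached_infer`, the cosine-similarity measure, and the `threshold` value are all assumptions for illustration, not the patented method.

```python
import math
from typing import Callable, List, Sequence, Tuple

Vector = Sequence[float]


def cosine_similarity(a: Vector, b: Vector) -> float:
    """Cosine similarity of two equal-length vectors (0.0 if either is all zeros)."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    if norm_a == 0.0 or norm_b == 0.0:
        return 0.0
    return dot / (norm_a * norm_b)


class SimilarityCache:
    """Hypothetical cache that reuses results for inputs similar to earlier ones."""

    def __init__(self, threshold: float = 0.95) -> None:
        self.threshold = threshold
        self.entries: List[Tuple[List[float], object]] = []

    def lookup(self, x: Vector) -> Tuple[bool, object]:
        # Find the most similar stored input; report a hit only above the threshold.
        best_sim, best_result = -1.0, None
        for key, result in self.entries:
            sim = cosine_similarity(key, x)
            if sim > best_sim:
                best_sim, best_result = sim, result
        if best_sim >= self.threshold:
            return True, best_result
        return False, None

    def insert(self, x: Vector, result: object) -> None:
        self.entries.append((list(x), result))


def cached_infer(
    x: Vector, model: Callable[[Vector], object], cache: SimilarityCache
) -> Tuple[object, bool]:
    """Run `model` only on a cache miss; returns (result, was_cache_hit)."""
    hit, result = cache.lookup(x)
    if hit:
        return result, True
    result = model(x)
    cache.insert(x, result)
    return result, False


if __name__ == "__main__":
    cache = SimilarityCache(threshold=0.99)
    toy_model = lambda v: sum(v)  # stand-in for a real neural network
    print(cached_infer([1.0, 2.0, 3.0], toy_model, cache))   # (6.0, False)
    print(cached_infer([1.0, 2.0, 3.01], toy_model, cache))  # (6.0, True)
    print(cached_infer([3.0, -1.0, 0.0], toy_model, cache))  # (2.0, False)
```

The second call is served from the cache because its input is nearly identical to the first, so the model runs only twice. A real deployment would likely cache intermediate activations at a chosen layer and bound the cache size; both choices are left open here.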

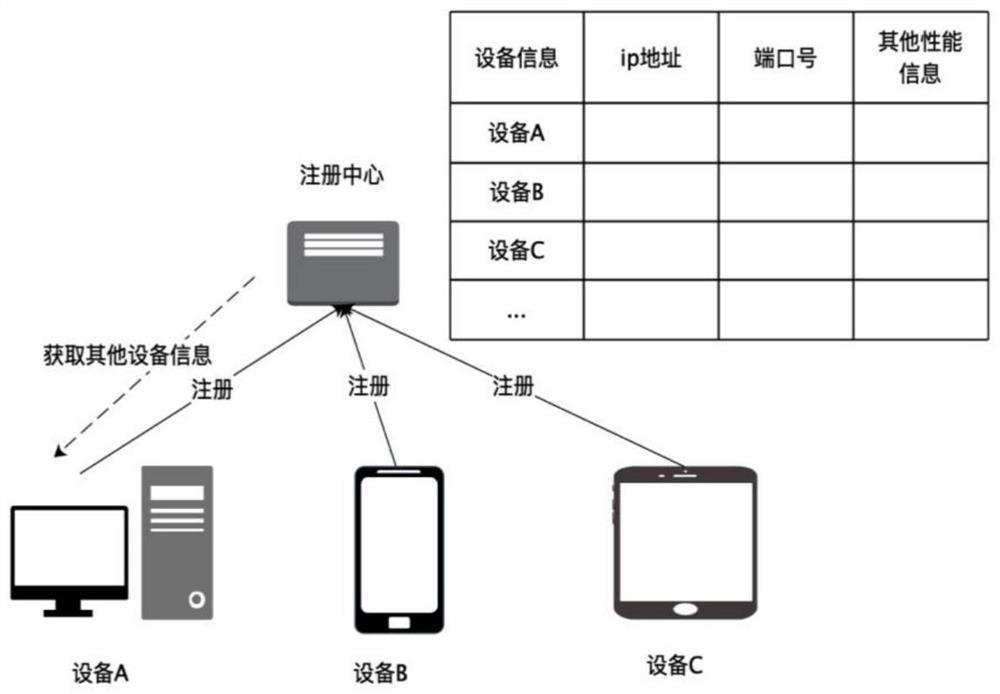

[0017] Decentralized device selection

[0018] Because devices in edge scenarios are generally heterogeneous, that is, they differ in computing, network, and storage performance, cooperative devices must be selected appropriately before a collaborative intelligent computing task is initiated. Each device can act as either a task-initiating device or a task-cooperating device. As shown in Figure 1, so that other devices know of its existence, each device registers its IP address, port number, and other performance information with the registration center, and establishes a stable connection provided that network reliability is ensured. The task-initiating device obtains information about the other devices from the registration center and first screens out the devi...
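The registration and lookup flow described above can be sketched as follows. This is a minimal illustration, assuming an in-memory registry and three hypothetical performance fields (`gflops`, `bandwidth_mbps`, `free_memory_mb`); the excerpt is cut off before it states the actual screening criteria, so the threshold filter and the compute-first ranking in `select_cooperators` are placeholders, not the patented rule.

```python
from dataclasses import dataclass
from typing import Dict, List


@dataclass
class DeviceInfo:
    """Information a device registers (performance fields are hypothetical)."""
    device_id: str
    ip: str
    port: int
    gflops: float          # hypothetical compute-performance metric
    bandwidth_mbps: float  # hypothetical network-performance metric
    free_memory_mb: float  # hypothetical storage-performance metric


class RegistrationCenter:
    """Hypothetical in-memory registry; the source does not specify its implementation."""

    def __init__(self) -> None:
        self._devices: Dict[str, DeviceInfo] = {}

    def register(self, info: DeviceInfo) -> None:
        # Re-registering a device overwrites its previous entry.
        self._devices[info.device_id] = info

    def list_devices(self) -> List[DeviceInfo]:
        return list(self._devices.values())


def select_cooperators(
    center: RegistrationCenter,
    initiator_id: str,
    min_gflops: float,
    min_bandwidth_mbps: float,
    min_free_memory_mb: float,
) -> List[DeviceInfo]:
    """Screen registered devices by assumed thresholds, then rank by compute."""
    candidates = [
        d
        for d in center.list_devices()
        if d.device_id != initiator_id  # the initiator does not cooperate with itself
        and d.gflops >= min_gflops
        and d.bandwidth_mbps >= min_bandwidth_mbps
        and d.free_memory_mb >= min_free_memory_mb
    ]
    # Strongest compute first (an assumed ordering).
    return sorted(candidates, key=lambda d: d.gflops, reverse=True)


if __name__ == "__main__":
    center = RegistrationCenter()
    center.register(DeviceInfo("A", "192.168.1.10", 9000, 50.0, 80.0, 1024.0))
    center.register(DeviceInfo("B", "192.168.1.11", 9000, 120.0, 100.0, 2048.0))
    center.register(DeviceInfo("C", "192.168.1.12", 9000, 30.0, 200.0, 4096.0))
    center.register(DeviceInfo("D", "192.168.1.13", 9000, 80.0, 50.0, 1024.0))
    chosen = select_cooperators(center, "A", 40.0, 40.0, 512.0)
    print([d.device_id for d in chosen])  # ['B', 'D']
```

Device C is rejected for insufficient compute and A is excluded as the initiator. In practice the registry would be a networked service and the performance fields would be refreshed periodically, since edge devices' load and connectivity change over time.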
