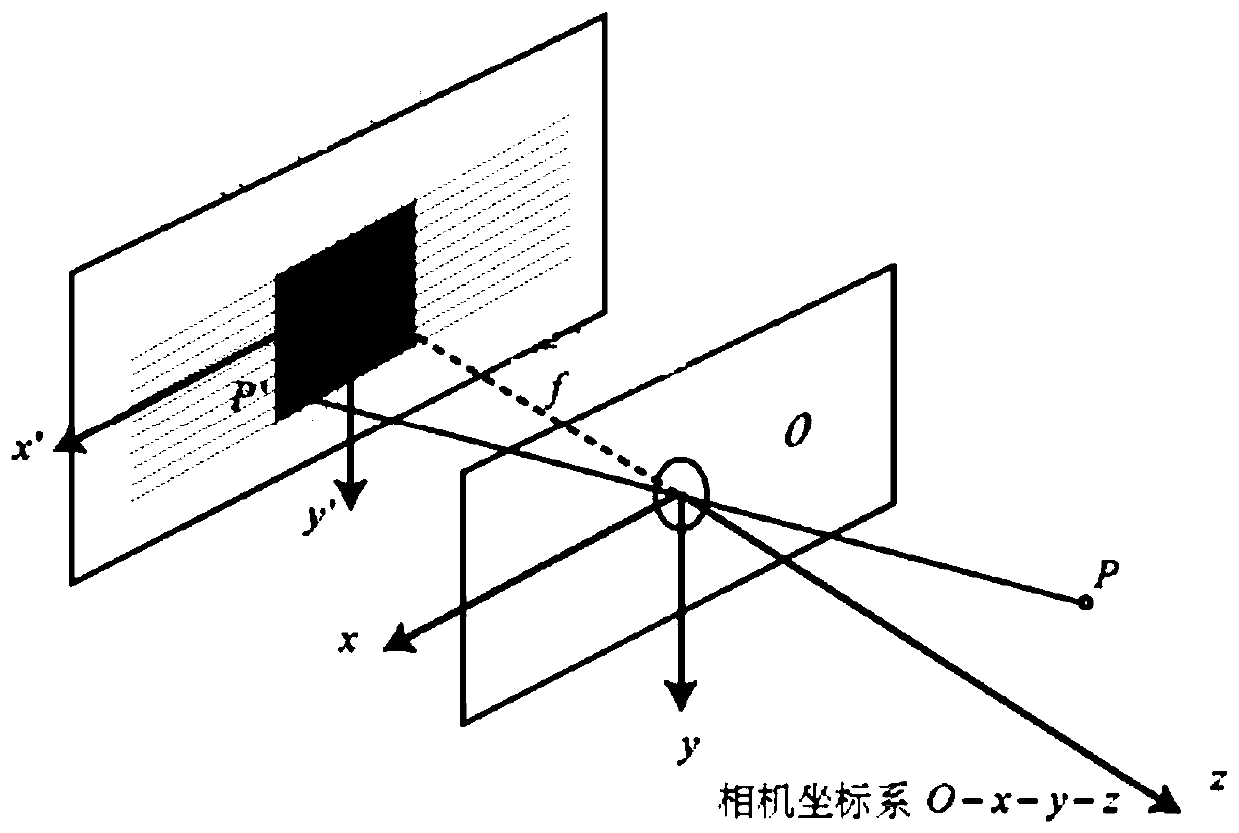

Semantic mapping method based on visual SLAM and two-dimensional semantic segmentation

A semantic segmentation and semantic mapping technology in the field where computer vision and deep learning intersect. It addresses problems that degrade the quality of the constructed map and improves robustness and system performance.

Detailed Description of the Embodiments

[0077] In order to make the object, technical solution and advantages of the present invention clearer, the present invention will be described in further detail below in conjunction with the accompanying drawings and embodiments. It should be understood that the specific embodiments described here are only used to explain the invention, not to limit the invention.

[0078] A semantic map is a map that carries rich semantic information: it abstracts the environment into spatial geometric relationships together with the types and positions of the objects present in it. Because a semantic map contains both spatial and semantic information about the environment, it allows a mobile robot, like a human, to know both where the objects in its environment are and what those objects are.
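To make the pairing of spatial and semantic information concrete, the following Python sketch models a semantic map as a list of labelled object positions. The class names `SemanticObject` and `SemanticMap`, the metre units, and the two queries are hypothetical illustrations, not structures defined in the patent: `find` answers a semantic question (where are all objects of a given type?) and `nearest` answers a spatial one (which object is closest to a given point?).

```python
from __future__ import annotations

import math
from dataclasses import dataclass, field
from typing import List, Optional, Tuple

Point = Tuple[float, float, float]  # (x, y, z) in the map frame; metres assumed


@dataclass
class SemanticObject:
    """One map entry: what the object is (semantics) and where it is (geometry)."""
    label: str       # object type, e.g. "chair" (illustrative class name)
    position: Point  # object position in the map frame


@dataclass
class SemanticMap:
    """Hypothetical container holding both spatial and semantic information."""
    objects: List[SemanticObject] = field(default_factory=list)

    def add(self, label: str, position: Point) -> None:
        self.objects.append(SemanticObject(label, position))

    def find(self, label: str) -> List[Point]:
        """Semantic query: positions of every object with the given type."""
        return [o.position for o in self.objects if o.label == label]

    def nearest(self, point: Point) -> Optional[SemanticObject]:
        """Spatial query: the object closest to `point`, or None if the map is empty."""
        if not self.objects:
            return None
        return min(self.objects, key=lambda o: math.dist(o.position, point))
```

For example, after adding two chairs at `(1.0, 2.0, 0.0)` and `(4.0, 4.0, 0.0)` and a table at `(3.0, 1.0, 0.0)`, `find("chair")` returns both chair positions, while `nearest((3.2, 1.1, 0.0))` returns the table.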

[0079] Aiming at the problems and deficiencies in the prior art, the present invention proposes a semantic mapping system based on visual SLAM and two-dime...
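The available text of [0079] is truncated, so the exact pipeline is not shown here. As a minimal sketch of one common way to combine the two ingredients named in the title, the Python code below assumes that each keyframe supplies a per-pixel 2D segmentation label image, an aligned depth image in metres, pinhole intrinsics `(fx, fy, cx, cy)`, and a camera-to-world pose `(R, t)` estimated by visual SLAM. Each labelled pixel is back-projected into the world frame and its label is voted into a voxel grid, and the majority label is taken as the voxel's class. All names (`backproject`, `transform`, `VoxelLabelMap`) and the majority-vote fusion rule are hypothetical and are not claimed to be the patent's method.

```python
import math
from collections import Counter, defaultdict


def backproject(u, v, depth, fx, fy, cx, cy):
    """Pinhole back-projection of pixel (u, v) with depth (metres) into the camera frame."""
    x = (u - cx) * depth / fx
    y = (v - cy) * depth / fy
    return (x, y, depth)


def transform(pose, p):
    """Apply a camera-to-world pose (R, t): R is a 3x3 nested list, t a 3-vector."""
    R, t = pose
    return tuple(sum(R[i][j] * p[j] for j in range(3)) + t[i] for i in range(3))


class VoxelLabelMap:
    """Hypothetical voxel grid that fuses 2D segmentation labels by majority vote."""

    def __init__(self, voxel_size):
        self.voxel_size = voxel_size
        self.votes = defaultdict(Counter)  # voxel index -> per-label vote counts

    def _key(self, p):
        # Integer voxel index of a world point.
        return tuple(math.floor(c / self.voxel_size) for c in p)

    def integrate(self, labels, depth, intrinsics, pose):
        """Fuse one keyframe: labels[v][u] is the pixel's class, depth[v][u] its depth."""
        fx, fy, cx, cy = intrinsics
        for v, row in enumerate(labels):
            for u, label in enumerate(row):
                d = depth[v][u]
                if d <= 0:  # skip pixels without a valid depth measurement
                    continue
                p_world = transform(pose, backproject(u, v, d, fx, fy, cx, cy))
                self.votes[self._key(p_world)][label] += 1

    def label_of(self, p):
        """Majority label of the voxel containing world point p, or None if never observed."""
        counts = self.votes.get(self._key(p))
        if not counts:
            return None
        return counts.most_common(1)[0][0]
```

Majority voting is used in this sketch because repeated observations of the same voxel can outvote an occasional mis-segmented frame; probabilistic (for example Bayesian) label fusion is a common alternative.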

© 2024 PatSnap. All rights reserved.