Lane detection method and system based on multi-level fusion of vision and lidar

A lidar-based lane detection technology, applied in radio wave measurement systems, measuring devices, and the re-radiation of electromagnetic waves.


Embodiment 2

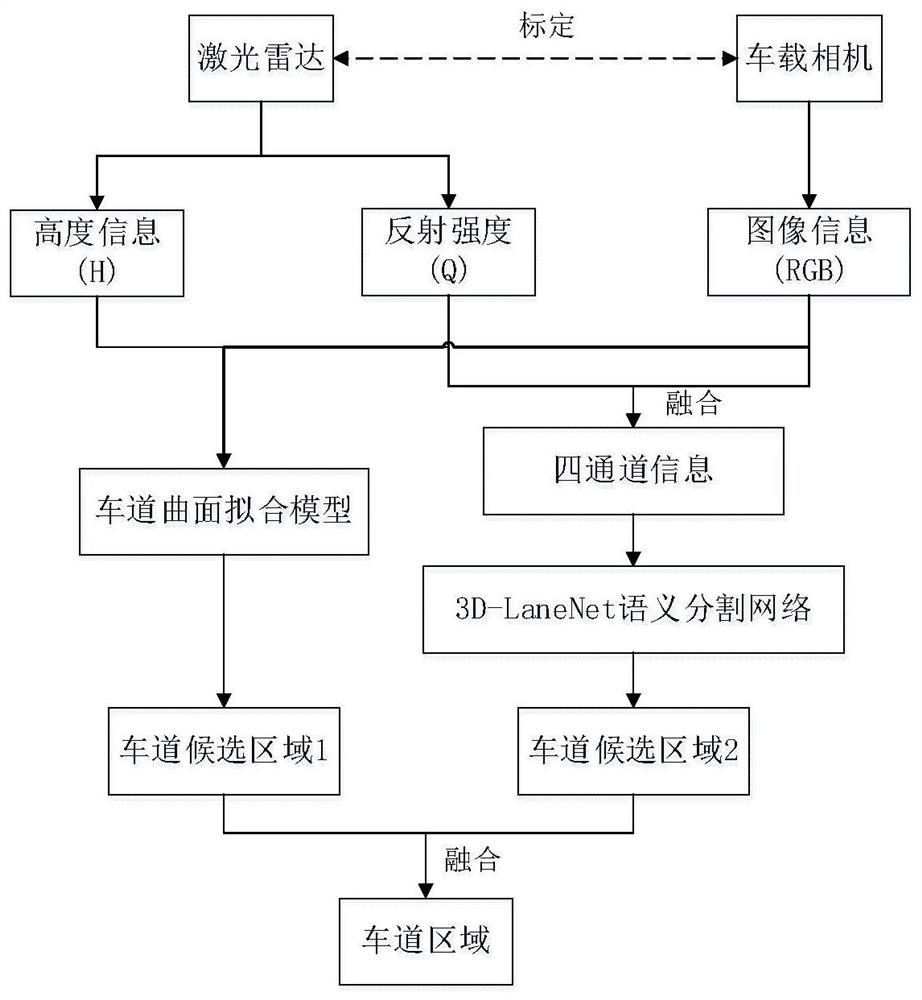

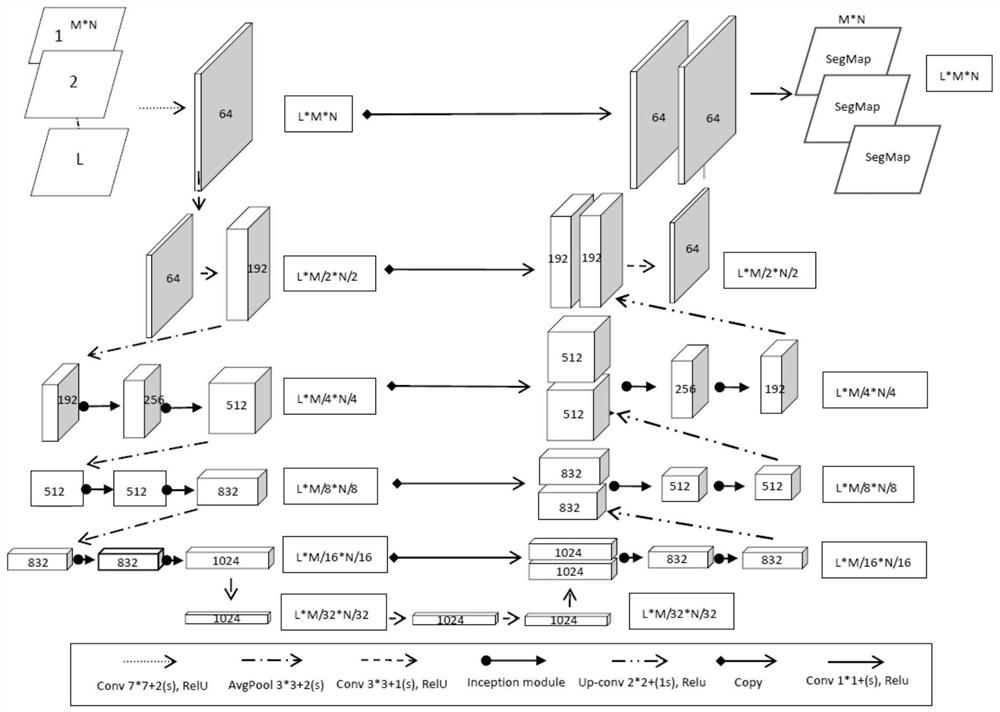

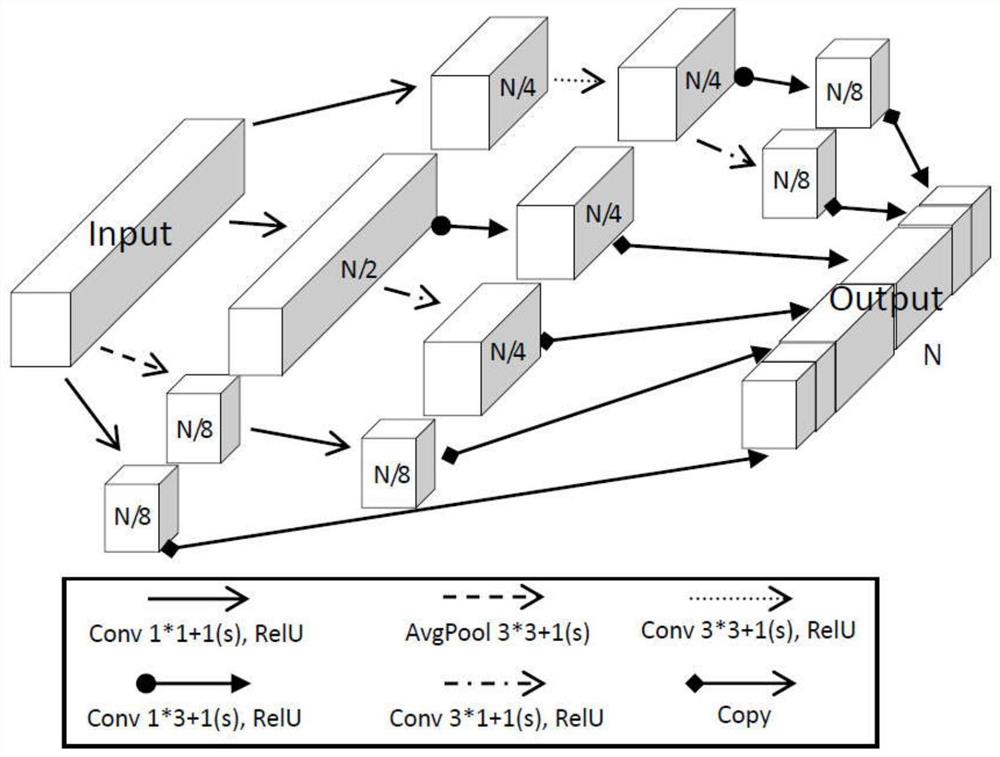

[0086] Embodiment 2 of the present invention proposes a lane detection system based on multi-level fusion of vision and lidar. The system includes a lidar, a vehicle-mounted camera and a lane detection module; the lane detection module includes the semantic segmentation network 3D-LaneNet, a calibration unit, a first lane candidate area detection unit, a second lane candidate area detection unit and a lane fusion unit;
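The composition of the lane detection module can be sketched as a small pipeline in which each named unit is a pluggable function. This is a minimal illustration, not the patented implementation: the data layouts, the field names (`first_candidates`, `second_candidates`, etc.), the assumption that the image-based unit wraps the segmentation network, and the use of a logical OR as the fusion rule are all hypothetical.

```python
from dataclasses import dataclass
from typing import Callable, Tuple

import numpy as np

# Hypothetical data layouts (not specified in the text):
#   point cloud: (N, 4) array of x, y, z, reflection intensity
#   image:       (H, W, 3) RGB array
#   candidate:   (H, W) boolean mask of lane pixels
PointCloud = np.ndarray
Image = np.ndarray
Mask = np.ndarray


@dataclass
class LaneDetectionModule:
    """Wires the units named in Embodiment 2 together; each unit is a pluggable callable."""
    calibrate: Callable[[PointCloud, Image], Tuple[PointCloud, Image]]  # calibration unit
    first_candidates: Callable[[PointCloud, Image], Mask]  # point-cloud-based candidate unit
    second_candidates: Callable[[Image], Mask]  # image-based unit (assumed to wrap the segmentation network)
    fuse: Callable[[Mask, Mask], Mask]  # lane fusion unit

    def run(self, cloud: PointCloud, image: Image) -> Mask:
        cloud, image = self.calibrate(cloud, image)
        first = self.first_candidates(cloud, image)
        second = self.second_candidates(image)
        return self.fuse(first, second)


# Usage with placeholder units; np.logical_or as the fusion rule is an assumption.
image = np.zeros((4, 6, 3), dtype=np.uint8)
image[:, 2, 0] = 255  # a bright vertical stripe in the red channel
cloud = np.zeros((0, 4))
module = LaneDetectionModule(
    calibrate=lambda c, i: (c, i),
    first_candidates=lambda c, i: np.zeros(i.shape[:2], dtype=bool),
    second_candidates=lambda i: i[..., 0] > 128,
    fuse=np.logical_or,
)
lanes = module.run(cloud, image)
```

Keeping each unit as an injected callable makes it easy to swap, for example, a different fusion rule without touching the rest of the pipeline.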

[0087] The lidar is used to acquire point cloud data;

[0088] The vehicle-mounted camera is used to acquire video images;

[0089] The calibration unit is used to calibrate the acquired point cloud data and video images against each other;
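Once the lidar and camera have been calibrated, each lidar point can be mapped to an image pixel so that point cloud attributes and image colors can be combined. The sketch below assumes a standard pinhole camera model with known intrinsics `K` and lidar-to-camera extrinsics `R`, `t`; the function names `project_points` and `sample_rgb` and the toy matrices are hypothetical and only illustrate how calibration results are typically used, not how this patent computes them.

```python
from typing import Tuple

import numpy as np


def project_points(points_xyz: np.ndarray, K: np.ndarray, R: np.ndarray, t: np.ndarray,
                   image_shape: Tuple[int, int]) -> Tuple[np.ndarray, np.ndarray]:
    """Project lidar points into pixel coordinates with a pinhole model.

    points_xyz: (N, 3) in the lidar frame. K: (3, 3) intrinsics. R: (3, 3), t: (3,)
    lidar-to-camera extrinsics. Returns (M, 2) pixel coords (u, v) and the indices
    of the input points that lie in front of the camera and inside the image.
    """
    cam = points_xyz @ R.T + t          # lidar frame -> camera frame
    in_front = cam[:, 2] > 0            # drop points behind the image plane
    uvw = cam[in_front] @ K.T           # camera frame -> homogeneous pixel coords
    uv = uvw[:, :2] / uvw[:, 2:3]       # perspective division
    h, w = image_shape
    inside = (uv[:, 0] >= 0) & (uv[:, 0] < w) & (uv[:, 1] >= 0) & (uv[:, 1] < h)
    idx = np.flatnonzero(in_front)[inside]
    return uv[inside], idx


def sample_rgb(image: np.ndarray, uv: np.ndarray) -> np.ndarray:
    """Look up the RGB value at each projected pixel (nearest pixel, row = v, col = u)."""
    u = uv[:, 0].astype(int)
    v = uv[:, 1].astype(int)
    return image[v, u]


# Toy example: identity extrinsics (lidar frame == camera frame), for illustration only.
K = np.array([[100.0, 0.0, 50.0], [0.0, 100.0, 40.0], [0.0, 0.0, 1.0]])
R, t = np.eye(3), np.zeros(3)
pts = np.array([[0.0, 0.0, 10.0], [1.0, 0.0, 10.0], [0.0, 0.0, -5.0], [10.0, 0.0, 1.0]])
uv, idx = project_points(pts, K, R, t, image_shape=(80, 100))
img = np.zeros((80, 100, 3), dtype=np.uint8)
img[40, 50] = 255
colors = sample_rgb(img, uv)
```

Here the third point is discarded because it lies behind the camera, and the fourth because it projects outside the 100 x 80 image.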

[0090] The first lane candidate area detection unit is used to fuse the height information and reflection intensity information of the point cloud data with the RGB information of the video image to construct a point cloud clustering model, obtain the lane point cloud based on the point cloud clustering model, and apply the least squares method to the lane point cloud ...
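Two steps of this paragraph can be illustrated directly: building a per-point feature vector from height, reflection intensity and RGB, and fitting a lane curve to the lane point cloud by least squares. The clustering model itself is not specified in this excerpt, so it is left out. The function names, the [0, 1] color scaling, and the choice of a quadratic lane model (lateral offset `y` as a polynomial in longitudinal distance `x`) are assumptions for illustration.

```python
import numpy as np


def fuse_features(points: np.ndarray, rgb: np.ndarray) -> np.ndarray:
    """Per-point feature vector [height, reflectance, R, G, B].

    points: (N, 4) rows of x, y, z, intensity. rgb: (N, 3) colors sampled at each
    point's projected pixel (after lidar-camera calibration), scaled here to [0, 1].
    """
    height = points[:, 2:3]
    intensity = points[:, 3:4]
    return np.hstack([height, intensity, rgb.astype(float) / 255.0])


def fit_lane(x: np.ndarray, y: np.ndarray, degree: int = 2) -> np.ndarray:
    """Least-squares fit of lateral offset y as a polynomial in longitudinal distance x.

    Returns coefficients c such that y ~= c[0] + c[1]*x + ... + c[degree]*x**degree.
    """
    A = np.vander(x, degree + 1, increasing=True)  # columns: 1, x, x^2, ...
    coeffs, *_ = np.linalg.lstsq(A, y, rcond=None)
    return coeffs


# Synthetic, noise-free lane points (values are illustrative only).
x = np.linspace(0.0, 30.0, 31)
y = 1.5 + 0.1 * x + 0.002 * x**2
coeffs = fit_lane(x, y)
points = np.column_stack([x, y, np.full_like(x, -1.6), np.full_like(x, 0.8)])
feats = fuse_features(points, np.full((x.size, 3), 255))
```

On this noise-free data the fit recovers the generating coefficients; with real lane points the residuals of `np.linalg.lstsq` give a rough measure of how well the chosen polynomial degree describes the lane.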
