Image labeling method, device and system and host

An image tagging method and host, applied in the field of image processing, address problems such as high tagging cost, negative impact on detection results, and low data tagging efficiency, thereby improving defect detection results, reducing tagging cost, and improving tagging efficiency.


Examples

Embodiment 1

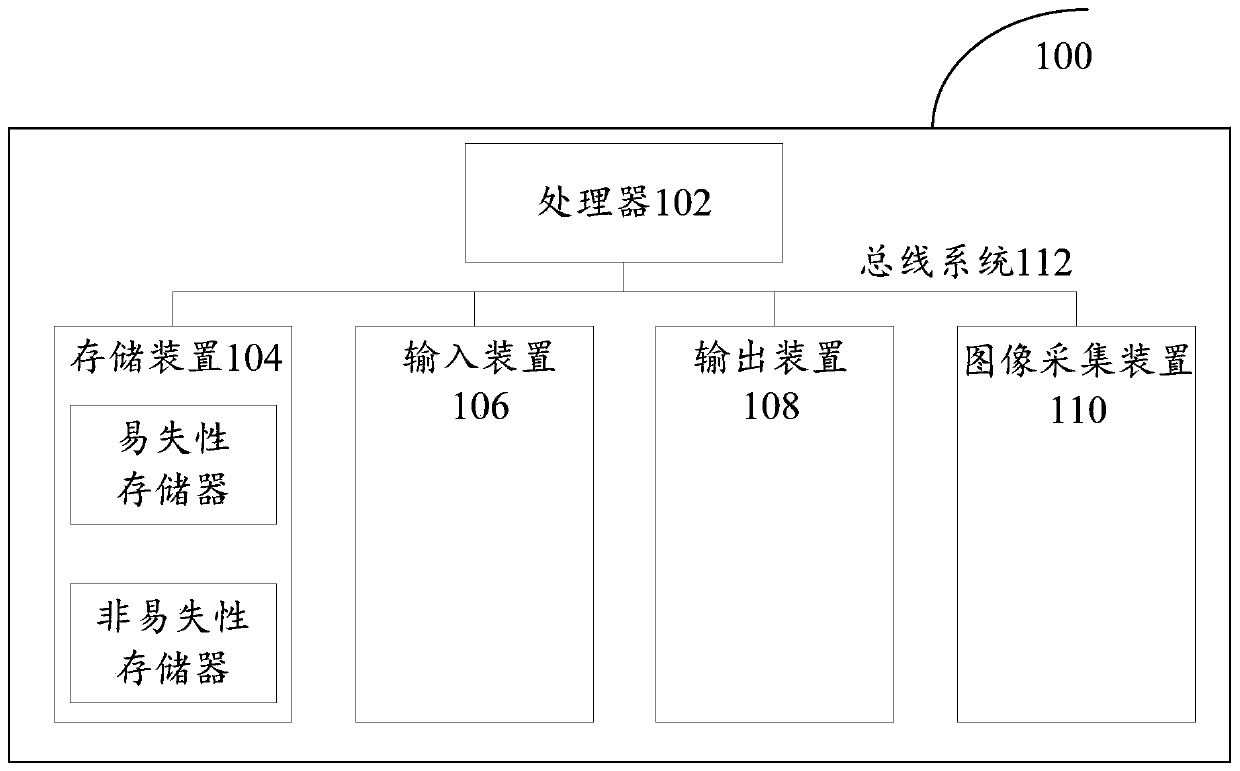

[0030] First, with reference to Figure 1, an example electronic device 100 for implementing the image tagging method and device of the embodiments of the present invention is described.

[0031] Figure 1 is a schematic structural diagram of an electronic device. The electronic device 100 includes one or more processors 102, one or more storage devices 104, an input device 106, an output device 108, and an image acquisition device 110. These components are interconnected through a bus system 112 and/or other forms of connection mechanisms (not shown). It should be noted that the components and structure of the electronic device 100 shown in Figure 1 are only exemplary, not limiting; the electronic device may have only some of the components shown in Figure 1, and may also have other components and structures not shown in Figure 1.

[0032] The processor 102 may be a central processing unit (CPU) or another form of processing unit with data processing capabilities and/or instruction execution capabilit...

Embodiment 2

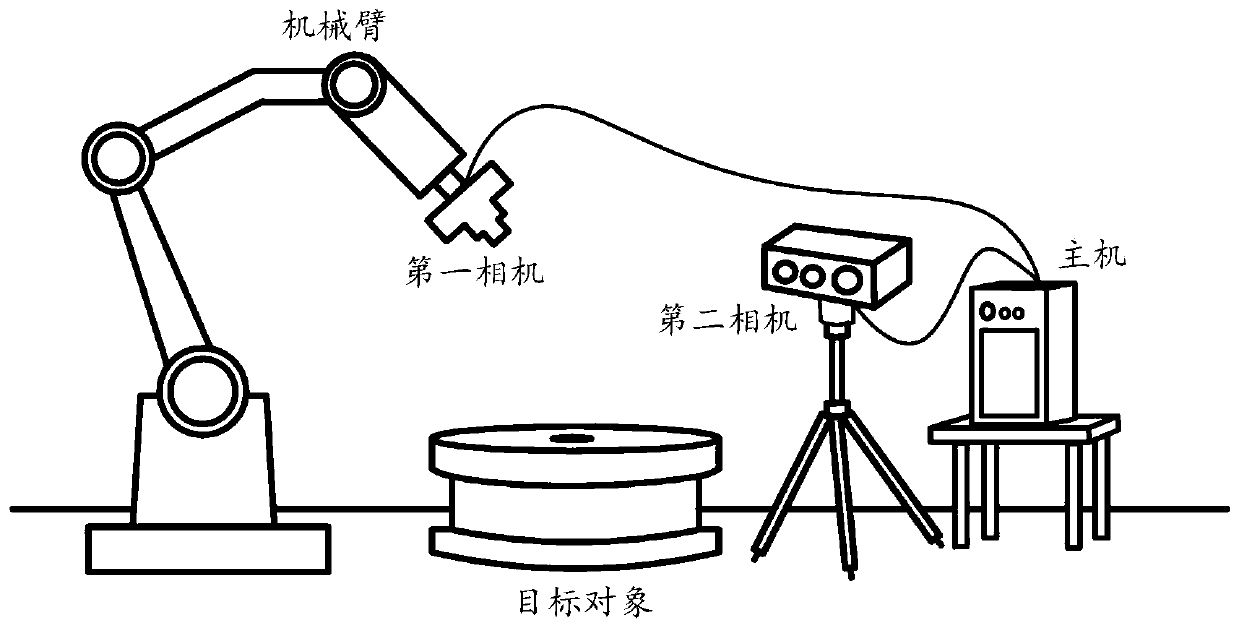

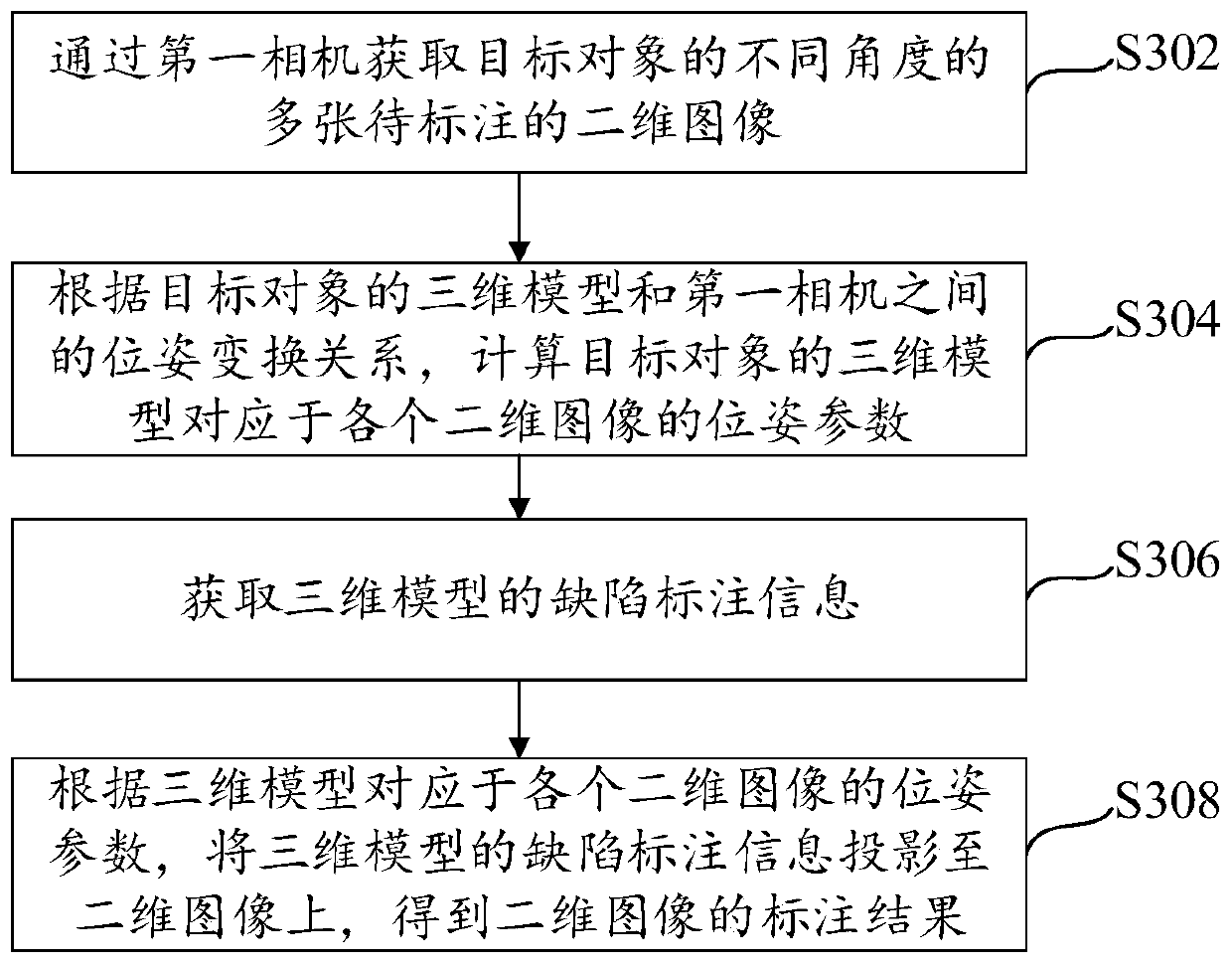

[0039] First, for ease of understanding, this embodiment provides an image tagging system and illustrates an actual application scenario of an image tagging method. Referring to Figure 2, the image tagging system includes a host, together with a 2D image acquisition device and a 3D data acquisition device connected to the host. For convenience of description, the 2D image acquisition device is also called the first camera, and the 3D data acquisition device is also called the second camera. In practical applications, the first camera can be a monocular camera, a binocular camera or a depth camera; to improve the flexibility of the first camera during image acquisition, the first camera can be mounted on the end flange of the robot arm. In consideration of cost and manipulation complexity, the first camera may be a monocular camera. The second camera is generally a depth camera. In this embodiment, the first camera is mainly used to collect tw...

Example 1

[0063] The pose transformation relationship between the 3D model and the first camera is determined based on the pose parameters of the 3D model in the world coordinate system, the first pose transformation relationship, and the second pose transformation relationship. In practical applications, the pose parameters of the 3D model in the world coordinate system can first be converted into pose parameters in the robot arm coordinate system according to the first pose transformation relationship; the pose parameters of the 3D model in the robot arm coordinate system can then be converted into pose parameters in the first camera coordinate system according to the second pose transformation relationship, thereby realizing the pose transformation between the 3D model and the first camera.
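
The two-step conversion described above can be sketched as a chain of 4x4 homogeneous transforms. This is only an illustrative sketch, not the implementation of the invention: it assumes that each pose and each pose transformation relationship is stored as a 4x4 matrix, that the first relationship maps world coordinates to robot arm coordinates, and that the second maps robot arm coordinates to first camera coordinates. The function names (`matmul`, `translation`, `model_pose_in_camera`) and the numeric values are hypothetical and do not come from the source.

```python
from typing import List

Matrix = List[List[float]]


def matmul(a: Matrix, b: Matrix) -> Matrix:
    """Multiply two 4x4 homogeneous transformation matrices."""
    return [
        [sum(a[i][k] * b[k][j] for k in range(4)) for j in range(4)]
        for i in range(4)
    ]


def translation(x: float, y: float, z: float) -> Matrix:
    """Build a pure-translation homogeneous transform (hypothetical helper)."""
    return [
        [1.0, 0.0, 0.0, x],
        [0.0, 1.0, 0.0, y],
        [0.0, 0.0, 1.0, z],
        [0.0, 0.0, 0.0, 1.0],
    ]


def model_pose_in_camera(
    arm_from_world: Matrix,   # assumed form of the first pose transformation
    cam_from_arm: Matrix,     # assumed form of the second pose transformation
    model_in_world: Matrix,   # pose of the 3D model in the world frame
) -> Matrix:
    """Convert the model pose from the world frame to the first camera frame."""
    # Step 1: world frame -> robot arm frame (first relationship).
    model_in_arm = matmul(arm_from_world, model_in_world)
    # Step 2: robot arm frame -> first camera frame (second relationship).
    return matmul(cam_from_arm, model_in_arm)


if __name__ == "__main__":
    # Made-up example values, chosen only to make the arithmetic easy to follow.
    model_in_world = translation(1.0, 2.0, 0.0)
    arm_from_world = translation(-1.0, 0.0, 0.0)
    cam_from_arm = translation(0.0, -2.0, 0.5)

    model_in_cam = model_pose_in_camera(arm_from_world, cam_from_arm, model_in_world)
    # The last column of the top three rows holds the model position in the camera frame.
    print([row[3] for row in model_in_cam[:3]])
```

If the stored relationships point in the opposite direction (for example, from robot arm coordinates to world coordinates), each matrix would need to be inverted before being chained in this way.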

