Data caching method based on local area network

A data caching technology for local area networks, applied in fields such as database updating, data exchange details, and data exchange networks. It addresses problems such as lack of persistence, data loss, and the poor fit of existing approaches for caching small amounts of data, and it offers a simple structure with a clear effect.

Examples

Embodiment 1

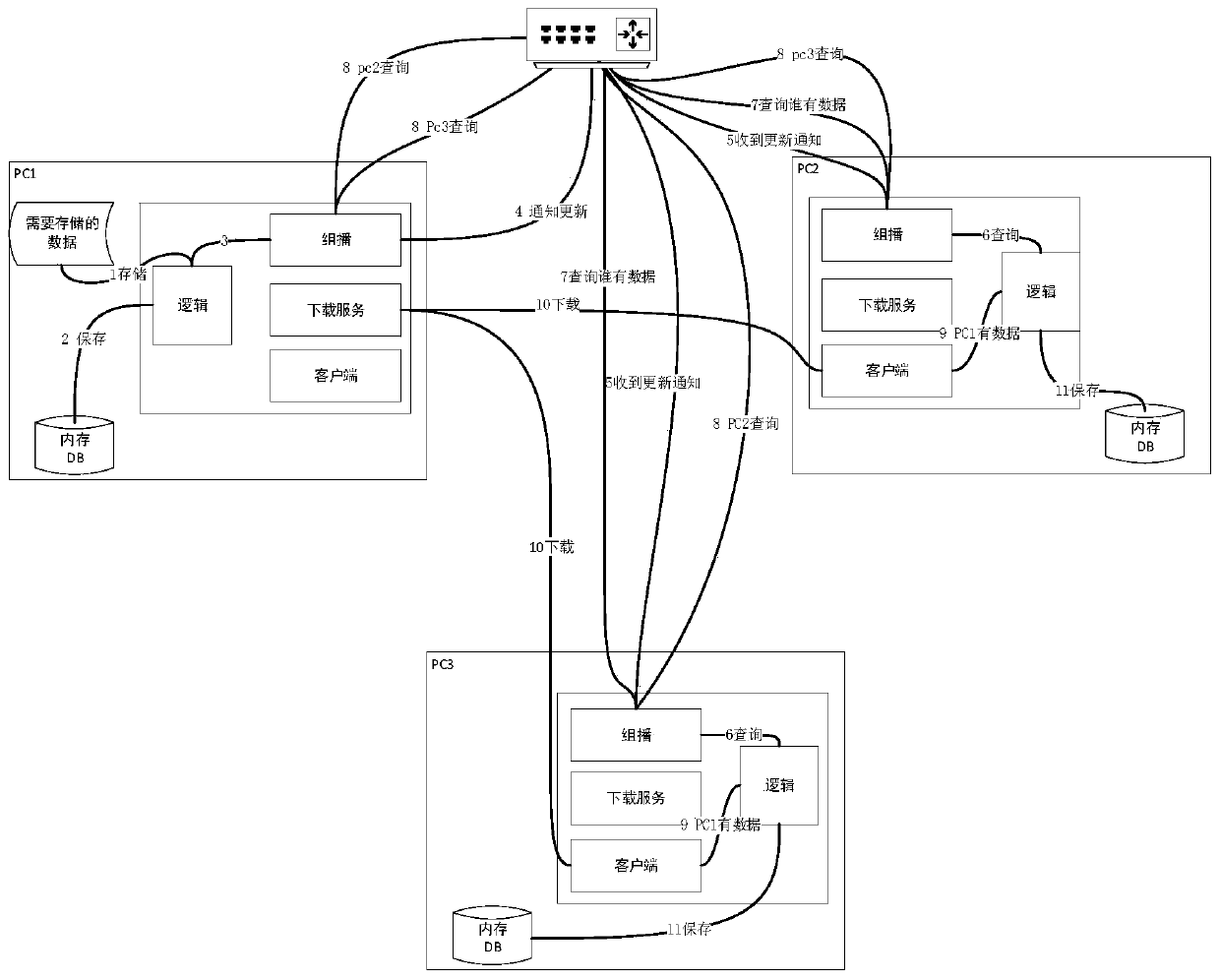

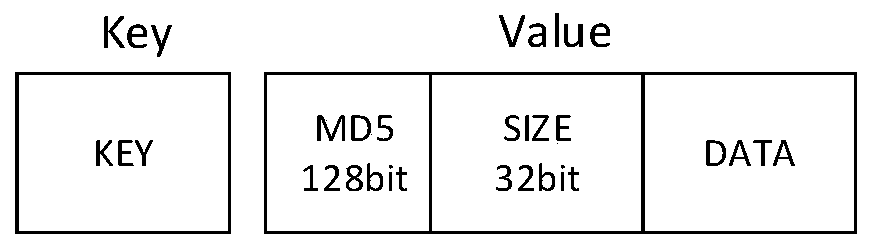

[0031] As shown in Figure 1, the local area network in this embodiment contains three computers, PC1, PC2, and PC3, although the present invention does not limit the number of computers in the local area network. PC1, PC2, and PC3 form a local area network through a router and can communicate by multicast. Each computer is a peer node in the network, and each node mainly comprises a multicast module, a download service module, a client module, and a logic processing module. The multicast module sends query requests, sends operation lock requests, and receives update requests; the download service module provides a data download server; the client module downloads data from other machines; and the logic processing module handles logic such as generating the MD5 value of the data, data storage, query, update, and maintenance of the operation queue.

[0032] The data caching method in Figure 1 is as follows:
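The role of the logic processing module can be illustrated with a minimal in-memory sketch. The names `CacheEntry`, `LogicModule`, `put`, `query`, and `needs_update` are hypothetical and do not come from the patent, and the rule of comparing MD5 values to decide whether an update is needed is an assumption rather than something the text states.

```python
import hashlib
from dataclasses import dataclass


@dataclass
class CacheEntry:
    """One cached item: the key, its payload, and the metadata derived from it."""
    key: str
    data: bytes
    md5: str
    size: int


class LogicModule:
    """Hypothetical in-memory stand-in for a node's logic processing module."""

    def __init__(self):
        self._store = {}  # maps key -> CacheEntry

    def put(self, key: str, data: bytes) -> CacheEntry:
        # Generate the MD5 value of the data and store it alongside the payload.
        entry = CacheEntry(key, data, hashlib.md5(data).hexdigest(), len(data))
        self._store[key] = entry
        return entry

    def query(self, key: str):
        # Return the cached entry, or None if this node does not hold the key.
        return self._store.get(key)

    def needs_update(self, key: str, remote_md5: str) -> bool:
        # Assumption: a node is stale when it lacks the key or its MD5 differs.
        local = self._store.get(key)
        return local is None or local.md5 != remote_md5
```

A node that stores `b"hello"` under `Key1` would report no update needed when offered the same MD5, and would report an update needed for any key it does not yet hold.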

[0033] 1) In this embodiment, there are three computers PC1, ...

Embodiment 2

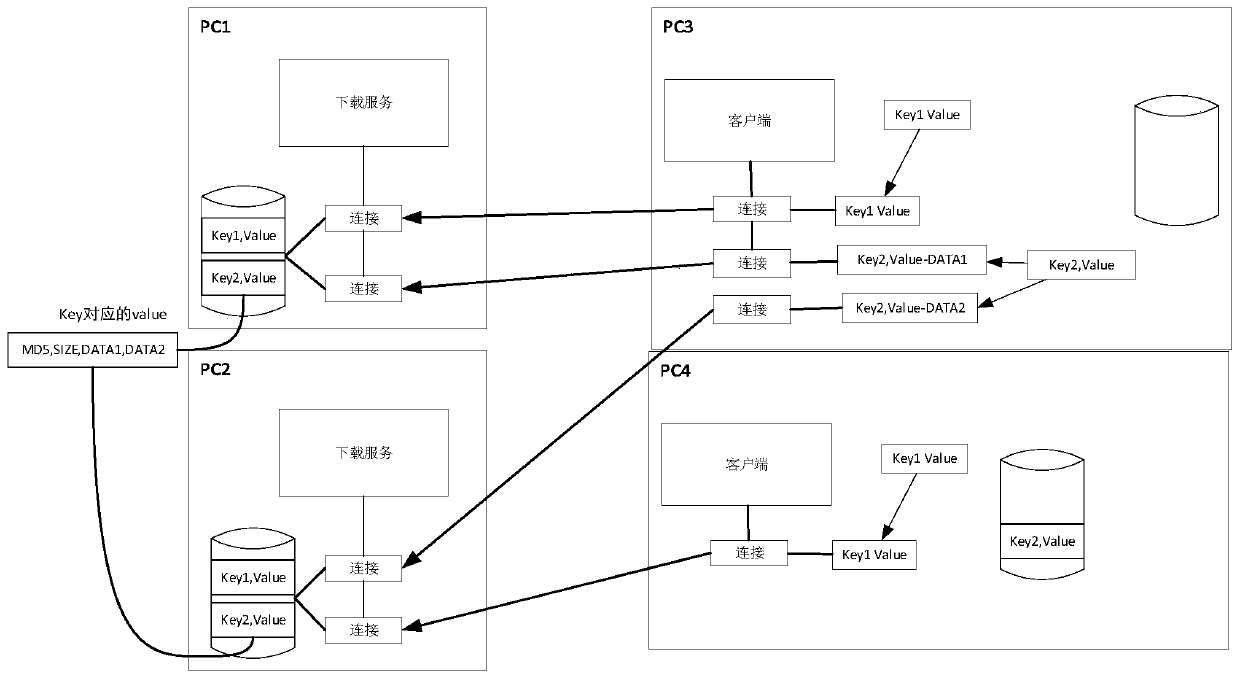

[0042] Building on the data caching method of Embodiment 1, the data is sharded to improve the update speed, as shown in Figure 3.

[0043] First, in Embodiment 2, PC1 and PC2 are the source node computers, and the data to be stored is fragmented according to a certain size. In this embodiment there are two data items: Key1 is not fragmented, and Key2 is split into two fragments (DATA1, DATA2), although the present invention does not limit the number of fragments.
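Fragmenting "according to a certain size" can be sketched as fixed-size slicing. The function name `split_into_fragments` and the choice of a byte count as the size unit are assumptions for illustration; the patent does not specify either.

```python
from typing import List


def split_into_fragments(data: bytes, fragment_size: int) -> List[bytes]:
    """Split data into consecutive fragments of at most fragment_size bytes.

    Data that fits in one fragment is returned as a single-element list,
    which corresponds to the "not fragmented" case.
    """
    if fragment_size <= 0:
        raise ValueError("fragment_size must be positive")
    if not data:
        return [b""]
    return [data[i:i + fragment_size] for i in range(0, len(data), fragment_size)]
```

With a fragment size of 8 bytes, a 3-byte value stays whole, while a 10-byte value is split into an 8-byte fragment and a 2-byte fragment, mirroring how Key1 stays whole and Key2 becomes DATA1 and DATA2.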

[0044] Fragmentation is then performed according to the data size: Key1 does not need to be fragmented, and Key2 is divided into two fragments. Key1 is updated on PC3 as follows:

[0045] 1. PC3 broadcasts a data query, and both PC1 and PC2 hold the data;

[0046] 2. The returned query packet contains the data Key1, MD5, and SIZE. From SIZE, PC3 knows that Key1 has only one data fragment;

[0047] 3. PC3 receives the response from PC1 first and connects successfully;

...
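The visible steps above (deriving the fragment count from SIZE, then using the first responder that accepts a connection) might be sketched as follows. This is an illustration under stated assumptions, not the patent's procedure: SIZE is assumed to be a byte count, `FRAGMENT_SIZE` is an assumed value shared by all nodes, and `QueryResponse`, `fragment_count`, `choose_source`, and the `try_connect` callback are hypothetical names.

```python
import math
from dataclasses import dataclass
from typing import Callable, Iterable, Optional

FRAGMENT_SIZE = 1024  # assumed fragment size in bytes, known to every node


@dataclass(frozen=True)
class QueryResponse:
    """Hypothetical shape of a query reply: responding node, key, MD5, and SIZE."""
    node: str
    key: str
    md5: str
    size: int


def fragment_count(size: int, fragment_size: int = FRAGMENT_SIZE) -> int:
    # Data that fits in one fragment (including empty data) counts as one fragment.
    return max(1, math.ceil(size / fragment_size))


def choose_source(
    responses: Iterable[QueryResponse],
    try_connect: Callable[[str], bool],
) -> Optional[QueryResponse]:
    # Walk the responses in arrival order and keep the first node that connects.
    for response in responses:
        if try_connect(response.node):
            return response
    return None
```

If PC1 answers before PC2 and accepts the connection, `choose_source` returns the PC1 response; if PC1 refuses, the requester falls through to PC2.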

Embodiment 3

[0057] To reduce the additional performance overhead on the source node computer, the download service provided by the source node limits the number of download connections it accepts.

[0058] Building on the data caching method of Embodiment 1 or Embodiment 2, the embodiment shown in Figure 4 uses a fission method to propagate updated data across the entire local area network more quickly, with the number of download connections per source node limited to 2. This embodiment has nine computers: PC1, PC2, PC3, PC4, PC5, PC6, PC7, PC8, and PC9. In the first update, PC1 is the source node computer. Using the method of Embodiment 1 or Embodiment 2, PC1 updates the data to be stored to PC2 and PC3. Once their downloads complete successfully, PC2 and PC3 also become source node computers. In the second update, PC1, PC2, and PC3 act as source node computers simultaneously and update the data to be stored to PC4, PC5, PC...
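The growth in the number of source nodes under the fission method can be checked with a short calculation. The sketch below assumes an idealized schedule in which every current source serves its full connection limit in each round; the function name `fission_rounds` is hypothetical, and the sketch tracks only how many nodes hold the data, not which node downloads from which.

```python
from typing import List


def fission_rounds(
    total_nodes: int,
    max_downloads_per_source: int,
    initial_sources: int = 1,
) -> List[int]:
    """Return how many nodes hold the data before the first round and after each round."""
    if initial_sources < 1 or total_nodes < initial_sources:
        raise ValueError("need 1 <= initial_sources <= total_nodes")
    if max_downloads_per_source < 1:
        raise ValueError("max_downloads_per_source must be at least 1")
    have = initial_sources
    counts = [have]
    while have < total_nodes:
        # Idealized: each current source serves its full quota of new nodes.
        have = min(total_nodes, have + have * max_downloads_per_source)
        counts.append(have)
    return counts


print(fission_rounds(9, 2))  # [1, 3, 9]
```

Under these assumptions, nine computers with a limit of 2 connections per source are all updated after two rounds, whereas a single source serving two nodes per round without fission would need four rounds to reach the other eight.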
