Minimizing memory reads and increasing performance by leveraging aligned blob data in a processing unit of a neural network environment

A neural network and memory technology, applicable to architectures with multiple processing units, biological neural network models, general-purpose stored-program computers, and similar systems. It reduces the number of memory operations, which lowers power consumption and memory usage and improves human-computer interaction.


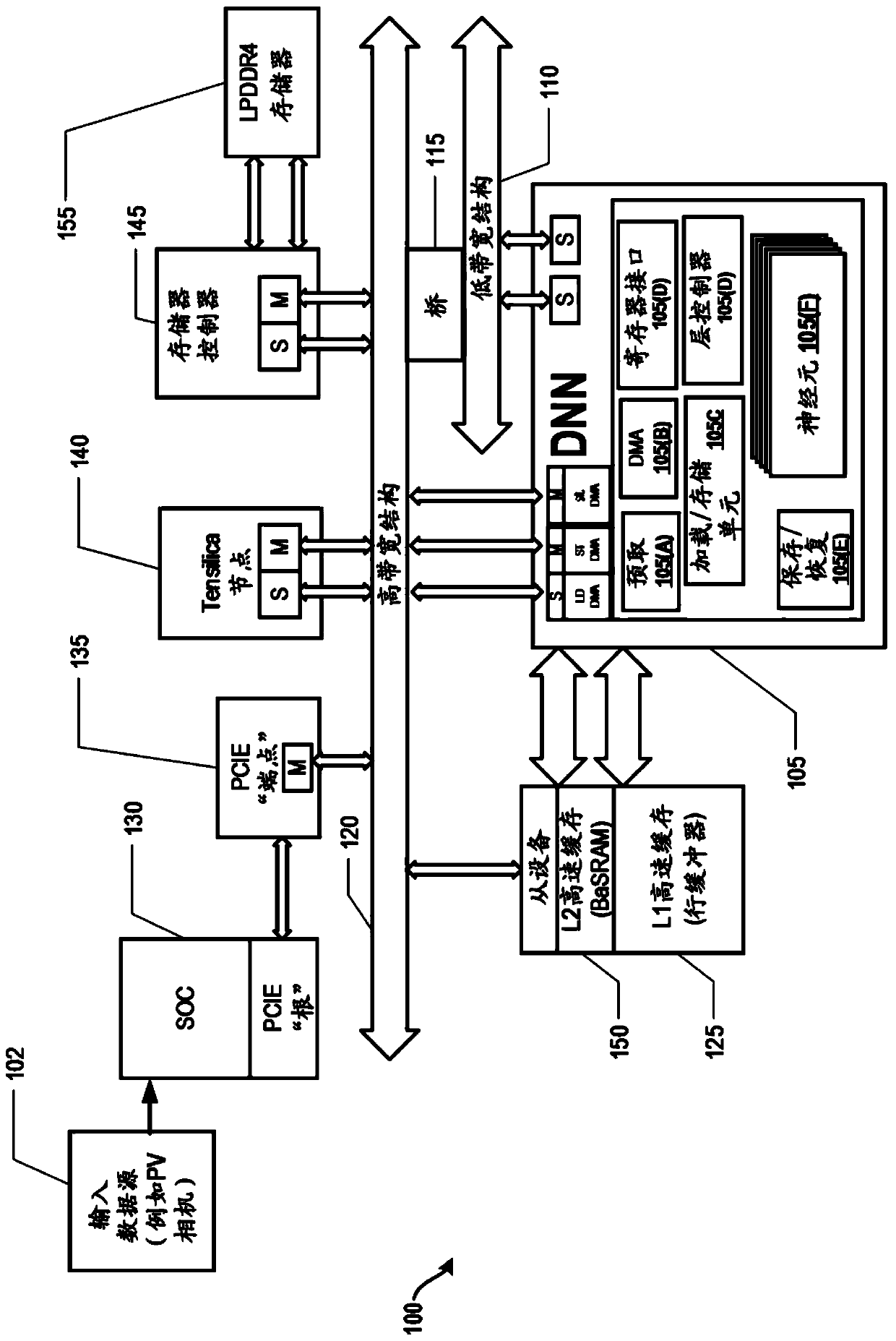

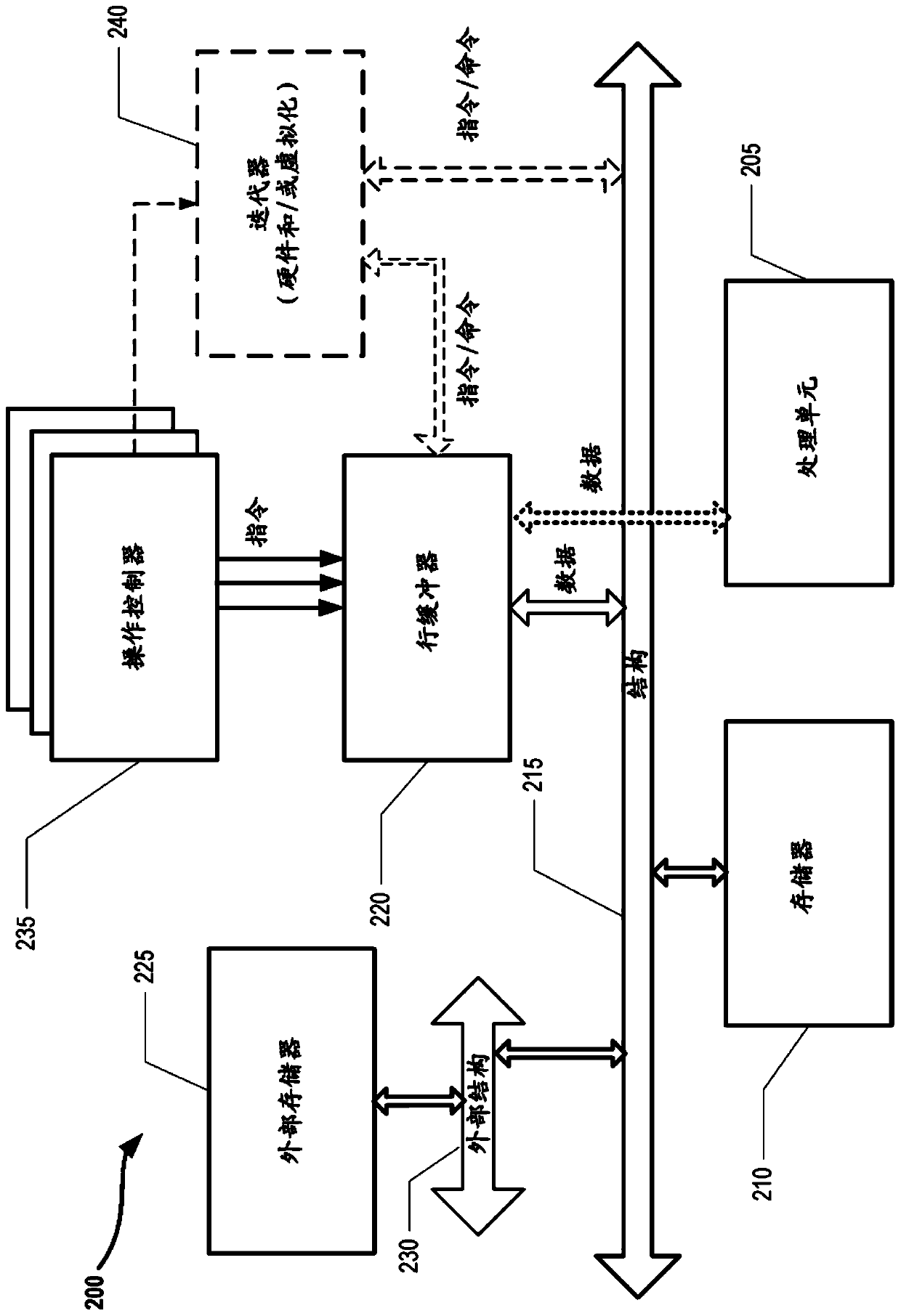

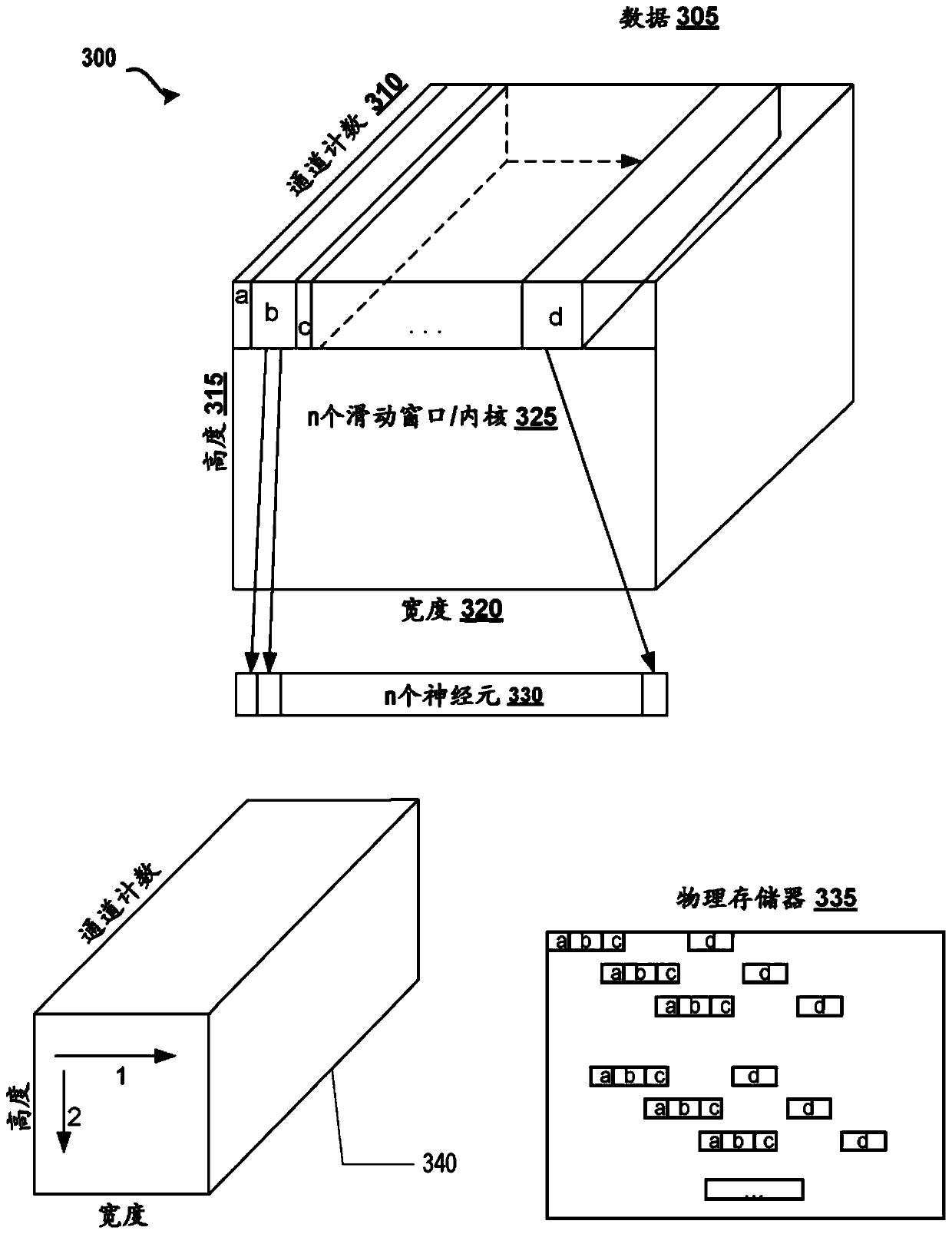

[0024] The techniques described in the following detailed description provide virtualization of one or more hardware iterators for use in an exemplary neural network (NN) and/or deep neural network (DNN) environment, where data is physically aligned in memory components. Aligning the data in this way allows it to be manipulated in a manner that improves overall performance and optimizes memory management. It should be understood that the systems and methods described herein are applicable to NNs and/or DNNs; thus, any reference to an NN also refers to a DNN, and vice versa.
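To make the claimed benefit of alignment concrete, the following Python sketch counts how many fixed-width memory lines must be read to fetch every row of a 2-D blob. The 16-byte line width, the names `align_up`, `lines_touched`, and `blob_reads`, and the cost model of one read per touched line are illustrative assumptions, not details taken from the patent text.

```python
"""Count memory-line reads for a 2-D blob, aligned vs. unaligned.

Illustrative only: assumes memory is read in fixed-width lines of
LINE_BYTES bytes and that every touched line costs one read.
"""

LINE_BYTES = 16  # hypothetical memory line width in bytes


def align_up(value: int, alignment: int = LINE_BYTES) -> int:
    """Round value up to the next multiple of alignment."""
    return -(-value // alignment) * alignment


def lines_touched(start: int, length: int, line_bytes: int = LINE_BYTES) -> int:
    """Number of line reads needed to fetch bytes [start, start + length)."""
    if length <= 0:
        return 0
    first_line = start // line_bytes
    last_line = (start + length - 1) // line_bytes
    return last_line - first_line + 1


def blob_reads(base: int, rows: int, row_bytes: int, pitch: int) -> int:
    """Total line reads to fetch every row of a blob stored with a given row pitch."""
    return sum(lines_touched(base + r * pitch, row_bytes) for r in range(rows))


if __name__ == "__main__":
    rows, row_bytes = 4, 32
    # Unaligned: base offset 3, rows packed back to back (pitch == row_bytes).
    unaligned = blob_reads(base=3, rows=rows, row_bytes=row_bytes, pitch=row_bytes)
    # Aligned: base rounded up to a line boundary, pitch padded to a line multiple.
    aligned = blob_reads(base=align_up(3), rows=rows, row_bytes=row_bytes,
                         pitch=align_up(row_bytes))
    print(unaligned, aligned)  # prints: 12 8
```

With a 3-byte base offset, each 32-byte row straddles three lines (12 reads in total); moving the base to the 16-byte boundary brings every row down to two lines (8 reads). Padding the row pitch with `align_up` keeps later rows aligned as well when the row size is not already a multiple of the line width.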

[0025] In an exemplary implementation, a DNN environment may include one or more processing blocks (e.g., computer processing units, or CPUs), memory controllers, line buffers, high-bandwidth structures (e.g., local or external structures, such as a data bus that passes data and/or data elements between an exemplary DNN module and the cooperating components of the DNN environment), operating control...
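The components listed above can be modeled in software to show how a memory controller and a line buffer interact with a processing unit's access pattern. In this hypothetical sketch, `MemoryController`, `stream_row`, the 16-byte line width, the two-line buffer capacity, and least-recently-used eviction are all assumptions for illustration; the source does not specify how line buffers are sized or managed.

```python
"""Hypothetical model: memory controller + line buffer serving a processing unit.

Assumptions (not from the source): memory is read in LINE_BYTES-wide lines,
the line buffer holds BUFFER_LINES lines, and it evicts the least recently
used line when full.
"""
from collections import OrderedDict
from typing import List

LINE_BYTES = 16   # hypothetical memory line width in bytes
BUFFER_LINES = 2  # hypothetical line-buffer capacity in lines


class MemoryController:
    """Serves byte reads through an LRU line buffer and counts memory reads."""

    def __init__(self, memory: bytes, buffer_lines: int = BUFFER_LINES) -> None:
        self.memory = memory
        self.buffer_lines = buffer_lines
        self.line_buffer: OrderedDict = OrderedDict()  # line address -> line bytes
        self.memory_reads = 0

    def _line(self, line_addr: int) -> bytes:
        """Return one line, fetching it from memory only on a buffer miss."""
        if line_addr in self.line_buffer:
            self.line_buffer.move_to_end(line_addr)  # hit: mark most recently used
            return self.line_buffer[line_addr]
        self.memory_reads += 1  # miss: one full-line read from memory
        line = self.memory[line_addr:line_addr + LINE_BYTES]
        self.line_buffer[line_addr] = line
        if len(self.line_buffer) > self.buffer_lines:
            self.line_buffer.popitem(last=False)  # evict least recently used line
        return line

    def read(self, addr: int, length: int) -> bytes:
        """Return memory[addr:addr + length], splitting reads at line boundaries."""
        out = bytearray()
        while length > 0:
            line_addr = addr - addr % LINE_BYTES
            offset = addr - line_addr
            chunk = self._line(line_addr)[offset:offset + length]
            if not chunk:
                raise IndexError("read past end of memory")
            out += chunk
            addr += len(chunk)
            length -= len(chunk)
        return bytes(out)


def stream_row(ctrl: MemoryController, start: int, row_bytes: int,
               elem_bytes: int = 4) -> List[bytes]:
    """Read one blob row element by element, as a processing unit might."""
    return [ctrl.read(start + i, elem_bytes) for i in range(0, row_bytes, elem_bytes)]


if __name__ == "__main__":
    memory = bytes(range(256))
    aligned = MemoryController(memory)
    stream_row(aligned, start=16, row_bytes=32)
    unaligned = MemoryController(memory)
    stream_row(unaligned, start=19, row_bytes=32)
    print(aligned.memory_reads, unaligned.memory_reads)  # prints: 2 3
```

Streaming a 32-byte row in 4-byte elements from the line-aligned address 16 costs two memory reads, while the same row starting at address 19 costs three: the elements at bytes 31 to 34 and 47 to 50 each straddle a line boundary, and the row spills into a third line. The line buffer absorbs the repeated accesses within each line, so only line misses reach memory.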
