High-performance computing method for the text topic model LDA based on CPU-GPU collaborative parallelism

A technique combining high-performance computing and topic modeling, applicable to computing, unstructured text data retrieval, text database clustering/classification, and similar fields. It addresses problems such as low computing efficiency and restriction to a single computing platform.

Embodiment 1

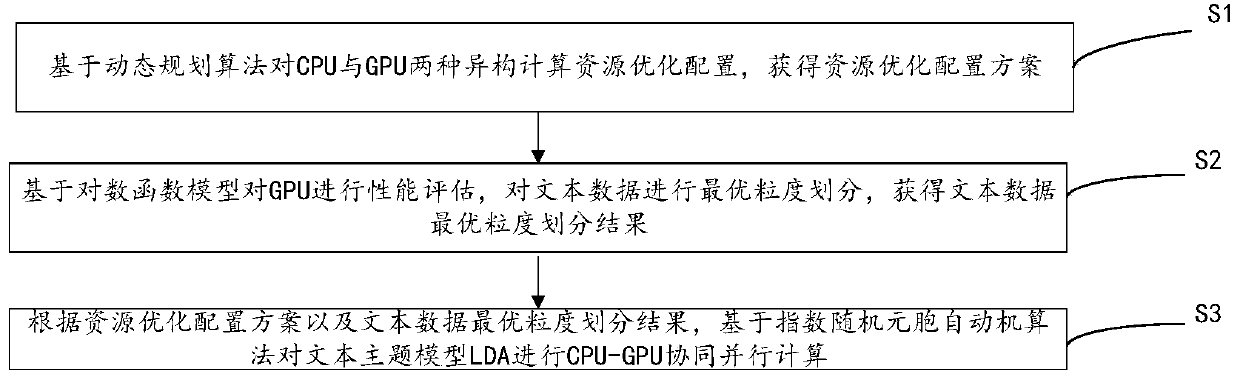

[0064] This embodiment provides a high-performance computing method for the text topic model LDA based on CPU-GPU collaborative parallelism. Referring to Figure 1, the method includes:

[0065] Step S1: Using a dynamic programming algorithm, optimize the allocation of the two heterogeneous computing resources, CPU and GPU, to obtain an optimal resource allocation plan.

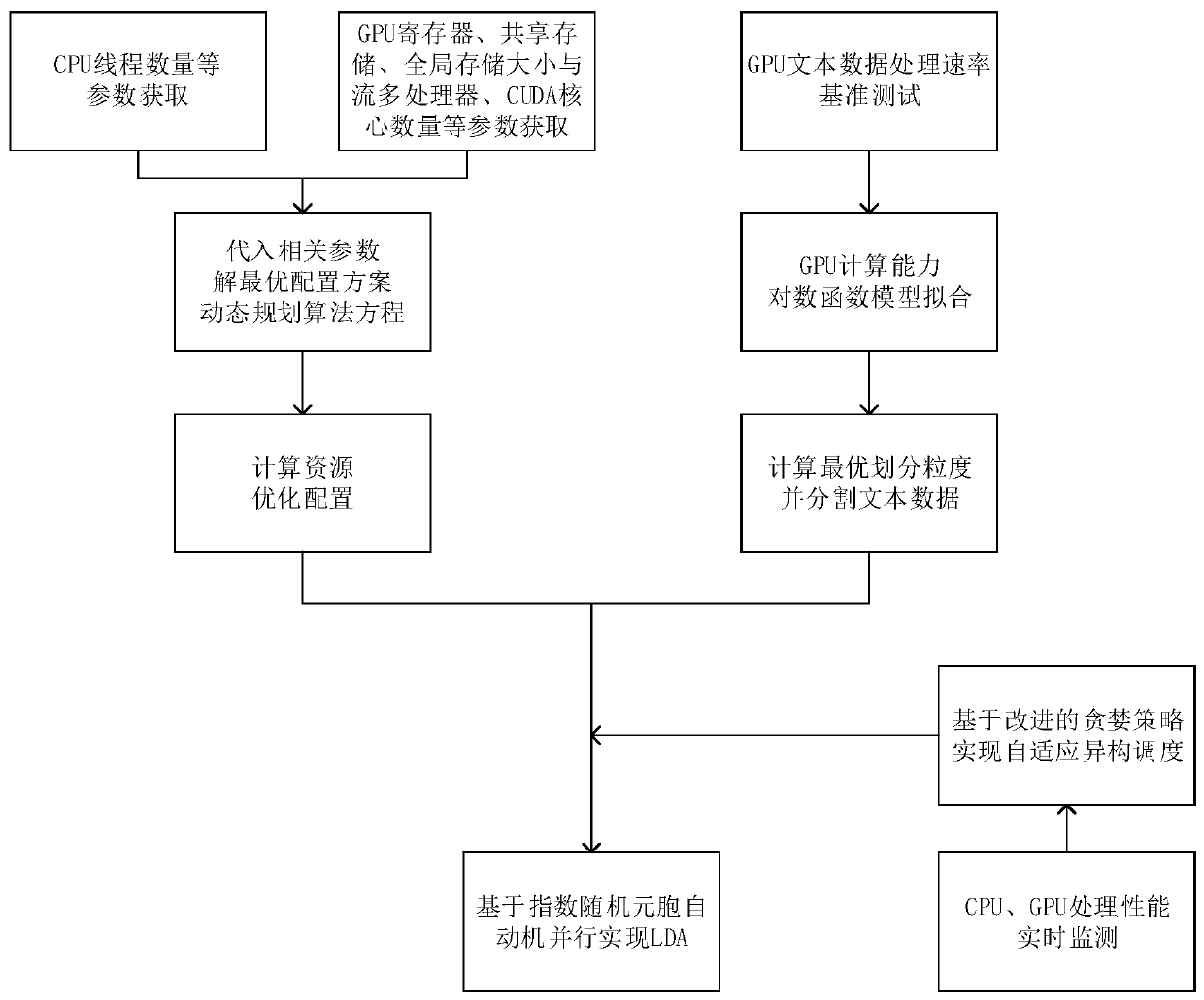

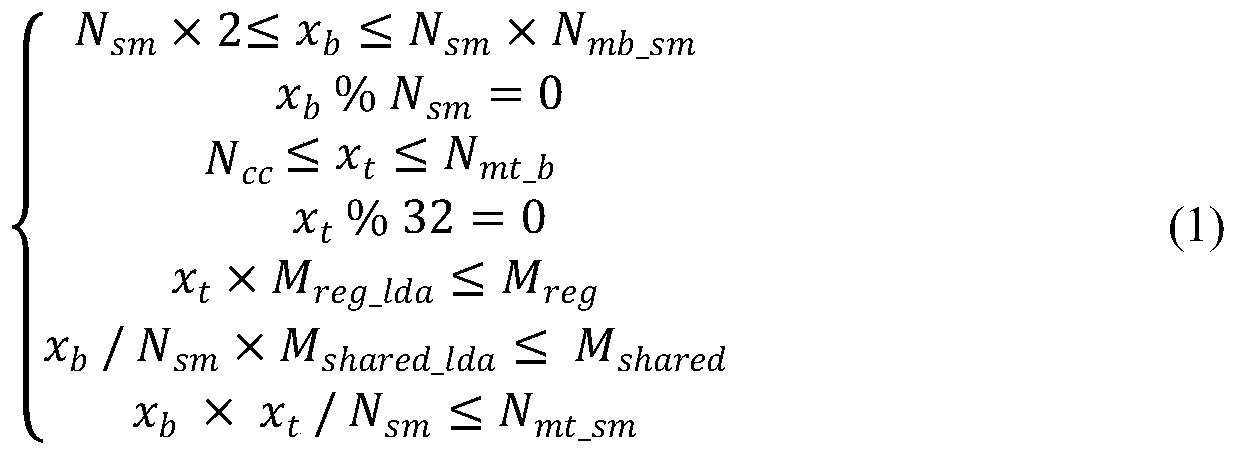

[0066] Specifically, in a CPU-GPU heterogeneous system, reasonable resource allocation is crucial to using the system's computing power efficiently. When the present invention uses a dynamic programming algorithm for resource allocation, on the CPU side, computation threads and task-allocation threads are allocated according to the number of threads the CPU supports; on the GPU side, GPU hardware resource constraints, the algorithm's storage requirements, and general GPU programming optimization rules are used to transform the problem of optimally allocating GPU computing resources into a problem of...
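To illustrate the general idea of allocating resources by dynamic programming, the following is a minimal sketch of a textbook budgeted-allocation problem. It is not the formulation used in the invention, which is not given in the text above. The function name `allocate`, the `gains` table, and its numeric values are hypothetical: `gains[i][k]` is assumed to be an estimated throughput when consumer `i` (for example, a CPU thread role or a GPU kernel) receives `k` units of a shared resource.

```python
from typing import List, Tuple


def allocate(budget: int, gains: List[List[float]]) -> Tuple[float, List[int]]:
    """Split at most `budget` resource units among consumers to maximize total gain.

    gains[i][k] is a HYPOTHETICAL estimated throughput of consumer i when it
    receives k units (k = 0 .. len(gains[i]) - 1). Illustrative only.
    """
    n = len(gains)
    neg_inf = float("-inf")
    # best[i][b]: best total gain using the first i consumers and at most b units.
    best = [[0.0] * (budget + 1)]
    best += [[neg_inf] * (budget + 1) for _ in range(n)]
    # choice[i][b]: units given to consumer i in the optimum for budget b.
    choice = [[0] * (budget + 1) for _ in range(n)]

    for i in range(1, n + 1):
        max_units = len(gains[i - 1]) - 1
        for b in range(budget + 1):
            for k in range(min(b, max_units) + 1):
                candidate = best[i - 1][b - k] + gains[i - 1][k]
                if candidate > best[i][b]:
                    best[i][b] = candidate
                    choice[i - 1][b] = k

    # Walk the choice table backwards to recover the per-consumer plan.
    plan = [0] * n
    remaining = budget
    for i in range(n - 1, -1, -1):
        plan[i] = choice[i][remaining]
        remaining -= plan[i]
    return best[n][budget], plan


if __name__ == "__main__":
    # Two consumers sharing 3 units; the gain values are made up for illustration.
    total, plan = allocate(3, [[0, 3, 5, 6], [0, 4, 7, 8]])
    print(total, plan)
```

In this sketch the table is filled one consumer at a time, so the cost grows with the number of consumers times the square of the budget. A real CPU-GPU allocator would need measured or modeled gains and additional hardware constraints that the sketch does not attempt to capture.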
