Computing platform implementation method and system for neural network

A computing platform and neural network technology, applied in the field of deep learning, that addresses problems such as short execution time, with the effects of eliminating bottlenecks, avoiding bandwidth occupation, and improving computing efficiency.

Embodiment Construction

[0038] Preferred embodiments of the present disclosure will be described in more detail below with reference to the accompanying drawings. Although preferred embodiments of the present disclosure are shown in the drawings, it should be understood that the present disclosure may be embodied in various forms and should not be limited to the embodiments set forth herein. Rather, these embodiments are provided so that this disclosure will be thorough and complete, and will fully convey the scope of the disclosure to those skilled in the art.

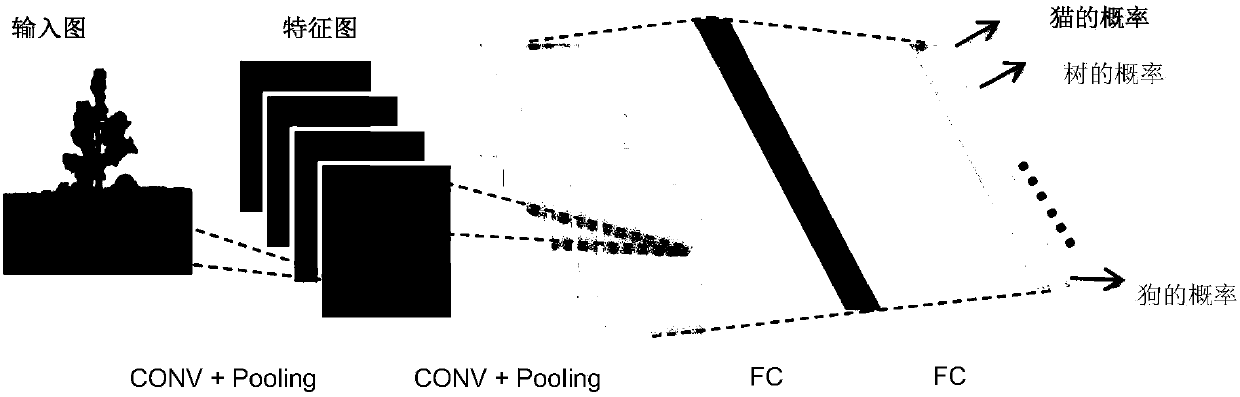

[0039] Basic concepts of neural network processors

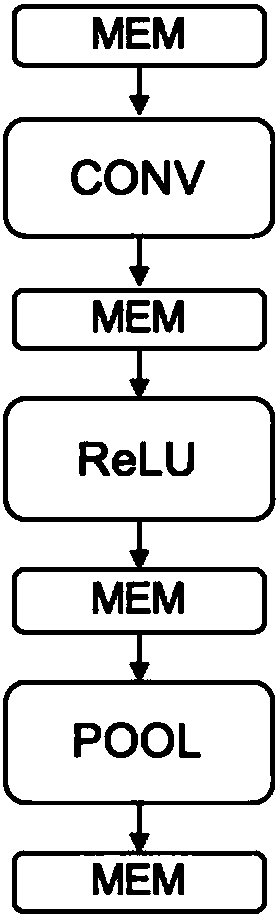

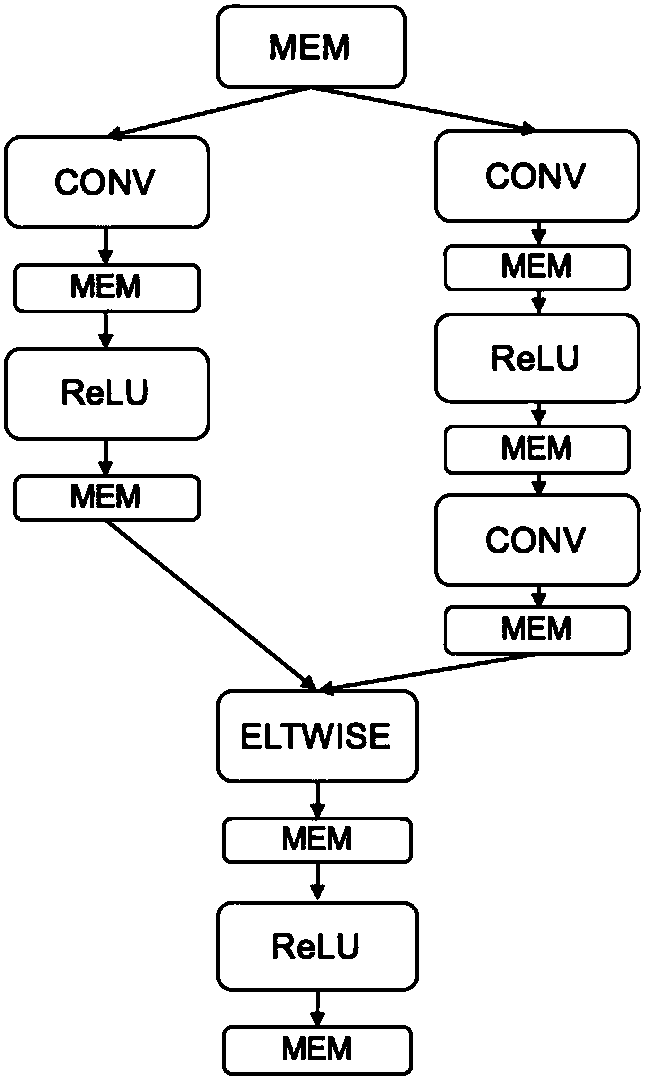

[0040] With the continuous development of artificial intelligence, machine learning, and neural network algorithms in recent years, convolutional neural networks have achieved superhuman performance in image classification, recognition, detection, and tracking. Due to the huge parameter scale and computational load of convolutional neural networks, as well as the requirements for hard...
