Deep neural network training method and device, electronic equipment and storage medium

This technology relates to deep neural network training methods and devices, electronic equipment, and storage media. It addresses the problem of limited improvement in neural network classification or recognition results, with the effects of improving importance and accuracy and avoiding misclassification.


Examples

Embodiment 1

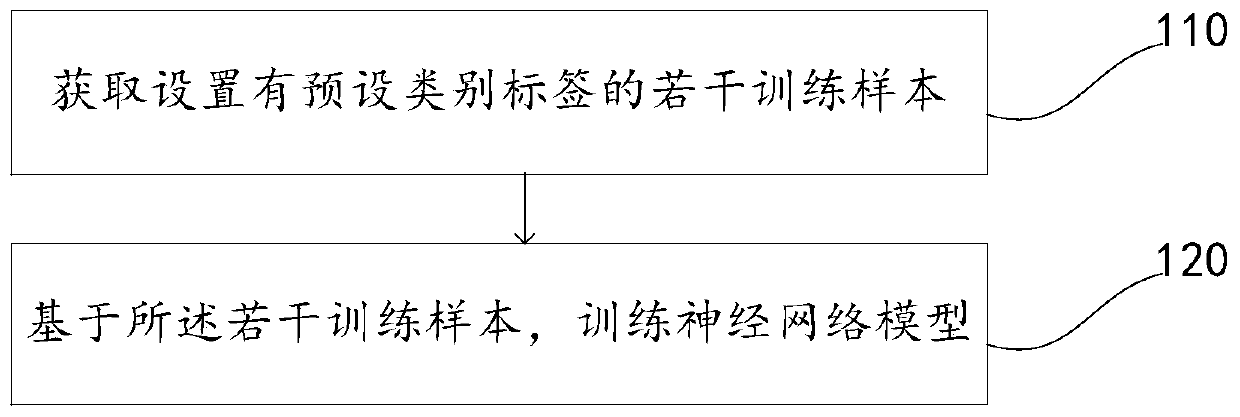

[0024] As shown in Figure 1, the deep neural network training method disclosed in this embodiment includes step 110 and step 120.

[0025] Step 110, acquiring several training samples with preset class labels.

[0026] Before training the neural network, it is first necessary to obtain several training samples with preset class labels.

[0027] The form of the training samples depends on the specific application scenario. For example, in work-clothes recognition, the training samples are images (photos) of work clothes; in speech recognition, each training sample is a piece of audio.

[0028] The category labels of the training samples depend on the output of the specific recognition task. Taking a neural network trained for work-clothes recognition as an example, depending on the output of the recognition task, the categories of the training samples can include category...
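The pairing of samples with preset class labels described above can be sketched as follows. This is a minimal illustration under assumptions not stated in the source: the `TrainingSample` class, the `Modality` values, and the `make_samples` helper are hypothetical, and because the source's actual category list is truncated, any category names used with it are placeholders.

```python
from dataclasses import dataclass
from enum import Enum


class Modality(Enum):
    """Form of a training sample; it depends on the application scenario."""
    IMAGE = "image"  # e.g. photos for work-clothes recognition
    AUDIO = "audio"  # e.g. an audio clip for speech recognition
    TEXT = "text"


@dataclass(frozen=True)
class TrainingSample:
    """A training sample paired with its preset class label (hypothetical layout)."""
    source: str        # path or URI of the raw sample
    modality: Modality
    label: int         # index into the task-specific category list


def make_samples(items, categories, modality):
    """Turn (source, category_name) pairs into labeled samples.

    `categories` is the task-specific label set; its order fixes the
    integer class indices assigned to each sample.
    """
    index = {name: i for i, name in enumerate(categories)}
    return [TrainingSample(src, modality, index[name]) for src, name in items]
```

For example, `make_samples([("a.jpg", "x"), ("b.jpg", "y")], ["x", "y"], Modality.IMAGE)` yields two image samples labeled 0 and 1. Keeping the category list separate from the samples means the same loader can serve recognition tasks whose outputs differ.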

Embodiment 2

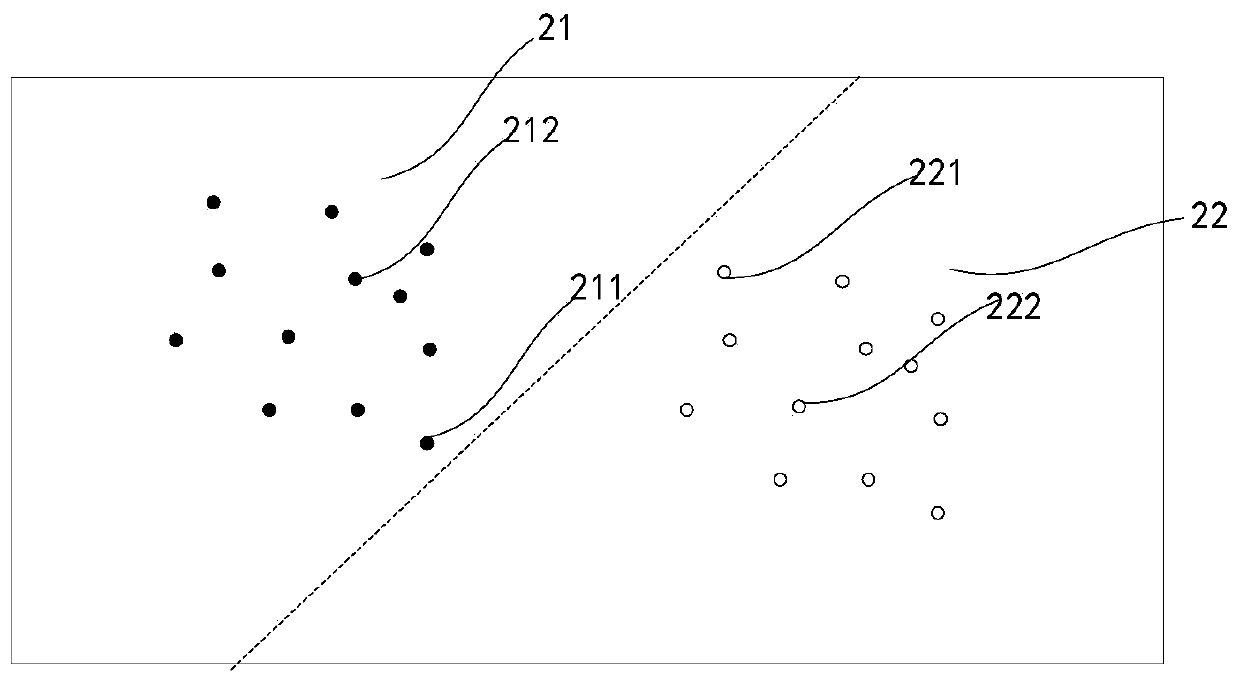

[0045] Building on Embodiment 1, this embodiment discloses an optimized scheme for the deep neural network training method.

[0046] In a specific implementation, after several training samples with preset category labels are obtained, a neural network is first constructed. As before, this embodiment uses ResNet50 (a residual network) as the base network, and the neural network includes multiple feature extraction layers. In the forward propagation stage, the forward function of each feature extraction layer (such as a fully connected layer) is called in turn to compute the output layer by layer. The output of the last layer is compared with the target via the objective function to obtain the loss, and the error update values are calculated. Backpropagation then carries the error layer by layer back to the first layer, and all values are updated together at the end of backpropagation. The last feature extraction layer uses the extracted fea...
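The forward/backward cycle described above can be illustrated with a toy network. This is a minimal pure-Python sketch, not ResNet50: it assumes two small fully connected layers, sigmoid activations, and a squared-error loss, all chosen only for illustration. As in the text, each layer's forward function is called in turn, the last output is compared with the target, the error is propagated back to the first layer, and all parameters are updated together only after backpropagation finishes.

```python
import math
import random


class Dense:
    """Fully connected layer y = W x + b (weights stored as nested lists)."""

    def __init__(self, n_in, n_out, rng: random.Random):
        self.W = [[rng.uniform(-0.5, 0.5) for _ in range(n_in)] for _ in range(n_out)]
        self.b = [0.0] * n_out

    def forward(self, x):
        self.x = x  # cache the input for the backward pass
        return [sum(w * xi for w, xi in zip(row, x)) + bj
                for row, bj in zip(self.W, self.b)]

    def backward(self, grad_out):
        # Parameter gradients are stored until the shared update step.
        self.dW = [[g * xi for xi in self.x] for g in grad_out]
        self.db = list(grad_out)
        # Gradient w.r.t. the input, handed to the previous layer.
        return [sum(self.W[j][i] * grad_out[j] for j in range(len(grad_out)))
                for i in range(len(self.x))]

    def update(self, lr):
        for j, row in enumerate(self.W):
            for i in range(len(row)):
                row[i] -= lr * self.dW[j][i]
            self.b[j] -= lr * self.db[j]


class Sigmoid:
    """Element-wise sigmoid activation; has no trainable parameters."""

    def forward(self, x):
        self.y = [1.0 / (1.0 + math.exp(-v)) for v in x]
        return self.y

    def backward(self, grad_out):
        return [g * y * (1.0 - y) for g, y in zip(grad_out, self.y)]

    def update(self, lr):
        pass


def train_step(layers, x, target, lr=0.5):
    # Forward propagation: call each layer's forward function in turn.
    out = x
    for layer in layers:
        out = layer.forward(out)
    # Compare the last layer's output with the target to obtain the loss.
    loss = 0.5 * sum((o - t) ** 2 for o, t in zip(out, target))
    grad = [o - t for o, t in zip(out, target)]
    # Backpropagation: carry the error back, layer by layer, to the first layer.
    for layer in reversed(layers):
        grad = layer.backward(grad)
    # Update all parameters together once backpropagation is complete.
    for layer in layers:
        layer.update(lr)
    return loss
```

Usage: build `layers = [Dense(2, 3, rng), Sigmoid(), Dense(3, 1, rng), Sigmoid()]` with `rng = random.Random(0)` and call `train_step` repeatedly; the loss on a fixed sample should shrink. Deferring every `update` until after the backward loop ensures that each layer's input gradient is computed with the weights that were used in the forward pass.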

Embodiment 3

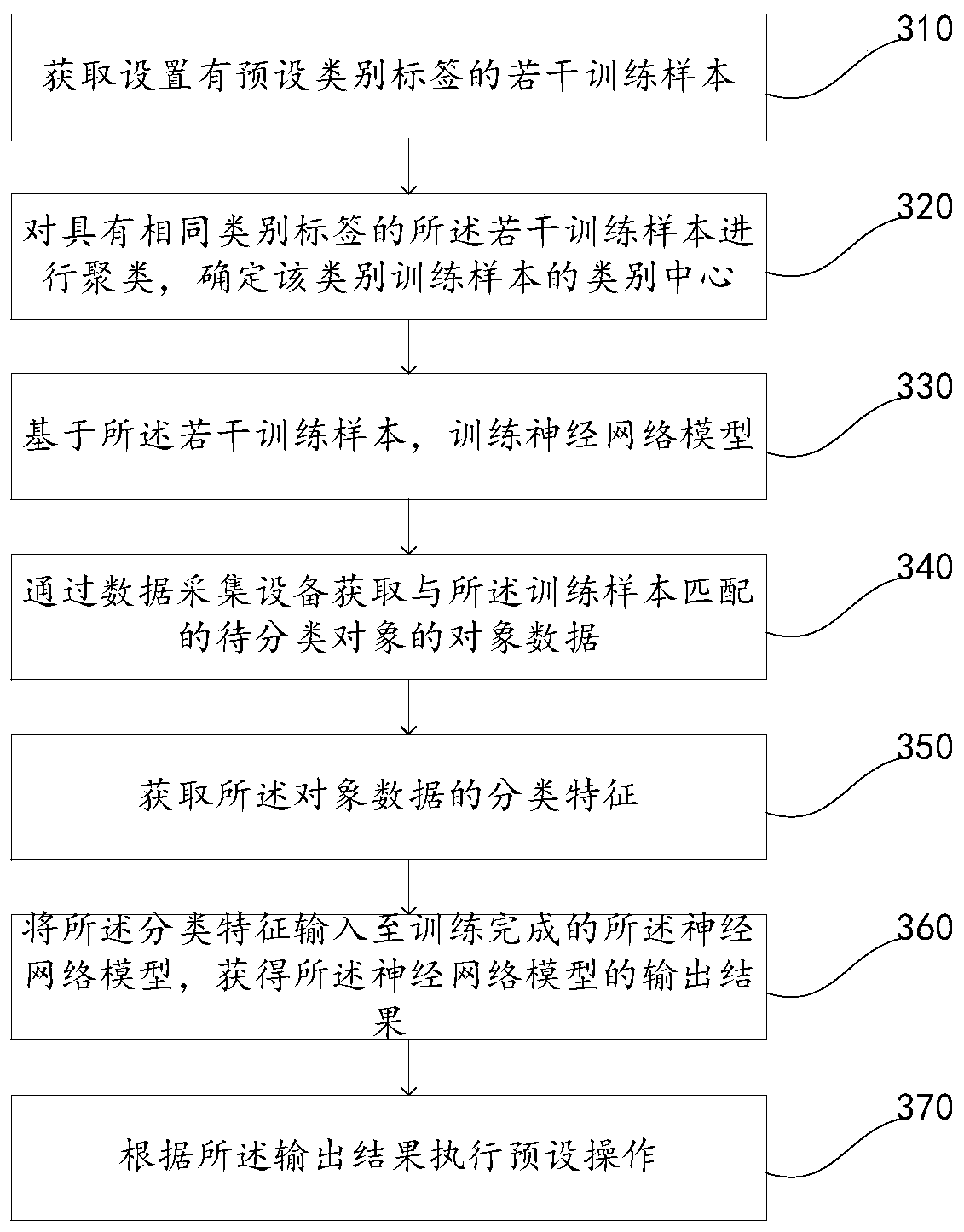

[0062] An embodiment of the present application also discloses a deep neural network training method applied to classification. As shown in Figure 3, the method includes Step 310 to Step 370.

[0063] Step 310, acquiring several training samples with preset class labels.

[0064] In a specific implementation of the present application, each training sample is any one of the following: an image, a text, or a speech sample. For each type of object to be classified, training samples of that object type must be obtained for the neural network model. This embodiment takes training a neural network model for work-clothes recognition as an example. First, work-clothes images carrying different platform tags are obtained, such as work-clothes images with the Meituan Waimai platform tag, work-clothes images with the Ele.me platform tag, and work-clothes images with the Baidu Waimai platfo...
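One way to organize the labeled image collection of Step 310 is sketched below. It assumes a hypothetical directory layout in which each platform's work-clothes images sit in a subfolder named after the platform tag; the folder names, accepted file extensions, and the `collect_samples` helper are illustrative, not from the source. The tag list is limited to the three platforms legible in the text.

```python
from pathlib import Path

# Hypothetical folder names for the platform tags named in the text.
PLATFORM_TAGS = ["meituan_waimai", "ele_me", "baidu_waimai"]
IMAGE_SUFFIXES = {".jpg", ".jpeg", ".png"}


def collect_samples(root, tags=PLATFORM_TAGS):
    """Return (image_path, class_index) pairs, one class per platform tag.

    Assumes images live in root/<tag>/; the class index is the tag's
    position in `tags`.
    """
    samples = []
    for label, tag in enumerate(tags):
        folder = Path(root) / tag
        if not folder.is_dir():
            continue  # skip tags for which no images have been collected
        for path in sorted(folder.iterdir()):
            if path.suffix.lower() in IMAGE_SUFFIXES:
                samples.append((path, label))
    return samples
```

Deriving the class index from the tag's position keeps labels stable as long as the tag list order is fixed; adding a new platform at the end of the list does not renumber existing classes.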
