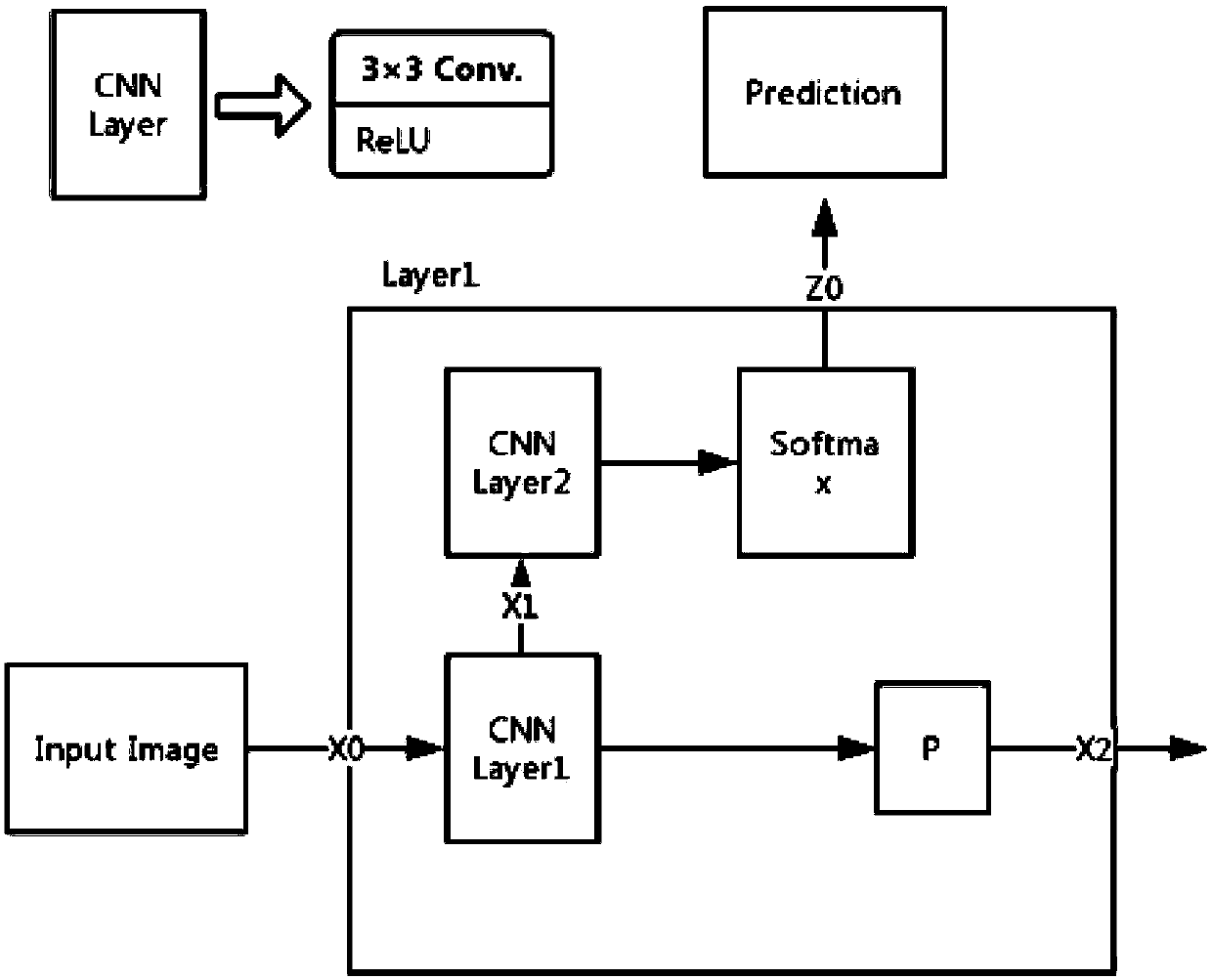

A neural network migration method based on shallow learning

A neural network transfer (migration) technology in the field of neural network models, applied in neural learning methods, biological neural network models, neural architectures, etc. It addresses problems such as unclear choice of the layers to transfer, transfer methods that are difficult to apply, and increased method complexity. Its effects are a lower demand for memory and graphics card resources and simple, efficient transfer learning.

Examples

Embodiment 1

[0056] In a preferred embodiment of the present invention, the neural network migration method based on shallow learning is applied to image recognition tasks. The steps of the method are as follows:

[0057] Step 1. Preprocess the target task data set: divide the image recognition tasks into categories to form a task dictionary, label the classified target tasks, and store the labeled data as the training data x_0 of the shallow neural network. Objects of the same type share largely the same attributes and characteristics; for example, an animal has a head and limbs, and a vehicle has wheels and a carrier. Specifically:

[0058] 1) The images in the open-source data set ImageNet are roughly divided into image recognition tasks according to the kind of object to be identified: animals, plants, buildings, roads, landscapes, objects, vehicles, and text. These categories are recorded as 1 to 8. The images are sorted into eight folders according to these categorie...
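The task dictionary and folder sorting described in step 1) could be sketched as follows. This is only an illustration under stated assumptions, not the patent's implementation: the numbering 1 to 8 is assumed to follow the order in which the categories are listed, and the input format (pairs of image path and category name), the function name `sort_into_task_folders`, and the `<id>_<category>` folder naming are all hypothetical.

```python
import shutil
from pathlib import Path

# Task dictionary: the eight coarse categories, numbered 1 to 8.
# Assumption: ids follow the order in which the categories are listed.
TASK_DICT = {
    "animals": 1, "plants": 2, "buildings": 3, "roads": 4,
    "landscapes": 5, "objects": 6, "vehicles": 7, "text": 8,
}


def sort_into_task_folders(labelled_images, out_root):
    """Copy each image into out_root/<id>_<category>/ and count images per id.

    labelled_images: iterable of (image_path, category_name) pairs
                     (hypothetical input format).
    Returns a dict mapping each task id (1 to 8) to the number of images copied.
    """
    out_root = Path(out_root)
    counts = {task_id: 0 for task_id in TASK_DICT.values()}
    for image_path, category in labelled_images:
        task_id = TASK_DICT[category]  # raises KeyError for an unknown category
        target_dir = out_root / f"{task_id}_{category}"  # hypothetical naming
        target_dir.mkdir(parents=True, exist_ok=True)
        shutil.copy2(image_path, target_dir / Path(image_path).name)
        counts[task_id] += 1
    return counts
```

A call such as `sort_into_task_folders([("img/cat.jpg", "animals")], "tasks")` would copy the file to `tasks/1_animals/cat.jpg`. Copying rather than moving leaves the original data set untouched, which is a design choice of this sketch rather than something the source specifies.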
