Data processing method and related apparatus

A technology for data processing and processing results, applied in the field of data processing. It addresses problems involving accelerated computing units, low processing performance, and source data processing, with the effects of increasing system capacity, improving reliability, and reducing space size.

- Summary

- Abstract

- Description

- Claims

- Application Information

AI Technical Summary

Problems solved by technology

Method used

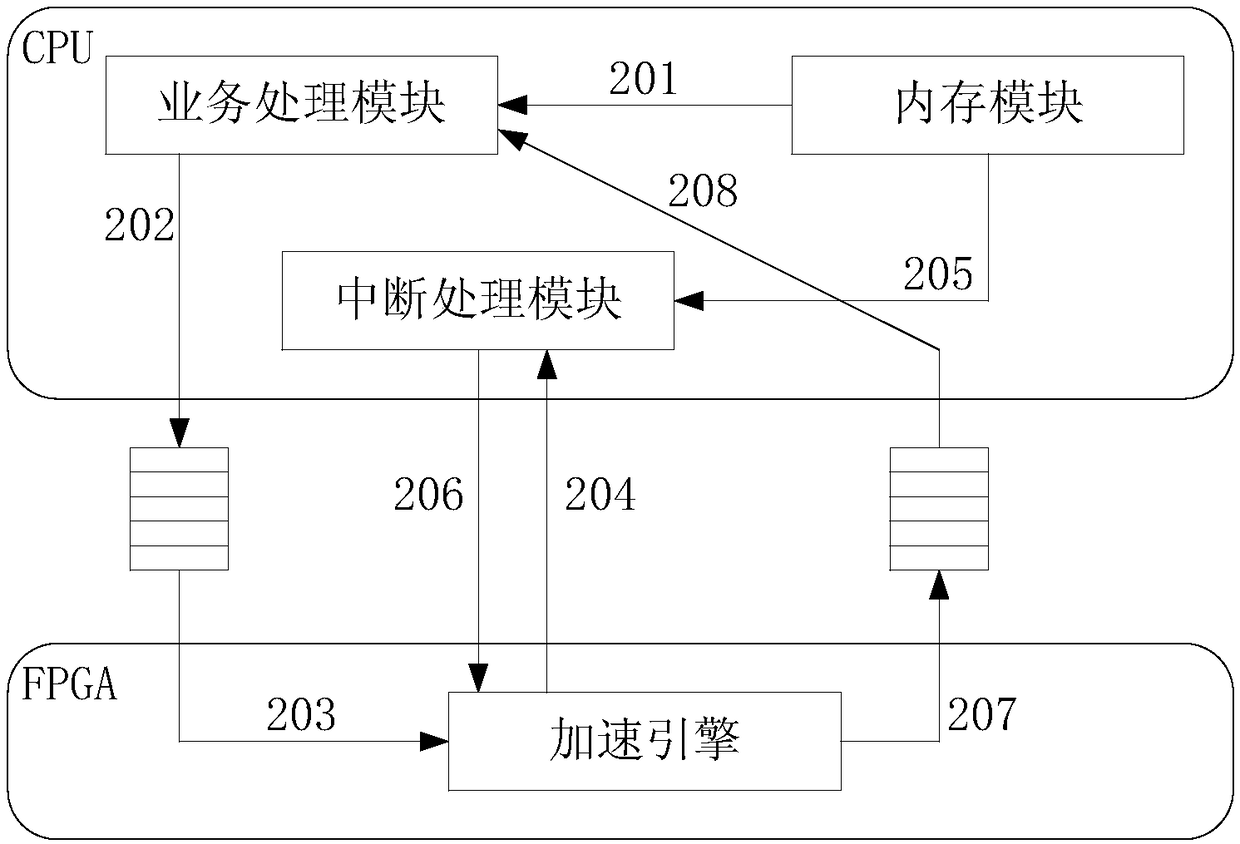

Image

Examples

Example 1

[0279] When the cache management module sets the value of the memory status word of the target memory address, it simultaneously configures a setting time for the memory status word; the setting time records when the value of the memory status word was set.

[0280] Thus, step E1 specifically includes:

[0281] When the verification module detects that the value of the memory status word of the target memory address indicates occupation by the CPU, it determines whether the difference between the setting time of the memory status word and the current time is greater than the preset time. If the difference is greater than the preset time, the value of the memory status word of the target memory address has remained "occupied by the CPU" for longer than the preset time, and step E2 is executed.

[0282] Here, the verification module detects that the value of ...
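The timeout check in Example 1 can be sketched in Python as follows. This is a minimal illustration under stated assumptions, not the patent's implementation: the names `Owner`, `MemoryStatusWord`, `set_status`, and `cpu_occupation_timed_out` are hypothetical, and timestamps are passed in explicitly (in a real system they might come from a monotonic clock such as `time.monotonic()`). The setting time is recorded together with the value, and the check reports whether step E2 should run.

```python
from dataclasses import dataclass
from enum import Enum


class Owner(Enum):
    # Hypothetical encoding of the memory status word values.
    CPU = "cpu"
    ACCEL = "accelerated_computing_unit"


@dataclass
class MemoryStatusWord:
    value: Owner
    set_time: float  # setting time: when `value` was last set


def set_status(word: MemoryStatusWord, value: Owner, now: float) -> None:
    """Cache management module (sketch): set the value and record the setting time together."""
    word.value = value
    word.set_time = now


def cpu_occupation_timed_out(word: MemoryStatusWord, now: float, preset_time: float) -> bool:
    """Verification module, step E1 (sketch): True means step E2 should be executed."""
    return word.value is Owner.CPU and (now - word.set_time) > preset_time


word = MemoryStatusWord(Owner.ACCEL, set_time=0.0)
set_status(word, Owner.CPU, now=10.0)
print(cpu_occupation_timed_out(word, now=12.0, preset_time=5.0))  # False
print(cpu_occupation_timed_out(word, now=16.0, preset_time=5.0))  # True
```

Passing `now` explicitly keeps the check deterministic and easy to test; the strict `>` comparison mirrors "greater than the preset time" in [0281].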

Example 2

[0284] In some embodiments of the present invention, the status indication field includes information such as a memory status word, a verification status word, and a status synchronization time.

[0285] The target memory address is also configured with a verification status word.

[0286] The verification status word corresponds to the status synchronization time, which indicates when the value of the verification status word was synchronized to the value of the memory status word;

[0287] The value of the verification status word of the target memory address is obtained by synchronizing it to the value of the memory status word of the target memory address when the synchronization condition holds. The synchronization condition is that the value of the verification status word of the target memory address differs from the value of the memory status word of the target memory address;

[0288] The value of the memory status word of the target...
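The synchronization rule in [0286] and [0287] can be sketched in Python like this. It is a minimal sketch under assumptions: `StatusIndicationField` and `synchronize` are hypothetical names, the status words are modeled as plain strings, and the source does not say which module performs the synchronization, so the sketch leaves that open.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass
class StatusIndicationField:
    memory_status_word: str            # e.g. "cpu" or "accel" (hypothetical encoding)
    verification_status_word: str
    status_sync_time: Optional[float] = None  # when the verification word was last synchronized


def synchronize(status: StatusIndicationField, now: float) -> bool:
    """Copy the memory status word into the verification status word only when they differ.

    Returns True if a synchronization took place (and the sync time was updated).
    """
    if status.verification_status_word != status.memory_status_word:
        status.verification_status_word = status.memory_status_word
        status.status_sync_time = now
        return True
    return False


status = StatusIndicationField(memory_status_word="cpu", verification_status_word="accel")
print(synchronize(status, now=5.0))  # True: the values differed, so they were synchronized
print(synchronize(status, now=9.0))  # False: already equal, so the sync time stays 5.0
print(status.status_sync_time)       # 5.0
```

Updating the synchronization time only when a copy actually happens means the timestamp tracks the last change of value rather than the last time the check ran.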

Example 3

[0300] When the cache management module sets the value of the memory status word of the target memory address to "occupied by the CPU", it also sets a countdown start flag for the memory status word; the countdown start flag triggers a countdown of the preset countdown time;

[0301] When the cache management module sets the value of the memory status word of the target memory address to "occupied by the accelerated computing unit", it sets a countdown cancel flag for the memory status word; the countdown cancel flag triggers cancellation of the countdown of the preset countdown time.

[0302] Thus, step E1 specifically includes:

[0303] When the verification module detects the countdown start flag of the memory status word of the target memory address, it starts counting down the preset countdown time. If the countdown reaches zero, this indicates that the value of the memory status word of the target memory address continues...
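The flag-driven countdown in Example 3 can be sketched in Python as below. This is a simplified, hypothetical model: `StatusWord`, `cache_manager_set`, `VerificationModule`, and `tick` are illustrative names, time advances in discrete ticks, and the verification module consumes each flag once it acts on it. The source text is truncated before it states what happens after the countdown reaches zero, so the sketch only reports that point (by analogy with step E1 leading to E2).

```python
from dataclasses import dataclass
from enum import Enum
from typing import Optional


class Owner(Enum):
    # Hypothetical encoding of the memory status word values.
    CPU = "cpu"
    ACCEL = "accelerated_computing_unit"


@dataclass
class StatusWord:
    value: Owner
    countdown_start: bool = False   # countdown start flag
    countdown_cancel: bool = False  # countdown cancel flag


def cache_manager_set(word: StatusWord, value: Owner) -> None:
    """Set the value and the matching flag: start for CPU, cancel for the accelerated unit."""
    word.value = value
    word.countdown_start = value is Owner.CPU
    word.countdown_cancel = value is Owner.ACCEL


class VerificationModule:
    def __init__(self, preset_ticks: int) -> None:
        self.preset_ticks = preset_ticks      # preset countdown time, in ticks
        self.remaining: Optional[int] = None  # None means no countdown is running

    def tick(self, word: StatusWord) -> bool:
        """Advance one time unit; return True when the countdown reaches zero."""
        if word.countdown_cancel:
            # Cancel flag: stop any running countdown and consume the flag.
            self.remaining = None
            word.countdown_cancel = False
            return False
        if word.countdown_start:
            # Start flag: (re)start the countdown from the preset time and consume the flag.
            self.remaining = self.preset_ticks
            word.countdown_start = False
        if self.remaining is None:
            return False
        self.remaining -= 1
        if self.remaining <= 0:
            self.remaining = None
            return True
        return False


word = StatusWord(Owner.ACCEL)
verifier = VerificationModule(preset_ticks=3)
cache_manager_set(word, Owner.CPU)
print([verifier.tick(word) for _ in range(3)])  # [False, False, True]
```

Compared with Example 1, this variant needs no stored timestamp: the cache management module only raises flags, and the verification module owns the timer state.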


© 2024 PatSnap. All rights reserved.