A Joint Calibration Method of Multi-line LiDAR and Camera Based on Refined Radar Scanning Edge Points

A joint calibration technique for lidar and camera, applied in radio wave measurement systems, image analysis, image enhancement, and related fields. It addresses the problem of inaccurate edge calibration points and yields more accurate calibration results by avoiding insufficient precision.


Embodiment Construction

[0043] The present invention will be further described below in conjunction with the accompanying drawings.

[0044] Referring to Figures 1 to 4, a joint calibration method for a multi-line lidar and a camera that refines the lidar scanning edge points includes the following steps:

[0045] 1) Perform intrinsic calibration on the camera to obtain the camera's intrinsic matrix:

[0046]

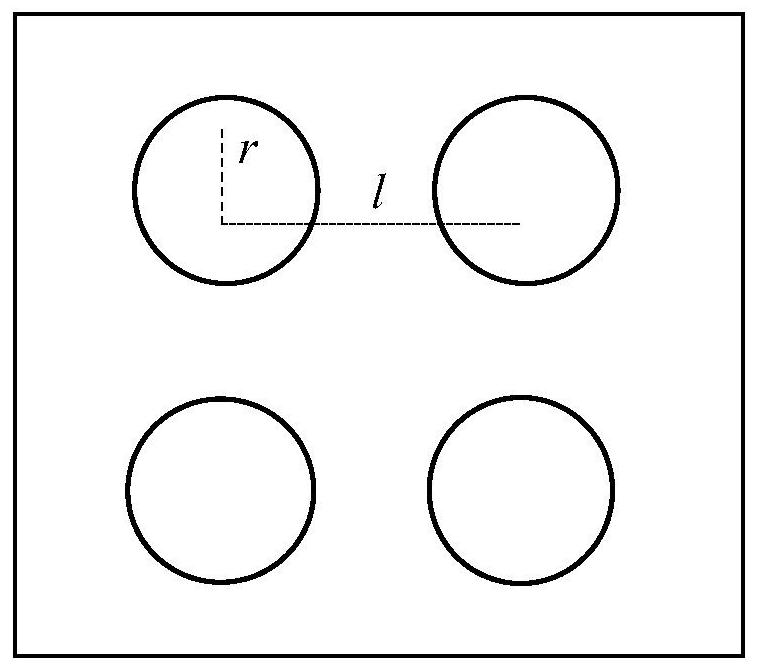

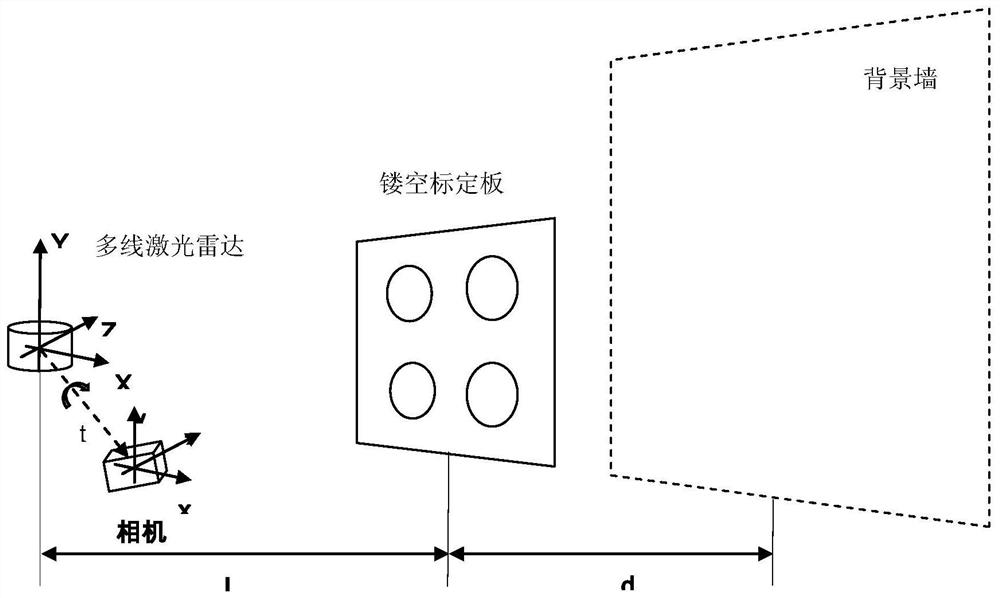

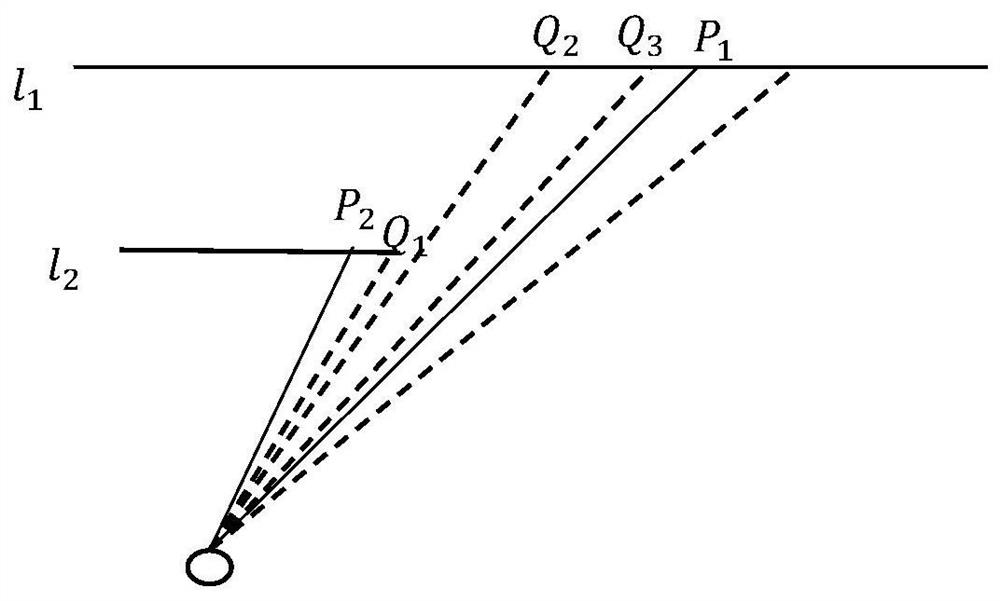

[0047] Here f is the focal length of the camera and [O_x, O_y] is the principal point, where the optical axis meets the image plane. As shown in Figure 1, design a calibration object with distinct spatial geometric features: a board with four hollow circles of the same radius r whose centers are spaced the same distance l apart. Place it where the camera and the lidar can both observe it at the same time. As shown in Figure 2, the positions of the lidar and the camera are fixed relative to each other, the distance from the calibration board to the lidar is L, and the distance between the background wall...
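The intrinsic matrix in [0046] survives only as an image, so the following is a minimal sketch that assumes it has the standard pinhole form with a single focal length f (in pixels) and principal point [O_x, O_y]. The function names and the numeric values are hypothetical and do not come from the patent. The sketch shows how such a matrix maps a 3D point in the camera frame to pixel coordinates.

```python
def intrinsic_matrix(f, o_x, o_y):
    """Build a 3x3 pinhole intrinsic matrix (assumed form, single focal length f in pixels)."""
    return [
        [f, 0.0, o_x],
        [0.0, f, o_y],
        [0.0, 0.0, 1.0],
    ]


def project(K, point_cam):
    """Project a 3D point (x, y, z) in the camera frame to pixel coordinates (u, v)."""
    x, y, z = point_cam
    if z <= 0:
        raise ValueError("point must lie in front of the camera (z > 0)")
    # Homogeneous image coordinates: K @ [x, y, z]^T
    u_h = K[0][0] * x + K[0][1] * y + K[0][2] * z
    v_h = K[1][0] * x + K[1][1] * y + K[1][2] * z
    w = K[2][0] * x + K[2][1] * y + K[2][2] * z
    # Divide by the homogeneous coordinate to obtain pixels
    return u_h / w, v_h / w


# Hypothetical values for illustration only, not taken from the patent.
K = intrinsic_matrix(f=800.0, o_x=320.0, o_y=240.0)
u, v = project(K, (0.5, -0.25, 2.0))
```

Under this assumed form, a point on the optical axis projects exactly onto the principal point, which is a quick sanity check on any calibrated matrix.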
