Gesture recognition device and man-machine interaction system

A gesture recognition device and a human-computer interaction system, in the field of human-computer interaction. The invention addresses the low recognition efficiency and complex structure of existing gesture recognition devices, and achieves improved recognition efficiency with a simple structure.

Examples

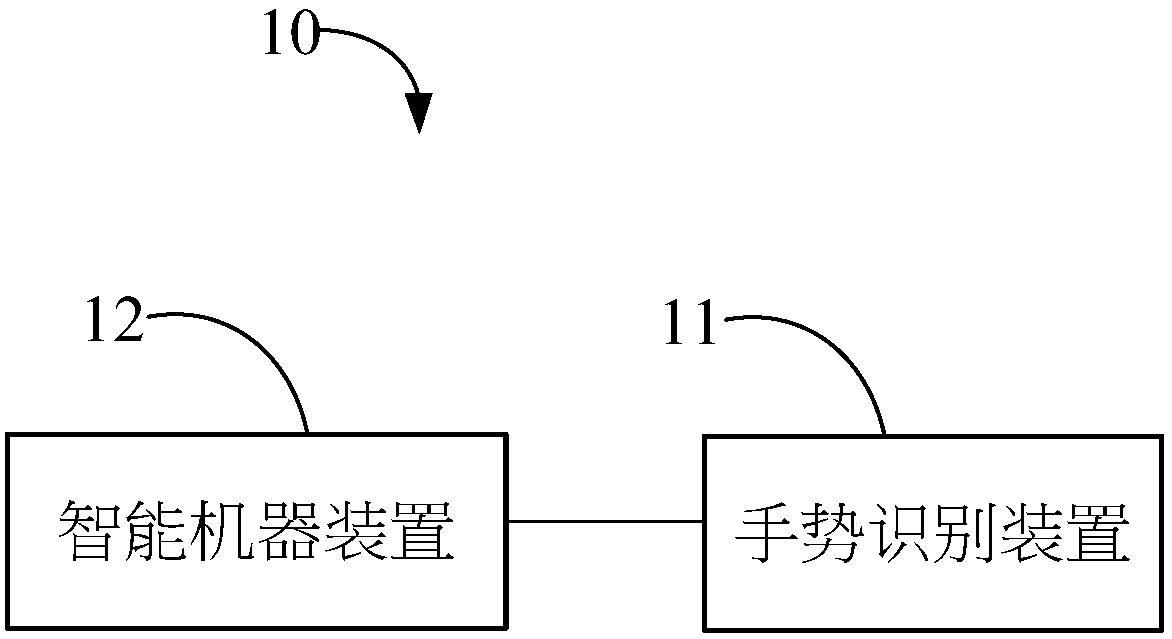

Embodiment 1

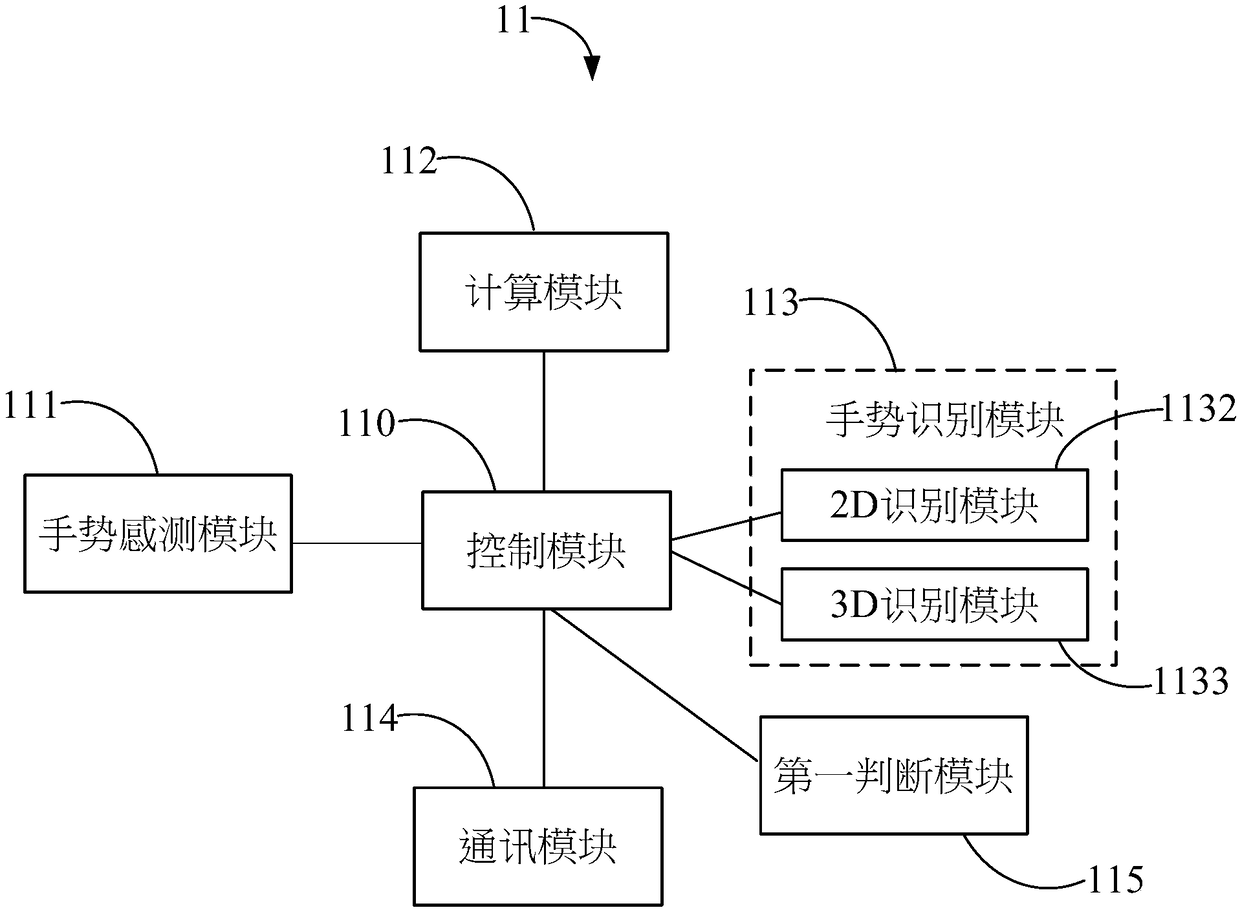

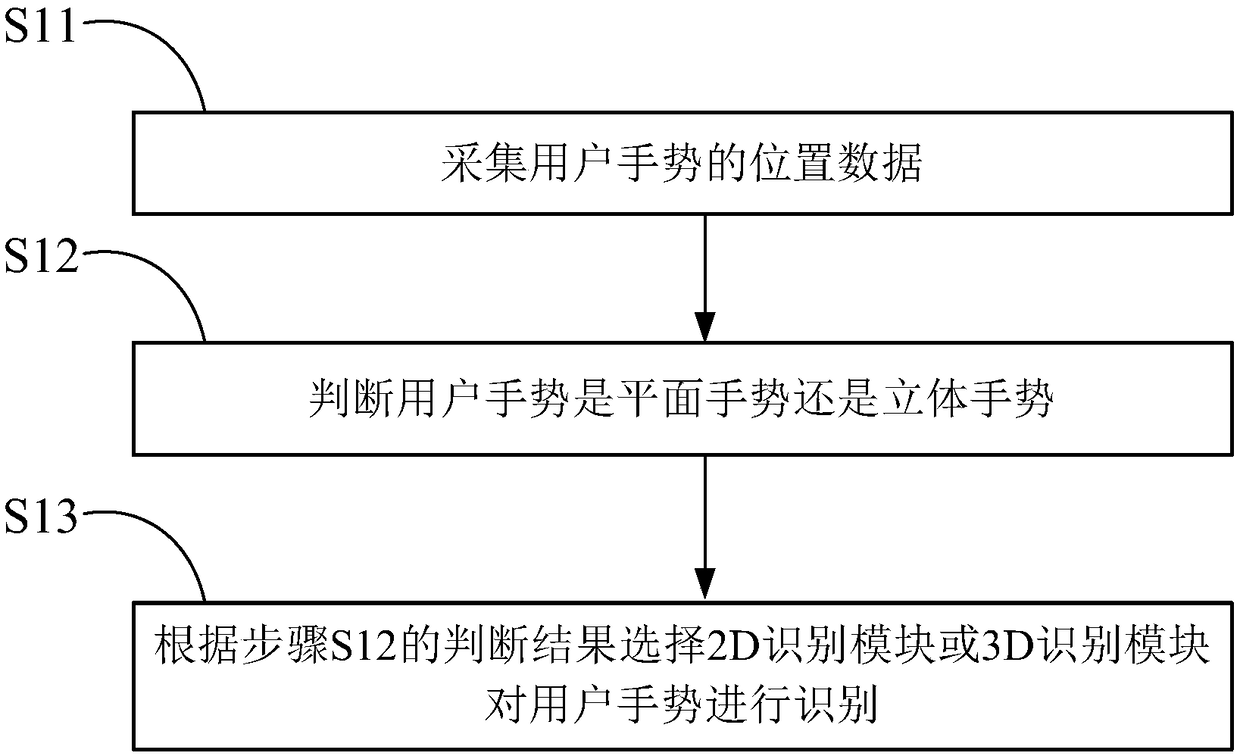

[0029] Referring to Figure 2, in this embodiment the gesture recognition device 11 includes a control module 110, a gesture sensing module 111, a calculation module 112, a gesture recognition module 113 and a communication module 114. The gesture sensing module 111, calculation module 112, gesture recognition module 113 and communication module 114 are each connected to the control module 110.
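In paragraph [0029], each functional module connects only to the control module 110, so data moving between modules passes through that hub. The Python sketch below models this star topology. The class `ControlModule`, the string keys, the stub handlers and the fixed sensing, calculation, recognition, communication order are all hypothetical assumptions made for illustration; the source does not describe the device's software.

```python
from typing import Any, Callable, Dict, List


class ControlModule:
    """Hypothetical hub modelling control module 110: peripheral modules are
    registered here and never call each other directly (star topology)."""

    def __init__(self) -> None:
        self.modules: Dict[str, Callable[..., Any]] = {}
        self.trace: List[str] = []  # records which module the hub invoked, in order

    def connect(self, name: str, handler: Callable[..., Any]) -> None:
        self.modules[name] = handler

    def run_once(self) -> Any:
        # Assumed processing order; the source does not specify a sequence.
        positions = self.modules["sensing"]()              # gesture sensing module 111
        self.trace.append("sensing")
        features = self.modules["calculation"](positions)  # calculation module 112
        self.trace.append("calculation")
        gesture = self.modules["recognition"](features)    # gesture recognition module 113
        self.trace.append("recognition")
        self.modules["communication"](gesture)             # communication module 114
        self.trace.append("communication")
        return gesture


# Usage with stub handlers (all hypothetical).
sent: List[str] = []
hub = ControlModule()
hub.connect("sensing", lambda: [(0.0, 0.0, 0.0), (0.2, 0.0, 0.0)])
hub.connect("calculation", lambda pts: tuple(b - a for a, b in zip(pts[0], pts[-1])))
hub.connect("recognition", lambda d: "swipe_right" if d[0] > 0 else "other")
hub.connect("communication", sent.append)
result = hub.run_once()
```

Because every module is reached only through the hub, any module can be replaced without affecting the others. The trade-off is that the control module becomes the single point of coordination.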

[0030] The control module 110 controls the overall operation of the gesture recognition device 11. The gesture sensing module 111 collects position data of the user's gestures and includes a 3D sensing device, which may be any type of 3D sensing device, such as an infrared, laser or ultrasonic sensing device. In this embodiment, the 3D sensing device is a Leap Motion device. The calculation module 112 analyzes and processes the position data and other data of the user's gestures. The gesture recognition mod...
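Paragraph [0030] says only that the calculation module 112 "analyzes and processes" the position data, and this excerpt does not give the specific processing. As a hedged illustration, the sketch below assumes timestamped samples `(t, x, y, z)` and computes a few common trajectory features: net displacement, path length, duration and mean speed. It also assigns a dominant-axis direction label. The function names, the sample format and the `min_travel` threshold are hypothetical, and units are left unspecified.

```python
import math
from typing import Dict, Sequence, Tuple

# Assumed sample format: (t, x, y, z); units are not specified in the source.
Sample = Tuple[float, float, float, float]


def gesture_features(samples: Sequence[Sample]) -> Dict[str, object]:
    """Hypothetical trajectory features a calculation module might compute."""
    if len(samples) < 2:
        raise ValueError("need at least two samples")
    t0, *start = samples[0]
    t1, *end = samples[-1]
    displacement = tuple(b - a for a, b in zip(start, end))
    path_length = 0.0
    for prev, cur in zip(samples, samples[1:]):
        # Euclidean distance between consecutive positions (timestamps skipped)
        path_length += math.sqrt(sum((b - a) ** 2 for a, b in zip(prev[1:], cur[1:])))
    duration = t1 - t0
    mean_speed = path_length / duration if duration > 0 else 0.0
    return {
        "displacement": displacement,
        "path_length": path_length,
        "duration": duration,
        "mean_speed": mean_speed,
    }


def dominant_direction(displacement: Sequence[float], min_travel: float = 0.05) -> str:
    """Label the axis with the largest net movement, e.g. '+x'; 'none' if too small."""
    axis = max(range(3), key=lambda i: abs(displacement[i]))
    if abs(displacement[axis]) < min_travel:
        return "none"
    sign = "+" if displacement[axis] > 0 else "-"
    return sign + "xyz"[axis]


features = gesture_features([(0.0, 0.0, 0.0, 0.0), (1.0, 3.0, 4.0, 0.0), (2.0, 3.0, 4.0, 0.0)])
```

In this example the hand moves 5 units in the first second and then stays still, so the path length is 5.0, the mean speed over 2 seconds is 2.5, and the dominant direction is `+y`.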

Embodiment 2

[0044] Referring to Figure 5, in this embodiment the gesture recognition device 11A includes a control module 110, a gesture sensing module 111, a calculation module 112, a gesture recognition module 113, a communication module 114, a first judging module 115 and a second judging module 116.

[0045] The gesture recognition device 11A of Embodiment 2 of the present invention has basically the same structure as the gesture recognition device 11 of Embodiment 1, the difference being that it further includes a second judging module 116. The second judging module 116 judges whether a gesture input start instruction or a gesture input end instruction has been received.

[0046] The second judging module 116 may judge whether a gesture input start instruction or a gesture input end instruction has been received by judging whether the communication module 114 has received an instruction from an external remote control device, or by ju...
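One way to picture the second judging module 116 is as a gate. A gesture input start instruction opens the gate, a gesture input end instruction closes it, and only the position samples received in between form a gesture. The sketch below assumes that model. The `Instruction` enum, the `("instruction" | "sample", payload)` event format and `segment_gestures` are hypothetical. The second detection path in [0046] is truncated in this excerpt, so the sketch treats every instruction the same way regardless of its source.

```python
from enum import Enum
from typing import Any, Iterable, List, Tuple


class Instruction(Enum):
    START = "gesture_input_start"
    END = "gesture_input_end"


class SecondJudgingModule:
    """Hypothetical model of second judging module 116 as an open/closed gate."""

    def __init__(self) -> None:
        self.active = False  # gesture input is off until a start instruction arrives

    def on_instruction(self, instruction: Instruction) -> None:
        if instruction is Instruction.START:
            self.active = True
        elif instruction is Instruction.END:
            self.active = False


def segment_gestures(events: Iterable[Tuple[str, Any]]) -> List[list]:
    """Collect the position samples received between each start/end pair."""
    judge = SecondJudgingModule()
    segments: List[list] = []
    current: list = []
    for kind, payload in events:
        if kind == "instruction":
            was_active = judge.active
            judge.on_instruction(payload)
            if was_active and not judge.active:  # an end instruction closed a segment
                segments.append(current)
                current = []
        elif kind == "sample" and judge.active:
            current.append(payload)
    return segments


events = [
    ("sample", (0.0, 0.0, 0.0)),        # ignored: input not yet started
    ("instruction", Instruction.START),
    ("sample", (1.0, 0.0, 0.0)),
    ("sample", (2.0, 0.0, 0.0)),
    ("instruction", Instruction.END),
    ("sample", (3.0, 0.0, 0.0)),        # ignored: input already ended
]
gestures = segment_gestures(events)
```

Here `gestures` holds one segment containing the two samples received between the start and end instructions. Hand movement outside that window is never passed on for recognition.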

Embodiment 3

[0060] Referring to Figure 7, in this embodiment the gesture recognition device 11B includes a control module 110, a gesture sensing module 111, a calculation module 112, a gesture recognition module 113, a communication module 114, a first judging module 115, a second judging module 116 and a third judging module 117.

[0061] The gesture recognition device 11B of Embodiment 3 of the present invention has basically the same structure as the gesture recognition device 11A of Embodiment 2, the difference being that it further includes a third judging module 117, which judges whether the gesture input mode is a 2D input mode or a 3D input mode.

[0062] The third judging module 117 may judge whether the gesture input mode is the 2D input mode or the 3D input mode by judging whether the communication module 114 has received an instruction from an external remote control device, or by judging whether the gesture recognition module 113 detects a ...
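This excerpt does not say how processing differs between the 2D and 3D input modes. A minimal sketch could work as follows, assuming that 2D mode simply discards the depth coordinate (z), that the device starts in 3D mode, and that a mode-switch instruction sets the mode directly. `InputMode`, `ThirdJudgingModule` and its methods are hypothetical names.

```python
from enum import Enum
from typing import List, Sequence, Tuple


class InputMode(Enum):
    MODE_2D = "2d"
    MODE_3D = "3d"


class ThirdJudgingModule:
    """Hypothetical model of third judging module 117."""

    def __init__(self, default: InputMode = InputMode.MODE_3D) -> None:
        self.mode = default  # assumed default; the source does not state one

    def on_instruction(self, mode: InputMode) -> None:
        # e.g. a mode-switch instruction relayed by communication module 114
        self.mode = mode

    def adapt(self, positions: Sequence[Tuple[float, float, float]]) -> List[tuple]:
        if self.mode is InputMode.MODE_2D:
            # Assumption: 2D input projects onto the x-y plane by dropping z.
            return [(x, y) for x, y, _z in positions]
        return [tuple(p) for p in positions]


judge = ThirdJudgingModule()
as_3d = judge.adapt([(1.0, 2.0, 3.0)])
judge.on_instruction(InputMode.MODE_2D)
as_2d = judge.adapt([(1.0, 2.0, 3.0)])
```

Keeping the mode decision in one place means the downstream recognition step receives either planar or spatial coordinates and never has to check the mode itself.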
