High-bandwidth memory-based neural network calculation apparatus and method

High-bandwidth memory and neural network technology, applied to biological neural network models, computing, instruments and related fields. It addresses the high power consumption and the difficulty of reducing area that hinder continued growth in the performance of neural network computing devices. The effects are improved computing performance and increased data transmission bandwidth and transmission speed.

Embodiment Construction

[0030] In order to make the object, technical solution and advantages of the present invention clearer, the present invention will be described in further detail below in conjunction with specific embodiments and with reference to the accompanying drawings.

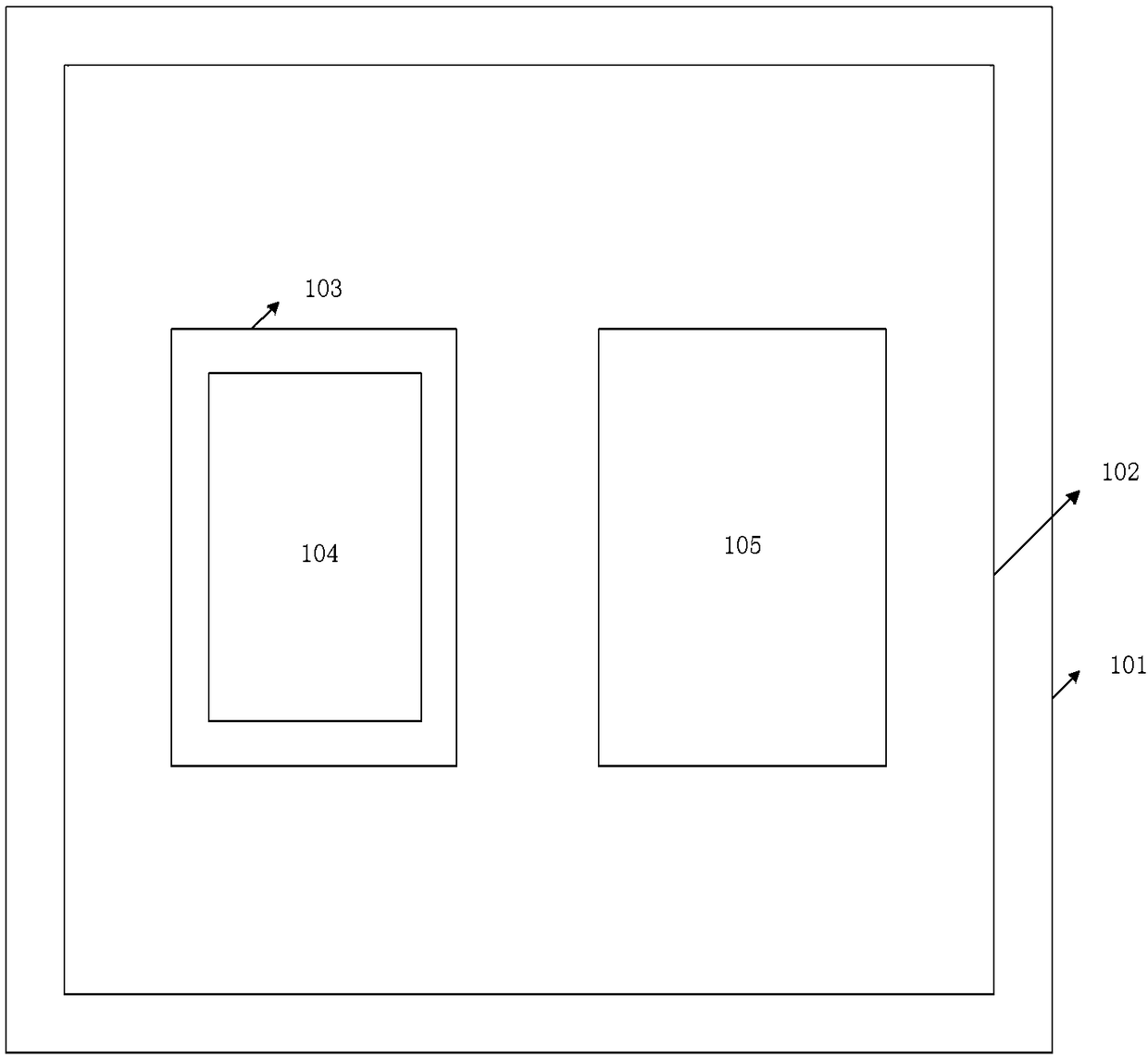

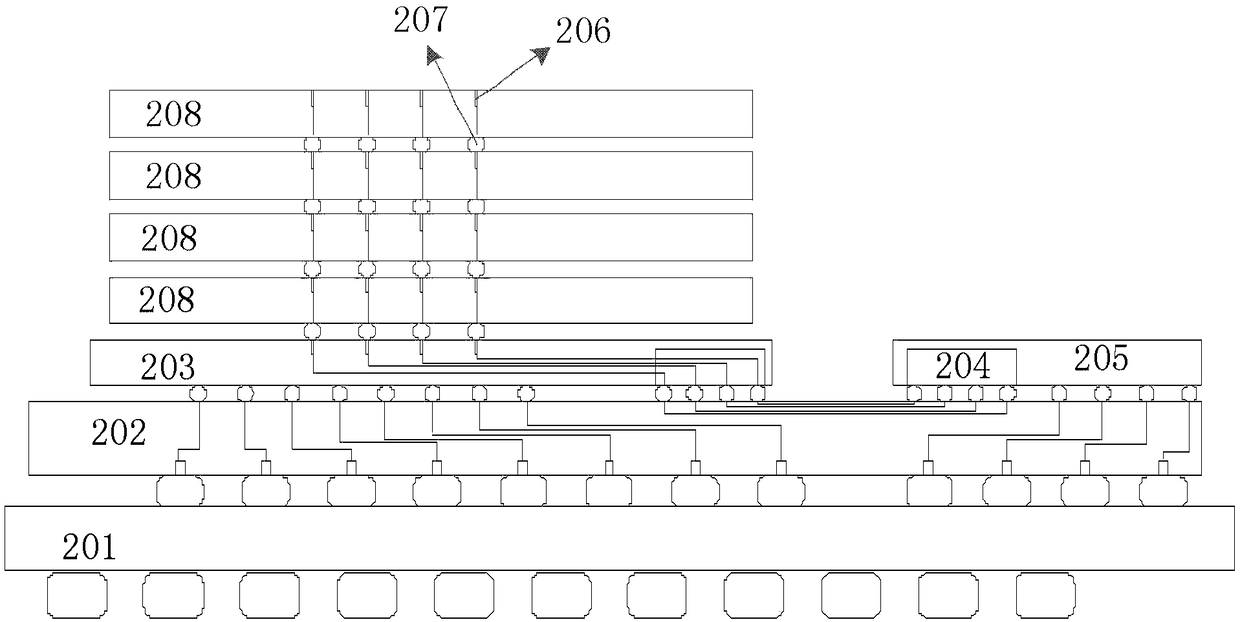

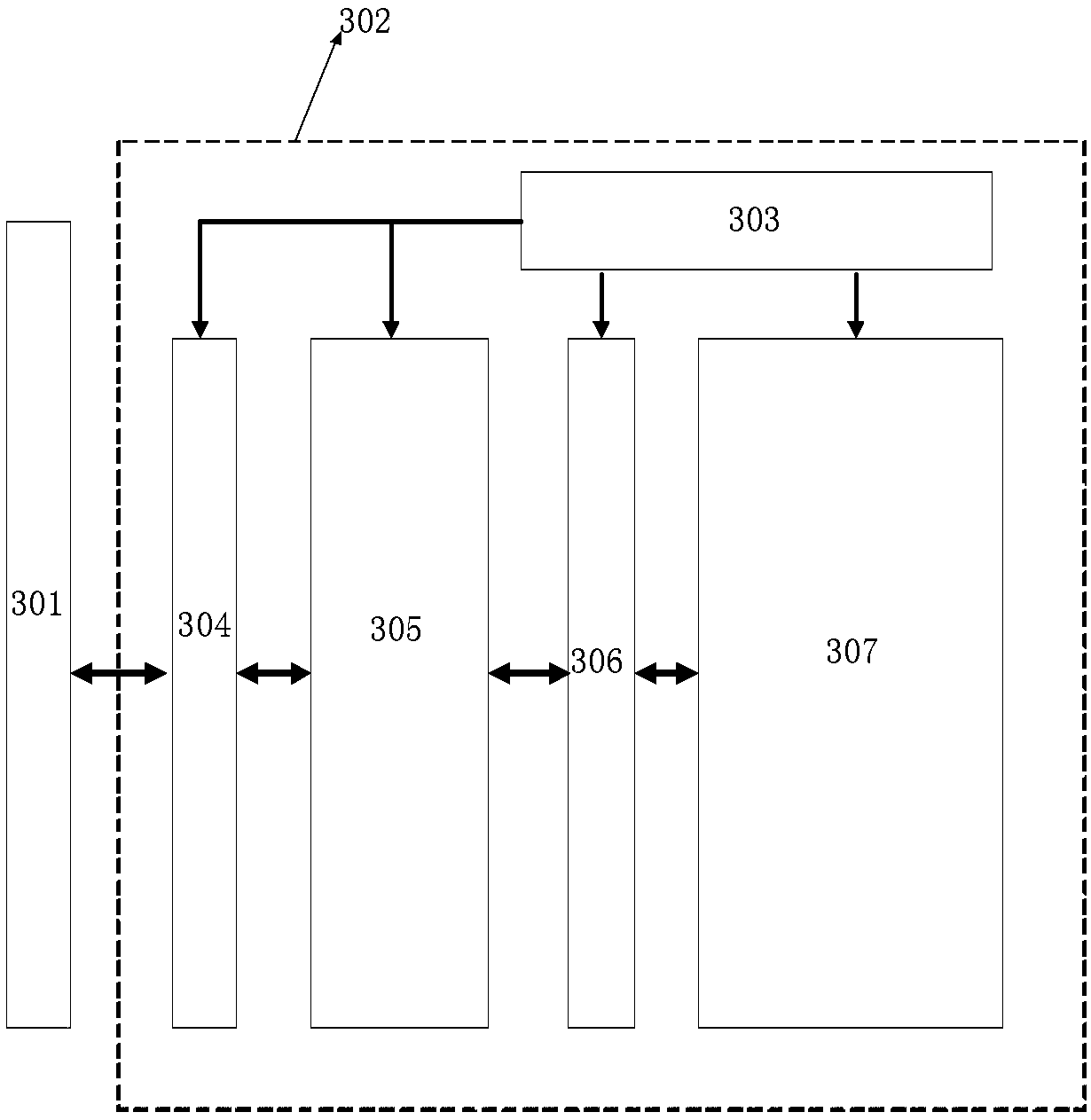

[0031] High-Bandwidth Memory (HBM) is a new type of low-power memory chip characterized by an ultra-wide data path, low power consumption and small area. An embodiment of the present invention proposes a neural network computing device based on high-bandwidth memory. Referring to Figure 1, the neural network computing device includes: a package substrate 101 (Package Substrate), an interposer 102 (Interposer), a logic chip 103 (Logic Die), a high-bandwidth memory 104 (Stacked Memory) and a neural network accelerator 105, wherein:

[0032] The package substrate 101 is used to carry the above-mentioned other components of the neural network computing device, and to be electrically connected to upper de...
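
The "ultra-wide data path" attributed to HBM in paragraph [0031] can be illustrated with a short calculation: peak bandwidth is roughly the interface width multiplied by the per-pin data rate. The following is a minimal sketch, not part of the patent. The function name and the example figures (a 1024-bit interface at 2 Gbit/s per pin, typical of an HBM2-class stack, versus a 32-bit chip at 8 Gbit/s per pin) are assumptions chosen for illustration only.

```python
def peak_bandwidth_gb_per_s(bus_width_bits: int, gbit_per_s_per_pin: float) -> float:
    """Peak transfer rate in gigabytes per second for one memory interface.

    Hypothetical helper for illustration; not defined in the patent.
    """
    return bus_width_bits * gbit_per_s_per_pin / 8  # 8 bits per byte


# Illustrative figures only, not taken from the patent text.
wide_stack = peak_bandwidth_gb_per_s(1024, 2.0)  # wide interface, modest per-pin rate
narrow_chip = peak_bandwidth_gb_per_s(32, 8.0)   # narrow interface, high per-pin rate

print(f"wide stack:  {wide_stack} GB/s")
print(f"narrow chip: {narrow_chip} GB/s")
```

Under these assumed figures, the wide interface delivers several times the bandwidth of the narrow one even though each pin runs more slowly, which is consistent with the low-power, high-bandwidth characteristics described above.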
