Constant Cache capable of supporting pipeline operation

Embodiment

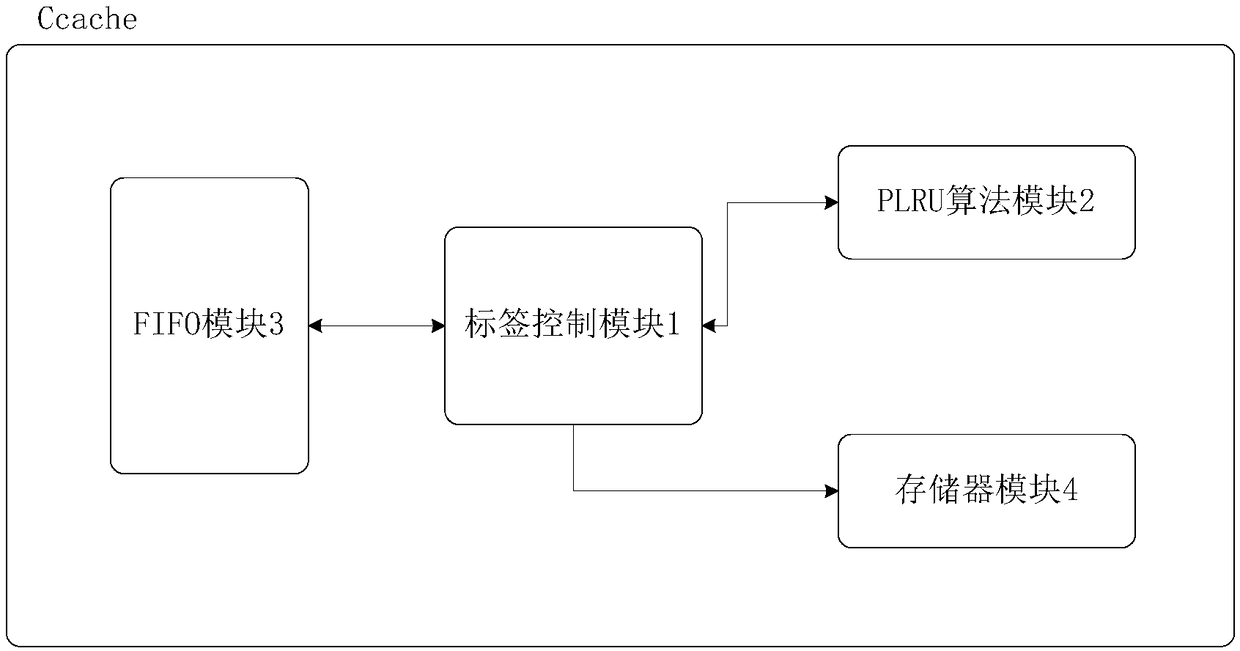

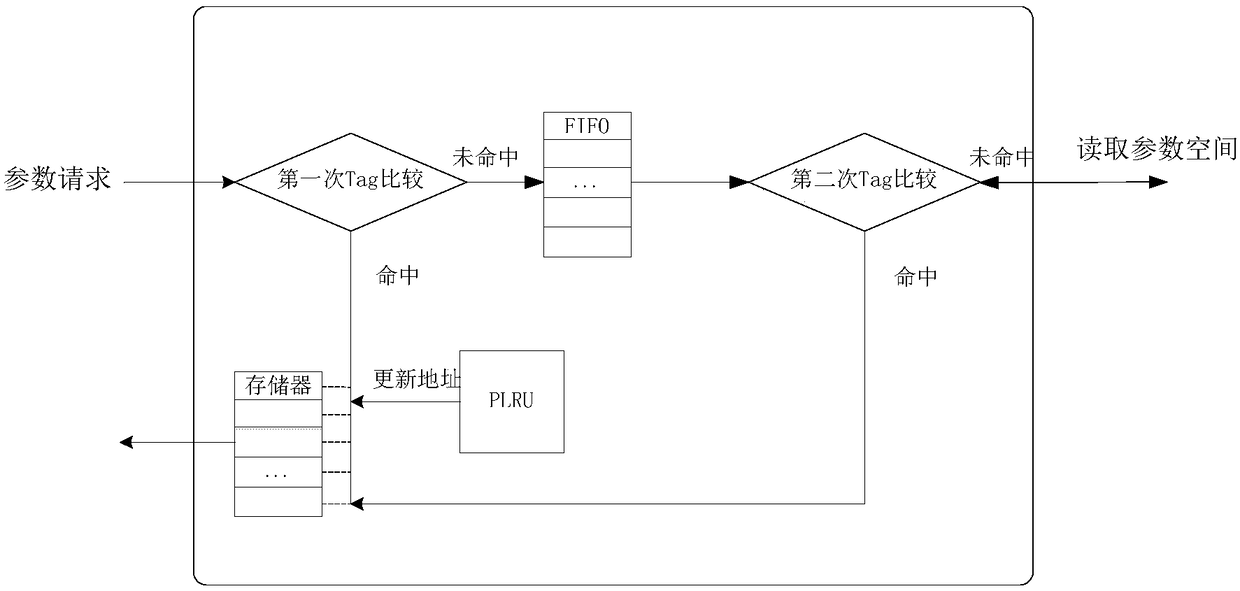

[0027] As shown in Figure 1, when the constant Cache receives a parameter request, the tag control module compares the address of the request with the mapping addresses saved in the tag registers. On a hit, the data is read directly from the memory and returned to the requester. On a miss, the request is saved to the FIFO. Once the FIFO module is detected to be non-empty, the tag register module reads the FIFO and performs a second comparison. If the request still misses, a read request to the parameter space is generated. After the data is read back, the corresponding memory and tag registers are updated at the replacement address generated by the PLRU algorithm. Throughout this process the Cache can continue to receive parameter requests, and any request that misses is stored in the FIFO to await processing.
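The request flow above can be sketched as a small behavioral model. This is an illustration under stated assumptions, not the patented circuit and not cycle-accurate: the class and method names (`ConstantCacheModel`, `request`, `service_one_miss`), the fully associative organization, the 4-word block size and the round-robin victim choice (a stand-in for the PLRU replacement address) are all hypothetical. The model does show one plausible reason for the second comparison: a request queued behind an earlier miss to the same block can hit once that block has been filled, so no duplicate parameter-space read is issued.

```python
from collections import deque


class ConstantCacheModel:
    """Behavioral sketch of the hit/miss/FIFO flow; all names are hypothetical."""

    def __init__(self, param_space, num_lines=4, block_words=4):
        self.param_space = param_space            # backing "parameter space" (list of words)
        self.block_words = block_words            # assumed words per cache block
        self.tags = [None] * num_lines            # tag registers: block number mapped by each line
        self.data = [[0] * block_words for _ in range(num_lines)]  # cache data memory
        self.miss_fifo = deque()                  # miss requests waiting to be processed
        self.next_victim = 0                      # round-robin stand-in for the PLRU address
        self.backing_reads = 0                    # number of parameter-space reads issued

    def _lookup(self, addr):
        """Compare the request's block number with every tag register."""
        block = addr // self.block_words
        for line, tag in enumerate(self.tags):
            if tag == block:
                return line
        return None

    def request(self, addr):
        """First comparison: return the word on a hit, else queue the miss and return None."""
        line = self._lookup(addr)
        if line is not None:
            return self.data[line][addr % self.block_words]
        self.miss_fifo.append(addr)               # the cache stays free to accept new requests
        return None

    def service_one_miss(self):
        """Pop one queued miss, compare again, and refill only if it still misses."""
        if not self.miss_fifo:
            return None
        addr = self.miss_fifo.popleft()
        line = self._lookup(addr)                 # second comparison
        if line is None:
            block = addr // self.block_words
            base = block * self.block_words
            self.backing_reads += 1               # read request to the parameter space
            fill = self.param_space[base:base + self.block_words]
            line = self.next_victim               # hypothetical replacement choice (not PLRU)
            self.next_victim = (self.next_victim + 1) % len(self.tags)
            self.data[line] = list(fill)          # update the memory ...
            self.tags[line] = block               # ... and the tag register
        return addr, self.data[line][addr % self.block_words]
```

For example, with `params = list(range(100, 164))`, requests for addresses 5 and 6 both miss and are queued; servicing the first one reads block 1 from the parameter space, and servicing the second one hits on the second comparison, so `backing_reads` stays at 1.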

[0028] The PLRU module uses an MRU (Most Recently Used) bit to record the access history of each Cache block ...
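Because the description of the PLRU module is truncated here, the following is only a sketch of the common MRU-bit ("bit-PLRU") scheme, assuming one MRU bit per block that is set on access, with all other bits cleared whenever every bit would otherwise be 1; the replacement address is the lowest-numbered block whose bit is 0. The patent's exact update rule may differ, and the class name `MruBitPlru` is hypothetical.

```python
class MruBitPlru:
    """Bit-based pseudo-LRU sketch: one MRU bit per cache block (hypothetical name)."""

    def __init__(self, num_lines):
        if num_lines < 2:
            raise ValueError("bit-PLRU needs at least two blocks")
        self.mru = [0] * num_lines

    def touch(self, line):
        """Mark a block as recently used; on saturation, keep only this block's bit set."""
        self.mru[line] = 1
        if all(self.mru):
            self.mru = [0] * len(self.mru)
            self.mru[line] = 1

    def victim(self):
        """Replacement address: the lowest-numbered block whose MRU bit is 0."""
        return self.mru.index(0)
```

With four blocks, touching blocks 0, 1 and 2 leaves block 3 as the victim. Touching block 3 then saturates the bits, so only block 3 keeps its MRU bit and block 0 becomes the next victim. The scheme needs one bit per block instead of a full LRU ordering, at the cost of only approximating true LRU.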