3D Model Retrieval Method Based on Optimal View and Deep Convolutional Neural Network

The invention applies convolutional neural network and 3D model technology in the field of computer graphics. It addresses problems such as inconsistent viewpoint quality and large differences in extraction results between models, and achieves good expressiveness, improved retrieval performance, and strong feature robustness.

Embodiment

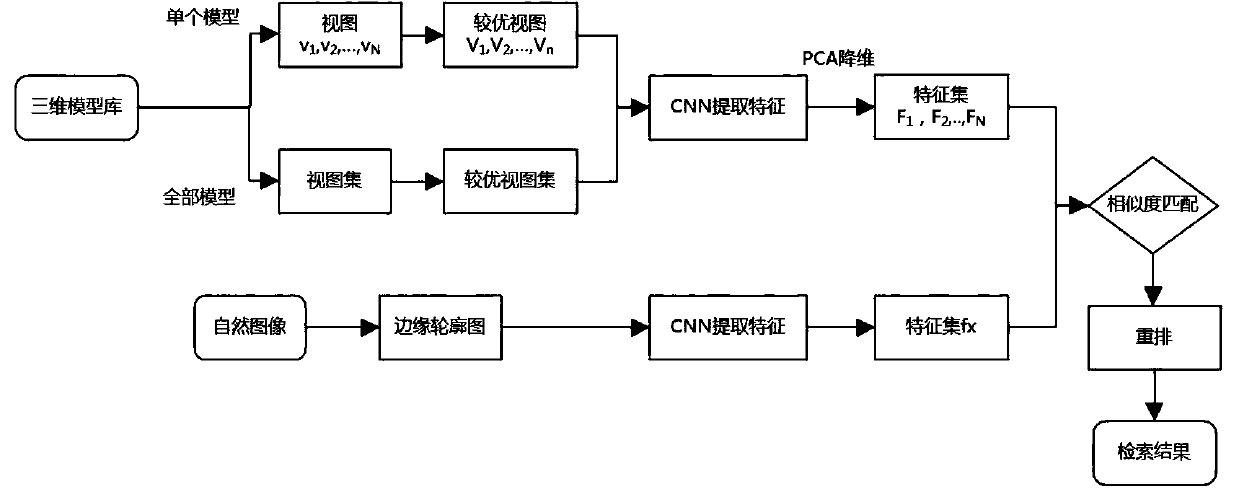

[0037] Example: As shown in Figure 1, the 3D model retrieval method based on the optimal view and a deep convolutional neural network includes the following steps:

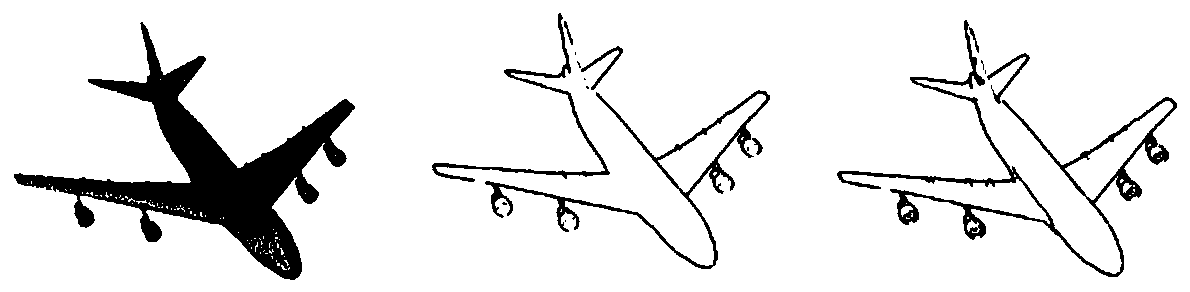

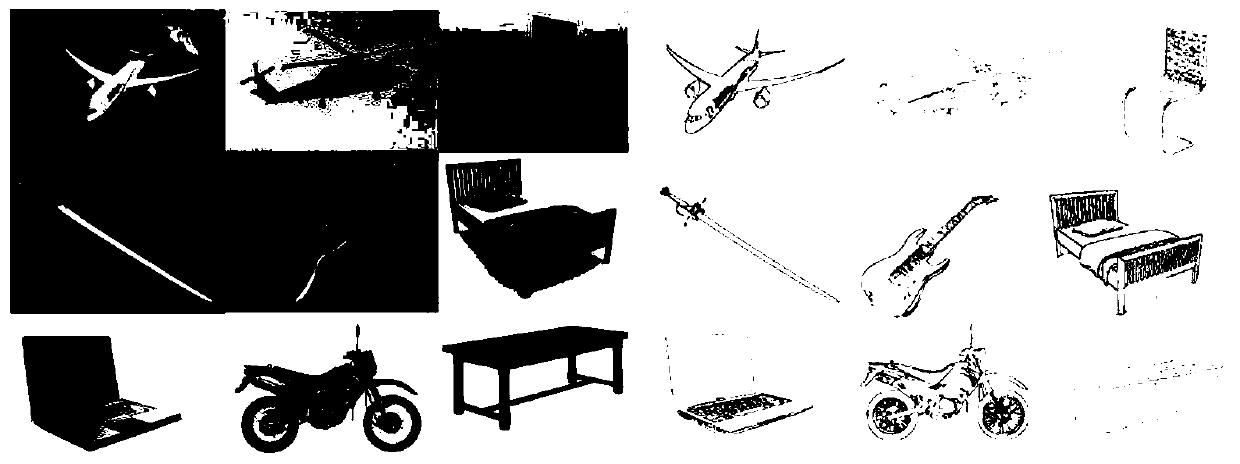

[0038] Step 1: Extract views from a total of 2106 3D models. First, according to the predefined initial viewpoint, the 3D model is enclosed in a viewpoint sphere that is centered at the model's center of mass and contains multiple viewpoints. For rendering, closed contour lines are combined with implied contours (hereinafter referred to as mixed contour lines). The closed contour line is obtained by detecting and drawing the parts of the model surface where the surface normal is perpendicular to the viewpoint vector, while the implied contour adds nearby lines whose curvature is consistent with human visual perception; together they yield a two-dimensional view of the three-dimensional model. Extract a 2D view of a 3D model using a hybrid silho...
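The geometric part of Step 1 can be illustrated with a minimal sketch in Python with NumPy. It is not the patent's implementation. The function names `viewpoint_sphere` and `contour_edges`, the parameters `n_views` and `radius_scale`, and the Fibonacci-spiral placement of viewpoints are all assumptions made for illustration; the text does not say how viewpoints are distributed on the sphere. The sketch also uses the vertex centroid as a stand-in for the center of mass, and it covers only the closed contour line (on a triangle mesh, the edges where a front-facing triangle meets a back-facing one, which is the discrete form of "surface normal perpendicular to the viewpoint vector"). The implied contour is not modeled.

```python
import numpy as np


def viewpoint_sphere(vertices, n_views=20, radius_scale=2.0):
    """Place n_views camera positions on a sphere around the model.

    Assumption: viewpoints follow a Fibonacci spiral; the source text
    does not specify the sampling scheme.
    """
    vertices = np.asarray(vertices, dtype=float)
    # Vertex centroid, used here as an approximation of the center of mass.
    center = vertices.mean(axis=0)
    radius = radius_scale * np.linalg.norm(vertices - center, axis=1).max()
    i = np.arange(n_views) + 0.5
    polar = np.arccos(1.0 - 2.0 * i / n_views)
    azimuth = np.pi * (1.0 + 5.0 ** 0.5) * i
    directions = np.stack(
        [np.sin(polar) * np.cos(azimuth),
         np.sin(polar) * np.sin(azimuth),
         np.cos(polar)],
        axis=1,
    )
    return center + radius * directions


def contour_edges(vertices, faces, eye):
    """Return mesh edges shared by one front-facing and one back-facing face.

    Assumes a closed triangle mesh with consistently outward-oriented faces.
    """
    vertices = np.asarray(vertices, dtype=float)
    faces = np.asarray(faces, dtype=int)
    tri = vertices[faces]                                  # shape (F, 3, 3)
    normals = np.cross(tri[:, 1] - tri[:, 0], tri[:, 2] - tri[:, 0])
    to_eye = np.asarray(eye, dtype=float) - tri.mean(axis=1)
    # A face is front-facing when its normal points toward the viewpoint.
    front = np.einsum("ij,ij->i", normals, to_eye) > 0.0

    # Map each undirected edge to the faces that contain it.
    edge_faces = {}
    for f, (a, b, c) in enumerate(faces.tolist()):
        for u, v in ((a, b), (b, c), (c, a)):
            edge_faces.setdefault((min(u, v), max(u, v)), []).append(f)

    # The facing flag changes sign across a contour edge.
    return sorted(
        edge for edge, fs in edge_faces.items()
        if len(fs) == 2 and front[fs[0]] != front[fs[1]]
    )
```

In a full pipeline, each position returned by `viewpoint_sphere` would be passed as `eye` to `contour_edges`, and the resulting edges would be projected and drawn to form one 2D view per viewpoint.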
