Unsupervised video segmentation method integrated with temporal-spatial multi-feature representation

A video segmentation and multi-feature technology, applied in image analysis, image data processing, instruments, etc. It addresses problems such as inaccurate motion information, blurred objects, and low edge-segmentation accuracy, achieving improved robustness and more accurate edge segmentation.

Embodiment Construction

[0024] The present invention will be further described below in conjunction with the accompanying drawings, so that those skilled in the art can implement it by referring to the text of the description.

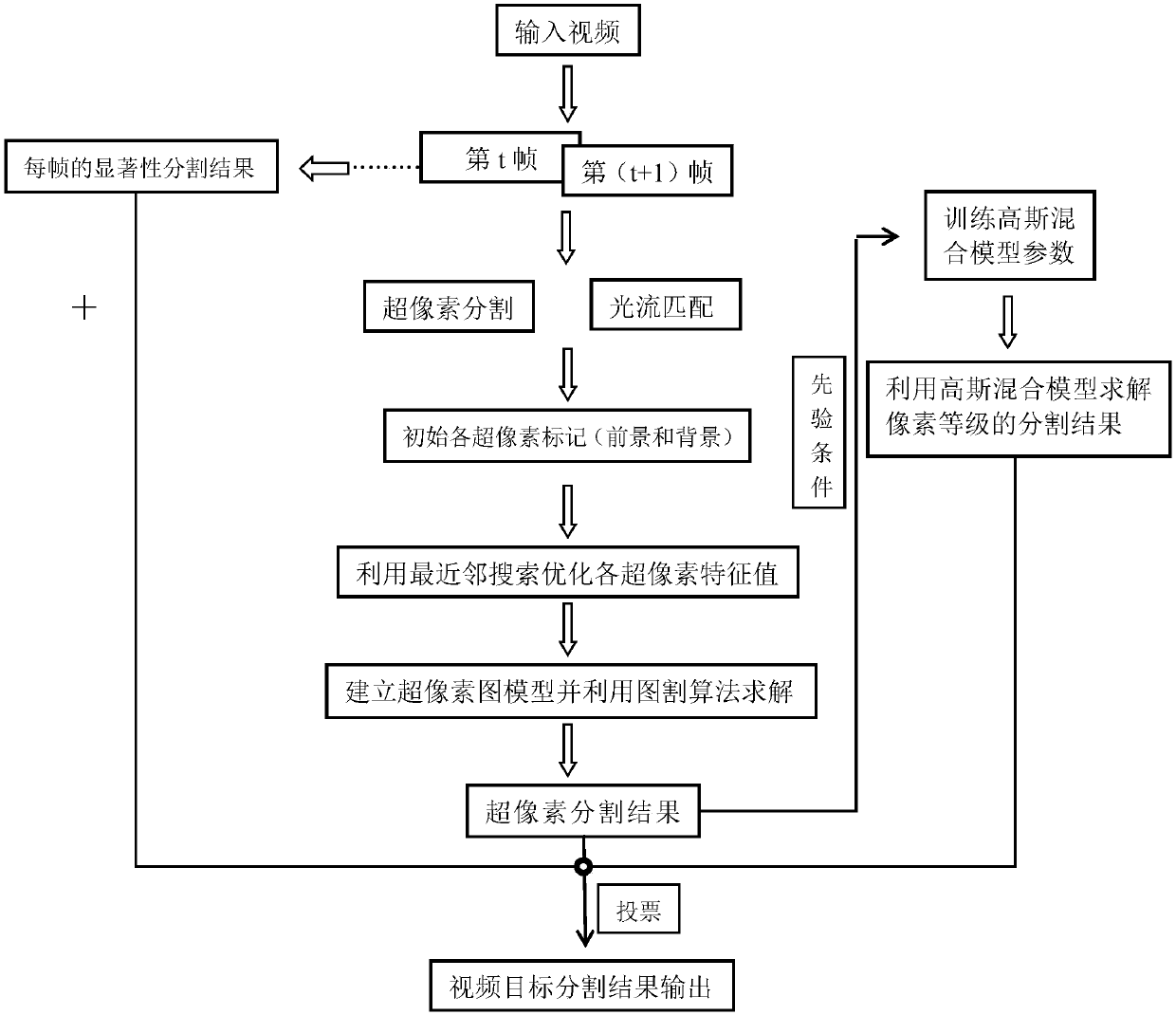

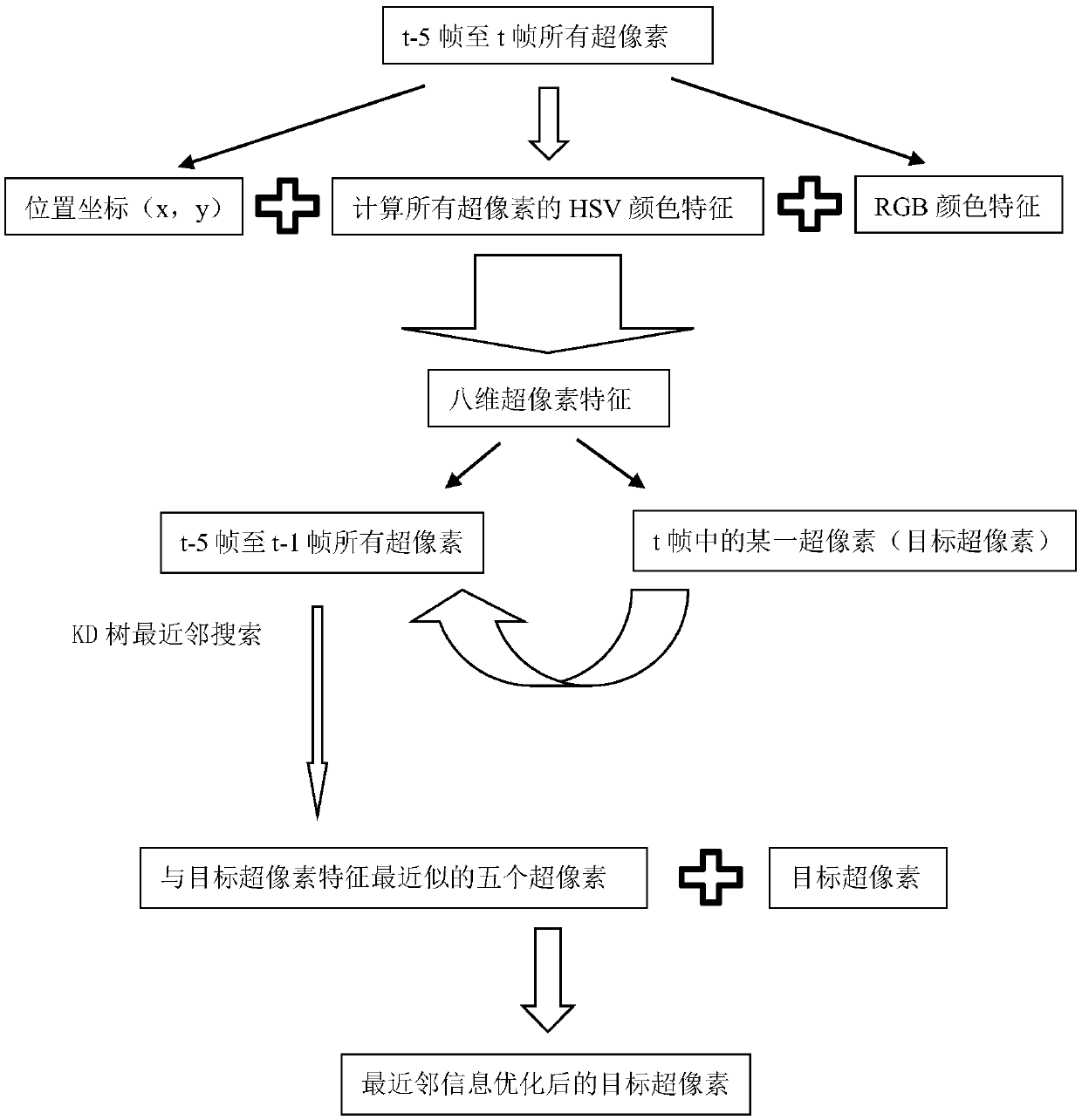

[0025] As shown in Figure 1, the present invention provides an unsupervised video segmentation method based on non-local spatio-temporal feature learning. The method includes: obtaining the video sequence to be segmented; processing the video sequence with superpixel segmentation; matching information between preceding and following frames using optical flow; obtaining the approximate range of the moving target from the optical flow information of adjacent frames in the video sequence; optimizing the matching result with non-local spatio-temporal information; establishing a graph model, then solving it and outputting the superpixel-level segmentation result; using the superpixel-level segmentation result as a prior to train a Gaussian mixture model; and using the trained Gaussian mixture model to carry out p...
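One of the steps above, obtaining the approximate range of the moving target from optical flow, can be illustrated with a minimal sketch. The patent text shown here does not specify how this range is computed, so the following is an assumption rather than the claimed method: it averages the flow magnitude inside each superpixel and flags superpixels that move noticeably more than the frame as a whole. The function name `superpixel_motion_prior` and the `ratio` threshold are hypothetical, and the superpixel labels and flow vectors are assumed to come from earlier steps.

```python
from collections import defaultdict
from math import hypot


def superpixel_motion_prior(labels, flow, ratio=1.5):
    """Flag superpixels whose mean flow magnitude exceeds ratio * frame mean.

    labels: 2-D list of superpixel ids, one per pixel.
    flow:   2-D list of (dx, dy) optical-flow vectors, same shape as labels.
    ratio:  hypothetical threshold factor, not taken from the patent.
    Returns (mean magnitude per superpixel, set of candidate moving ids).
    """
    total = defaultdict(float)  # summed flow magnitude per superpixel
    count = defaultdict(int)    # pixel count per superpixel
    for label_row, flow_row in zip(labels, flow):
        for sp, (dx, dy) in zip(label_row, flow_row):
            total[sp] += hypot(dx, dy)
            count[sp] += 1

    n_pixels = sum(count.values())
    if n_pixels == 0:
        return {}, set()

    frame_mean = sum(total.values()) / n_pixels
    mean_mag = {sp: total[sp] / count[sp] for sp in total}
    # Strict inequality: a fully static frame yields no candidates.
    moving = {sp for sp, m in mean_mag.items() if m > ratio * frame_mean}
    return mean_mag, moving


# Toy 2x4 frame: superpixel 0 is static background, superpixel 1 moves.
labels = [[0, 0, 1, 1],
          [0, 0, 1, 1]]
flow = [[(0.0, 0.0), (0.0, 0.0), (3.0, 4.0), (3.0, 4.0)],
        [(0.0, 0.0), (0.0, 0.0), (3.0, 4.0), (3.0, 4.0)]]

mean_mag, moving = superpixel_motion_prior(labels, flow)
```

In a fuller pipeline, the flagged superpixels would only serve as a coarse prior; the non-local spatio-temporal optimization, the graph model, and the Gaussian mixture model described above would then refine it.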
