Regional convolutional neural network-based method for gesture identification and interaction under egocentric vision

A gesture recognition technique based on convolutional neural networks, applied in the fields of neural learning methods, biological neural network models, and character and pattern recognition. It addresses problems such as the recognition rate and speed of the algorithm model and its sensitivity to hand direction and hand size, with the effect of improving recognition speed and accuracy, stabilizing the gesture recognition rate, and supporting recognition at long distance.

Embodiment

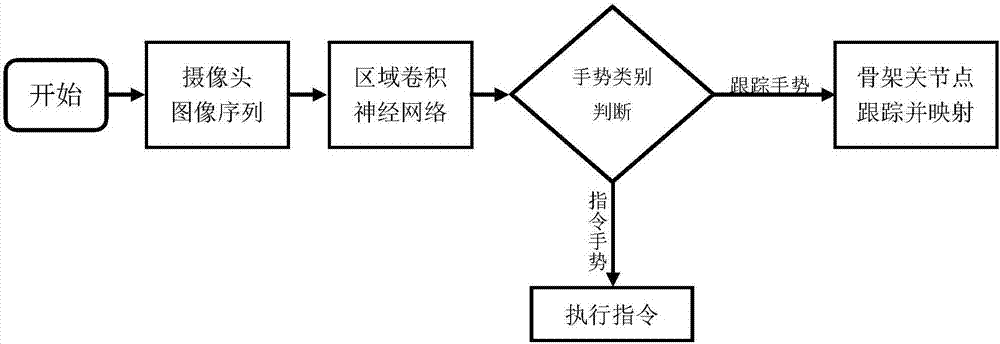

[0020] As shown in Figure 1, the first-person-view (egocentric) gesture recognition and interaction method based on a regional convolutional neural network according to the present invention includes the following steps:

[0021] S1. Acquire training data and manually annotate its labels. The labels include the coordinates of the upper-left and lower-right corners of the hand-region foreground, the coordinates of the skeleton nodes for each gesture, and the manually marked gesture category.
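The label described in step S1 can be sketched as a small record type. This is only an illustration under stated assumptions: the class name `HandLabel`, its field names, the pixel-coordinate convention, and the bounds check are hypothetical and do not come from the patent text; the 640*480 frame size is the one given later in the description.

```python
from dataclasses import dataclass
from typing import List, Tuple

# Frame size stated in the description (640*480 RGB images).
IMAGE_WIDTH, IMAGE_HEIGHT = 640, 480

Point = Tuple[int, int]  # (x, y) in pixels; this convention is an assumption


@dataclass
class HandLabel:
    """Hypothetical container for one manually annotated training image."""
    top_left: Point              # upper-left corner of the hand-region foreground
    bottom_right: Point          # lower-right corner of the hand-region foreground
    skeleton_nodes: List[Point]  # skeleton node coordinates for the gesture
    gesture: str                 # manually marked gesture category

    def validate(self) -> None:
        """Raise ValueError if the box or any node falls outside the frame."""
        x1, y1 = self.top_left
        x2, y2 = self.bottom_right
        if not (0 <= x1 < x2 <= IMAGE_WIDTH and 0 <= y1 < y2 <= IMAGE_HEIGHT):
            raise ValueError("bounding box is empty or outside the image")
        for x, y in self.skeleton_nodes:
            if not (0 <= x < IMAGE_WIDTH and 0 <= y < IMAGE_HEIGHT):
                raise ValueError(f"skeleton node ({x}, {y}) is outside the image")


# Example annotation; the coordinates and category name are made up.
label = HandLabel(
    top_left=(100, 120),
    bottom_right=(300, 360),
    skeleton_nodes=[(150, 200), (180, 240)],
    gesture="a",
)
label.validate()
```

Checking the annotation at load time is a design choice of this sketch, intended to catch swapped corners or out-of-frame clicks before they reach training.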

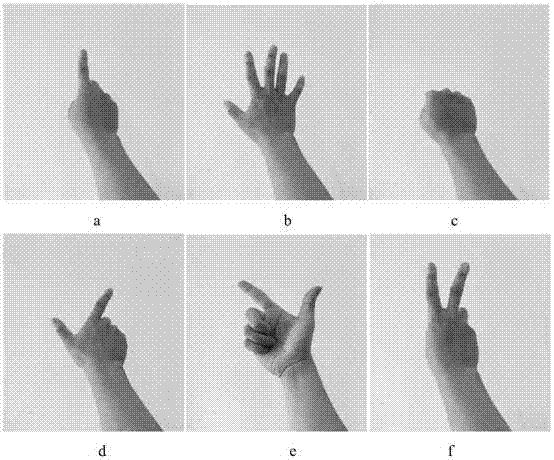

[0022] During data acquisition, the camera is placed at the position of the human eye, with its viewing direction aligned with the direct gaze direction of the eyes. Video stream information is collected continuously and converted into RGB images. The images include various gestures (as shown in Figure 2, a-f). The camera is an ordinary 2D camera, and each collected image is an ordinary RGB image of size 640*480. The training data includes a variety of different gestures, an...
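The acquisition step (continuous video stream converted to 640*480 RGB images) might look like the following minimal sketch. It assumes OpenCV (`cv2`) is installed and that the camera is reachable at device index 0; the patent text names no capture library, and the function name `rgb_frames` and its parameters are hypothetical.

```python
from typing import Any, Iterator

# Frame size stated in the description.
FRAME_WIDTH, FRAME_HEIGHT = 640, 480


def rgb_frames(device_index: int = 0, max_frames: int = 100) -> Iterator[Any]:
    """Yield up to max_frames RGB frames of size 640*480 from a 2D camera."""
    import cv2  # imported lazily so the module loads without OpenCV present

    cap = cv2.VideoCapture(device_index)
    # Request the target resolution; the driver may ignore this request.
    cap.set(cv2.CAP_PROP_FRAME_WIDTH, FRAME_WIDTH)
    cap.set(cv2.CAP_PROP_FRAME_HEIGHT, FRAME_HEIGHT)
    try:
        for _ in range(max_frames):
            ok, frame_bgr = cap.read()
            if not ok:
                break  # camera unavailable or stream ended
            # Resize only if the camera did not honor the requested size.
            if frame_bgr.shape[:2] != (FRAME_HEIGHT, FRAME_WIDTH):
                frame_bgr = cv2.resize(frame_bgr, (FRAME_WIDTH, FRAME_HEIGHT))
            # OpenCV delivers frames in BGR channel order; convert to RGB.
            yield cv2.cvtColor(frame_bgr, cv2.COLOR_BGR2RGB)
    finally:
        cap.release()
```

A caller would iterate with `for frame in rgb_frames(): ...` and pass each frame on to annotation or inference. The generator form keeps the stream continuous without holding every frame in memory.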
