Intelligent automatic following method, system and suitcase based on a visual sensor

Automatic following technology based on a visual sensor, applied to luggage, control/adjustment systems, instruments, and similar fields. It addresses the problems of positioning accuracy being strongly affected by the environment, the inability to follow automatically, and large positioning errors. The stated effects are low sensitivity to environmental interference and high recognition accuracy.

Examples

Embodiment 1

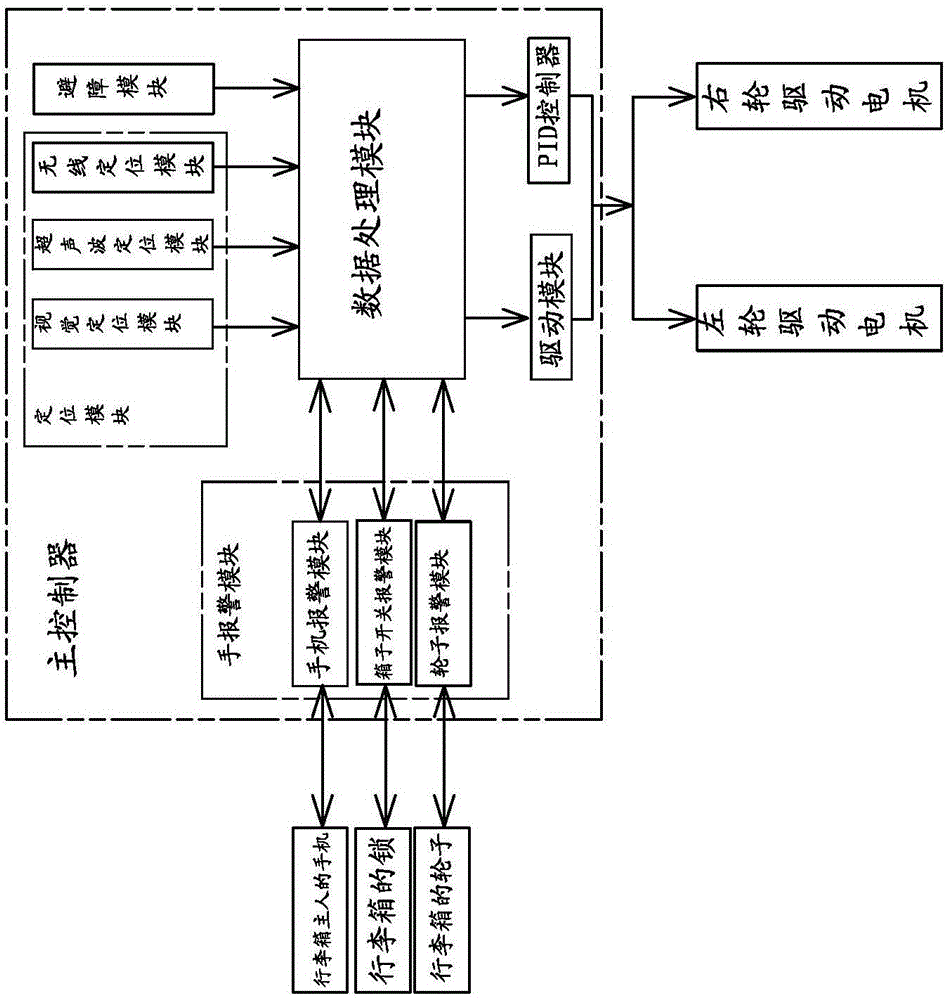

[0035] This embodiment applies the invention to luggage. The intelligent automatic following suitcase based on a visual sensor, referred to below as the intelligent automatic following suitcase, mainly includes a box body, a traveling mechanism, a drive motor, a steering mechanism, a power supply, and a following system. "Following system" here is short for the intelligent automatic following system based on a visual sensor.

[0036] The traveling mechanism includes a plurality of wheels arranged on the outer surface of the box body: a left front wheel, a right front wheel, a left rear wheel, and a right rear wheel. Each of the four wheels may be a universal wheel. The left rear wheel and the right rear wheel provide power for the suitcase. On the one hand, the left front wheel and the right front wheel reduce the load of the rear wheel d...

Embodiment 2

[0075] In this embodiment, the visual sensor may be a depth vision sensor, which serves as the "eye" of the suitcase and perceives the position of the suitcase owner relative to the suitcase.

[0076] The visual sensor may be an ASUS Xtion Pro Live, connected to the main controller through a USB interface. After the main controller obtains data from the Xtion Pro Live, it identifies the suitcase owner using a human body recognition algorithm and obtains the owner's position relative to the suitcase.
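The step from a recognized owner to a relative position can be illustrated with a pinhole camera model. The following is only a minimal sketch under stated assumptions, not the method of the patent: the function name `relative_position`, the 640-pixel image width, and the 58-degree horizontal field of view are hypothetical illustration values, and the pixel column and depth of the owner are assumed to come from whatever human body recognition algorithm is used.

```python
import math


def relative_position(u_px, depth_m, image_width=640, hfov_deg=58.0):
    """Estimate where a detected person is relative to the camera.

    u_px        -- pixel column of the person's centroid (assumed input)
    depth_m     -- depth reading at that pixel, in metres (assumed input)
    image_width -- image width in pixels (hypothetical default)
    hfov_deg    -- horizontal field of view in degrees (hypothetical default)

    Returns (lateral_m, forward_m, bearing_rad); positive lateral and
    bearing values mean the person is to the right of the optical axis.
    """
    # Focal length in pixels derived from the assumed field of view.
    fx = (image_width / 2.0) / math.tan(math.radians(hfov_deg) / 2.0)
    cx = image_width / 2.0  # assume the principal point is the image centre

    # Pinhole back-projection of the pixel column at the measured depth.
    lateral_m = (u_px - cx) * depth_m / fx
    bearing_rad = math.atan2(lateral_m, depth_m)
    return lateral_m, depth_m, bearing_rad
```

In this sketch, an owner detected at the image centre yields zero lateral offset and zero bearing, so only the forward distance would need correcting.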

[0077] After obtaining the position of the suitcase owner relative to the suitcase, the main controller sends an instruction to the drive module, which drives the left wheel motor and the right wheel motor. A PID controller keeps the relative position between the suitcase and its owner within a reasonable set range.
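One way to picture the PID-based following described in [0077] is a pair of PID loops, one on following distance and one on bearing, whose outputs are mixed into left and right wheel commands. This is a hedged sketch only: the class `PID`, the function `follow_step`, the gains, the 1.0 m target distance, and the 0.1 m deadband are all hypothetical, and the patent text does not specify how the PID output is mapped to the two motors.

```python
class PID:
    """Textbook PID controller with output clamping (illustrative only)."""

    def __init__(self, kp, ki, kd, out_limit):
        self.kp, self.ki, self.kd = kp, ki, kd
        self.out_limit = out_limit
        self.integral = 0.0
        self.prev_error = None

    def update(self, error, dt):
        self.integral += error * dt
        # No derivative term on the first sample, when there is no history.
        if self.prev_error is None:
            derivative = 0.0
        else:
            derivative = (error - self.prev_error) / dt
        self.prev_error = error
        out = self.kp * error + self.ki * self.integral + self.kd * derivative
        # Clamp the command to the allowed output range.
        return max(-self.out_limit, min(self.out_limit, out))


def follow_step(distance_m, bearing_rad, dist_pid, turn_pid, dt,
                target_m=1.0, deadband_m=0.1):
    """Return (left, right) wheel commands for one control cycle.

    target_m and deadband_m are assumed values standing in for the
    "reasonable set range" mentioned in the text.
    """
    dist_error = distance_m - target_m
    if abs(dist_error) < deadband_m:
        dist_error = 0.0  # close enough: do not chase small errors
    forward = dist_pid.update(dist_error, dt)
    turn = turn_pid.update(bearing_rad, dt)
    # Differential mixing: a positive bearing (owner to the right)
    # speeds up the left wheel and slows the right wheel.
    return forward + turn, forward - turn
```

For example, with proportional-only gains `PID(1.0, 0.0, 0.0, 1.0)` for distance and `PID(0.5, 0.0, 0.0, 0.5)` for turning, an owner 2.0 m straight ahead produces equal forward commands on both wheels, while an owner at the target distance but off to the right produces a turn in place. The sketch omits integral anti-windup, which a real drive would likely need.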

[0078] The main controller is powered by USB 5V voltage, and t...

Embodiment 3

[0081] In addition to suitcases, the present invention can also be applied to various other devices, for example:

[0082] 1. Intelligent automatic following stationery based on visual sensors: intelligent automatic following schoolbags based on visual sensors.

[0083] 2. Intelligent automatic following household items based on visual sensors: baby carriages, toys, tables, chairs, wheelchairs, shopping carts, airport luggage carts, pet robots, cleaning robots, nursing robots, service robots, bags, etc.

[0084] 3. Intelligent automatic following office supplies based on visual sensors: intelligent automatic following transportation tools based on visual sensors.

[0085] 4. Intelligent automatic following fitness products based on visual sensors.

[0086] 5. Intelligent automatic following home and office supplies based on visual sensors.

[0087] When the present invention is applicable to the abo...
