A visual attention detection method for 3D video

A visual attention and 3D video technology, applied in image data processing, instrumentation, computing, and related fields.

Embodiment Construction

[0123] The technical solutions of the present invention will be further described in detail below in conjunction with the accompanying drawings.

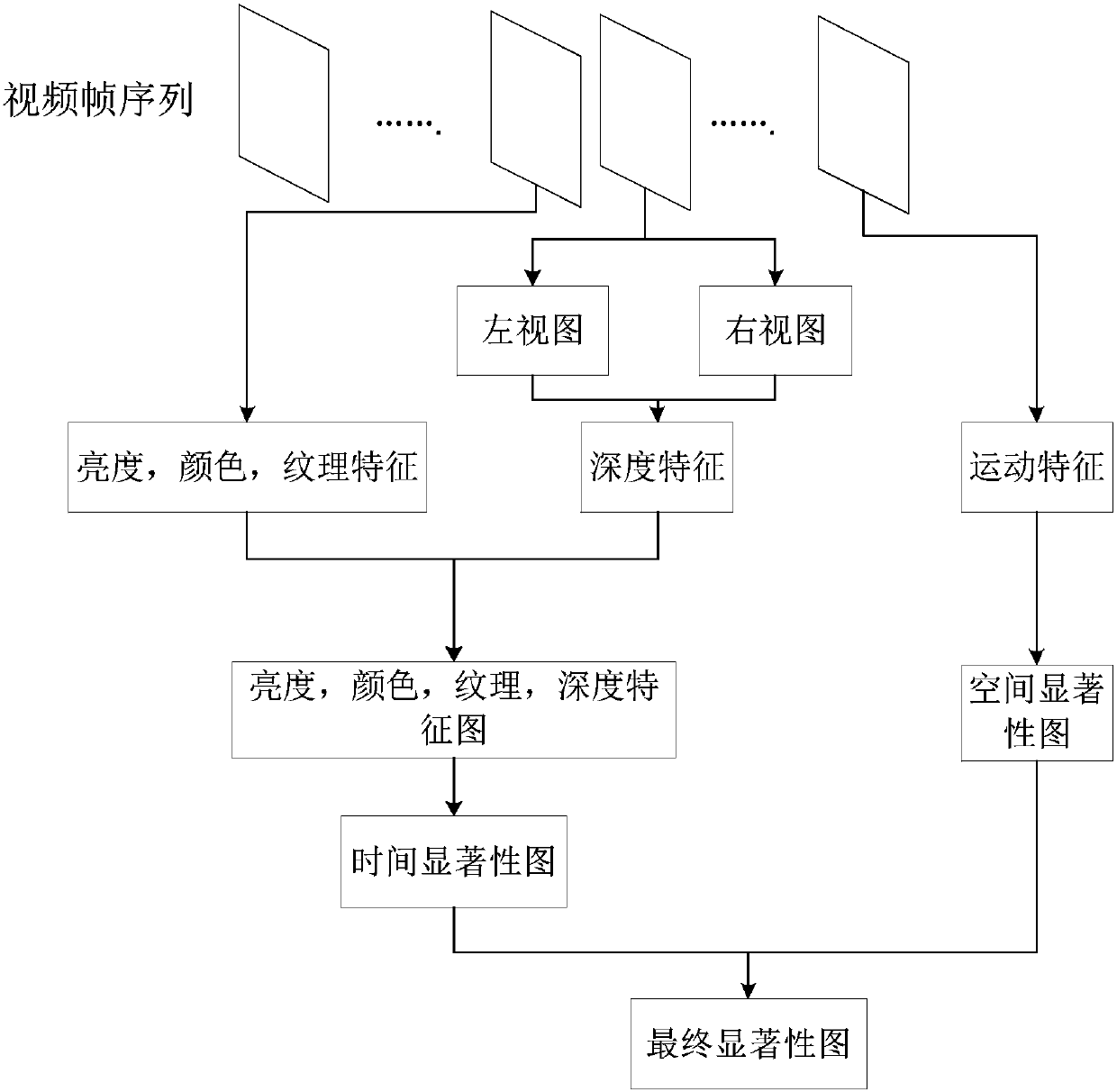

[0124] The process of the present invention is shown in Figure 1. The specific steps are as follows.

[0125] Step 1: Extract low-level visual features from the 3D video frame, calculate the feature contrast, and apply a Gaussian model of the Euclidean distance to obtain the spatial saliency map of the 3D video frame.
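The contrast computation named in Step 1 can be illustrated with a minimal sketch. It assumes one scalar feature value per image block and weights the absolute feature difference between two blocks by a Gaussian of the Euclidean distance between their grid positions. The function name `spatial_saliency`, the parameter `sigma`, and the exact weighting formula are assumptions made for illustration; the source does not give them at this point.

```python
import numpy as np

def spatial_saliency(feat, sigma=5.0):
    """Gaussian-weighted feature contrast per block (illustrative sketch).

    feat  : 2-D array holding one feature value per image block.
    sigma : spread of the Gaussian distance weighting (assumed value).
    """
    rows, cols = feat.shape
    ys, xs = np.mgrid[0:rows, 0:cols]
    # Grid position of every block, one (row, col) pair per block.
    pos = np.stack([ys.ravel(), xs.ravel()], axis=1).astype(np.float64)
    # Squared Euclidean distance between every pair of blocks.
    d2 = ((pos[:, None, :] - pos[None, :, :]) ** 2).sum(axis=-1)
    weight = np.exp(-d2 / (2.0 * sigma ** 2))
    np.fill_diagonal(weight, 0.0)  # a block does not contrast with itself
    v = feat.ravel().astype(np.float64)
    contrast = np.abs(v[:, None] - v[None, :])
    # Saliency of a block: distance-weighted sum of its contrasts.
    return (weight * contrast).sum(axis=1).reshape(rows, cols)
```

In this sketch, nearby blocks contribute more to a block's saliency than distant ones, and a uniform feature map yields zero saliency everywhere.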

[0126] First, divide the video frame into 8×8 image blocks. Let r, g, and b denote the red, green, and blue channels of the image, and define new features for each image block: a new red feature $R = r - (g+b)/2$, a new green feature $G = g - (r+b)/2$, a new blue feature $B = b - (r+g)/2$, and a new yellow feature $Y$. From these definitions, the following features of the image block can be calculated:

[0127] (1) Brightness component $I$:

[0128] $I = (r + g + b)/3$ (1)

[0129] (2) The first color component $C_b$:

[0130] $C_b = B - Y$ (2)
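Equations (1) and (2) can be sketched with NumPy as follows. The function name `block_features` is hypothetical. The yellow feature is not reproduced in the text above, so the sketch assumes the commonly used definition Y = (r+g)/2 - |r-g|/2 - b; that formula is an assumption, not taken from the source. Frames whose size is not a multiple of the block size are cropped, which is also an illustrative choice.

```python
import numpy as np

def block_features(frame, block=8):
    """Split I and C_b into block x block tiles (illustrative sketch).

    frame : H x W x 3 array with channels ordered (r, g, b).
    Returns (I, Cb), each shaped (H // block, W // block, block, block).
    """
    h, w = frame.shape[:2]
    h, w = h - h % block, w - w % block  # crop to whole blocks (assumption)
    f = frame[:h, :w].astype(np.float64)
    r, g, b = f[..., 0], f[..., 1], f[..., 2]

    B = b - (r + g) / 2                       # new blue feature
    # ASSUMPTION: yellow feature formula is not given in the source text.
    Y = (r + g) / 2 - np.abs(r - g) / 2 - b
    I = (r + g + b) / 3                       # Eq. (1)
    Cb = B - Y                                # Eq. (2)

    def tiles(x):
        # (H, W) -> (H // block, W // block, block, block)
        return x.reshape(h // block, block, w // block, block).swapaxes(1, 2)

    return tiles(I), tiles(Cb)
```

For example, `block_features(np.zeros((16, 16, 3)))` returns two arrays of shape (2, 2, 8, 8), one tile per 8×8 image block.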

...
