Neural network forward operation hardware structure based on power weight quantization

A neural network hardware structure technology, applied in computing, digital data processing components, instruments, etc. It addresses three problems: neural network operations are becoming increasingly complex, existing approaches show no advantage in computing overhead, and computing overhead is high. Its effects are reduced computing resource overhead, reduced computing overhead, and reduced storage capacity requirements.
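
The text available here stops before the circuit details, so the following is only a generic sketch of the usual idea behind power weight quantization, not the patented structure. Each weight is rounded to sign × 2^k, so a hardware multiplier can be replaced by a shifter, and only a sign bit and a small exponent need to be stored per weight. The exponent range (`min_exp`, `max_exp`), the choice to round in the log domain, and the function names `quantize_pow2` and `shift_mac` are hypothetical choices made for illustration.

```python
import math


def quantize_pow2(w, min_exp=-7, max_exp=0):
    """Quantize a real weight to (sign, exp) so that w ~= sign * 2**exp.

    Assumption: rounding is done on log2(|w|) and the exponent is clamped
    to [min_exp, max_exp]. A zero weight is encoded as sign 0.
    """
    if w == 0:
        return 0, 0
    sign = 1 if w > 0 else -1
    exp = round(math.log2(abs(w)))
    exp = max(min_exp, min(max_exp, exp))
    return sign, exp


def shift_mac(activations, weights, min_exp=-7, max_exp=0):
    """Dot product of integer activations with power-of-two weights,
    using only shifts and additions (no multiplier).

    Each term x * 2**exp is computed as x << (exp - min_exp), which is exact
    for integers, so the accumulator holds the result scaled by 2**(-min_exp).
    """
    acc = 0
    for x, w in zip(activations, weights):
        sign, exp = quantize_pow2(w, min_exp, max_exp)
        if sign == 0:
            continue  # zero weight contributes nothing
        term = x << (exp - min_exp)  # shift replaces the multiplication
        acc += term if sign > 0 else -term
    return acc


if __name__ == "__main__":
    acc = shift_mac([3, -2, 5, 1], [0.5, -0.25, 0.12, 1.0])
    print(acc, acc / 2**7)  # prints "464 3.625"
```

Here 0.12 is stored as 2^-3 = 0.125, and the fixed-point accumulator value 464 corresponds to 464 / 2^7 = 3.625. The design choice of accumulating in a scaled integer keeps right shifts (negative exponents) from discarding low-order bits.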



Embodiment Construction

[0024] Embodiments of the present invention are described in detail below, and examples of them are shown in the drawings, where the same or similar reference numerals designate the same or similar elements, or elements having the same or similar functions, throughout. The embodiments described below with reference to the drawings are exemplary, are intended only to explain the present invention, and should not be construed as limiting it.

[0025] The neural network forward operation hardware structure based on power weight quantization according to embodiments of the present invention is described below with reference to the accompanying drawings.

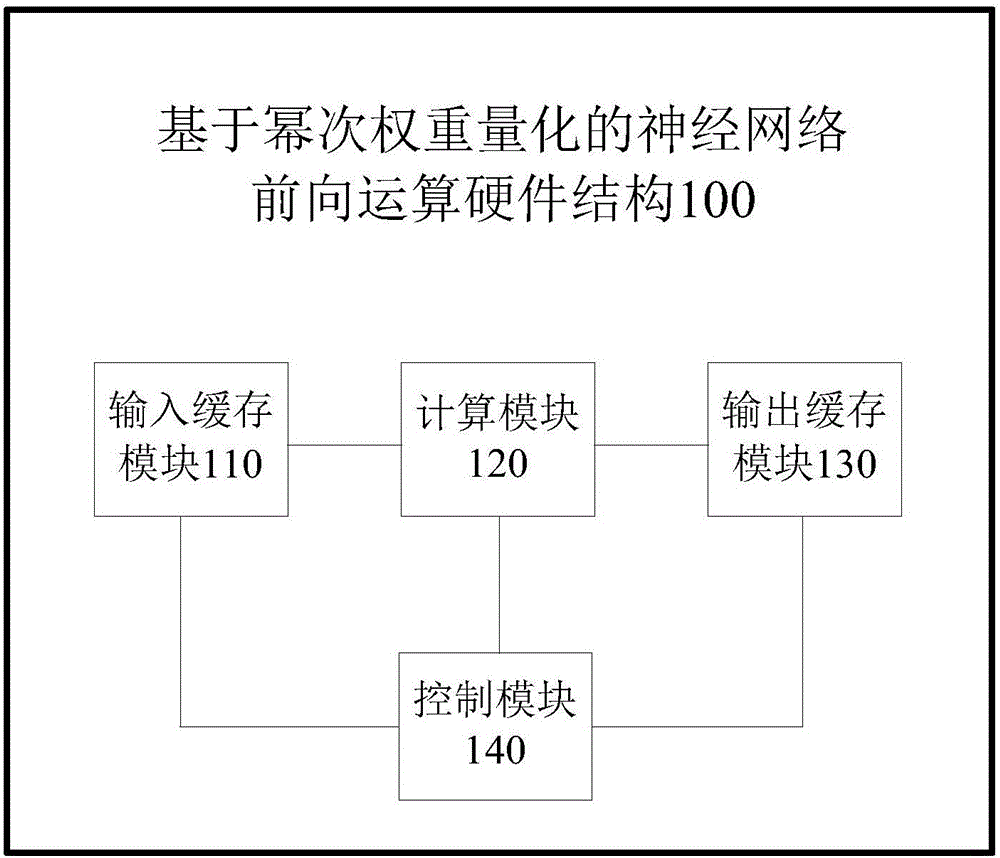

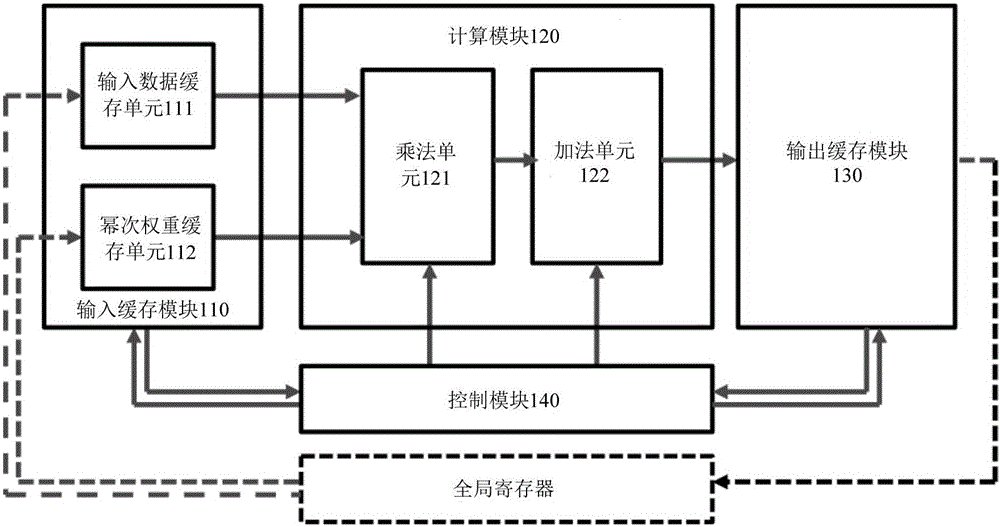

[0026] Figure 1 is a structural block diagram of a neural network forward operation hardware structure based on power weight quantization according to an embodiment of the present invention. Figure 2 is a circuit structure diagram of a neural network forward operation hardware structure based on power wei...
